here are plenty of implementations https://www.blake2.net/#su

I mean sure, if i want to run it on my toothbrush why not use something that is accessible everywhere like md5? crc32? It was chosen a long while back and the only benefit in changing now is “i can’t find an implementation for x” when the downside is it breaks all existing threads. so…

These collisions aren’t important unless someone tries to fork. So.. for the vast majority it’s not a big deal. Using the grow hash algorithm could inform the client to add another char when they fork.

People stranded on the roof of a hospital in Tennessee after hurricane Helene

Wild flooding in Asheville, NC due to Hurricane Helene

@bender@twtxt.net I am also in camp no edit signals. a delete only breaks the head of a thread. all the replies are unaffected.

@bender@twtxt.net I believe it is Unix-to-Unix Copy Protocol. Not Unix Copy-Copy Protocol.

83(4) GDPR sets forth fines of up to 10 million euros, or, in the case of an undertaking, up to 2% of its entire global turnover of the preceding fiscal year, whichever is higher.

Though I suppose it has to be the greater of the two. But I don’t even have one euro to start with.

I’d like to see them fine me 2% of zero dollars

@david@collantes.us having offsets was nice because it gives you context of where the user is in relation to you.

@prologic@twtxt.net thanks. I hate it. Might as well use UUID

I demand full 9 digit nanosecond timestamps and the full TZ identifier as documented in the tz 2024b database! I need to know if there was a change in daylight saving time as per the locality in question as of the provided date.

@falsifian@www.falsifian.org I believe the preserve means to include the original subject hash in the start of the twt such as (#somehash)

@falsifian@www.falsifian.org The GDPR does not apply to the processing of data for a purely personal or household activity that is not connected to a professional or commercial activity.

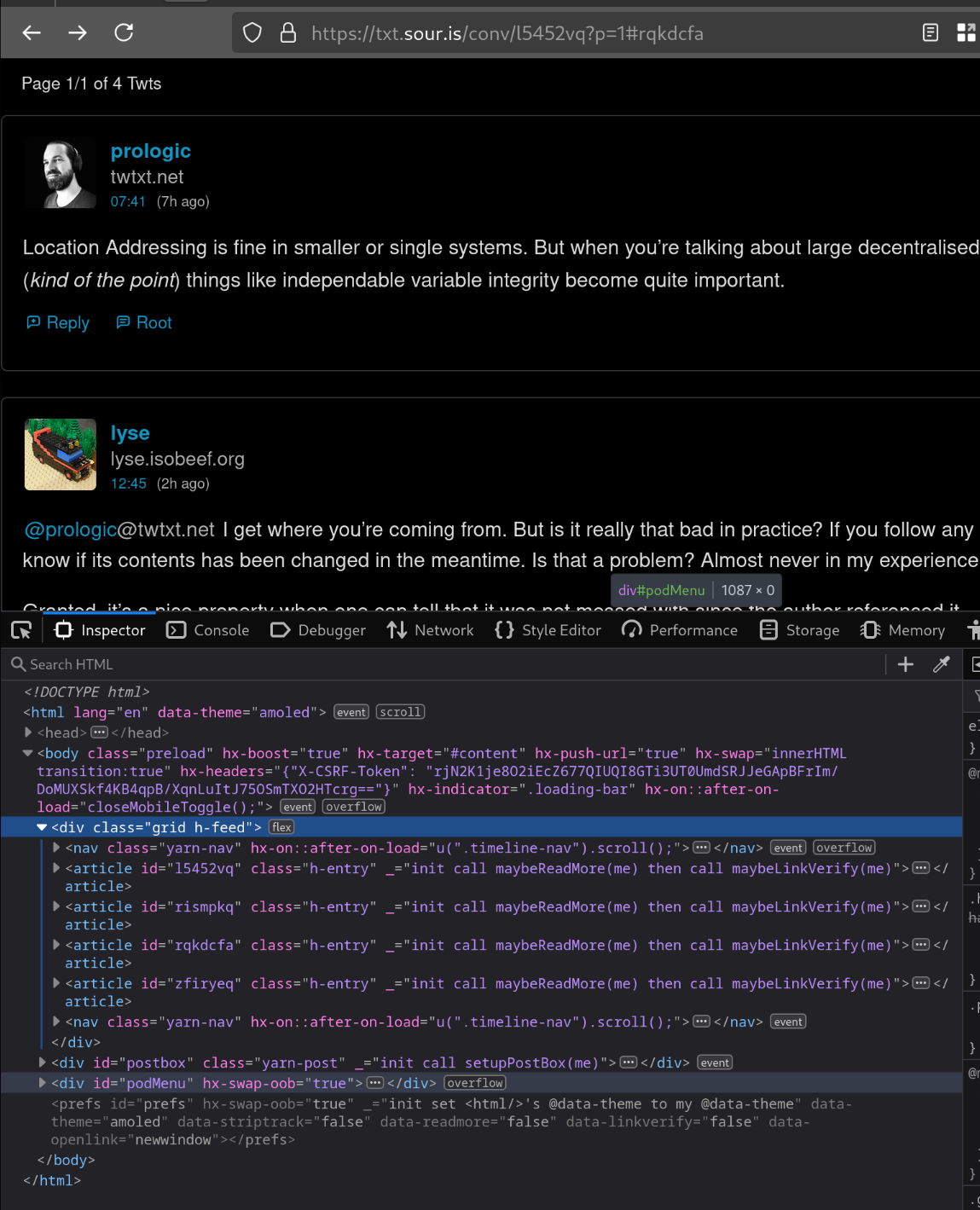

i kinda click a yarn then a fork and the back button. i have to do a few goes before it does it.

it’s replacing the contents of body for some reason.

@prologic@twtxt.net Hi. i have noticed sometimes when i hit the back button i lose all the surrounding layout and just have a list of twts.

Oh. looks like its 4 chars. git show 64bf

@prologic@twtxt.net where was that idea?

i feel like we should isolate a subset of markdown that makes sense and build it into lextwt. it already has support for links and images. maybe basic formatting: bold, italic. possibly block quotes and bullet lists. no tables or footnotes

the stem matching is the same as how Git abbreviates its commit hashes. i think you can stem it down to 2 or 3 sha bytes.

if a client sees someone in a yarn using a hash that is a byte longer, it can lengthen its own to match, since it can assume that maybe the other client has a collision that it doesn’t know about.
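A rough sketch of that lengthening rule, together with the "grow after some threshold of collisions" idea from the thread. The function names, the starting length of 6 and the threshold of 1 are all assumptions for illustration, not anything a client does today.

```python
from collections import Counter


def count_collisions(hashes, length):
    """Count stems of the given length that are shared by more than one hash."""
    stems = Counter(h[:length] for h in set(hashes))
    return sum(1 for n in stems.values() if n > 1)


def grow_stem_length(hashes, current, threshold=1):
    """Lengthen the stem one char at a time while collisions reach the threshold.

    threshold is a hypothetical tuning knob; the thread never settled on a value.
    """
    if not hashes:
        return current
    limit = min(len(h) for h in hashes)  # cannot grow past the shortest full hash
    length = current
    while length < limit and count_collisions(hashes, length) >= threshold:
        length += 1
    return length


def match_peer_length(current, peer_ref):
    """If a peer uses a longer stem, assume it knows of a collision and follow it."""
    return max(current, len(peer_ref))


known = ["abcdef01", "abcdef99", "12345678"]
local_len = grow_stem_length(known, 6)              # grows because of abcdef..
local_len = match_peer_length(local_len, "abcdef012")  # peer uses 9 chars
```

The client never shortens a stem here, so two clients that disagree drift toward the longer length.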

@prologic@twtxt.net the basic idea was to stem the hash.. so you have a hash abcdef0123456789... any prefix of that hash that is at least 6 characters long will match. so abcdef, abcdef012, abcdef0123456 all match the same twt. in the case of a collision i think we decided on matching the newest since we archive off older threads anyway. the third rule was about growing the minimum hash size after some threshold of collisions was detected.
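The first two rules could be sketched like this. It is a minimal illustration: `MIN_LEN`, `stem_matches`, `resolve` and the sample data are hypothetical names and values, and the timestamps are plain integers.

```python
MIN_LEN = 6  # assumed minimum stem length, taken from "the first 6" above


def stem_matches(full_hash: str, ref: str, min_len: int = MIN_LEN) -> bool:
    """True if ref is a prefix of full_hash and at least min_len chars long."""
    return len(ref) >= min_len and full_hash.startswith(ref)


def resolve(ref: str, twts: list[tuple[str, int]], min_len: int = MIN_LEN):
    """Return the full hash of the newest twt whose hash matches ref, or None.

    twts is a list of (full_hash, unix_timestamp) pairs.
    """
    matches = [t for t in twts if stem_matches(t[0], ref, min_len)]
    if not matches:
        return None
    # Collision rule from the thread: the newest twt wins.
    return max(matches, key=lambda t: t[1])[0]


twts = [
    ("abcdef0123456789", 100),
    ("abcdef9999999999", 200),  # collides with the first one on a 6-char stem
    ("0123456789abcdef", 300),
]

print(resolve("abcdef", twts))     # ambiguous stem, newest match wins
print(resolve("abcdef012", twts))  # longer stem picks out the older twt
```

A longer reference always narrows the match, which is why a client that knows about a collision can fix it just by quoting more of the hash.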

There is nothing wrong with how we currently run a diff to see what has been removed. if i build a merkle tree off all the twt hashes in a feed i can use that to verify a twt should be in a feed or not. and gossip that to my peers.
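As a sketch of the merkle idea, here is one way to fold all the twt hashes of a feed into a single root that peers could gossip and compare. SHA-256, sorting the hashes first, and duplicating the last node on odd levels are my assumptions; membership proofs are not shown.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(twt_hashes: list[str]) -> str:
    """Hex Merkle root over a feed's twt hashes.

    Hashes are de-duplicated and sorted so the root does not depend on the
    order twts appear in the feed (an assumption, not an agreed convention).
    """
    if not twt_hashes:
        return _h(b"").hex()
    level = [_h(h.encode()) for h in sorted(set(twt_hashes))]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # pad odd levels by repeating the last node
        level = [_h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0].hex()


mine = merkle_root(["5vbi2ea", "abcdefg", "zyxwvut"])
peer = merkle_root(["zyxwvut", "5vbi2ea", "abcdefg"])  # same twts, other order
after_delete = merkle_root(["5vbi2ea", "abcdefg"])     # one twt removed
```

If two peers hold the same set of twts for a feed the roots match; any removed or added twt changes the root, which tells a peer that it needs to run the usual diff.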

So.. basically a rehash of the email “unsend” requests? What if i was to make a (delete: 5vbi2ea) .. would it delete someone else’s twt?

isn’t the benefit of blake2b that it is a more efficient algo than sha1 and has the same or similar entropy to sha3? i thought we had partially solved this with some type of expanding hash size? additionally we could increase bit density by using base36 or base64/url-safe…
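To put numbers on the bit density point, here is a small sketch using blake2b from Python's hashlib. The 7 character length is only taken from the example hash seen in this thread, and the helper names are made up.

```python
import base64
import hashlib
import math


def digest(data: bytes) -> bytes:
    """256-bit BLAKE2b digest of the input."""
    return hashlib.blake2b(data, digest_size=32).digest()


def short_b32(d: bytes, chars: int) -> str:
    """First `chars` characters of the lowercase, unpadded base32 encoding."""
    return base64.b32encode(d).decode().lower().rstrip("=")[:chars]


def short_b64url(d: bytes, chars: int) -> str:
    """First `chars` characters of the unpadded url-safe base64 encoding."""
    return base64.urlsafe_b64encode(d).decode().rstrip("=")[:chars]


def to_base36(d: bytes) -> str:
    """Base36 encoding of the digest read as one big-endian integer."""
    digits = "0123456789abcdefghijklmnopqrstuvwxyz"
    n = int.from_bytes(d, "big")
    out = ""
    while n:
        n, r = divmod(n, 36)
        out = digits[r] + out
    return out or "0"


def bits(chars: int, alphabet_size: int) -> float:
    """Bits of hash carried by `chars` characters of the given alphabet."""
    return chars * math.log2(alphabet_size)


d = digest(b"example twt")
for name, size in (("base32", 32), ("base36", 36), ("base64url", 64)):
    print(f"{name}: 7 chars carry about {bits(7, size):.1f} bits")
```

Seven characters carry 35 bits in base32 and 42 bits in base64, so the denser alphabet buys about one extra character's worth of collision resistance at the same length. The catch is that base64 is case sensitive, and the url-safe variant includes `-` and `_`.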

you can just have a web address.. i added mine.. though i think they have changed up the protocol so my key doesn’t seem to work anymore. https://key.sour.is/id/me@sour.is

@prologic@twtxt.net a signature IS encryption in reverse. If my private key becomes compromised then they can impersonate me. Being able to manage promotion and revocation of keys is needed even in a system where it’s used for just signatures.

@sorenpeter@darch.dk There was a client that would generate a unique hash for each twt. It didn’t get wide adoption.

@prologic@twtxt.net identity and content integrity are two different problems.

Key rotation is a very important feature in a system like this.

the right way to solve this is to use public/private key(s) where you actually have a public key fingerprint as your feed’s unique identity that never changes.

i would rather it be a random value signed by a key. That way the key can change but the value stays the same.

Interesting.. QUIC isn’t very quick over fast internet.

QUIC is expected to be a game-changer in improving web application performance. In this paper, we conduct a systematic examination of QUIC’s performance over high-speed networks. We find that over fast Internet, the UDP+QUIC+HTTP/3 stack suffers a data rate reduction of up to 45.2% compared to the TCP+TLS+HTTP/2 counterpart. Moreover, the performance gap between QUIC and HTTP/2 grows as the underlying bandwidth increases. We observe this issue on lightweight data transfer clients and major web browsers (Chrome, Edge, Firefox, Opera), on different hosts (desktop, mobile), and over diverse networks (wired broadband, cellular). It affects not only file transfers, but also various applications such as video streaming (up to 9.8% video bitrate reduction) and web browsing. Through rigorous packet trace analysis and kernel- and user-space profiling, we identify the root cause to be high receiver-side processing overhead, in particular, excessive data packets and QUIC’s user-space ACKs. We make concrete recommendations for mitigating the observed performance issues.

So this is a great thread. I have been thinking about this too.. and what if we are coming at it from the wrong direction? Identity being tied to a given URL has always been a pain point. If i get a new URL its almost as if i have a new identity because not only am I serving at a new location but all my previous communications are broken because the hashes are all wrong.

What if instead we used this idea of signatures to thread the URLs together into one identity? We keep the URL to Hash in place. Changing that now is basically a no go. But we can create a signature chain that can link identities together. So if i move to a new URL i update the chain hosted by my primary identity to include the new URL. If i have an archived feed whose old URL is now dead, we can point to where it is now hosted and use the current convention of hashing based on the first url.

The signature chain can also be used to rotate to new keys over time. Just sign in a new key or revoke an old one. The prior signatures remain valid within the scope of time the signatures were made and the keys were active.

The signature file can be hosted anywhere as long as it can be fetched by a reasonable protocol. So say we could use a webfinger that directs to the signature file? you have an identity like frank@beans.co that will discover a feed at some URL and a signature chain at another URL. Maybe even include the most recent signing key?

From there the client can auto discover old feeds to link them together into one complete timeline. And the signatures can validate that its all correct.

I like the idea of maybe putting the chain in the feed preamble and keeping the single self contained file.. but wonder if that would cause lots of clutter? The signature chain would be something like a log with what is changing (new key, revoke, add url) and a signature of the change + the previous signature.

# chain: ADDKEY kex14zwrx68cfkg28kjdstvcw4pslazwtgyeueqlg6z7y3f85h29crjsgfmu0w

# sig: BEGIN SALTPACK SIGNED MESSAGE. ...

# chain: ADDURL https://txt.sour.is/user/xuu

# sig: BEGIN SALTPACK SIGNED MESSAGE. ...

# chain: REVKEY kex14zwrx68cfkg28kjdstvcw4pslazwtgyeueqlg6z7y3f85h29crjsgfmu0w

# sig: ...
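A toy version of that log, where each entry signs the change plus the previous signature. To keep it to the standard library, HMAC-SHA256 stands in for a real public-key signature, so this only shows the chaining. The single fixed key, the field names and the `ADDKEY example-key` entry are hypothetical, and key rotation is not modeled.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> str:
    # Stand-in only: HMAC-SHA256 is a shared-secret MAC, NOT a public-key
    # signature. A real chain would sign with the feed owner's private key.
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def append(chain: list, key: bytes, action: str) -> None:
    """Add an entry whose signature covers the action and the previous sig."""
    prev = chain[-1]["sig"] if chain else ""
    chain.append({"chain": action, "sig": sign(key, f"{action}\n{prev}".encode())})


def verify(chain: list, key: bytes) -> bool:
    """Walk the log in order and check every link against the one before it."""
    prev = ""
    for entry in chain:
        expected = sign(key, f"{entry['chain']}\n{prev}".encode())
        if not hmac.compare_digest(expected, entry["sig"]):
            return False
        prev = entry["sig"]
    return True


key = b"demo-secret"  # placeholder key for the sketch
log = []
append(log, key, "ADDKEY example-key")
append(log, key, "ADDURL https://txt.sour.is/user/xuu")
print(verify(log, key))
```

Because each signature covers the one before it, editing or dropping an earlier entry invalidates everything after it, so a client only has to trust the head of the log.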

anything with McKinsey on it just means finding reasons to fire staff.

it’s sad all the links off that page are broken.

UGT timezone. Morning is when you arrive. Night is when you leave.

@bender@twtxt.net and I saw some conspiracy theory that he knew he was going to be arrested. He was working with French intelligence on a plea deal to defect. And now Russia is freaking out that Ukraine allies can have war comms access.

Yikes! If only they had salty.im!

I am just finding out its founded by a Russian national?

oh dang. i think thats the go path not the github path.. missing the branch name. here is the pkg one: https://pkg.go.dev/github.com/quic-go/quic-go/http3

i think maybe they got her to add a forward number for sms and used that to activate on another device..

It’s supposed to be tied to your phone number.. but they managed to get it activated on a different device somehow. /shrug

@prologic@twtxt.net I think it was some mix of phish and social engineering. She didn’t have the multifactor enabled. But i think she had clicked a message that had a fake login. She talked to someone on a phone and they made her do some things.

I never got the whole story of how it happened.

@movq@www.uninformativ.de please no.

My wife’s mom nearly got her account fully taken over by some hacker. They were able to get control and change the password but I was able to get it recovered before they could get the phone number reset. They sent messages to all her contacts asking them to send cash.

for http3 there is

from my understanding.. i don’t know how the multiplexing works when its being proxied through another server. I know go has support for it if you call it out directly. https://pkg.go.dev/golang.org/x/net/http2

HTTP/2 differs from 1.x by becoming a binary protocol. It also multiplexes multiple streams over the same connection and has the ability to push related content to the browser ahead of time to lower the perceived latency.

HTTP/3 moves the binary protocol from HTTP/2 over to QUIC, which is based on UDP instead of TCP. This makes it better suited to mobile or unstable networks, since transmission errors can be handled at a higher level.

Its like old school TV but with youtube videos. Each channel has a subject and the channels play in a sort of realtime. so no going forward or back. Perfect for channel surfing.