Pinellas County - Easy: 3.13 miles, 00:08:27 average pace, 00:26:24 duration

felt crazy relaxed and breathing was easy. i did notice that at around the mile-and-a-half point (11:30 in?) my HR jumped. around this time i also noticed i had to switch to more mouth breathing because my nose was running. going to try to take note of this, because if it is a fault on the watch side then i cannot consider it a reliable measurement.

#running

Research Account

⌘ Read more

I have a habit of running system updates on my work laptop before going out for coffee. Like some kind of ritual to prepare to go into the world fully patched.

St Petersburg Distance Classic Marathon: 26.41 miles, 00:11:23 average pace, 05:00:39 duration

first marathon down. everything that could go wrong did. honestly i am just proud i did not quit. now i have to look at the run and figure out what i can tweak or add to my training. had a cramp start in my right quad at around mile 15. then around mile 18 both of my calves started to feel odd, as if someone was lightly strumming my tendons. then they seized! this continued for the remainder of the marathon: i would walk, then try to run, and then stop when i had to. then, during the entirety of the race, my nose would not stop dripping, making it difficult to breathe. ha! also my shorts almost came down twice and i had to re-tie them while carrying my handheld water in my teeth. seriously, so many things i did not expect, and that had not happened in any previous runs.

really happy to be able to eat spicy food and have some alcoholic beverages again though!

#running #race

@prologic@twtxt.net ahhh! It’s the Dark Reader plugin breaking the page.

Twtxt spec enhancement proposal thread 🧵

Adding attributes to individual twts similar to adding feed attributes in the heading comments.

https://git.mills.io/yarnsocial/go-lextwt/pulls/17

The basic use case would be for multilingual feeds where there is a default language and some twts will be written in a different language.

As seen in the wild: https://eapl.mx/twtxt.txt

The attributes are formatted as [key=value].

They can show up anywhere in the twt, as long as they are not enclosed by another element such as a code block or a Markdown link.
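A minimal Go sketch of how a client might pull [key=value] attributes out of a twt while skipping code spans and Markdown link text. The exact rules here (no whitespace in keys or values, a bracket followed by `(` counts as a link) are assumptions for illustration only; the real grammar is whatever the go-lextwt pull request defines, and `Attr`/`ParseAttrs` are hypothetical names.

```go
package main

import (
	"fmt"
	"strings"
)

// Attr is one [key=value] attribute (hypothetical type).
type Attr struct {
	Key, Value string
}

// ParseAttrs is an illustrative scanner, not the go-lextwt grammar.
// Assumed rules: keys are non-empty, neither side contains whitespace or
// '[', text inside backtick spans/fences is ignored, and [text](url) is a
// Markdown link rather than an attribute.
func ParseAttrs(twt string) []Attr {
	var attrs []Attr
	inCode := false
	for i := 0; i < len(twt); i++ {
		if twt[i] == '`' {
			// Treat a run of backticks (` or ```) as one delimiter.
			for i+1 < len(twt) && twt[i+1] == '`' {
				i++
			}
			inCode = !inCode
			continue
		}
		if inCode || twt[i] != '[' {
			continue
		}
		end := strings.IndexByte(twt[i:], ']')
		if end < 0 {
			break // no closing bracket left in the twt
		}
		end += i
		if end+1 < len(twt) && twt[end+1] == '(' {
			i = end // Markdown link text: skip it
			continue
		}
		key, value, ok := strings.Cut(twt[i+1:end], "=")
		if ok && key != "" && !strings.ContainsAny(key+value, " \t\n[") {
			attrs = append(attrs, Attr{Key: key, Value: value})
			i = end
		}
	}
	return attrs
}

func main() {
	twt := "[lang=en] hey! `[lang=xx]` see [docs](https://example.com) [mood=happy]"
	fmt.Println(ParseAttrs(twt))
}
```

The attribute inside the backticks and the link text are both ignored, so only `lang` and `mood` come back.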

> ?

@sorenpeter@darch.dk this makes sense as a quote twt that references a direct URL. If we go back to how it developed on Twitter, it was originally “RT @nick: original text”; because it contained the original text, the Twitter algorithm would boost that text into trending.

i like the format (#hash) @<nick url> > "Quoted text"\nThen a comment

as it preserves human readability and has the hash for linking to the yarn. The comment part could be optional, for just boosting the twt.

The only issue i see is that the yarn could then become a mess of repeated quotes, unless the client knows to interpret them as multiple users having reposted/boosted the thread.

This is also how the iPhone does reactions to SMS messages, in the form “+number liked: original SMS”.
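A rough Go sketch of the proposed quote format, plus a parser a client could use to recognise quotes of the same hash and show them as boosts instead of a pile of repeated replies. The regular expression, the `Quote` type and the assumption that hashes are lowercase alphanumerics are all illustrative, not part of any spec.

```go
package main

import (
	"fmt"
	"regexp"
)

// Quote holds the parts of the proposed quote-twt format:
//
//	(#hash) @<nick url> > "Quoted text"\nThen a comment
type Quote struct {
	Hash, Nick, URL, Text, Comment string
}

// quoteRe is a hypothetical pattern for that format; the comment line is optional.
var quoteRe = regexp.MustCompile(`(?s)^\(#([a-z0-9]+)\) @<(\S+) (\S+)> > "(.*?)"(?:\n(.*))?$`)

// Format renders q; an empty Comment gives a pure boost.
func (q Quote) Format() string {
	s := fmt.Sprintf(`(#%s) @<%s %s> > "%s"`, q.Hash, q.Nick, q.URL, q.Text)
	if q.Comment != "" {
		s += "\n" + q.Comment
	}
	return s
}

// ParseQuote reports whether twt follows the format, so a client could fold
// repeated quotes of the same hash into "boosted by" instead of new replies.
func ParseQuote(twt string) (Quote, bool) {
	m := quoteRe.FindStringSubmatch(twt)
	if m == nil {
		return Quote{}, false
	}
	return Quote{Hash: m[1], Nick: m[2], URL: m[3], Text: m[4], Comment: m[5]}, true
}

func main() {
	q := Quote{Hash: "abcdefg", Nick: "alice", URL: "https://example.com/twtxt.txt", Text: "Hello world"}
	fmt.Println(q.Format())
	q.Comment = "Nice one!"
	parsed, ok := ParseQuote(q.Format())
	fmt.Println(ok, parsed.Comment)
}
```

Grouping by `Hash` is what would let a client collapse several boosts of one twt into a single entry.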

Got a Dr checkup and the screw in my hand hasn’t moved. That’s the good news. Bad news is that there seems to be something going on with the internal healing. Nothing to do but wait.

Late fees for public libraries are not fines, they’re extra donations to keep the organisation going.

Pinellas County - Long Run (part I): 11.63 miles, 00:09:54 average pace, 01:55:08 duration

did not really want to run today, but i did it anyway and it felt fine until my dumb ass decided to go over one of the clearwater bridges. those hills took everything out of me and i had a hard time getting the legs moving again.

#running

Pinellas County - Long Run: 17.80 miles, 00:08:57 average pace, 02:39:13 duration

practiced a marathon pacing strategy (simulated) of 5km/10mi/10mi/5km. went pretty well even though i was going faster than the paces at each step. but overall i felt good. also scouted out one of the two overpasses i will have to climb during the race. definitely nothing compared to the regular ones. freezing! started at 33F and ended at 45F. pretty lonely out there because the cold kept everyone inside.

#running

Pinellas County - 90’ (part I): 4.53 miles, 00:08:41 average pace, 00:39:21 duration

whoa, this run felt great. it seemed like a very fun effort, with the heart rate relatively low at a nice pace. it was very cold out, 42F with a wind chill of 38F, but it didn’t matter once the engine was going. unfortunately, halfway through the run, code brown sirens were blaring and i had to cut it short.

#running

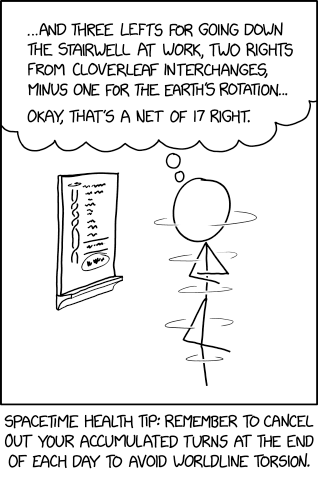

Net Rotations

⌘ Read more

So, I finally got day 17 to under a second on my machine (in the test runner it takes 10 seconds).

I implemented a Fibonacci Heap to replace the priority queue to great success.

https://git.sour.is/xuu/advent-of-code/src/branch/main/search.go#L168-L268
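For readers unfamiliar with the structure, here is a minimal Go sketch of the core of a Fibonacci heap. It is not the implementation linked above: it keeps the root list in a slice and covers only Insert and ExtractMin, so there are no marked nodes or cascading cuts. That subset is enough for a Dijkstra/A* queue that pushes duplicate entries and skips stale ones instead of calling decrease-key.

```go
package main

import "fmt"

// node is a heap-ordered tree; its degree is len(children).
type node[T any] struct {
	key      int
	val      T
	children []*node[T]
}

// FibHeap sketches only the insert/extract-min half of a Fibonacci heap:
// inserts are O(1) appends to a root list, and all restructuring waits
// until ExtractMin. There is no decrease-key, hence no marks or cascading cuts.
type FibHeap[T any] struct {
	roots []*node[T]
	min   *node[T]
	size  int
}

func (h *FibHeap[T]) Len() int { return h.size }

// Insert adds a one-node tree to the root list and updates the min pointer.
func (h *FibHeap[T]) Insert(key int, val T) {
	x := &node[T]{key: key, val: val}
	h.roots = append(h.roots, x)
	if h.min == nil || key < h.min.key {
		h.min = x
	}
	h.size++
}

// ExtractMin removes the minimum root, promotes its children to roots, and
// consolidates by linking roots of equal degree until all degrees differ.
func (h *FibHeap[T]) ExtractMin() (key int, val T, ok bool) {
	m := h.min
	if m == nil {
		return key, val, false
	}
	pending := append([]*node[T]{}, m.children...)
	for _, r := range h.roots {
		if r != m {
			pending = append(pending, r)
		}
	}
	byDegree := map[int]*node[T]{}
	for _, x := range pending {
		for {
			y, found := byDegree[len(x.children)]
			if !found {
				break
			}
			delete(byDegree, len(x.children))
			if y.key < x.key {
				x, y = y, x // the smaller key stays on top
			}
			x.children = append(x.children, y)
		}
		byDegree[len(x.children)] = x
	}
	h.roots, h.min = nil, nil
	for _, r := range byDegree {
		h.roots = append(h.roots, r)
		if h.min == nil || r.key < h.min.key {
			h.min = r
		}
	}
	h.size--
	return m.key, m.val, true
}

func main() {
	var h FibHeap[string]
	for _, k := range []int{5, 3, 8, 1, 9, 2} {
		h.Insert(k, fmt.Sprint("node-", k))
	}
	for h.Len() > 0 {
		k, v, _ := h.ExtractMin()
		fmt.Println(k, v)
	}
}
```

The payoff over a binary heap comes from deferring work: cheap inserts, with the linking cost paid only when the minimum is removed.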

OH MY FREAKING HECK. So... I made my pathfinder able to run as Dijkstra, or as A* if the interface includes a heuristic. When I tried it without the heuristic, it finished faster :|

So now to figure out why it’s not working right.
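One way to let the same search run as either Dijkstra or A*, sketched in Go under the assumption that the heuristic lives in an optional interface detected with a type assertion. The `Graph`, `Heuristic` and `ShortestPath` names and the toy grid are hypothetical, not the code in search.go. With a heuristic of zero, A* ordering reduces to Dijkstra's; the heuristic must never overestimate the remaining cost, or the first time the goal is popped may not be the cheapest path.

```go
package main

import (
	"container/heap"
	"fmt"
)

// Edge, Graph and Heuristic are hypothetical, not the types in search.go.
type Edge[N comparable] struct {
	To   N
	Cost int
}

type Graph[N comparable] interface{ Neighbors(n N) []Edge[N] }

// Heuristic is optional and must never overestimate the remaining cost.
type Heuristic[N comparable] interface{ Estimate(from, goal N) int }

type item[N comparable] struct {
	node           N
	cost, priority int // g so far, and f = g + h
}

type queue[N comparable] []item[N]

func (q queue[N]) Len() int           { return len(q) }
func (q queue[N]) Less(i, j int) bool { return q[i].priority < q[j].priority }
func (q queue[N]) Swap(i, j int)      { q[i], q[j] = q[j], q[i] }
func (q *queue[N]) Push(x any)        { *q = append(*q, x.(item[N])) }
func (q *queue[N]) Pop() any {
	old := *q
	it := old[len(old)-1]
	*q = old[:len(old)-1]
	return it
}

// ShortestPath runs A* if g also implements Heuristic, otherwise Dijkstra
// (h = 0). It returns the cheapest cost from start to goal, or -1.
func ShortestPath[N comparable](g Graph[N], start, goal N) int {
	h := func(N) int { return 0 }
	if hg, ok := g.(Heuristic[N]); ok {
		h = func(n N) int { return hg.Estimate(n, goal) }
	}
	best := map[N]int{start: 0}
	q := &queue[N]{{node: start, priority: h(start)}}
	for q.Len() > 0 {
		cur := heap.Pop(q).(item[N])
		if cur.node == goal {
			return cur.cost
		}
		if cur.cost > best[cur.node] {
			continue // stale entry, left behind instead of a decrease-key
		}
		for _, e := range g.Neighbors(cur.node) {
			c := cur.cost + e.Cost
			if old, seen := best[e.To]; !seen || c < old {
				best[e.To] = c
				heap.Push(q, item[N]{node: e.To, cost: c, priority: c + h(e.To)})
			}
		}
	}
	return -1
}

// grid is a toy 4-connected graph; entering a cell costs its digit (1-9).
type grid []string
type pt struct{ r, c int }

func (g grid) Neighbors(p pt) []Edge[pt] {
	var out []Edge[pt]
	for _, d := range []pt{{0, 1}, {1, 0}, {0, -1}, {-1, 0}} {
		n := pt{p.r + d.r, p.c + d.c}
		if n.r >= 0 && n.r < len(g) && n.c >= 0 && n.c < len(g[n.r]) {
			out = append(out, Edge[pt]{To: n, Cost: int(g[n.r][n.c] - '0')})
		}
	}
	return out
}

// manhattan adds a heuristic to grid, which switches ShortestPath to A*.
// It is admissible here because every step costs at least 1.
type manhattan struct{ grid }

func (manhattan) Estimate(a, b pt) int {
	dr, dc := a.r-b.r, a.c-b.c
	if dr < 0 {
		dr = -dr
	}
	if dc < 0 {
		dc = -dc
	}
	return dr + dc
}

func main() {
	g, goal := grid{"131", "191", "111"}, pt{2, 2}
	fmt.Println(ShortestPath[pt](g, pt{}, goal))            // Dijkstra
	fmt.Println(ShortestPath[pt](manhattan{g}, pt{}, goal)) // A*
}
```

Both calls should report the same cost; the heuristic only changes the order in which nodes are expanded.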

man… day17 has been a struggle for me. I have managed to implement A*, but the solve still takes about 2 minutes for me. Not sure how some are able to get it under 10 seconds.

Solution: https://git.sour.is/xuu/advent-of-code/src/branch/main/day17/main.go

A* PathFind: https://git.sour.is/xuu/advent-of-code/src/branch/main/search.go

Some seem to simplify the seen check to only horizontal/vertical instead of each direction, but it doesn’t give me the right answer.
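A minimal Go sketch of the axis-only seen check, assuming the Day 17 rules (Part 1: at most three blocks in a straight line; Part 2: at least four and at most ten) and that heat loss is counted for each block entered. The key only works when each expansion generates the whole straight run at once and then forces a turn; a search that advances one cell at a time still has to remember the direction and step count. `MinHeatLoss` and `state` are hypothetical names, not from the linked solution.

```go
package main

import (
	"container/heap"
	"fmt"
)

// state is the simplified seen-key: a position plus the axis the crucible
// arrived on (0 = horizontal, 1 = vertical), not one of four directions.
type state struct{ r, c, axis int }

type entry struct {
	s    state
	cost int
}

type minQueue []entry

func (q minQueue) Len() int           { return len(q) }
func (q minQueue) Less(i, j int) bool { return q[i].cost < q[j].cost }
func (q minQueue) Swap(i, j int)      { q[i], q[j] = q[j], q[i] }
func (q *minQueue) Push(x any)        { *q = append(*q, x.(entry)) }
func (q *minQueue) Pop() any {
	old := *q
	e := old[len(old)-1]
	*q = old[:len(old)-1]
	return e
}

// MinHeatLoss runs Dijkstra from the top-left to the bottom-right cell.
// Every expansion turns onto the other axis and then emits one successor for
// each straight run of minRun..maxRun cells, in both directions. Because the
// whole run is generated at once, the key never needs a direction or step count.
func MinHeatLoss(grid []string, minRun, maxRun int) int {
	rows, cols := len(grid), len(grid[0])
	dist := map[state]int{}
	q := &minQueue{}
	for axis := 0; axis < 2; axis++ { // the first move may go either way
		s := state{0, 0, axis}
		dist[s] = 0
		heap.Push(q, entry{s, 0})
	}
	for q.Len() > 0 {
		e := heap.Pop(q).(entry)
		if e.cost > dist[e.s] {
			continue // stale queue entry
		}
		if e.s.r == rows-1 && e.s.c == cols-1 {
			return e.cost
		}
		next := 1 - e.s.axis
		for _, sign := range []int{1, -1} {
			r, c, cost := e.s.r, e.s.c, e.cost
			for step := 1; step <= maxRun; step++ {
				if next == 0 {
					c += sign
				} else {
					r += sign
				}
				if r < 0 || r >= rows || c < 0 || c >= cols {
					break
				}
				cost += int(grid[r][c] - '0') // heat lost entering this block
				if step < minRun {
					continue // too short to stop or turn yet
				}
				ns := state{r, c, next}
				if old, ok := dist[ns]; !ok || cost < old {
					dist[ns] = cost
					heap.Push(q, entry{ns, cost})
				}
			}
		}
	}
	return -1
}

func main() {
	grid := []string{"11111", "99999"}
	fmt.Println(MinHeatLoss(grid, 1, 3))  // at most three blocks in a straight line
	fmt.Println(MinHeatLoss(grid, 1, 10)) // longer runs allowed
}
```

Whether the crucible arrived moving left or right does not matter, since it must turn anyway and both perpendicular directions stay open; that is why the key can drop the direction.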

2023, you are withdrawing from the world, but before you go I want to thank you for being the year that went between 2022 and 2024.

Pinellas County - Long Run: 20.04 miles, 00:09:48 average pace, 03:16:16 duration

kept it chill, between 9:30 and 10:00 pace. the first half almost felt too easy. it was not until i had to go over the overpasses again that my legs started to actually feel it a bit. on the leg back i would just “rope” people in and then slow down for a bit to rest. this was a great confidence boost and a nice way to end the year.

#running

Pinellas County - Long Run: 16.66 miles, 00:09:16 average pace, 02:34:24 duration

whew, the rough plan was a 3 mile warm-up, then 1 mile on/off at 8:30 pace, then cool down the rest of the way. a little bit fast on some intervals, and boy did that bridge wipe me out (the third interval?). during the cool down i had to stop to find tissues, and again going up the final bridge. not trying to kill myself, since i still have parental duties with it being christmas eve and all. overall it was a great outing if i must say so.

#running

I fell off a stupid e-scooter and have been waiting at the hospital for more than three hours now. Not worth it. I almost broke my left elbow and right wrist. Got lucky because I was wearing a helmet. Not going to try that again. The wheels slipped on the wet zebra crossing paint in a turn.

My last working day of 2023 is going strong! Just a couple dozen things left to take care of.

Today’s Advent of Code puzzle was rather easy (luckily), so I spent the day doing two other things:

- Explore VGA a bit: How to draw pixels on DOS all by yourself without a library in graphics mode 12h?

- Explore XMS a bit: How can I use more than 640 kB / 1 MB on DOS?

Both are … quite awkward. 😬 For VGA, I’ll stick to using the Borland Graphics Interface for now. Mode 13h is great, all pixels are directly addressable – but it’s only 320x200. Mode 12h (640x480 with 16 colors) is pretty horrible to use with all the planes and whatnot.
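To make the difference concrete, a small Go sketch of the address arithmetic for both modes. It only simulates video memory in an ordinary array, since real DOS code would write to segment A000h and program the VGA registers; the function and type names are hypothetical. Mode 13h is a single multiply-and-add per pixel, while mode 12h needs a byte offset, a bit mask, and one bit written in each of four planes.

```go
package main

import "fmt"

// Mode 13h: 320x200, 256 colours, one byte per pixel starting at A000:0000.
func mode13hOffset(x, y int) int { return y*320 + x }

// Mode 12h: 640x480, 16 colours, four bit planes. Every plane stores one bit
// of each pixel's colour, 8 pixels per byte and 80 bytes per scanline.
func mode12hOffset(x, y int) (offset int, mask byte) {
	return y*80 + x/8, byte(0x80) >> (x % 8)
}

// planes simulates the four 38,400-byte planes in ordinary memory. On real
// hardware, the Sequencer Map Mask register (port 3C4h, index 2) selects
// which plane(s) a byte written at A000h lands in.
type planes [4][640 * 480 / 8]byte

// SetPixel spreads the 4-bit colour across the planes, one bit per plane,
// touching only this pixel's bit in each byte.
func (p *planes) SetPixel(x, y int, color byte) {
	off, mask := mode12hOffset(x, y)
	for i := 0; i < 4; i++ {
		if color&(1<<i) != 0 {
			p[i][off] |= mask
		} else {
			p[i][off] &^= mask
		}
	}
}

// Pixel reassembles a colour by reading the same bit from every plane.
func (p *planes) Pixel(x, y int) byte {
	off, mask := mode12hOffset(x, y)
	var color byte
	for i := 0; i < 4; i++ {
		if p[i][off]&mask != 0 {
			color |= 1 << i
		}
	}
	return color
}

func main() {
	fmt.Println(mode13hOffset(319, 199)) // last byte of the 64,000-byte screen
	off, mask := mode12hOffset(639, 479)
	fmt.Println(off, mask)
	var vram planes
	vram.SetPixel(10, 20, 13)
	fmt.Println(vram.Pixel(10, 20), vram.Pixel(11, 20))
}
```

Pixels 10 and 11 on the same row share a byte in every plane, which is why mode 12h writes need the mask to avoid clobbering neighbours.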

As per this spec, I’ve written a small XMS example that uses 32 MB of memory:

https://movq.de/v/9ed329b401/xms.c

It works, but it appears the only way to make use of this memory is to copy data back and forth between conventional memory and extended memory. I don’t know how useful that is going to be. 🤔 But at least I know how it works now.

Thinking of building a simple “Things our kids say” database form, using Node, Express and SQLite3. Going beyond simple text files.

Around and around you go. When we sync up? Nobody knows!

When is most of humanity going to realise that we’re all stuck on the same space vessel, hurtling through vast, empty space together, with limited resources that cannot be replenished from some other planet?

It’s the latest Ryzen 7 chipset for the laptop/mini form factor.

I am very surprised about the times others are getting. I guess that’s the difference between interpreted and compiled showing.

@prologic@twtxt.net The “game” will involve racing and exploding cake; I think he got inspiration from SuperTuxKart. So we will see how far we can go. He’s still only five.

My son said for the first time he wanted to make a game himself today. Going to freshen up my canvas and collision detection skills.

Ahh I see how someone did it.

https://github.com/immannino/advent-of-go/blob/master/cmd/2023.go#L30-L40

@movq@www.uninformativ.de Dang. Really going overboard with this!

@prologic@twtxt.net I didn’t have to do much backtracking. I parsed into an AST-ish table and then just needed some lookups.

The part 2 was pretty easy to work into the AST after.

https://git.sour.is/xuu/advent-of-code-2023/commit/c894853cbd08d5e5733dfa14f22b249d0fb7b06c

Day 3 of #AdventOfCode puzzle 😅

Let’s go! 🤣

Come join us! 🤗

👋 Hey you Twtxters/Yarners 👋 Let’s get an Advent of Code leaderboard going!

Join with

1093404-315fafb8 and please use your usual Twtxt feed alias/name 👌

~22h to go for the 3rd #AdventOfCode puzzle (Day 3) 😅

Come join us!

Starting Advent of Code today, a day late but oh well 😅 Also going to start a Twtxt/Yarn leaderboard. Join with 1093404-315fafb8 and please use your usual Twtxt feed alias/name 👌

I will go for a walk to clear my head.

Going for Codeberg to support a non-profit organisation that stands for the common good.

@darch@neotxt.dk webmentions are dispatched from here https://git.mills.io/yarnsocial/yarn/src/branch/main/internal/post_handler.go#L160-L169

@prologic@twtxt.net it’s not. There are going to be 1000s of copycat apps built on AI, and they will all die out when the companies that own the AI platforms copy them. It happened all the time with Windows and Mac OS, and with the iPhone: flashlight and sound recorder apps, for example.

@prologic@twtxt.net the going theory is that openAI announced a new product that pretty much blew up the project of one of the board members. So that board member got 3 others to vote to fire Sam.

wtf is going on with Microsoft and OpenAI of late?! Like, Microsoft bought into OpenAI for some shocking $10bn USD, then Sam Altman got fired, and now he’s been hired by Microsoft to run a new “AI” division. wtf?! seriously?! 🤔 #Microsoft #OpenAI #Scandal

@Phys_org@feeds.twtxt.net We’re going to be killed by these people’s excesses, almost literally. This ratio is indefensible.

@movq@www.uninformativ.de I lasted a long time. Not sure where or when I “got” it. We had a cold going around with the kiddos for about a week when the wife started getting sicker than normal. Did a test and she was positive. We tested the rest of the fam and got nothing, until about 2 days later, when the others and I were positive. It largely hasn’t been too bad: a little fever and stuffy noses.

But whatever it was that hit a few days ago was horrible. It was like the switch in my head that goes to sleep mode had been shut off. I would lie down, and even though I felt sleepy, I couldn’t actually go to sleep. The anxiety hit soon after and I was just awake with no relief. And it persisted that way for three nights. I got some meds from the clinic that seemed to finally get me to sleep.

Now, the morning after, I realized that for all that time a part of me was missing. I would close my eyes and it would just go dark. No imagination, no pictures, nothing. Normally I can visualize things as I read or think about stuff, but for the last few days there was just nothing. Waking up to it was quite shocking.

Though it’s just the first night. I guess I’ll have to see if it persists. 🤞

Pinellas County - 5 mile progression: 5.01 miles, 00:09:00 average pace, 00:45:10 duration

was hoping for rain, mist, or whatever, but alas it did not happen. comfy run and talked with several people in the park. exchanged pleasantries with the older gentleman i see all the time. had to run in the grass towards the end for some kids on bikes who never passed me. must have been going fast!

#running

Wondering how that person in Nebraska is doing keeping that cornerstone open-source package going.

Game-playing DeepMind AI can beat top humans at chess, Go and poker

An artificial intelligence capable of beating humans at a variety of games is an important step towards a more general intelligence, says Google DeepMind ⌘ Read more

SPRF Half Marathon: 13.20 miles, 00:09:29 average pace, 02:05:12 duration

still hot and humid (no surprise) but more cloud cover today. no kids, but beth came and was able to cheer me on in a couple of places, which was fun. the last bit she yelled “five to go!”, which kind of got in my head a bit, although i think the heat started to get to me as well. had to take a couple of brief walks just to recollect and focus again. pretty good training run. kept it in the green for the most part and around marathon pace. can’t wait to see how cooler weather and more training will pay off!

#running #race

[lang=en] hey! What are you playing now?

I’ve been into Pokémon Black, although it’s going slowly, and I switched to a more recent game, Inscryption, which has 90s-2000s vibes.

So YouTube is really cracking down on ad-blockers. The new popup is a warning saying you can watch 3 videos before you can’t watch any more. Not sure for how long. I guess my options are a) wait for the ad-blockers to catch up, b) pay for YouTube, or c) stop using YouTube.

I think I’m going with c) stop using YouTube.

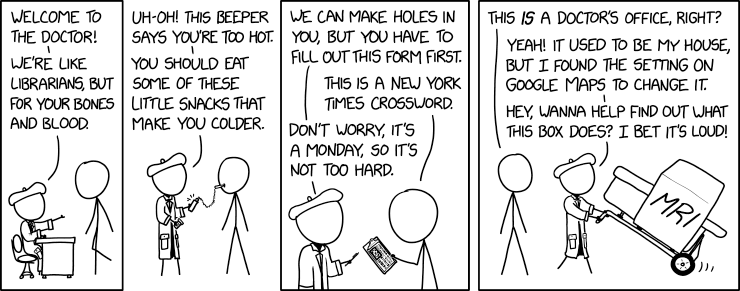

Doctor’s Office

⌘ Read more

Pinellas County - Recovery: 3.13 miles, 00:12:31 average pace, 00:39:09 duration

i was supposed to do this yesterday, but beth mentioned going out today so i just moved it.

#running