zmq seems like an interesting tool for building task queues and other types of messaging apps. the other option i’m looking at is rabbitmq, which has some interesting features like mqtt bridges and federation, but as a result involves a broker. i would like to eventually have all of the ship’s systems (or at least those on the inter-system boundary) communicate over a brokerless messaging protocol. off the shelf env devices and trackers all communicate over an mqtt bridge, so some brokering is probably unavoidable without getting into fully custom tech, but that’ll blow the budget.

@bender@twtxt.net I believe it is Unix-Unix Copy Protocol. Not Unix Copy-Copy Protocol.

if twtxt 2 is dropping gemini support, i will probably move on and spend more time on my gemini social zine protocol instead. i think the direction of the protocol is probably fine, but for me web is a tier 2 publishing channel. if the choice is between gemini and http i’m always going to pick gemini. it’s been a fun ride, but i guess this is where i get off.

@anth@a.9srv.net you wrote:

“Edits and Deletions should go; see also Section 6. This is probably the worst example of this document pushing a text document to do more protocol-like things.”

Edits and deletions are precisely what brought us here. Currently, if one replies to a twt and the original is later edited, the edit breaks replies and can drastically change the context.
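A minimal sketch of why an edit breaks replies under content addressing. The hash layout below (Blake2b over feed URL, timestamp and text, base32, last 7 characters) is an assumption for illustration, not a quote from the spec, and the feed URL is hypothetical.

```python
import base64
import hashlib


def twt_hash(feed_url: str, timestamp: str, text: str, length: int = 7) -> str:
    """Content-addressed ID derived from feed URL, timestamp and text (assumed layout)."""
    payload = f"{feed_url}\n{timestamp}\n{text}".encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    encoded = base64.b32encode(digest).decode("ascii").lower().rstrip("=")
    return encoded[-length:]


FEED = "https://example.com/twtxt.txt"  # hypothetical feed URL
STAMP = "2024-09-15T12:06:27Z"

original = twt_hash(FEED, STAMP, "Hello wrld")
edited = twt_hash(FEED, STAMP, "Hello world")  # same twt after fixing a typo

# A reply written before the edit still carries the old hash as its subject.
reply = f"(#{original}) Nice post!"
still_resolves = f"(#{edited})" in reply  # False: the thread link is lost
```

The reply keeps pointing at a hash that no twt in the feed produces any more, which is the breakage described above.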

@prologic@twtxt.net Thanks for writing that up!

I hope it can remain a living document (or sequence of draft revisions) for a good long time while we figure out how this stuff works in practice.

I am not sure how I feel about all this being done at once, vs. letting conventions arise.

For example, even today I could reply to twt abc1234 with “(#abc1234) Edit: …” and I think all you humans would understand it as an edit to (#abc1234). Maybe eventually it would become a common enough convention that clients would start to support it explicitly.

Similarly we could just start using 11-digit hashes. We should iron out whether it’s sha256 or whatever, but there’s no need to get all the other stuff right at the same time.

I have similar thoughts about how some users could try out location-based replies in a backward-compatible way (append the replyto: stuff after the legacy (#hash) style).

However I recognize that I’m not the one implementing this stuff, and it’s less work to just have everything determined up front.

Misc comments (I haven’t read the whole thing):

Did you mean to make hashes hexadecimal? You lose 11 bits that way compared to base32. I’d suggest gaining 11 bits with base64 instead.
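The arithmetic behind that comparison, as a quick sketch for an 11-character hash (the variable names are mine):

```python
HASH_LENGTH = 11  # characters, as proposed above
ALPHABET_SIZES = {"hex": 16, "base32": 32, "base64": 64}


def hash_bits(length: int, alphabet_size: int) -> int:
    """Bits of hash carried by `length` characters of a power-of-two alphabet."""
    bits_per_char = (alphabet_size - 1).bit_length()
    return length * bits_per_char


bits = {name: hash_bits(HASH_LENGTH, size) for name, size in ALPHABET_SIZES.items()}
# hex: 11 * 4 = 44 bits, base32: 11 * 5 = 55 bits, base64: 11 * 6 = 66 bits
```

So hexadecimal gives up 11 bits relative to base32, and base64 adds 11 bits on top of base32.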

“Clients MUST preserve the original hash” — do you mean they MUST preserve the original twt?

Thanks for phrasing the bit about deletions so neutrally.

I don’t like the MUST in “Clients MUST follow the chain of reply-to references…”. If someone writes a client as a 40-line shell script that requires the user to piece together the threading themselves, IMO we shouldn’t declare the client non-conforming just because they didn’t get to all the bells and whistles.

Similarly I don’t like the MUST for user agents. For one thing, you might want to fetch a feed without revealing your identity. Also, it raises the bar for a minimal implementation (I’m again thinking of the 40-line shell script).

For “who follows” lists: why must the long, random tokens be only valid for a limited time? Do you have a scenario in mind where they could leak?

Why can’t feeds be served over HTTP/1.0? Again, thinking about simple software. I recently tried implementing HTTP/1.1 and it wasn’t too bad, but 1.0 would have been slightly simpler.

Why get into the nitty-gritty about caching headers? This seems like generic advice for HTTP servers and clients.

I’m a little sad about other protocols being not recommended.

I don’t know how I feel about including markdown. I don’t mind too much that yarn users emit twts full of markdown, but I’m more of a plain text kind of person. Also it adds to the length. I wonder if putting it in a separate document would make more sense; that would also help with the length.

I’m still more in favor of (replyto:…). It’s easier to implement and the whole edits-breaking-threads thing resolves itself in a “natural” way without the need to add stuff to the protocol.

I’d love to try this out in practice to see how well it performs. 🤔 It’s all very theoretical at the moment.

More:

Subject: The [tag URI scheme](https://en.wikipedia.org/wiki/Tag_URI_scheme) looks interesting. I like that it human read- and writable. And since we already got the timestamp in the twtxt.txt it would be

somewhat trivial to parse. But there are still the issue with what the name/id should be... Maybe it doesn't have to bee that stick? Instead of using `tag:` as the prefix/protocol, it would more it clear

what we are talking about by using `in-reply-to:` (https://indieweb.org/in-reply-to) or `replyto:` similar to `mailto:` 1. `(reply:sorenpeter@darch.dk,2024-09-15T12:06:27Z)' 2.

`(in-reply-to:darch.dk/twtxt.txt,2024-09-15T12:06:27Z)' 2. `(replyto:http://darch.dk/twtxt.txt,2024-09-15T12:06:27Z)' I know it's longer that 7-11 characters, but it's self-explaining when looking at the

twtxt.txt in the raw, and the cases above can all be caught with this regex: `\([\w-]*reply[\w-]*\:` Is this something that would work?

Subject: The [tag URI scheme](https://en.wikipedia.org/wiki/Tag_URI_scheme) looks interesting. I like that it human read- and writable. And since we already got the timestamp in the twtxt.txt it would be

somewhat trivial to parse. But there are still the issue with what the name/id should be... Maybe it doesn't have to bee that stick? Instead of using `tag:` as the prefix/protocol, it would more it clear

what we are talking about by using `in-reply-to:` (https://indieweb.org/in-reply-to) or `replyto:` similar to `mailto:` 1. `(reply:sorenpeter@darch.dk,2024-09-15T12:06:27Z)` 2.

`(in-reply-to:darch.dk/twtxt.txt,2024-09-15T12:06:27Z)` 3. `(replyto:http://darch.dk/twtxt.txt,2024-09-15T12:06:27Z)` I know it's longer that 7-11 characters, but it's self-explaining when looking at the

twtxt.txt in the raw, and the cases above can all be caught with this regex: `\([\w-]*reply[\w-]*\:` Is this something that would work?

Notice the difference? Soren edited, and broke everything.

The tag URI scheme looks interesting. I like that it human read- and writable. And since we already got the timestamp in the twtxt.txt it would be somewhat trivial to parse. But there are still the issue with what the name/id should be… Maybe it doesn’t have to bee that stick?

Instead of using tag: as the prefix/protocol, it would more it clear what we are talking about by using in-reply-to: (https://indieweb.org/in-reply-to) or replyto: similar to mailto:

(reply:sorenpeter@darch.dk,2024-09-15T12:06:27Z)

(in-reply-to:darch.dk/twtxt.txt,2024-09-15T12:06:27Z)

(replyto:http://darch.dk/twtxt.txt,2024-09-15T12:06:27Z)

I know it’s longer that 7-11 characters, but it’s self-explaining when looking at the twtxt.txt in the raw, and the cases above can all be caught with this regex: \([\w-]*reply[\w-]*\:

Is this something that would work?
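A quick check of that regex against the three proposed forms. The `parse_reply` helper is a hypothetical addition that splits on the first colon and the last comma; nothing in the thread specifies how clients should parse these.

```python
import re

# The regex from the post, unchanged.
REPLY_PREFIX = re.compile(r"\([\w-]*reply[\w-]*\:")

examples = [
    "(reply:sorenpeter@darch.dk,2024-09-15T12:06:27Z)",
    "(in-reply-to:darch.dk/twtxt.txt,2024-09-15T12:06:27Z)",
    "(replyto:http://darch.dk/twtxt.txt,2024-09-15T12:06:27Z)",
]
matches = [bool(REPLY_PREFIX.match(example)) for example in examples]


def parse_reply(subject: str):
    """Split a reply subject into (scheme, target, timestamp), or return None."""
    if not REPLY_PREFIX.match(subject) or not subject.endswith(")"):
        return None
    scheme, _, rest = subject[1:-1].partition(":")
    target, _, timestamp = rest.rpartition(",")
    return scheme, target, timestamp
```

All three forms match, while a legacy subject such as `(#abc1234)` does not, so the two styles can be told apart.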

you can just have a web address.. i added mine.. though i think they have changed up the protocol so my key doesn’t seem to work anymore. https://key.sour.is/id/me@sour.is

url field in the feed to define the URL for hashing. It should have been the last encountered one. Then, assuming append-style feeds, you could override the old URL with a new one from a certain point on:
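A rough sketch of the "last encountered url wins" idea, assuming an append-style feed where `# url = ...` comment lines can appear between twts. The function name and the example URLs in the usage note are hypothetical.

```python
def urls_for_twts(feed_lines, fetched_from):
    """Pair every twt with the feed URL that was in effect when it was written.

    Assumes an append-only feed: a `# url = ...` line applies to all twts
    after it, until the next `# url = ...` line overrides it.
    """
    current_url = fetched_from  # fallback if the feed never declares a url
    result = []
    for raw in feed_lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#"):
            key, sep, value = line[1:].partition("=")
            if sep and key.strip() == "url":
                current_url = value.strip()
            continue
        timestamp, sep, text = line.partition("\t")
        if sep:
            result.append((current_url, timestamp, text))
    return result
```

Given a feed that declares `https://old.example/twtxt.txt`, then one twt, then `https://new.example/twtxt.txt`, then another twt, the first twt would be hashed with the old URL and the second with the new one.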

I was not suggesting that everyone needs to set up a working webfinger endpoint, but that we take the format of nick+(sub)domain as the base for generating the hash, together with the message date and content.

If we omit the protocol prefix from the way we do things now, will that not solve most of the problems? In the case of gemini://gemini.ctrl-c.club/~nristen/twtxt.txt they also have a working twtxt.txt at https://ctrl-c.club/~nristen/twtxt.txt … damn, I just noticed the gemini. subdomain.

Okay, what about defining a preferred protocol order as part of the hash schema? So 1: https, 2: http, 3: gemini, 4: gopher?

@xuu@txt.sour.is Thanks for the link. I found a pdf on one of the authors’ home pages: https://ahmadhassandebugs.github.io/assets/pdf/quic_www24.pdf . I wonder how the protocol was evaluated closer to the time it became a standard, and whether anything has changed. I wonder if network speeds have grown faster than CPU speeds since then. The paper says the performance is around the same below around 600 Mbps.

To be fair, I don’t think QUIC was ever expected to be faster for transferring a single stream of data. I think QUIC is supposed to reduce the impact of a dropped packet by making sure it only affects the stream it’s part of. I imagine QUIC still has that advantage, and this paper is showing the other side of a tradeoff.

So this is a great thread. I have been thinking about this too.. and what if we are coming at it from the wrong direction? Identity being tied to a given URL has always been a pain point. If i get a new URL it’s almost as if i have a new identity, because not only am I serving at a new location but all my previous communications are broken because the hashes are all wrong.

What if instead we used this idea of signatures to thread the URLs together into one identity? We keep the URL to Hash in place. Changing that now is basically a no go. But we can create a signature chain that can link identities together. So if i move to a new URL i update the chain hosted by my primary identity to include the new URL. If i have an archived feed whose old URL is now dead, we can point to where it is now hosted and use the current convention of hashing based on the first url:

The signature chain can also be used to rotate to new keys over time. Just sign in a new key or revoke an old one. The prior signatures remain valid within the scope of time the signatures were made and the keys were active.

The signature file can be hosted anywhere as long as it can be fetched by a reasonable protocol. So say we could use a webfinger that directs to the signature file? you have an identity like frank@beans.co that will discover a feed at some URL and a signature chain at another URL. Maybe even include the most recent signing key?

From there the client can auto discover old feeds to link them together into one complete timeline. And the signatures can validate that it’s all correct.

I like the idea of maybe putting the chain in the feed preamble and keeping the single self contained file.. but wonder if that would cause lots of clutter? The signature chain would be something like a log with what is changing (new key, revoke, add url) and a signature of the change + the previous signature.

# chain: ADDKEY kex14zwrx68cfkg28kjdstvcw4pslazwtgyeueqlg6z7y3f85h29crjsgfmu0w

# sig: BEGIN SALTPACK SIGNED MESSAGE. ...

# chain: ADDURL https://txt.sour.is/user/xuu

# sig: BEGIN SALTPACK SIGNED MESSAGE. ...

# chain: REVKEY kex14zwrx68cfkg28kjdstvcw4pslazwtgyeueqlg6z7y3f85h29crjsgfmu0w

# sig: ...
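A minimal sketch of the chaining idea, where each entry's signature covers the change plus the previous signature. Big assumption: HMAC-SHA256 from the standard library stands in for real public-key signatures (the example above uses saltpack). HMAC is symmetric, so the verifier here needs the secret, which a real design would avoid. All names and the key are hypothetical.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> str:
    """Stand-in signature: HMAC-SHA256 (NOT a public-key signature)."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def append_entry(chain: list, key: bytes, change: str) -> None:
    """Append a change, signing the change together with the previous signature."""
    prev_sig = chain[-1]["sig"] if chain else ""
    sig = sign(key, f"{change}\n{prev_sig}".encode("utf-8"))
    chain.append({"change": change, "sig": sig})


def verify_chain(chain: list, key: bytes) -> bool:
    """Recompute every signature in order; any altered entry breaks the chain."""
    prev_sig = ""
    for entry in chain:
        expected = sign(key, f"{entry['change']}\n{prev_sig}".encode("utf-8"))
        if not hmac.compare_digest(expected, entry["sig"]):
            return False
        prev_sig = entry["sig"]
    return True


KEY = b"demo-secret"  # hypothetical key material
chain = []
append_entry(chain, KEY, "ADDKEY kex1example")
append_entry(chain, KEY, "ADDURL https://txt.sour.is/user/xuu")
```

Because every signature includes the one before it, rewriting or dropping an earlier entry invalidates everything after it, which is what makes the log usable as a history of keys and URLs.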

re: reticulum i might just make my own internet replacement protocol if i keep finding out that the extant ones have yellow snake flag people attached to them

HTTP/2 differs from 1.x by being a binary protocol. It also multiplexes multiple channels over the same connection and can push related content to the browser ahead of time to lower the perceived latency.

HTTP/3 moves the binary protocol from HTTP/2 over to QUIC, which is based on UDP instead of TCP. This makes it better suited to mobile or unstable networks, because transmission errors can be handled at a higher level.

I suspect that people who came to Gemini from Gopher are more satisfied with the protocol than people who came to Gemini from HTTP.

So where do we start writing down the specs/protocol for twtxt2/yarn?

@shreyan@twtxt.net What do you mean when you say federation protocol?

Either use webfinger for identity like mastodon etc. or use ATproto from Bluesky (or both?)

We can use webmentions or create our own twt-mentions for notifying someone’s feed (WIP code at: https://github.com/sorenpeter/timeline/tree/webmention/views)

I’m not sure we need much else. I would not even bother with encryption, since other platforms do that better, and for me twtxt/yarn/timeline is for making things public

yarn should define its own federation protocol that extends the basic twtxt in ways that twtxt doesn’t allow. it’s time. and i’ve got ideas!

I would love to see a world where one’s twtxt feed is defined by webfinger. So @xuu@txt.sour.is => https://text.sour.is/user/xuu/twtxt.txt

Then my identity can exist independent of the feed location. And I can host multiple protocol types for my feed. Ie. http/gopher/Gemini/irc DCC/etc
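A sketch of what that lookup could look like. The `/.well-known/webfinger?resource=acct:...` query is standard WebFinger (RFC 7033); the link relation used to mark the twtxt feed is an assumption, since no such relation has been agreed on here.

```python
from urllib.parse import urlencode


def webfinger_url(identity: str) -> str:
    """Build the WebFinger query URL for an identity like @user@host."""
    user, sep, host = identity.lstrip("@").rpartition("@")
    if not (sep and user and host):
        raise ValueError(f"not a user@host identity: {identity!r}")
    query = urlencode({"resource": f"acct:{user}@{host}"})
    return f"https://{host}/.well-known/webfinger?{query}"


def feed_from_jrd(jrd: dict, rel: str = "self"):
    """Pick the feed URL out of a WebFinger JSON document.

    The `rel` value that marks a twtxt feed is hypothetical.
    """
    for link in jrd.get("links", []):
        if link.get("rel") == rel and "href" in link:
            return link["href"]
    return None
```

For `@xuu@txt.sour.is` the client would query `https://txt.sour.is/.well-known/webfinger?resource=acct%3Axuu%40txt.sour.is` and read the feed location from the returned links, so the feed can move or be offered over several protocols without the identity changing.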


I’m also more in favor of #reposts being human readable and writable. A client might implement a button that posts something simple like: #repost Look at this cool stuff, because bla bla [alt](url)

This will then make it possible to also “repost” stuff from other platforms/protocols.

The reader part of a client, can then render a preview of the link, which we talked about would be a nice (optional) feature to have in yarnd.

Unpleasant thought of the day: the #gemini protocol is not eco-friendly, because mandatory TLS makes it inaccessible on old hardware. Serving text files written in gemtext over #http is better in that case.

Check out the Nex Protocol. It’s designed to be even simpler than Gemini and Gopher. What do you think? Could be great to host a twtxt feed on.

Can’t wait until this site joins the trend and becomes “y.com” - the everything protocol.

@prologic@twtxt.net hmm, I’d be up for thinking about that. At least at the protocol and design level–I’m afraid I can’t help much with Go programming.

@prologic@twtxt.net I’ve not looked into the Bluesky protocol, so I don’t know what to think specifically. But this guy definitely is not impressed lol

Sam Wight :verified:: “Fucking Christ the @protocol i…” - Urbanists.Social

Incredible critique of the protocol Bluesky is creating. It sounds like a shitshow.

💡 Quick ‘n Dirty prototype Yarn.social protocol/spec:

If we were to decide to write a new spec/protocol, what would it look like?

Here’s my rough draft (back of paper napkin idea):

- Feeds are JSON file(s) fetchable by standard HTTP clients over TLS

- WebFinger is used at the root of a user’s domain (or multi-user) lookup. e.g:

prologic@mills.io -> https://yarn.mills.io/~prologic.json

- Feeds contain similar metadata that we’re familiar with: Nick, Avatar, Description, etc

- Feed items are signed with an Ed25519 private key. That is, all “posts” are cryptographically signed.

- Feed items continue to use content-addressing, but use the full Blake2b Base64 encoded hash.

- Edited feed items produce an “Edited” item so that clients can easily follow Edits.

- Deleted feed items produce a “Deleted” item so that clients can easily delete cached items.
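A back-of-the-napkin sketch of the content-addressing and "Edited" parts of that draft, using only the standard library. The field names (`type`, `created`, `content`, `hash`, `edits`) and the choice of what gets hashed are assumptions. Ed25519 signing is left out because it needs a third-party library.

```python
import base64
import hashlib
import json


def content_hash(created: str, content: str) -> str:
    """Full Blake2b digest (64 bytes), Base64 encoded, over timestamp and content."""
    payload = f"{created}\n{content}".encode("utf-8")
    return base64.b64encode(hashlib.blake2b(payload).digest()).decode("ascii")


def new_post(created: str, content: str) -> dict:
    """A feed item addressed by the hash of its own content."""
    return {
        "type": "Post",
        "created": created,
        "content": content,
        "hash": content_hash(created, content),
    }


def edit_post(original: dict, created: str, content: str) -> dict:
    """An 'Edited' item that points back at the item it replaces."""
    item = new_post(created, content)
    item["type"] = "Edited"
    item["edits"] = original["hash"]  # hypothetical field name
    return item


post = new_post("2024-09-15T12:06:27Z", "Hello wrld")
edited = edit_post(post, "2024-09-15T12:10:00Z", "Hello world")
feed_json = json.dumps({"nick": "example", "items": [post, edited]}, indent=2)
```

Since the edit carries the hash of the original, a client that cached the first version can follow the link and replace it instead of losing the thread.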

I played around with parsers. This time I experimented with parser combinators for twt message text tokenization. Basically, extract mentions, subjects, URLs, media and regular text. It’s kinda nice, although my solution is not completely elegant, I have to say. Especially my communication protocol between different steps for intermediate results is really ugly. Not sure about performance, I reckon a hand-written state machine parser would be quite a bit faster. I need to write a second parser and then benchmark them.

lexer.go and newparser.go resemble the parser combinators: https://git.isobeef.org/lyse/tt2/-/commit/4d481acad0213771fe5804917576388f51c340c0 It’s far from finished yet.

The first attempt in parser.go doesn’t work because it doesn’t account for backtracking; I only noticed later that I have to do that. With twt message texts there is no real error in parsing. Just regular text as a “fallback”. So it works a bit differently than parsing a real language. No error reporting required, except maybe for debugging. My goal was to port my Python code as closely as possible. But then the runes in the string gave me a bit of a headache, so I thought I’d just build myself a nice reader abstraction. When I noticed the missing backtracking, I decided to give parser combinators a try instead of improving on my look-ahead reader. It only later occurred to me that I could have just used a rune slice instead of a string. With that, porting the Python code should have been straightforward.

Yeah, all this probably doesn’t make sense unless you look at the code. And even then, you have to learn the ropes a bit. Sorry for the noise. :-)
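For readers who don't want to dig through the Go code, here is a tiny sketch of the same idea in Python. It is not a port of lexer.go or newparser.go. The token patterns are simplified assumptions. Each parser either returns a token and a new position or returns None without consuming input, so trying the next alternative amounts to backtracking, and anything unrecognised falls back to plain text.

```python
import re
from typing import Callable, List, Optional, Tuple

Token = Tuple[str, str]  # (kind, matched text)
Parser = Callable[[str, int], Optional[Tuple[Token, int]]]


def pattern(kind: str, regex: str) -> Parser:
    """Primitive parser: match `regex` at the current position or fail."""
    compiled = re.compile(regex)

    def parse(text: str, pos: int):
        match = compiled.match(text, pos)
        return ((kind, match.group(0)), match.end()) if match else None

    return parse


def first_of(*parsers: Parser) -> Parser:
    """Choice combinator: try each parser from the same position."""

    def parse(text: str, pos: int):
        for parser in parsers:
            result = parser(text, pos)
            if result is not None:
                return result
        return None

    return parse


def tokenize(text: str, token: Parser) -> List[Token]:
    """Run `token` repeatedly; unmatched characters become plain text."""
    tokens: List[Token] = []
    pos = 0
    while pos < len(text):
        result = token(text, pos)
        if result is not None:
            found, pos = result
            tokens.append(found)
        elif tokens and tokens[-1][0] == "text":
            tokens[-1] = ("text", tokens[-1][1] + text[pos])
            pos += 1
        else:
            tokens.append(("text", text[pos]))
            pos += 1
    return tokens


twt_token = first_of(
    pattern("mention", r"@<[^>\s]+ [^>\s]+>"),
    pattern("subject", r"\(#[a-z0-9]+\)"),
    pattern("url", r"https?://\S+"),
)
```

There is no error path at all: `tokenize` always succeeds, which matches the observation that twt text has no real parse errors.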

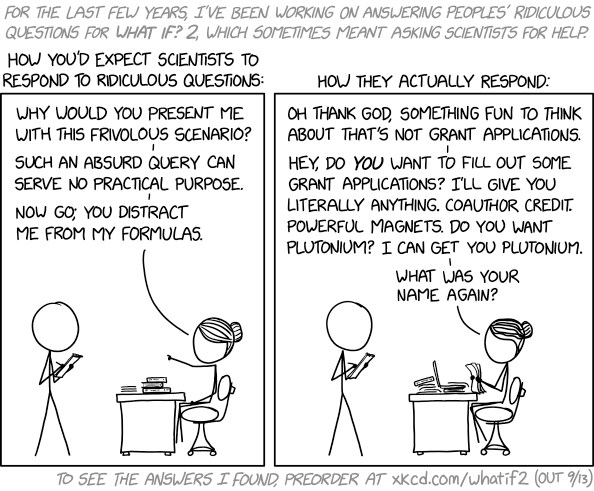

Asking Scientists Questions

⌘ Read more


You need better pen test scripts. :-) Seriously, the protocol is absurdly simple. Turn it on! Don’t trust any of the implementations? Write your own!

i want to use something similar to ssb producer tokens as the basis for novo atlantis trade protocols. interfacing with scarcity currencies has been a headache, but i think something like taller that does delegated settlement can link coop credits to another currency for foreign trade could work. devil is in the details.

What if due to climate crisis effects and disasters our digital future will depend on low-energy hardware and protocols like Gemini?

Protocol is a virtual writing surface and a mirror. Memory takes a copy and then Forgets.

i’m going to be honest with you here: i despise protocol labs. IPFS/FileCoin is a scam. fuck that shit.

Indeed! I think the first “network protocol client” I ever wrote was something that just did the PING/PONG part and passed everything else raw.
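That kind of client fits in a few lines. The sketch below assumes the protocol in question is IRC, which the post does not actually say, and the function name is made up.

```python
from typing import Optional


def respond(line: str) -> Optional[str]:
    """Answer a server PING with the matching PONG; return None otherwise.

    Assumes IRC-style keepalives ("PING :token" answered by "PONG :token").
    Lines that return None would simply be shown to the user as-is.
    """
    stripped = line.rstrip("\r\n")
    if stripped.startswith("PING"):
        return "PONG" + stripped[4:] + "\r\n"
    return None
```

Everything that is not a PING gets passed through raw, which is enough to stay connected and read along.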

No, totally not useful. 🤣 I mean, the finger protocol is pretty trivial, and it’d be fun to add, but doesn’t replace anything you’re doing.

email-based gossip protocol :ac_mischief:

interesting RFC dated April 1st, 1998: Hyper Text Coffee Pot Control Protocol (HTCPCP/1.0):

looking at the date this was published, i think the authors originally meant this as an April Fools’ joke/prank.

funny because now we have IoT devices and this is somewhat a reality today :P

I’m unclear if I’m going to do the twtxt.net discovery protocol; neither my web server nor Plan 9’s default capture agent strings. :-/

@prologic@twtxt.net the HKP is http keyserver protocol. it’s what happens when you do gpg --send-keys: it makes a POST to the keyserver with your pubkey.
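Roughly what that request looks like, built but not sent. As far as I understand the HKP draft, the key goes to `/pks/add` as a form field named `keytext`; treat both as assumptions to check against your keyserver. The host and key in the usage note are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_hkp_submit(keyserver: str, armored_key: str) -> Request:
    """Build (without sending) the POST that uploads an ASCII-armored public key."""
    body = urlencode({"keytext": armored_key}).encode("ascii")
    return Request(
        url=f"{keyserver}/pks/add",
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )
```

Calling `build_hkp_submit("https://keys.example", armored_key)` and passing the result to `urllib.request.urlopen` would perform the actual upload.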

Enjoying the constraints of the Gopher protocol as a minimalistic zen-mode kind of online publishing revival.