And maybe I should go back to using GUI designers. Haven’t used those since the Visual Basic days. 🤔 It wasn’t pretty, but you got results very quickly and efficiently.

(When I switched to Linux, I quickly got stuck with GTK and that only had Glade, which wasn’t super great at the time, so I didn’t start using it … and then I never questioned that decision …)

Just typing twts directly into my twtxt file.

Details:

- Opening my twtxt file remotely using

vim scp://user@remote:port//path/to/twtxt.txt

- Inserting the date, time and tab part of the twt with

:.!echo "$(date -Is)\t"

- In case I need to add a new line I just

Ctrl+Shift+u, type in 2028 and hit Enter

- In order to reply, you just steal a twt hash from your favorite Yarn instance.

It looks tedious, but it’s fun to know I can twt no matter where I am, as long as I can ssh in.
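For anyone who would rather script the timestamp and newline steps than type them in vim, here is a minimal Python sketch. It assumes a twt line is just an ISO 8601 timestamp, a tab, and the text, with in-twt line breaks written as U+2028 (the character the Ctrl+Shift+u trick inserts). The function name `format_twt` is made up for illustration.

```python
from datetime import datetime, timezone

# U+2028 LINE SEPARATOR stands in for a newline inside a single twt.
LINE_SEPARATOR = "\u2028"


def format_twt(text, when=None):
    """Return one twtxt line: timestamp, tab, text, trailing newline.

    Hypothetical helper; mirrors `date -Is` plus a tab as described above.
    """
    if when is None:
        # Local time with UTC offset, like `date -Is` prints it.
        when = datetime.now(timezone.utc).astimezone()
    stamp = when.replace(microsecond=0).isoformat()
    # A twt must stay on one physical line, so swap real newlines out.
    body = text.replace("\r\n", "\n").replace("\n", LINE_SEPARATOR)
    return f"{stamp}\t{body}\n"


if __name__ == "__main__":
    print(format_twt("Hello from ssh!\nSecond line."), end="")
```

Appending the returned string to the twtxt file does the same thing as the manual steps. Replies would still need the twt hash copied by hand.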

@bender@twtxt.net Hm, are we talking about different dates or are there different timezone offsets for this timezone abbreviation? With EDT being UTC-4, 2025-11-02T12:00:00Z is Sunday at 8:00 in the morning local time for you. Or where did I mess up here? :-?
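The conversion can be double-checked with a few lines of Python. This is only a sketch: it uses a fixed UTC-4 offset labelled "EDT", exactly as assumed in the message above, and does not consult any timezone database.

```python
from datetime import datetime, timedelta, timezone

# Assumption taken from the message above: EDT is a fixed UTC-4 offset.
EDT = timezone(timedelta(hours=-4), "EDT")

meeting_utc = datetime(2025, 11, 2, 12, 0, tzinfo=timezone.utc)
meeting_local = meeting_utc.astimezone(EDT)

# Weekday, wall-clock time and zone name of the converted timestamp.
print(meeting_local.strftime("%A %H:%M %Z"))
```

With that fixed offset, the result is Sunday at 08:00.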

@prologic@twtxt.net You want me to submit a reply with “I probably won’t show up”?

@lyse@lyse.isobeef.org then I blame @prologic@twtxt.net, and no one else. LOL. But yeah, it is Saturday around 06:00 my time (EDT).

@lyse@lyse.isobeef.org then I blame @prologic@twtxt.net, and no one else. LOL. But yeah, it is Saturday around 08:00 my time (EDT).

@bender@twtxt.net There’s a reason it’s in UTC time 🤣

@movq@www.uninformativ.de It’s way more expensive and time-consuming in the end. If only somebody had warned us!!1

The triangle reminds me of zalgo text: https://en.wikipedia.org/wiki/Zalgo_text

@lyse@lyse.isobeef.org They’re seriously telling us at work: “Can it be AI’d? Do it, don’t waste time!” Shit like that is the result. (What’s this weird gray triangle in the bottom right corner?)


Because OP twtxt seems to be a cross-post from the Fediverse, I am bringing some context here. It refers to this GitHub issue. This comment explains why the issue described is happening:

This is usually due to notarization checks. E.g. the binaries are checked by the notarization service (‘XProtect’) which phones home to Apple. Depending on your network environment, this can take a long time. Once the executable has been run the results are usually cached, so any subsequent startup should be fast.

OP’s network must be running on a 1,200 baud modem, or less. 🤭 I have never, ever, experienced any distinguishable delays.

Turned out I didn’t make it, sorry. Maybe next time. I hope you had a great yarn, @prologic@twtxt.net and @bender@twtxt.net, and didn’t waste any time waiting for me.

We had some gray soup with the occasional fine rain with strong wind gusts. Despite the bad forecast we took the train to Geislingen/Steige and strolled up to the Helfenstein castle ruin. All the colorful leaves were so beautiful, it didn’t matter that the sun was behind thick layers of clouds.

We then continued to the Ödenturm (lit. boring tower). By then the wind had picked up by quite a bit, just as the weatherman predicted. We were very positively surprised that the Swabian Jura Association had opened up the tower. Between May and October, the tower is typically only manned on Sundays and holidays between 10 and 17 o’clock. But yesterday was Saturday and no holiday. The lovely lady up there told us that they’re currently experimenting with opening up on Saturday, too, because there are some highly motivated members responsible for the tower.

We were the very first visitors on that day. Last Sunday, when the weather lived up to the weekday’s name, they counted 128 people up in the tower. Very impressive.

The wind gusts were howling around the tower. Luckily, there are glass windows. So, it was quite pleasant up in the tower room. Chatting with the tower guard for a while, we got even luckier: the sun came out! That was really awesome. The photos don’t do it justice. As always, it looked way more stunning in person.

Thanks to all the volunteers who make it possible to enjoy the view from the thirty odd meters up there. That certainly made our day!

After signing the guestbook we climbed down the staircase and returned to the station and headed back. The train even arrived on time. What a great little trip!

https://lyse.isobeef.org/wanderung-auf-die-burgruine-helfenstein-und-den-oedenturm-2025-10-25/

@movq@www.uninformativ.de I guess I wasn’t talking about the speed of ingesting text/context, but more the “slowness” of these tools. I think I can build solutions and fix bugs faster most of the time? Hmmm 🤔 I think the only thing it’s able to do better than me is grasp large codebases and do pattern matching a bit better, mostly because we’re limited by the interfaces we have to use and in my case being vision impaired doesn’t help :/

Hmmm 🧐 I’m anecdotally not convinced so-called “AI”(s) really save time™. – I have no proof though, I would need to do some concrete studies / numbers… – But, there is one benefit… It can save you from typing and from worsening RSI / Carpal Tunnel.

The most infuriating 3 seconds of using this Mac every day are the first time I run man and it calls home to see if I’m allowed to do that.

@movq@www.uninformativ.de I think if I was younger, with more energy, and wasn’t blind with Leber’s disease (look it up) I’d be fine™ But yeah I get the whole “exhausting” part. I’ll join you this year, since there’s only 12 puzzles and as you say, we can “take our time” it might actually be fun! (as opposed to exhausting and pressured).

@prologic@twtxt.net Yeah, lots of people are welcoming this change, saying they are relieved that there are fewer puzzles. And ngl, I, too, have been very exhausted at the end of the month. It’s a lot of fun and I loved it each time, but yeah, it can be exhausting.

@movq@www.uninformativ.de This is actually a good positive change I think!


I might even do AoC this year with the elevated stress/pressure! – The last few times I’ve tried, I’ve always felt far too much pressure and felt like a failure 😞 (mostly ya know because of my vision impairment, I couldn’t keep up!)

@movq@www.uninformativ.de Oh, we like to be paranoid. We’ve been right so many times. Unfortunately.

@prologic@twtxt.net That sounds horrible. 😅 I wouldn’t want to own such a car. (My plan is not to buy a new car after my current one finally broke down entirely.)

@lyse@lyse.isobeef.org First time I heard about eCall. I don’t think I like this. 🫤 Feels like another attempt at going for complete surveillance. Yes, yes, it’s about “security”/“safety” … it always is.

Advent of Code will be different this year:

There will only be 12 puzzles, i.e. only December 1 to December 12. This might make it more interesting for some people, because it’s (probably) less work and a lower chance of people getting burned out. 🤔

Personally, I’ll probably stretch it out over 24 days. Giving myself more time to solve each puzzle and I really want this event to last the entire month. 😅

Maybe this makes it more interesting for some people around here as well?

@movq@www.uninformativ.de Speaking of “clusterfucks”. Every fucking time I try to type something on my fucking goddamn iPhone’s little tiny ass on-screen keyboard it ends up typing out “I love you!” 🤟 For fucks sake 🤦‍♂️ – Given the size of the fucking goddamn on-screen keyboards on these things and folks with limited/poor vision, can’t we figure out what I meant to type instead of spitting out total garbage nonsense that I had no intention of typing that makes me just look silly and stupid?! 🤬 Ask @bender@twtxt.net how many times this has happened on IRC whenever I’ve been on my phone 📱

After taking most of the year off from role-playing, I’ve got 3 one-shots coming up in the next month, all of which need some tweaking before I can run them (as do my homebrew rules).

Plus there’s a “build a game” code challenge at work, a pair of media boxes I need to rebuild, a pair of dead machines I need to diagnose, and I’d like to (eventually) get my twtxt apps to a “releasable” state.

So many projects, so little (free) time…

@movq@www.uninformativ.de The time has come for “vibe coding” consultations.

@movq@www.uninformativ.de @prologic@twtxt.net Unfortunately, I had to review a coworker’s code that was also spewed out the same way. It was abso-fucking-lutely horrible. I didn’t know upfront, but then asked afterwards and got the proud (!) answer that it indeed was “assisted”. I bet this piece of garbage result was never checked or questioned the tiniest bit before submitting for review. >:-( It didn’t even do the right thing as a bonus.

What a giant shitshow. Things just have to burn to the ground several times.

@alexonit@twtxt.alessandrocutolo.it Hell yeah, that looks great! :-) What a pity you don’t have any photos, though. I love that you went to a craftsmanship school and learned some amazing skills. The older I get, the more I admire all sorts of crafts. That’s also why I started building physical stuff myself in my spare time.

This sketch is well done, so you countersunk the holes to make room for the heads. Makes absolute sense. Mille grazie! <3

Intranets have been around since Jesus times (well, not quite 😂, but you get the idea). They are fun to play with, but that’s about it. I mean, the “fun” of the Internet comes from its variety.

It happened.

“Can you help me debug this program? I vibe coded it and I have no idea what’s going on. I had no choice – learning this new language and frameworks would have taken ages, and I have severe time constraints.”

Did I say “no”? Of course not, I’m a “nice guy”. So I’m at fault as well, because I endorsed this whole thing. The other guy is also guilty, because he didn’t communicate clearly to his boss what can be done and how much time it takes. And the boss and his bosses are guilty a lot, because they’re all pushing for “AI”.

The end result is garbage software.

This particular project is still relatively small, so it might be okay at the moment. But normalizing this will yield nothing but garbage. And actually, especially if this small project works out fine, this contributes to the shittiness because management will interpret this as “hey, AI works”, so they will keep asking for it in future projects.

How utterly frustrating. This is not what I want to do every day from now on.

LOL loser you still use polynomials!? Weren’t those invented like thousands of years ago? LOL dude get with the times, everyone uses Equately for their equations now. It was made by 3 interns at Facebook, so it’s pretty much the new hotness.

@movq@www.uninformativ.de I submitted it via the form on their website (https://digital-markets-act.ec.europa.eu/contact-dma-team_en) and got the following response:

Dear citizen,

Thank you for contacting us and sharing your concerns regarding the impact of Google’s plans to introduce a developer verification process on Android. We appreciate that you have chosen to contact us, as we welcome feedback from interested parties.

As you may be aware, the Digital Markets Act (‘DMA’) obliges gatekeepers like Google to effectively allow the distribution of apps on their operating system through third party app stores or the web. At the same time, the DMA also permits Google to introduce strictly necessary and proportionate measures to ensure that third-party software apps or app stores do not endanger the integrity of the hardware or operating system or to enable end users to effectively protect security.

We have taken note of your concerns and, while we cannot comment on ongoing dialogue with gatekeepers, these considerations will form part of our assessment of the justifications for the verification process provided by Google.

Kind regards,

The DMA Team

@bender@twtxt.net I guess most clocks don’t support that. 😅 My wrist watch can do it, you can select it in the menu:

https://movq.de/v/ccb4ffcbc5/s.png

In general, different transmitter means different frequency and different encoding, for example these two:

@movq@www.uninformativ.de how do you set your clock to use a specific time signal radio station? I have one wall clock in my office, it works great, but no way to set that.

DCF77, our time signal radio station, is a great public service. I really love that. It’s just a signal that anybody can pick up, no subscription, no tracking, no nothing. Much like GPS/GNSS. 💚

I went on a short stroll in the woods and came across two great spotted woodpeckers. They were busy with their courtship display, I reckon, so it took them a while to notice me and escape into thicker parts out of sight. That was really awesome. There are a lot of apples and sloes now, looking really good. The cam issues still persist, though, I wish the photos were sharper. Also, I got the error that the function wheel was not adjusted correctly and allegedly pointed between two options numerous times. And no, it was bang on a setting. https://lyse.isobeef.org/waldspaziergang-2025-10-07/

@alexonit@twtxt.alessandrocutolo.it Thanks mate! Ah cool, now I’m curious, what did you make? :-)

You used the rubber hammer to fold the metal, not to set the rivets, right? :-? I glued cork on my wooden mallet some time ago. This worked quite well for bending. But rubber might be even better as it is a tad softer. I will try this next time, I think I have one deep down in a drawer somewhere.

@movq@www.uninformativ.de I can confirm.

An intern practicing with turtle had an error when launching it the first time because it was missing tkinter, which it uses internally.

@zvava@twtxt.net yarnd fetches the feeds roughly every ten minutes:

grep twtxt.net www/logs/twtxt.log | cut -d ' ' -f1 | tail -n 20

2025-10-04T07:00:45+02:00

2025-10-04T07:10:26+02:00

2025-10-04T07:22:43+02:00

2025-10-04T07:30:45+02:00

2025-10-04T07:40:48+02:00

2025-10-04T07:52:59+02:00

2025-10-04T08:00:07+02:00

2025-10-04T08:13:33+02:00

2025-10-04T08:23:13+02:00

2025-10-04T08:31:22+02:00

2025-10-04T08:41:29+02:00

2025-10-04T08:53:25+02:00

2025-10-04T09:03:31+02:00

2025-10-04T09:11:42+02:00

2025-10-04T09:23:11+02:00

2025-10-04T09:29:49+02:00

2025-10-04T09:36:17+02:00

2025-10-04T09:46:33+02:00

2025-10-04T09:58:40+02:00

2025-10-04T10:06:54+02:00

I suspect that the timing was just right. Or wrong, depending on how you’re looking at it. ;-)
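To put a number on "roughly every ten minutes", the gaps between the log timestamps above can be computed with a short Python sketch. The timestamps are copied verbatim from the grep output; nothing else about yarnd's scheduling is assumed.

```python
from datetime import datetime

# Timestamps copied from the log excerpt above.
LOG_TIMESTAMPS = """
2025-10-04T07:00:45+02:00 2025-10-04T07:10:26+02:00
2025-10-04T07:22:43+02:00 2025-10-04T07:30:45+02:00
2025-10-04T07:40:48+02:00 2025-10-04T07:52:59+02:00
2025-10-04T08:00:07+02:00 2025-10-04T08:13:33+02:00
2025-10-04T08:23:13+02:00 2025-10-04T08:31:22+02:00
2025-10-04T08:41:29+02:00 2025-10-04T08:53:25+02:00
2025-10-04T09:03:31+02:00 2025-10-04T09:11:42+02:00
2025-10-04T09:23:11+02:00 2025-10-04T09:29:49+02:00
2025-10-04T09:36:17+02:00 2025-10-04T09:46:33+02:00
2025-10-04T09:58:40+02:00 2025-10-04T10:06:54+02:00
""".split()

times = [datetime.fromisoformat(ts) for ts in LOG_TIMESTAMPS]

# Seconds between each fetch and the next one.
gaps = [(b - a).total_seconds() for a, b in zip(times, times[1:])]

mean_minutes = sum(gaps) / len(gaps) / 60
print(
    f"{len(gaps)} gaps, mean {mean_minutes:.1f} min, "
    f"min {min(gaps) / 60:.1f} min, max {max(gaps) / 60:.1f} min"
)
```

For this excerpt that gives 19 gaps with a mean of about 9.8 minutes, ranging from about 6.5 to 13.4 minutes, so a twt published just after a fetch can sit unnoticed for a while.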

It’s time to say goodbye to the GTK world.

GTK2 was nice to work with, relatively lightweight, and there were many cool themes back then. GTK3 was already a bit clunky, but tolerable. GTK4 now pulls in all kinds of stuff that I’m not interested in, it has become quite heavy.

Farewell. 👋

All good things come to an end, I guess.

I have an Epson printer (AcuLaser C1100) and an Epson scanner (Perfection V10), both of which I bought about 20 years ago. The hardware still works perfectly fine.

Until recently, Epson still provided Linux drivers for them. That is pretty cool! I noticed today that they have relaunched their driver website – and now I can’t find any Linux drivers for that hardware anymore. Just doesn’t list it (it does list some drivers for Windows 7, for example).

I mean, okay, we’re talking about 20 years here. That is a very long time, much more than I expected. But if it still works, why not keep using it?

Some years ago, I started archiving these drivers locally, because I anticipated that they might vanish at some point. So I can still use my hardware for now (even if I had to reinstall my PC for some reason). It might get hacky at some point in the future, though.

This once more underlines the importance of FOSS drivers for your hardware. I sadly didn’t pay attention to that 20 years ago.

The main feed got quite large again, so it’s time for another rotation into archive feeds. I just noticed that I forgot to upload the archive feeds last time. Whoops. :-)

@alexonit@twtxt.alessandrocutolo.it Hahaha, that made me laugh real good. :-D I find it always surprising what collects in a short amount of time.


@zvava@twtxt.net Yes, the specification defines the first url to be used for hashing. No matter if it points to a different feed or whatever. Just unsubscribe from malicious feeds and you’re done.

Since the first url is used for hashing, it must never change. Otherwise, it will break threading, as you already noticed. If your feed moves and you wanna keep the old messages in the same new feed, you still have to point to the old url location and keep that forever. But you can add more urls. As I said several times in the past, in hindsight, using the first url was a big mistake. It would have been much better, if the last encountered url were used for hashing onwards. This way, feed moves would be relatively straightforward. However, that ship has sailed. Luckily, feeds typically don’t relocate.
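To illustrate why changing the first url breaks threading, here is a hedged Python sketch of the hash calculation as I understand the Twt Hash extension: feed url, timestamp and text joined by newlines, hashed with BLAKE2b-256, Base32-encoded without padding, lowercased, last seven characters kept. Treat that recipe as an assumption and check the specification for the exact timestamp normalization rules. The function name and the example urls are made up.

```python
import base64
import hashlib


def twt_hash(feed_url, timestamp, text):
    """Sketch of a twt hash; the recipe is an assumption, see lead-in."""
    payload = f"{feed_url}\n{timestamp}\n{text}".encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    encoded = base64.b32encode(digest).decode("ascii").rstrip("=").lower()
    return encoded[-7:]


if __name__ == "__main__":
    stamp = "2025-10-04T07:00:45+02:00"
    text = "Hello twtxt!"
    # Hypothetical old and new feed locations.
    old = twt_hash("https://example.com/twtxt.txt", stamp, text)
    new = twt_hash("https://example.org/twtxt.txt", stamp, text)
    # Same twt, different first url: replies to `old` no longer match.
    print(old, new, old == new)
```

Because the url is part of the hashed payload, the same twt gets a different hash after the first url changes, and existing replies that reference the old hash no longer match it.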

is the first url metadata field unequivocally treated as the canon feed url when calculating hashes, or are they ignored if they’re not at least proper urls? do you just tolerate it if they’re impersonating someone else’s feed, or pointing to something that isn’t even a feed at all?

and if the first url metadata field changes, should it be logged with a time so we can still calculate hashes for old posts? or should it never be updated? (in the case of a pod, where the end user has no choice in how such events are treated) or do we redirect all the old hashes to the new ones (probably this, since it would be helpful for edits too)

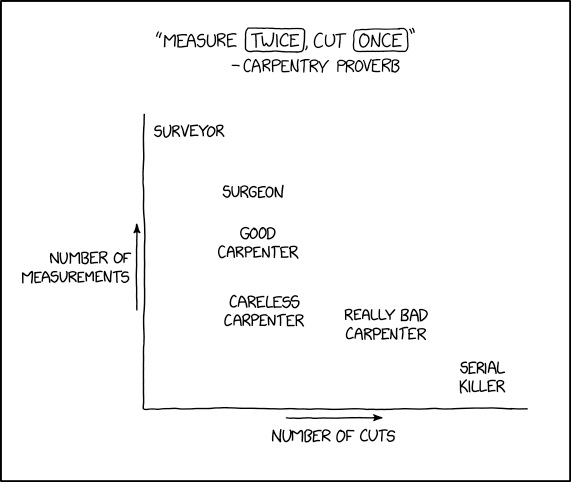

Measure Twice, Cut Once


i desperately want to simply deploy a bbycll instance but i need to change the entire database schema for the Nth time, i haven’t been working on it much recently as this back and forth w the backend (which you don’t expect from a spec as simple as twtxt) is really demotivating, as well as life and stuff getting in the way

perhaps i just need a nice shower and four coffees, midnight is when i am most productive and the hour is approaching..

@prologic@twtxt.net I too, self-host various services on a VPS (and considering buying a mini PC to keep at home instead).

I use most of it as a hosting platform for personal use only and as a remote development environment (I do share a couple of tools with a friend though).

But given the constant risks of DDoS, hacking, bots, etc. I keep any of my public facing resources purely static and on separate hosting providers (without lock-ins of course).

Lately, I began using homebrew PWAs with CouchDB as a sync database. This way I get a fantastic local-first experience and also have total control of my data, which also syncs to a locally hosted backup instance in real time.

Also, I was already aware of Salty.im, but what I’m thinking is a more feature complete solution that even my family can use quickly, Delta.chat with the new chatmail provider (self-hostable) might be the solution for my needs.

But I’m still thinking if it’s worth the trouble. I might just drop everything and only use safe channels to speak with them (free 24/7 family tech-support is easy to manage 😆).

Also, I’ll be waiting for the day you’ll share with us your story, I’m pretty curious about it!

For a very first attempt, I’m extremely happy how this tray turned out: https://lyse.isobeef.org/tmp/blechschachtel/ The photos look rougher than in person. The 0.5mm aluminium sheet was 300x200mm to begin with. Now, the accidental outside dimensions are 210x110mm. It took me about an hour to make. Tomorrow, I gotta build a simple folder, so I don’t have to hammer it anymore, but can simply bend it a little at a time.

@movq@www.uninformativ.de You didn’t miss anything. Just time for more useful stuff. ;-)

@prologic@twtxt.net you doing this reminded me of mkws, and Adi. Good times, we have seen so many people come and go. It is kind of sad, when I think about “jjl”, and Phil, and the many others…

I am feeling “mushy” today. Ugh, ageing sucks.

@prologic@twtxt.net yup, that’s what I meant. The lack of it on the URL is fine, but on the post itself it is always a good idea. Time frames matter.

@prologic@twtxt.net I can’t upload a screenshot (tried, but Yarnd simply “ate” my reply). See https://zsblog.mills.io/posts/hello-zs-blog.html. It has no date/time on it.