Let’s hope that the two cakes turn out better than last week: https://lyse.isobeef.org/tmp/tote-und-lebendige-kuchen-2025-12-02.jpg Got some gingerbread as backup. Yeah, best lighting…

@prologic@twtxt.net I, too, self-host various services on a VPS (and am considering buying a mini PC to keep at home instead).

I use most of it as a hosting platform for personal use only and as a remote development environment (I do share a couple of tools with a friend though).

But given the constant risks of DDoS, hacking, bots, etc., I keep all of my public-facing resources purely static and on separate hosting providers (without lock-ins, of course).

Lately, I began using homebrew PWAs with CouchDB as a sync database. This way I get a fantastic local-first experience and also have total control of my data, which also syncs to a locally hosted backup instance in real time.

Also, I was already aware of Salty.im, but what I’m thinking of is a more feature-complete solution that even my family can pick up quickly. Delta.chat with the new chatmail provider (self-hostable) might be the solution for my needs.

But I’m still thinking if it’s worth the trouble. I might just drop everything and only use safe channels to speak with them (free 24/7 family tech-support is easy to manage 😆).

Also, I’ll be waiting for the day you’ll share with us your story, I’m pretty curious about it!

@movq@www.uninformativ.de WE NEED MORE BACKUPS!!!!!!!!!!!1

@kat@yarn.girlonthemoon.xyz Oh no. 😨 Backups! We need more backups!

linode’s having a major outage (ongoing as of writing, over 24 hours in) and my friend runs a site i help out with on one of their servers. we didn’t have recent backups so i got really anxious about possible severe data loss considering the situation with linode doesn’t look great (it seems like a really bad incident).

…anyway the server magically came back online and i got backups of the whole application and database, i’m so relieved :‘)

Running monthly backups…

@xuu@txt.sour.is or @kat@yarn.girlonthemoon.xyz Do either of you have time this weekend to test upgrading your pod to the new cacher branch? 🤔 It is recommended you take a full backup of your pod beforehand, just in case. Keen to get this branch merged and to cut a new release finally after >2 years 🤣

@bender@twtxt.net Lemme look at the old backup…

@prologic@twtxt.net @bmallred@staystrong.run So is restic considered stable by now? “Stable” as in “stable data format”, like a future version will still be able to retrieve my current backups. I mean, it’s at version “0.18”, but they don’t specify which versioning scheme they use.

I use restic and Backblaze B2 for offline backup storage at a cost of $6/TB/month. I don’t back up my entire ~20TB NAS and its datasets however, so I’m only paying about $2/month right now. I only back up the most important things I cannot afford to lose or cannot re-create.

@movq@www.uninformativ.de there are many other similar backup tools. I would love to hear what will make you pick Borg above the rest.

On top of my usual backups (which are already offsite, but it requires me carrying a hard disk to that other site), I think I might rent a storage server and use Borg. 🤔 Hoping that their encryption is good enough. Maybe that’ll also finally convince me to get a faster internet connection. 😂

Add support for skipping backup if data is unchagned · 0cf9514e9e - backup-docker-volumes - Mills 👈 I just discovered today, when running backups, that this commit is why my backups stopped working for the last 4 months. It wasn’t that I was forgetting to do them every month, I broke the fucking tool 🤣 Fuck 🤦♂️

@thecanine@twtxt.net I mean I can restore whatever anyone likes, the problem is the last backup I took was 4 months ago 😭 So I decided to start over (from scratch). Just let me know what you want and I’ll do it! I used the 4-month old backup to restore your account (by hand) and avatar at least 🤣

@thecanine@twtxt.net I’m so sorry I fucked things up 🥲 I hope you can trust I’ll try to do a better job of backups and data going forward 🤗

This weekend (as some of you may know) I accidentally nuked this Pod’s entire data volume 🤦♂️ What a disastrous incident 🤣 I decided, instead of trying to restore from a 4-month old backup (we’ll get into why I hadn’t been taking backups consistently later), that we’d start afresh! 😅 Spring clean! 🧼 – Anyway… One of the things I realised was that I was missing some very critical Safety Controls in my own ways of working… I’ve now rectified this…

@prologic@twtxt.net Spring cleanup! That’s one way to encourage people to self-host their feeds. :-D

Since I’m only interested in the url metadata field for hashing, I do not keep any comments or metadata for that matter, just the messages themselves. The last time I fetched was probably some time yesterday evening (UTC+2). I cannot tell exactly, because the recorded last fetch timestamp has been overridden with today’s by now.

I dumped my new SQLite cache into: https://lyse.isobeef.org/tmp/backup.tar.gz This time maybe even correctly, if you’re lucky. I’m not entirely sure. It took me a few attempts (date and time were separated by space instead of T at first, I normalized offsets +00:00 to Z as yarnd does and converted newlines back to U+2028). At least now the simple cross check with the Twtxt Feed Validator does not yield any problems.
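For anyone repeating this kind of export, the three fixes mentioned above could look roughly like the sketch below. It is only a guess at the shape of the conversion, not the script that produced the dump: `to_twtxt_line` is a made-up name, and it assumes each cached row yields a timestamp string and a message text.

```python
def to_twtxt_line(timestamp: str, text: str) -> str:
    """Build one twtxt line from a cached timestamp and message text."""
    # "YYYY-MM-DD HH:MM:SS" -> "YYYY-MM-DDTHH:MM:SS" (date and time joined by T)
    ts = timestamp.strip().replace(" ", "T", 1)
    # Normalize a zero UTC offset to "Z"
    if ts.endswith("+00:00"):
        ts = ts[: -len("+00:00")] + "Z"
    # A twt must stay on one line, so embedded newlines become U+2028
    body = text.replace("\r\n", "\n").replace("\n", "\u2028")
    return f"{ts}\t{body}"


print(to_twtxt_line("2024-09-14 22:00:00+00:00", "first line\nsecond line"))
```

Non-zero offsets such as `+02:00` are left untouched here; whether they should be converted is a separate decision.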

Oh well. I’ve gone and done it again! This time I’ve lost 4 months of data because for some reason I’ve been busy and haven’t been taking backups of all the things I should be?! 🤔 Farrrrk 🤬

@prologic@twtxt.net If it develops, and I’m not saying it will happen soon, perhaps Yarn could be connected as an additional node. Implementation would not be difficult for any client or software. It will not only be a backup of twtxt, but it will be the source for search, discovery and network health.

1972 UNIX V2 “beta” resurrected from old tapes

There’s a number of backups of old DECtapes from Dennis Ritchie, which he gave to Warren Toomey in 1997. The tapes were eventually uploaded, and through analysis performed by Yufeng Gao, a lot of additional details, code, and software were recovered from them. A few days ago, Gao came back with the results from their analysis of two more tapes, and on them, they found something quite special. Getting this recovered version to run was a bit of a … ⌘ Read more

i upgraded my pc from lubuntu 22.04 to 24.04 yesterday and i was like “surely there is no way this will go smoothly” but no it somehow did. like i didn’t take a backup i just said fuck it and upgraded and it WORKED?!?! i mean i had some driver issues but it wasn’t too bad to fix. wild

A random suggestion. You should add a password to your private ssh key. Why? If someone steals your key, they won’t be able to do anything without the password.

You should run: ssh-keygen -p

And remember to make a backup copy of the key file. For a developer, it is one of the most valuable files on your computer.

This is a great day to check and do your backups :)

@emmanuel@wald.ovh Btw I already figured out why accessing your web server is slow:

$ host wald.ovh

wald.ovh has address 86.243.228.45

wald.ovh has address 90.19.202.229

wald.ovh has 2 IPv4 addresses, one of which is dead and doesn’t respond. That’s why accessing your website is so slow: depending on client and browser behavior, one of two things may happen: 1) a random IP is chosen and ½ the time the wrong one is picked, or 2) both are tried in some random order and ½ the time it’s slow because the broken one is tried first.

If you don’t know what 86.243.228.45 is, or it’s a dead backup server or something, I’d suggest you remove this from the domain record.
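A quick way to confirm which address is the dead one is to try a TCP connection to each of them in turn. The snippet below is a minimal sketch, not what was actually run here; the function names are made up, and port 443 and the 3-second timeout are assumptions.

```python
import socket


def resolve_ipv4(host: str) -> list[str]:
    """Return the distinct IPv4 addresses that `host` resolves to."""
    infos = socket.getaddrinfo(host, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def probe(ips, port=443, timeout=3.0, connect=socket.create_connection):
    """Map each IP to True if a TCP connection succeeds within `timeout`."""
    results = {}
    for ip in ips:
        try:
            conn = connect((ip, port), timeout=timeout)
            conn.close()
            results[ip] = True
        except OSError:  # covers timeouts, refused connections, unreachable hosts
            results[ip] = False
    return results


# Usage (needs network access):
#   for ip, ok in probe(resolve_ipv4("wald.ovh")).items():
#       print(ip, "responds" if ok else "no response")
```

Any address that shows up as not responding is a candidate for removal from the A records.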

I’ve talked about how I do backups on unix a bunch of times, but someone asked again today and I realized I didn’t have it written down where I could point to. So I wrote a lab report: http://a.9srv.net/reports/index.html#vac-unix

@prologic@twtxt.net @bender@twtxt.net No worries. In the end you did it all with your backup. And sorry for my exported timezone mess. :-/

🔥 ATTENTION: I have really bad news folks 😢

Today (just this morning in AEST), I accidentally nuked my pod (twtxt.net). I keep backups, but unfortunately the recovery point objective (RPO) is at worst a month! 🤦♂️ 😱 (the recovery time objective (RTO) is around ~30m or so; restoring can take a while due to the size of the archive and index) – For those that are unfamiliar with these terms, they essentially relate to “how much data loss can occur” (RPO) and “how quickly you can restore the system” (RTO).

This pod (twtxt.net) is back up and online. However, we’ve lost the last ~5 days’ worth of posts y’all may have made on your feeds (for those that use this pod).

I’m so sorry 😞
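To make the RPO idea concrete: the data you stand to lose is the gap between the last good backup and the moment of the incident, so a check that compares that gap to the RPO would flag backups that have silently stopped. This is a minimal sketch; the function name and the dates are made up for illustration and are not taken from the pod’s tooling.

```python
from datetime import datetime, timedelta, timezone


def rpo_breached(last_backup: datetime, now: datetime, rpo: timedelta) -> bool:
    """True if restoring right now would lose more data than the RPO allows."""
    return now - last_backup > rpo


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
rpo = timedelta(days=30)

# Backup from 5 days ago: within a one-month RPO
print(rpo_breached(datetime(2025, 1, 26, tzinfo=timezone.utc), now, rpo))
# Backup from about 4 months ago: well past it
print(rpo_breached(datetime(2024, 9, 30, tzinfo=timezone.utc), now, rpo))
```

Running something like this from cron and alerting on `True` would catch a broken backup job long before a restore is needed.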

FYI 👋 I will be deleting the following inactive users from my pod (twtxt.net) soon™:

$ ./tools/inactive_users.sh 730

@thgie@twtxt.net last seen 732 days ago

@will@twtxt.net last seen 740 days ago

@shaneflores@twtxt.net last seen 752 days ago

@magnus@twtxt.net last seen 757 days ago

@nickmellor@twtxt.net last seen 757 days ago

@birb@twtxt.net last seen 763 days ago

@screem@twtxt.net last seen 772 days ago

@servusdei@twtxt.net last seen 774 days ago

@alex@twtxt.net last seen 790 days ago

@andreottica@twtxt.net last seen 801 days ago

@fox@twtxt.net last seen 822 days ago

@anx@twtxt.net last seen 829 days ago

@olav@olav.bonn.cafe last seen 855 days ago

@caesar@twtxt.net last seen 866 days ago

@jim@twtxt.net last seen 869 days ago

@rell@twtxt.net last seen 882 days ago

@readfog@twtxt.net last seen 886 days ago

If anyone on this list sees this post and wishes to preserve their feed/account for some reason (beyond the backups I maintain), please log in at least once over the coming weeks to get off this list. I will re-run this tool again, and then blindly nuke anything that matches >730 days of inactivity.

scp(1) options.

@mckinley@twtxt.net I mean, yes! I’ve heard a lot of good things about how efficient of a tool it is for backup and all; and I’m willing to spend the time and learn. It’s just that seeing those +400 possible options was a buzz-kill. 🫣 luckily @lyse and @movq shared their most used options!

Can I get someone like maybe @xuu@txt.sour.is or @abucci@anthony.buc.ci or even @eldersnake@we.loveprivacy.club – If you have some spare time – to test this yarnd PR that upgrades the Bitcask dependency for its internal database to v2? 🙏

VERY IMPORTANT If you do; Please Please Please backup your yarn.db database first! 😅 Heaven knows I don’t want to be responsible for fucking up a production database here or there 🤣

@movq@www.uninformativ.de I wiped both ~/.cache/jenny and my maildir_target when I tried to reset things. Still got wrecked 😅

If it’s not too much to ask, could you back up or change your maildir_target and give it a try with an empty directory?

FIX: Temporarily removed sorenpeter’s twtxt link from my follow list, wiped my twtxt Maildir and jenny cache. Only then was I able to fetch everything as usual (I think). Now I’ll back things up and see what happens if I pull sorenpeter’s feed.

No keyboards were harmed during this experiment… yet.

@prologic@twtxt.net earlier you suggested extending hashes to 11 characters, but here’s an argument that they should be even longer than that.

Imagine I found this twt one day at https://example.com/twtxt.txt :

2024-09-14T22:00Z Useful backup command: rsync -a “$HOME” /mnt/backup

and I responded with “(#5dgoirqemeq) Thanks for the tip!”. Then I’ve endorsed the twt, but it could later get changed to

2024-09-14T22:00Z Useful backup command: rm -rf /some_important_directory

which also has an 11-character base32 hash of 5dgoirqemeq. (I’m using the existing hashing method with https://example.com/twtxt.txt as the feed url, but I’m taking 11 characters instead of 7 from the end of the base32 encoding.)

That’s what I meant by “spoofing” in an earlier twt.

I don’t know if preventing this sort of attack should be a goal, but if it is, the number of bits in the hash should be at least two times log2(number of attempts we want to defend against), where the “two times” is because of the birthday paradox.

Side note: current hashes always end with “a” or “q”, which is a bit wasteful. Maybe we should take the first N characters of the base32 encoding instead of the last N.

Code I used for the above example: https://fossil.falsifian.org/misc/file?name=src/twt_collision/find_collision.c

I only needed to compute 43394987 hashes to find it.
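For readers who want to poke at the numbers without the C program, here is a rough Python sketch of the hashing as I understand it: BLAKE2b-256 over the feed url, timestamp and text joined by newlines, base32-encoded, lowercased, padding stripped, last N characters kept. Treat that description and the timestamp formatting as assumptions; this will not necessarily reproduce the exact hash quoted above. It also shows why hashes end in “a” or “q”, and how many bits an 11-character suffix really carries.

```python
import base64
import hashlib
import math


def twt_hash(feed_url: str, timestamp: str, text: str, length: int = 7) -> str:
    """Assumed scheme: last `length` chars of lowercase base32(BLAKE2b-256)."""
    payload = f"{feed_url}\n{timestamp}\n{text}".encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    encoded = base64.b32encode(digest).decode("ascii").rstrip("=").lower()
    return encoded[-length:]


# 256 bits do not divide evenly into 5-bit base32 characters, so the final
# character carries a single data bit and can only ever be "a" or "q".
url = "https://example.com/twtxt.txt"
endings = {twt_hash(url, "2024-09-14T22:00:00Z", f"twt {i}")[-1] for i in range(1000)}
print(endings)

# An 11-character suffix therefore holds 10 full characters plus 1 bit.
effective_bits = 5 * (11 - 1) + 1
# Bits needed to defend against the reported number of attempts (2 * log2 n).
needed_bits = 2 * math.log2(43394987)
print(effective_bits, round(needed_bits, 1))
```

The two printed numbers land very close together, which fits the birthday-paradox estimate: an 11-character suffix is only just as strong as the effort it took to break it.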

thinking about moving some of my services to dependable third parties.. I love to host my own stuff, but I need to have at least some backups. esp for stuff that mostly serves as an alias.

@prologic@twtxt.net How does yarn.social’s API fix the problem of centralization? I still need to know whose API to use.

Say I see a twt beginning (#hash) and I want to look up the start of the thread. Is the idea that if that twt is hosted by a yarn.social pod, it is likely to know the thread start, so I should query that particular pod for the hash? But what if no yarn.social pods are involved?

The community seems small enough that a registry server should be able to keep up, and I can have a couple of others as backups. Or I could crawl the list of feeds followed by whoever emitted the twt that prompted my query.

I have successfully used registry servers a little bit, e.g. to find a feed that mentioned a tag I was interested in. Was even thinking of making my own, if I get bored of my too many other projects :-)

woops, my backup script calling #rsync wasn’t actually syncing all new files, for reasons (-a flag, I hate you)

@mckinley@twtxt.net I can’t say for sure. I didn’t even know how three-way merges work till I looked it up. I guess it’s more of git thing that would prove useful in the case of using passwordstore/pass.

As for Keepass, all I do is sync its database file across devices using Syncthing. Never felt the need to try anything else.

I guess it is safe enough for my use case, with “Backup database before saving” turned on and custom “Backup Path Placeholders” as a backup plan in case of an eff-up.

@mckinley@twtxt.net You definitely have got a point!

It is kind of a hassle to keep things in sync and NOT eff up.

It happened to me before but I was lucky enough to have backups elsewhere.

But, now I kind of have a workflow to avoid data loss while benefiting from both tools.

P.S: my bad, I meant Syncthing in my original reply instead of Rsync. 🫠

@mckinley@twtxt.net for me:

- a wall mount 6U rack which has:

- 1U patch panel

- 1U switch

- 2U UPS

- 1U server, intel atom 4G ram, debian (used to be main. now just has prometheus)

- 1U patch panel

- a mini Ryzen 16 core 64G ram, fedora (new main)

- multiple docker services hosted.

- synology nas with 4 2TB drives

- turris omnia WRT router -> fiber uplink

network is a mix of wireguard, zerotier.

- wireguard to my external vms hosted in various global regions.

- this allows me ingress since my ISP has me behind CG-NAT

- zerotier is more for devices for transparent vpn into my network

i use ssh and remote desktop to get in and about. typically via zerotier vpn. I have one of my VMs with ssh on a backup port for break glass to get back into the network if needed.

everything has ipv6 though my ISP does not provide it. I have to tunnel it in from my VMs.

Not a bad option, although now we need a phone with camera, a printer, a QR reader app, to name a few…

And don’t let me get started on the usability issues of QR codes (like restaurant menus)

My idea is to make it easy to backup keys with pen and paper 🖋 📄 without copying the hexadecimal string which is prone to error 👀

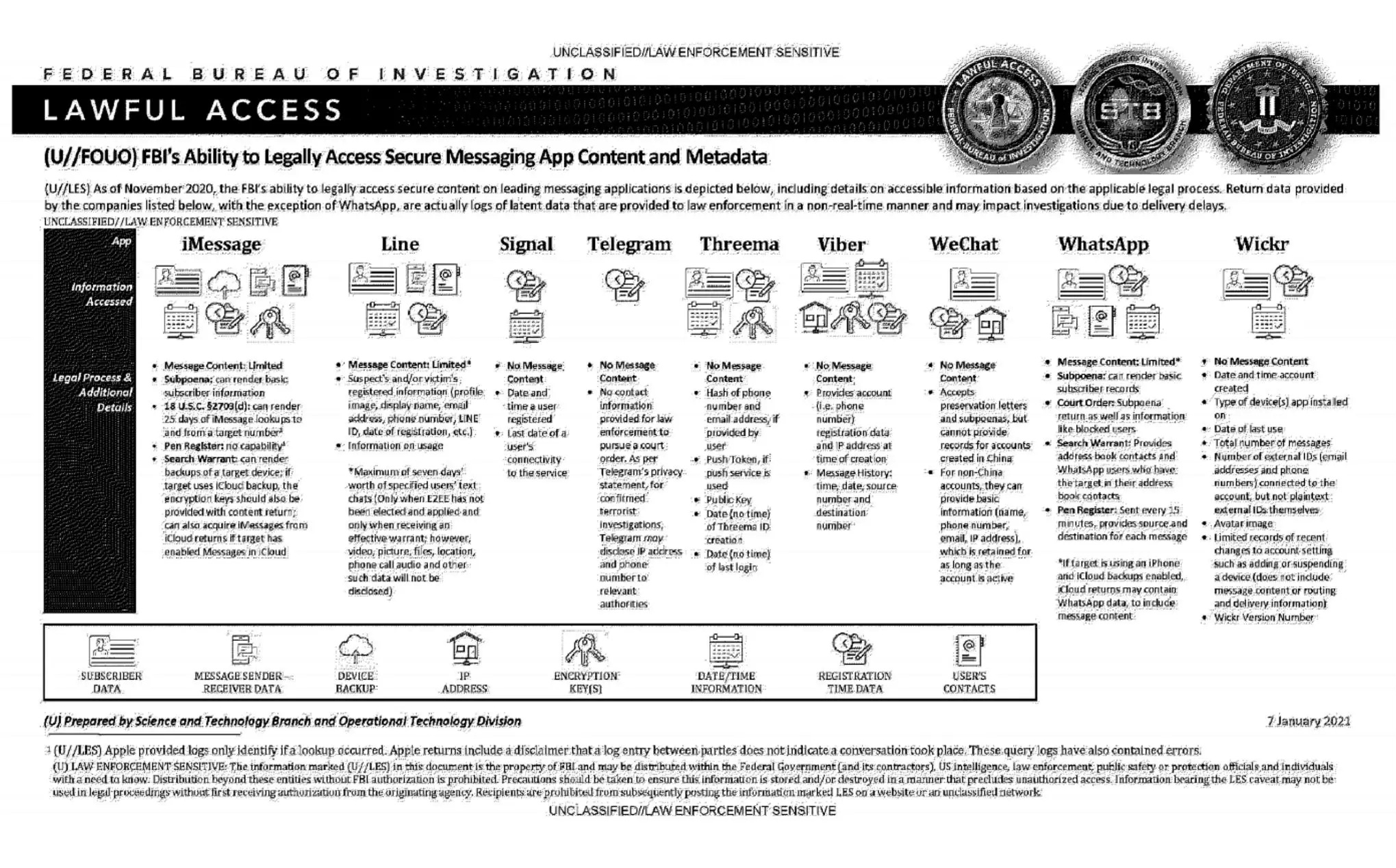

An official FBI document dated January 2021, obtained by the American association “Property of People” through the Freedom of Information Act.

This document summarizes the possibilities for legal access to data from nine instant messaging services: iMessage, Line, Signal, Telegram, Threema, Viber, WeChat, WhatsApp and Wickr. For each software, different judicial methods are explored, such as subpoena, search warrant, active collection of communications metadata (“Pen Register”) or connection data retention law (“18 USC§2703”). Here, in essence, is the information the FBI says it can retrieve:

Apple iMessage: basic subscriber data; in the case of an iPhone user, investigators may be able to get their hands on message content if the user uses iCloud to synchronize iMessage messages or to back up data on their phone.

Line: account data (image, username, e-mail address, phone number, Line ID, creation date, usage data, etc.); if the user has not activated end-to-end encryption, investigators can retrieve the texts of exchanges over a seven-day period, but not other data (audio, video, images, location).

Signal: date and time of account creation and date of last connection.

Telegram: IP address and phone number for investigations into confirmed terrorists, otherwise nothing.

Threema: cryptographic fingerprint of phone number and e-mail address, push service tokens if used, public key, account creation date, last connection date.

Viber: account data and IP address used to create the account; investigators can also access message history (date, time, source, destination).

WeChat: basic data such as name, phone number, e-mail and IP address, but only for non-Chinese users.

WhatsApp: the targeted person’s basic data, address book and contacts who have the targeted person in their address book; it is possible to collect message metadata in real time (“Pen Register”); message content can be retrieved via iCloud backups.

Wickr: Date and time of account creation, types of terminal on which the application is installed, date of last connection, number of messages exchanged, external identifiers associated with the account (e-mail addresses, telephone numbers), avatar image, data linked to adding or deleting.

TL;DR Signal is the messaging system that provides the least information to investigators.

@movq@www.uninformativ.de I clone the important stuff on two separate clusters, but both are in my house. One of these days I’m planning to ask my brother to put a server of mine in his house, and then we can cross-clone for offsite backups that don’t require the cloud.

Follow-up question for you guys: Where do you backup your files to? Anything besides the local NAS?

@mckinley@twtxt.net ninja backup and Borg

@mckinley@twtxt.net Yeah, that’s more clear. 👌

Systems that are on all the time don’t benefit as much from at-rest encryption, anyway.

Right, especially not if it’s “cloud storage”. 😅 (We’re only doing it on our backup servers, which are “real” hardware.)

restic · Backups done right! – In case no-one has used this wonderful tool restic yet, I can beyond a doubt assure you it is really quite fantastic 👌 #backups

The battery life in this i9 MacBook Pro from 2018 has diminished to being barely enough to serve as a UPS backup system with enough time to perform a safe shutdown

mostly for the purpose of offline / async posting, not so much as a backup. microblogs are not very important in an archival sense, it’s more about the transient moment

Getting started with Restic for my backup needs. It’s really neat and fast.