@doesnm@doesnm.p.psf.lt Ooops you might want to re-send that to james instead 🤣

@prologic@twtxt.net does that include mine? Otherwise it would make them 8 and 5, maybe even throw off your maths by 0.00001% 😆 … and, come on! 1.04% seems like a good ratio, considering how many gopher holes and gem capsules there are compared to how many Web servers are out there in the world 😂

@doesnm@doesnm.p.psf.lt My Salty public key is:

kex1fhxntuc0av7q48hlfj970ve297dzzghn82wp5cahr9r92y8rlrqqtwp983

@xuu@txt.sour.is they can take 2% of your disk space/bandwidth and rent it to the highest bidder 🤥

@doesnm@doesnm.p.psf.lt Do you have a sample Caddy log file you can supply? I’ll see if we can improve the tool 👌

@doesnm@doesnm.p.psf.lt Got a sample access log? Which tool are you using?

Found this: https://notabug.org/tinyrabbit/gemini-antenna. Maybe it has some user-agent alternative?

@doesnm@doesnm.p.psf.lt I couldn’t find any references to this anywhere either.

@doesnm@doesnm.p.psf.lt Like now?

We:

- Drop `# url =` from the spec.
- Don't adopt `# uuid =` (something @anth@a.9srv.net also mentioned; see below).

Instead, we use the @nick@domain to identify your feed in the first place, and use that as the identity when calculating Twt hashes: <id> + <timestamp> + <content>. Now, in an ideal world, I also agree: use WebFinger for this, and expect that for the most part you'll be doing a WebFinger lookup of @user@domain to fetch someone's feed in the first place.
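To make the <id> + <timestamp> + <content> idea concrete, here is a minimal Python sketch that content-addresses a twt using `@nick@domain` as the identity. Joining the fields with newlines, the BLAKE2b-256 digest, the unpadded lowercase base32 encoding and keeping the last 7 characters are all assumptions modelled on the existing Twt Hash extension as I understand it; the thread does not pin any of them down, and `twt_hash` is a hypothetical helper name.

```python
import base64
import hashlib


def twt_hash(feed_id: str, timestamp: str, content: str, length: int = 7) -> str:
    """Content-address a twt from <id> + <timestamp> + <content>.

    ASSUMPTIONS (not settled in the thread): fields joined with "\n",
    BLAKE2b-256 digest, unpadded lowercase base32, last `length` chars kept.
    """
    payload = "\n".join((feed_id, timestamp, content)).encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    encoded = base64.b32encode(digest).decode("ascii").rstrip("=").lower()
    return encoded[-length:]


if __name__ == "__main__":
    # Hypothetical twt, identified by @nick@domain instead of the feed URL.
    print(twt_hash("@prologic@twtxt.net", "2024-09-22T07:51:16Z", "Hello World!"))
```

Because the identity is part of the hashed payload, the same text posted by two different `@nick@domain` handles yields two different hashes.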

The only open question with WebFinger is whether it should be mandated or merely recommended.
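For reference, a WebFinger lookup (RFC 7033) of `@user@domain` is just an HTTPS GET against a well-known path on the user's domain, with an `acct:` URI as the `resource` parameter. The sketch below only builds that URL; `webfinger_url` is a hypothetical helper, and which link relation in the returned JSON document would point at a twtxt feed is not settled in this thread, so it is left out.

```python
from urllib.parse import urlencode


def webfinger_url(handle: str) -> str:
    """Build the RFC 7033 WebFinger query URL for an @user@domain handle."""
    user, sep, domain = handle.lstrip("@").partition("@")
    if not (user and sep and domain):
        raise ValueError(f"expected @user@domain, got {handle!r}")
    # urlencode percent-encodes ":" and "@" inside the acct: URI.
    query = urlencode({"resource": f"acct:{user}@{domain}"})
    return f"https://{domain}/.well-known/webfinger?{query}"


print(webfinger_url("@prologic@twtxt.net"))
# https://twtxt.net/.well-known/webfinger?resource=acct%3Aprologic%40twtxt.net
```

Whether clients must do this lookup or may fall back to a plain feed URL is exactly the mandate-vs-recommendation question above.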

Something @anth@a.9srv.net said on IRC:

17:42 I should also note in there that it doesn’t address the two things i really want it to: mandate utf-8 (which should be easy to fit in) and something for better @ mentions.

I actually agree on both counts, and it got me thinking…

@bender@twtxt.net I believe it is Unix-to-Unix Copy Protocol. Not Unix Copy-Copy Protocol.

Gemini and Gopher Twtxt feeds each account for less than 1% of the feeds in existence (about 1.04% combined):

$ inspect-db yarns.db | jq -r '.Value.URL' | awk -F'//' '{ s = ($1 ~ /^https?/) ? "http/https:" : $1; n[s]++; t++ } END { for (s in n) printf "%d %s %.2f%%\n", n[s], s, n[s] / t * 100 }' | sort -r

7 gemini: 0.66%

4 gopher: 0.38%

1046 http/https: 98.96%
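The arithmetic behind those percentages can be rechecked from the three counts alone. This Python sketch takes the reported totals as input (it does not read `yarns.db`) and also prints the combined Gemini + Gopher share, which comes to the 1.04% mentioned earlier. Note that the pipeline's final `sort -r` compares lines as text, which is why `1046` is listed last; `sort -rn` would order them numerically.

```python
# Scheme counts as reported above (7 + 4 + 1046 = 1057 feeds in total).
counts = {"gemini:": 7, "gopher:": 4, "http/https:": 1046}


def shares(counts: dict) -> dict:
    """Return each scheme's share of all feeds, in percent."""
    total = sum(counts.values())
    return {scheme: n / total * 100 for scheme, n in counts.items()}


pct = shares(counts)
for scheme in sorted(pct, key=pct.get, reverse=True):  # numeric, largest first
    print(f"{counts[scheme]} {scheme} {pct[scheme]:.2f}%")
print(f"gemini + gopher combined: {pct['gemini:'] + pct['gopher:']:.2f}%")
# 1046 http/https: 98.96%
# 7 gemini: 0.66%
# 4 gopher: 0.38%
# gemini + gopher combined: 1.04%
```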

@bender@twtxt.net Re that broken thread (#bqor23a): it's the same one. My pod doesn't have the Root Twt: https://twtxt.net/twt/bqor23a => 404 Not Found.

How in the hell did you even reply to this in the first place?

@cuaxolotl@sunshinegardens.org Wait, what!? We’re dropping Gemini support!?

@quark@ferengi.one HAHAHAHAHAHAHAHAHAHAHA! 🤣

@aelaraji@aelaraji.com ooooh! It’s that kind of mission! /me stands, salutes, turns around, and exits the room. LOL.

@quark@ferengi.one HAHA I wish! but no. It’s actually

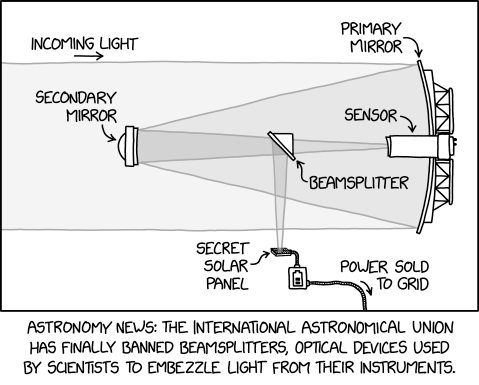

Beamsplitters


@aelaraji@aelaraji.com why, having a party with lots of libations? LOL.

Pinellas County Running: 4.05 miles, 00:08:38 average pace, 00:34:58 duration

if twtxt 2 is dropping gemini support, i will probably move on and spend more time on my gemini social zine protocol instead. i think the direction of the protocol is probably fine, but for me web is a tier 2 publishing channel. if the choice is between gemini and http i’m always going to pick gemini. it’s been a fun ride, but i guess this is where i get off.

Thricegreat’s Webpage | https://thricegreat.neocities.org/

@prologic@twtxt.net that “little database that could” is simply amazing, isn’t it? I run Conduwuit (never mind, that one uses RocksDB) and GoToSocial with it as a backend, no issues. And, of course, SQLite is the database of choice for a lot of things on iOS.

@david@collantes.us SQLite

@prologic@twtxt.net, are you running Gitea with an SQL backend, or using SQLite? Any reason you haven’t moved to Forgejo?

@prologic@twtxt.net a wise plan! Who knows, ideas change, and often plans do not hash, right? Mature, mature! :-)

@xuu@txt.sour.is was that 2% picked out randomly? I like it! LOL.

@prologic@twtxt.net I like the, allegedly, original:

“It can scarcely be denied that the supreme goal of all theory is to make the irreducible basic elements as simple and as few as possible without having to surrender the adequate representation of a single datum of experience.”

Not as simple as the interpretation you used, yet often context is king (or queen).

@prologic@twtxt.net and one could say that “for every simple problem, there is a solution that’s confusing, convoluted, and right.” :-P

@prologic@twtxt.net so, where are they? I want to take a peek at HomeTunnel (even though I don’t have a use case for it at the moment). Show us repos! :-P

@david@collantes.us yeah what @lyse@lyse.isobeef.org said. 😅 and I just chickened out seeing bigger numbers than usual.

rsync(1) but, whenever I Tab for completion and get this:

@lyse@lyse.isobeef.org and @movq@www.uninformativ.de thanks for sharing those options, they’re a good point to start from. Much appreciated! 🙏

scp(1) options.

@mckinley@twtxt.net I mean, yes! I’ve heard a lot of good things about how efficient a tool it is for backups and all, and I’m willing to spend the time and learn. It’s just that seeing those 400+ possible options was a buzzkill. 🫣 Luckily @lyse and @movq shared their most-used options!

@david@collantes.us having offsets was nice because it gives you context of where the user is in relation to you.

@prologic@twtxt.net thanks. I hate it. Might as well use UUID

@lyse@lyse.isobeef.org thank you! Rain is starting to fall very steadily. All good so far. Wife’s home, a nice meal simmers. Ah! :-D

@lyse@lyse.isobeef.org on this:

3.2 Timestamps: I feel no need to mandate UTC. Timezones are fine with me. But I could also live with this new restriction. I fail to see, though, how this change would make things any easier compared to the original format.

Exactly! If anything it will make things more complicated, no?
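A small Python sketch of the trade-off: two timestamps written with different offsets already compare as the same instant once parsed, so mandating UTC does not make comparing or sorting any easier, while normalising to UTC does throw away the offset that hints at where the poster is. The timestamps are made up, and `to_utc` is a hypothetical helper.

```python
from datetime import datetime, timezone


def to_utc(ts: str) -> datetime:
    """Parse an RFC 3339 timestamp (with offset or trailing Z) and convert it to UTC."""
    # fromisoformat() only accepts a trailing "Z" from Python 3.11 on, so map it to +00:00.
    if ts.endswith("Z"):
        ts = ts[:-1] + "+00:00"
    return datetime.fromisoformat(ts).astimezone(timezone.utc)


# Hypothetical twts: the same instant written with two different offsets.
a = to_utc("2024-09-24T14:53:35+02:00")
b = to_utc("2024-09-24T12:53:35Z")
print(a == b, a.isoformat())  # True 2024-09-24T12:53:35+00:00 (the +02:00 context is gone)
```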

Good writeup, @anth@a.9srv.net! I agree with most of your points.

3.2 Timestamps: I feel no need to mandate UTC. Timezones are fine with me. But I could also live with this new restriction. I fail to see, though, how this change would make things any easier compared to the original format.

3.4 Multi-Line Twts: What exactly do you think are bad things with multi-lines?

4.1 Hash Generation: I do like the idea of a new uuid metadata field! Any thoughts on two feeds selecting the same UUID for whatever reason? Well, the same could happen today with url.

5.1 Reply to last & 5.2 More work to backtrack: I do not understand anything you’re saying. Can you rephrase that?

8.1 Metadata should be collected up front: I generally agree, but if the uuid metadata field were a feed URL rather than a real UUID, there should probably be an exception that allows changing the feed URL mid-file after a relocation.
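To illustrate "metadata collected up front", here is a minimal Python parser (the hypothetical `parse_header`) that reads `# key = value` comment lines only until the first twt. It also shows the catch raised in 8.1: a `# url =` line appended further down the file after a move is never seen. The feed content and UUID are invented for the example. On 4.1, a random version 4 UUID carries 122 random bits, so accidental clashes are negligible, though nothing stops a feed from copying someone else's.

```python
import uuid


def parse_header(feed: str) -> dict:
    """Collect `# key = value` metadata from the top of a twtxt feed.

    Parsing stops at the first line that is neither blank nor a comment,
    i.e. metadata is assumed to be collected up front. Repeated keys are kept.
    """
    meta: dict = {}
    for line in feed.splitlines():
        stripped = line.strip()
        if not stripped:
            continue
        if not stripped.startswith("#"):
            break  # first twt reached; anything below is ignored
        key, sep, value = stripped.lstrip("#").partition("=")
        if sep:  # split on the first "=" only, so URLs with "=" survive
            meta.setdefault(key.strip(), []).append(value.strip())
    return meta


feed = "\n".join([
    "# nick = example",
    "# url = https://example.com/twtxt.txt",
    "# uuid = 6f1c2a4e-8b3d-4f7a-9c2e-1d5b7a9e3f40",
    "",
    "2024-09-25T10:00:00Z\tHello, world!",
    "# url = https://new.example.org/twtxt.txt",  # added after a move: too late
])

meta = parse_header(feed)
print(meta["url"])  # ['https://example.com/twtxt.txt']
print(f"# uuid = {uuid.uuid4()}")  # one way a fresh identifier could be minted
```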

Pinellas County Running: 4.08 miles, 00:10:08 average pace, 00:41:19 duration

@anth@a.9srv.net you wrote:

“Edits and Deletions should go; see also Section 6. This is probably the worst example of this document pushing a text document to do more protocol-like things.”

Edits and deletions are precisely what brought us here. Currently, if one replies to a twtxt and the original later gets edited, it breaks the replies and can drastically change the context.
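Why edits break threads can be shown in a few lines of Python. With a content-addressed subject, changing the text changes the hash, so an earlier reply's `(#hash)` no longer matches anything; a location-based subject built from the feed URL and timestamp stays the same. The hash scheme here is an assumption modelled on the Twt Hash extension as I understand it (BLAKE2b-256, lowercase base32, last 7 characters), and the feed URL and texts are hypothetical.

```python
import base64
import hashlib

URL = "https://example.com/twtxt.txt"  # hypothetical feed
TS = "2024-09-25T10:00:00Z"


def content_subject(url: str, ts: str, text: str) -> str:
    # ASSUMED hash scheme: BLAKE2b-256 over "url\nts\ntext", lowercase base32, last 7 chars.
    digest = hashlib.blake2b(f"{url}\n{ts}\n{text}".encode("utf-8"), digest_size=32).digest()
    return "(#" + base64.b32encode(digest).decode("ascii").rstrip("=").lower()[-7:] + ")"


def location_subject(url: str, ts: str) -> str:
    # Location-based form discussed in the thread: "(<feed URL> <timestamp>)".
    return f"({url} {ts})"


before = content_subject(URL, TS, "Meet at 5pm")
after = content_subject(URL, TS, "Meet at 6pm")  # the author edits the twt later
print(before == after)  # False: replies that quoted `before` are now orphaned
print(location_subject(URL, TS))  # unchanged by the edit
```

The flip side: the location-based subject keeps the thread together, but it now points at the edited text, which is the "changes context" concern above.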

Huh, I just discovered there's a Wikimedia store: https://store.wikimedia.org/en-fr

This is only first draft quality, but I made some notes on the #twtxt v2 proposal. http://a.9srv.net/b/2024-09-25

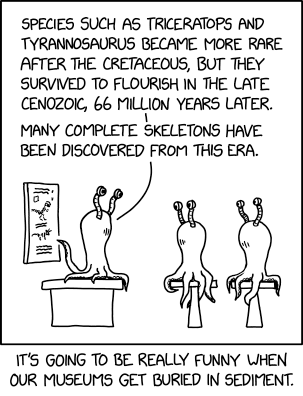

Late Cenozoic


@sorenpeter@darch.dk not even this: https://twtxt.net/media/AzUmzTN5YEJdt4VPeeprjB.png?full=1

@sorenpeter@darch.dk this will show broken, because you are hellbent on editing twtxts, aren’t you? :-D

(#2024-09-24T12:53:35Z) What does this screenshot show? The resolution is too low to read the text…

(#abcdefg12345) to something like (https://twtxt.net/user/prologic/twtxt.txt 2024-09-22T07:51:16Z).
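On the client side, the location-based form is easy to tell apart from a content hash. A minimal Python sketch, assuming the subject is exactly `(<feed URL> <timestamp>)` as in the example above; the regular expression and `parse_location_subject` are my own illustration, not part of any spec.

```python
import re
from datetime import datetime
from typing import Optional, Tuple

# ASSUMED grammar, inferred from the example above: "(<feed URL> <RFC 3339 timestamp>)".
LOCATION_SUBJECT = re.compile(r"^\((?P<url>[^\s)]+)\s+(?P<ts>\d{4}-\d{2}-\d{2}T[^\s)]+)\)")


def parse_location_subject(text: str) -> Optional[Tuple[str, datetime]]:
    """Return (feed URL, timestamp) for a location-based subject, else None."""
    m = LOCATION_SUBJECT.match(text)
    if m is None:
        return None  # e.g. a content-hash subject such as "(#abcdefg12345)"
    ts = m.group("ts")
    if ts.endswith("Z"):
        ts = ts[:-1] + "+00:00"  # fromisoformat() accepts "Z" only from Python 3.11
    return m.group("url"), datetime.fromisoformat(ts)


print(parse_location_subject(
    "(https://twtxt.net/user/prologic/twtxt.txt 2024-09-22T07:51:16Z) agreed"))
print(parse_location_subject("(#abcdefg12345) agreed"))  # None
```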

(#2024-09-24T12:45:54Z) @prologic@twtxt.net I’m not really buying this one about readability. It’s easy to recognize that this is a URL and a date, so you skim over it like you would with mentions and markdown links and images. If you are not supposed to read the raw file, then we might as well jam everything into JSON like Mastodon.

yarnd does for example) and, equally, a 5x increase in on-disk storage. This is based on the Twt Hash going from 13 bytes (content addressing) to 63 bytes (on average, for location-based addressing). There is also roughly a 20-150% increase in the size of individual feeds (in the average case) that needs to be taken into consideration.
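The 5x figure is straightforward arithmetic, sketched here in Python. The placeholder subject `#abcdefg12345` quoted earlier is 13 bytes, the example location subject is 62 bytes (close to the 63-byte average), and 63 / 13 is about 4.8. The reference count passed to `extra_bytes` (a hypothetical helper) is invented purely to show the scale.

```python
# Byte counts for the two subject styles quoted in this thread.
CONTENT_SUBJECT = "#abcdefg12345"
LOCATION_SUBJECT = "https://twtxt.net/user/prologic/twtxt.txt 2024-09-22T07:51:16Z"

content_bytes = len(CONTENT_SUBJECT.encode("utf-8"))    # 13
location_bytes = len(LOCATION_SUBJECT.encode("utf-8"))  # 62


def extra_bytes(n_references: int, old: int = 13, new: int = 63) -> int:
    """Extra storage when n_references hashes become location-based references."""
    return n_references * (new - old)


print(content_bytes, location_bytes)  # 13 62
print(f"{63 / 13:.1f}x")  # 4.8x, i.e. roughly the quoted 5x
print(extra_bytes(1_000_000))  # 50000000 (about 50 MB for a hypothetical million references)
```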

(#2024-09-24T12:44:35Z) There is an increase in space/memory for sure. But calculating the hashes also takes up CPU. I’m not good with that kind of math, but it’s a tradeoff either way.

(#2024-09-24T12:39:32Z) @prologic@twtxt.net It might be simple for you to run echo -e "\t\t" | sha256sum | base64, but for people who are not comfortable in a terminal and don't have a dev environment set up, that is magic compared to the simplicity of just copy/pasting what you see in one text file into another. Basically what @movq@www.uninformativ.de also said. I’m also on team extreme minimalism; otherwise we could just use Mastodon etc. Replacing line breaks with a tab would also make it easier to handwrite your twtxt. You don’t have to handwrite it, but at least you should have the option to, just as I do with all my HTML and CSS.
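The tab idea can be sketched as a simple fold/unfold of newlines. This Python example assumes a one-character separator: a tab as suggested here, or U+2028 LINE SEPARATOR, which I understand to be what Yarn's multi-line convention uses. `encode_twt` and `decode_twt` are hypothetical helpers. A tab is trivial to type by hand, but it can only stand for a newline if the text never contains a literal tab, which is why the encoder refuses that case.

```python
LINE_SEPARATOR = "\u2028"  # assumed Yarn convention for multi-line twts
TAB = "\t"  # the hand-writable alternative proposed in this thread


def encode_twt(text: str, sep: str = LINE_SEPARATOR) -> str:
    """Fold a multi-line twt onto a single feed line by replacing newlines with sep."""
    if sep in text:
        raise ValueError("separator already occurs in the text; decoding would be ambiguous")
    return text.replace("\n", sep)


def decode_twt(line: str, sep: str = LINE_SEPARATOR) -> str:
    """Unfold a feed line back into the original multi-line text."""
    return line.replace(sep, "\n")


msg = "first line\nsecond line"
feed_line = "2024-09-24T12:39:32Z\t" + encode_twt(msg, TAB)
timestamp, content = feed_line.split("\t", 1)  # the first tab still separates the fields
print(repr(decode_twt(content, TAB)))  # 'first line\nsecond line'
```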