user/bmallred/data/2023-08-14-16-04-49.fit: 3.88 miles, 00:11:31 average pace, 00:44:41 duration

user/bmallred/data/2023-08-13-05-39-40.fit: 8.53 miles, 00:11:06 average pace, 01:34:43 duration

What if I run my Gitea Actions Runners on some Vultr VM(s) for now? At least until I get some more hardware just for a “build farm” 🤔

user/bmallred/data/2023-08-11-05-36-04.fit: 4.47 miles, 00:09:38 average pace, 00:43:03 duration

user/bmallred/data/2023-08-10-05-25-00.fit: 8.81 miles, 00:06:45 average pace, 00:59:26 duration

user/bmallred/data/2023-08-09-05-59-05.fit: 5.02 miles, 00:09:38 average pace, 00:48:23 duration

podman works with TLS. It does not have the "--docker" switch, so you have to remove that and use the exact replacement commands that were in that github comment.

@prologic@twtxt.net Change your script to this:

#!/bin/sh

set -e

alias docker=podman

if ! command -v docker > /dev/null 2>&1; then

echo "docker not found"

exit 1

fi

mkdir -p "$HOME/.docker/certs.d/cas"

## key stuff omitted

# DO NOT DO THIS docker context create cas --docker "host=tcp://cas.run:2376,ca=$HOME/.docker/certs.d/cas/ca.pem,key=$HOME/.docker/certs.d/cas/key.pem,cert=$HOME/.docker/certs.d/cas/cert.pem"

# DO THIS:

podman system connection add cas "host=tcp://cas.run:2376,ca=$HOME/.docker/certs.d/cas/ca.pem,key=$HOME/.docker/certs.d/cas/key.pem,cert=$HOME/.docker/certs.d/cas/cert.pem"

# DO NOT DO THIS docker context use cas

# DO THIS:

podman system connection default cas

user/bmallred/data/2023-08-08-05-46-21.fit: 8.15 miles, 00:06:44 average pace, 00:54:55 duration

@prologic@twtxt.net I don’t get your objection. dockerd is 96M and has to run all the time. You can’t use docker without it running, so you have to count both. docker + dockerd is 131M, which is over 3x the size of podman. Plus you have this daemon running all the time, which eats system resources podman doesn’t use, and docker fucks with your network configuration right on install, which podman doesn’t do unless you tell it to.

That’s way fat as far as I’m concerned.

As far as corporate goes, podman is free and open source software, the end. docker is a company with a pricing model. It was founded as a startup, which suggests to me that, like almost all startups, they are seeking an exit and if they ever face troubles in generating that exit they’ll throw out all niceties and abuse their users (see Reddit, the drama with spyware in Audacity, 10,000 other examples). Sure you can use it free for many purposes, and the container bits are open source, but that doesn’t change that it’s always been a corporate entity, that they can change their policies at any time, that they can spy on you if they want, etc etc etc.

That’s way too corporate as far as I’m concerned.

I mean, all of this might not matter to you, and that’s fine! Nothing wrong with that. But you can’t have an alternate reality–these things I said are just facts. You can find them on Wikipedia or docker.com for that matter.

@prologic@twtxt.net I had a feeling my container was not running remotely. It was too crisp.

podman is definitely capable of it. I’ve never used those features though so I’d have to play around with it awhile to understand how it works and then maybe I’d have a better idea of whether it’s possible to get it to work with cas.run.

There’s a podman-specific way of allowing remote container execution that wouldn’t be too hard to support alongside docker if you wanted to go that route. Personally I don’t use docker–too fat, too corporate. podman is lightweight and does virtually everything I’d want to use docker to do.

@prologic@twtxt.net @jmjl@tilde.green

It looks like there’s a podman issue for adding the context subcommand that docker has. Currently podman does not have this subcommand, although this comment has a translation to podman commands that are similar-ish.

It looks like that’s all you need to do to support podman right now! Though I’m not 100% sure the containers I tried really are running remotely. Details below.

I manually edited the shell script that cas.run add returns, changing all the docker commands to podman commands. Specifically, I put alias docker=podman at the top so the check for docker would pass, and then I replaced the last two lines of the script with these:

podman system connection add cas "host=tcp://cas.run..."

podman system connection default cas

(that … after cas.run is a bunch of connection-specific stuff)

I ran the script and it exited with no output. It did create a connection named “cas”, and made that the default. I’m not super steeped in how podman works but I believe that’s what you need to do to get podman to run containers remotely.

I ran some containers using podman and I think they are running remotely but I don’t know the right juju to verify. It looks right though!
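One possible way to check, offered as a sketch rather than a confirmed recipe: ask the engine behind the default connection for its hostname and compare it with the local one. This assumes that `podman --remote` routes the request through the configured connection and that `podman info` exposes `.Host.Hostname`. The function names `engine_hostname` and `runs_remotely` are made up for illustration.

```shell
#!/bin/sh
# Ask the engine behind the default connection for its hostname.
# Assumption: --remote makes the client use the configured connection
# rather than the local engine.
engine_hostname() {
    podman --remote info --format '{{.Host.Hostname}}'
}

# Exit 0 when the engine's hostname differs from this machine's,
# exit 1 when they match, exit 2 when podman itself failed.
runs_remotely() {
    engine=$(engine_hostname) || return 2
    [ "$engine" != "$(uname -n)" ]
}

# Usage (not executed here):
#   if runs_remotely; then echo "remote engine"; else echo "local engine"; fi
```

If the two hostnames match, the containers are most likely running on the local machine, which would fit the "too crisp" impression. `podman system connection list` shows which connection is marked as the default.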

This means you could probably make minor modifications to the generated shell script to support podman. Maybe when the check for docker fails, check for podman, and then later in the script use the podman equivalents to the docker context commands.
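A rough sketch of that idea, under the assumption that the generated script only needs to pick a CLI and emit the matching pair of commands. `pick_container_cli` and `connection_commands` are hypothetical helper names, not part of the real cas.run script, and the connection string is passed through as an opaque value exactly as quoted in the thread.

```shell
#!/bin/sh
# Prefer docker; fall back to podman when docker is missing.
pick_container_cli() {
    for tool in docker podman; do
        if command -v "$tool" > /dev/null 2>&1; then
            printf '%s\n' "$tool"
            return 0
        fi
    done
    echo "neither docker nor podman found" >&2
    return 1
}

# Print the two commands that register the "cas" endpoint and select it.
# $1 = CLI name, $2 = connection string (opaque, not interpreted here).
connection_commands() {
    case "$1" in
        docker)
            printf 'docker context create cas --docker "%s"\n' "$2"
            printf 'docker context use cas\n'
            ;;
        podman)
            printf 'podman system connection add cas "%s"\n' "$2"
            printf 'podman system connection default cas\n'
            ;;
        *)
            echo "unsupported tool: $1" >&2
            return 1
            ;;
    esac
}

# Usage (not executed here):
#   cli=$(pick_container_cli) && connection_commands "$cli" "host=tcp://cas.run..."
```

Printing the commands instead of running them keeps the sketch safe to try. A real script would run them directly once the podman side is confirmed to work.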

@prologic@twtxt.net hmm, now I get this:

$ ssh -p 2222 -i PRIVATE_GITHUB_KEY GITHUB_USERNAME@cas.run add | sh

sh: 135: docker: not found

The quickstart says:

## Quick Start

ssh -p 2222 cas.run add | sh

so that’s why I tried this command (I had to modify it with my key and username like before)

Edit: 🤦‍♂️ and that’s because I don’t have docker on this machine. Sorry about that, false alarm.

@prologic@twtxt.net aha, thank you, that got me unjammed.

Turns out I thought I had an SSH key set up in github, but github didn’t agree with me. So, I re-added the key.

I also had to modify the command slightly to:

ssh -p 2222 -i PRIVATE_GITHUB_KEY GITHUB_USERNAME@cas.run help

since I generate app-specific keypairs and haven’t configured ssh to pick the right key automatically, so I have to specify it on the command line.

Anyhow, that did it. Thanks!
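For anyone who would rather not pass `-p` and `-i` every time, an ssh_config stanza can pin the port, user and key per host. The helper below is only a sketch that prints such a stanza. `ssh_host_stanza` is a made-up name, and the values in the usage line are the same placeholders used in the commands above.

```shell
#!/bin/sh
# Print an ssh_config stanza that pins port, user and key for one host.
# $1 = host, $2 = port, $3 = user, $4 = path to the private key
ssh_host_stanza() {
    printf 'Host %s\n' "$1"
    printf '    Port %s\n' "$2"
    printf '    User %s\n' "$3"
    printf '    IdentityFile %s\n' "$4"
    # Offer only the key named above instead of every key the agent holds.
    printf '    IdentitiesOnly yes\n'
}

# Usage (not executed here; review the output before appending it):
#   ssh_host_stanza cas.run 2222 GITHUB_USERNAME PRIVATE_GITHUB_KEY >> ~/.ssh/config
```

With a stanza like that in place, `ssh cas.run help` should be enough on its own.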

user/bmallred/data/2023-08-07-05-51-04.fit: 5.45 miles, 00:09:21 average pace, 00:51:01 duration

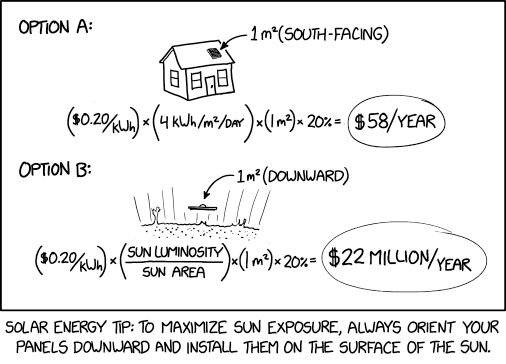

Solar Panel Placement

@prologic@twtxt.net so what is the command to use? I did ssh -p 2222 GITHUB_USERNAME@cas.run help but that gives the same error. There’s something missing here.

# ssh -p 2222 cas.run help

The authenticity of host '[cas.run]:2222 ([139.180.180.214]:2222)' can't be established.

RSA key fingerprint is SHA256:i5txciMMbXu2fbB4w/vnElNSpasFcPP9fBp52+Avdbg.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '[cas.run]:2222' (RSA) to the list of known hosts.

abucci@cas.run: Permission denied (publickey).

My proof-of-concept Container as a Service (CAS or CaaS) is now up and running. If anyone wants to have a play? 🤔 There’s still heaps to do, lots of “features” missing, but you can run stuff at least 😅

ssh -p 2222 cas.run help

Just been playing around with some numbers… A typical small static website or blog could be run for $0.30-$0.40 USD/month. How does that compare with what you’re paying @mckinley@twtxt.net ? 🤔

user/bmallred/data/2023-08-04-05-57-29.fit: 6.64 miles, 00:09:38 average pace, 01:04:01 duration

Would anyone pay for like cheap hosting if it only cost you say ~$0.50 USD per month for a basic space to run your website, twtxt feed, yarn pod, whatever? 🤔 Of course we’re talking slices of a server here in terms of memory and cpu, so this would be 10 milliCores of CPU + 64MB of Memory, more than enough to run quite a bit of shit™ 🤣 (especially when you don’t need to run or manage a full OS)
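To put rough numbers on the slice idea: the sketch below works out how many 10 milliCore + 64MB slices fit on a host and what they would bring in at $0.50 each. The host sizes in the usage lines are hypothetical, and the calculation ignores any overhead for the host OS or the container runtime.

```shell
#!/bin/sh
# How many 10m CPU / 64MB slices fit on a host.
# $1 = host CPU in milliCores, $2 = host memory in MB
slices_per_host() {
    by_cpu=$(( $1 / 10 ))
    by_mem=$(( $2 / 64 ))
    # The scarcer resource sets the limit.
    if [ "$by_cpu" -lt "$by_mem" ]; then
        echo "$by_cpu"
    else
        echo "$by_mem"
    fi
}

# Monthly revenue in US cents at 50 cents per slice.
# $1 = number of slices
monthly_revenue_cents() {
    echo $(( $1 * 50 ))
}

# Usage (hypothetical 1 vCPU / 1024MB host):
#   slices_per_host 1000 1024     # prints 16 (memory is the limit)
#   monthly_revenue_cents 16      # prints 800, i.e. $8.00
```

At these slice sizes memory runs out long before CPU does, so the memory of the host is what decides how many customers it can carry.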

user/bmallred/data/2023-08-02-05-32-53.fit: 4.41 miles, 00:09:04 average pace, 00:40:00 duration

user/bmallred/data/2023-07-31-15-34-43.fit: 1.02 miles, 00:10:08 average pace, 00:10:20 duration

user/bmallred/data/2023-07-31-14-52-02.fit: 3.21 miles, 00:09:40 average pace, 00:31:02 duration

user/bmallred/data/2023-07-30-16-22-31.fit: 1.70 miles, 00:08:56 average pace, 00:15:09 duration

Pinellas County - Long run: 10.70 miles, 00:11:36 average pace, 02:04:13 duration

had a lot going against me today (all self inflicted). got about 4h30m of sleep with too much to drink late in the evening. no hangover or anything, but probably didn’t help my rest nor hydration. also it was supposedly 80F with a feels like of 93F when i started and 89F with feels like of 111F when i finished. the legs felt heavy and didn’t have the energy to up the cadence and sustain it. it was definitely nice to get out but just one of those days.

#running

user/bmallred/data/2023-07-28-05-57-46.fit: 4.81 miles, 00:08:57 average pace, 00:43:03 duration

user/bmallred/data/2023-07-27-06-06-39.fit: 8.06 miles, 00:06:25 average pace, 00:51:43 duration

user/bmallred/data/2023-07-26-05-30-35.fit: 4.81 miles, 00:08:57 average pace, 00:43:02 duration

user/bmallred/data/2023-07-24-05-45-03.fit: 4.78 miles, 00:09:25 average pace, 00:45:03 duration

@prologic@twtxt.net It was super useful if you needed to do the sorts of things it did. I’m pretty sad.

At its core was Sage, a computational mathematics system, and their own version of Jupyter notebooks. So, you could do all kinds of different math stuff in a notebook environment and share that with people. But on top of that, there was a chat system, a collaborative editing system, a course management system (so if you were teaching a class using it you could keep track of students, assignments, grades, that sort of thing), and a bunch of other stuff I never used. It all ran in a linux container with python/conda as a base, so you could also drop to a terminal, install stuff in the container, and run X11 applications in the same environment. I never taught a class with it but I used to use it semi-regularly to experiment with ideas.

I used to be a big fan of a service called cocalc, which you could also self host. It was kind of an integrated math, data science, research, writing, and teaching platform.

I hadn’t run it in a while, and when I checked in with it today I found their web site brags that cocalc is now “extensively integrated with ChatGPT”.

Which means I can’t use it anymore, and frankly anyone doing anything serious shouldn’t use it either. Very disappointing.

@lyse@lyse.isobeef.org oh wow nice, I got it running with no trouble:

|

|

| .

| | |

| | |

| | |

|__________ | |

/ | _,..----. | / ,Y-o..

.| ,-'' | / .' / ' .

|| [ --.....- | | | `.

|| |".........__ | | \ |

b | ' | | \ |

| | | `. _,'

| | ' `'''

, , . .

\ .'| ,-'\V d---. |...

\. ,'| / |/ | / |

` ...,' ,' `..,Y / / |

_/ | | |

,' |

-._______/

user/bmallred/data/2023-07-21-05-26-51.fit: 6.47 miles, 00:09:36 average pace, 01:02:10 duration

user/bmallred/data/2023-07-19-16-39-50.fit: 01:09:41 duration

user/bmallred/data/2023-07-19-10-55-00.fit: 00:30:08 duration

user/bmallred/data/2023-07-19-09-46-42.fit: 00:41:28 duration

user/bmallred/data/2023-07-18-04-32-08.fit: 5.94 miles, 00:09:12 average pace, 00:54:41 duration

user/bmallred/data/2023-07-17-09-36-56.fit: 00:41:02 duration

user/bmallred/data/2023-07-16-05-38-40.fit: 4.03 miles, 00:08:20 average pace, 00:33:36 duration

user/bmallred/data/2023-07-15-12-40-19.fit: 00:59:09 duration

user/bmallred/data/2023-07-13-05-33-52.fit: 4.02 miles, 00:08:56 average pace, 00:35:54 duration

@prologic@twtxt.net I run fail2ban on very aggressive settings to avoid these headaches. That plus manually banning IP ranges that register bots on my pod (🙄) works pretty well for me.

user/bmallred/data/2023-07-12-05-31-59.fit: 2.07 miles, 00:08:55 average pace, 00:18:30 duration

user/bmallred/data/2023-07-10-05-21-43.fit: 6.55 miles, 00:07:19 average pace, 00:47:55 duration

Pinellas County - Long run: 10.02 miles, 00:11:40 average pace, 01:56:59 duration

rough.

- didn’t get a lot of sleep

- didn’t hydrate enough the day prior

- hot and humid

- just didn’t feel like it

- leg didn’t feel right

#running

user/bmallred/data/2023-07-08-13-44-23.fit: 01:20:10 duration

user/bmallred/data/2023-07-07-06-14-44.fit: 4.31 miles, 00:10:30 average pace, 00:45:12 duration

user/bmallred/data/2023-07-06-06-03-31.fit: 4.03 miles, 00:10:02 average pace, 00:40:26 duration

user/bmallred/data/2023-07-04-06-30-30.fit: 4.08 miles, 00:08:00 average pace, 00:32:36 duration