St Petersburg Distance Classic Marathon: 26.41 miles, 00:11:23 average pace, 05:00:39 duration

first marathon down. everything that could go wrong did. honestly i am just proud i did not quit. now i have to look at the run and figure out what i can tweak or add to my training. had a cramp start in my right quad at around mile 15. then around mile 18 both of my calves started to feel odd as if someone was lightly strumming my tendons. then they seized! this continued for the remainder of the marathon where i would walk then try to run and then stop when i had to. then during the entirety of the race my nose would not stop dripping making it difficult to breathe. ha! also my shorts almost came down twice and i had to re-tie them while carrying my handheld water in my teeth. seriously, so many things i did not expect and had not happened in any previous runs.

really happy to be able to eat spicy food and have some alcoholic beverages again though!

#running #race

Pinellas County - Easy: 5.05 miles, 00:09:08 average pace, 00:46:08 duration

everything clicked today. kept a steady but mildly progressive pace whilst keeping the heart rate in zone 2 for the most part. nothing felt strained and breathing was easy. this one was a great boost in confidence seeing the progress made in the training and very happy with it.

#running

Having fun with React - yet again. A large part of my job entails (re)learning technologies - luckily I have access to some good resources in the form of training and tutorial sites, all provided by my employer.

Pyramids: 5.39 miles, 00:10:53 average pace, 00:58:37 duration

called it early due to spicy food and opted for treadmill. was trying to get to zone 5 and was sure the pace would get me there at the peak, but barely reached threshold with conditions. curious if i was outside how different it would have been. new training block. 10:55, 9:41, 6:59

#running #treadmill

SPRF Half Marathon: 13.20 miles, 00:09:29 average pace, 02:05:12 duration

still hot and humid (no surprise) but more cloud cover today. no kids but beth came and was able to cheer me on in a couple of places which was fun. the last bit she yelled “five to go!” which kind of got in my head a bit, although i think the heat started to get to me as well. had to take a couple of brief walks just to recollect and focus again. pretty good training run. keeping it in the green for the most part and around marathon pace. can’t wait to see how cooler weather and more training will pay off!

#running #race

Pinellas County - Fartlek: 6.02 miles, 00:09:01 average pace, 00:54:16 duration

fartlek was fun and seemed to lock in at 8:00 pace easily. first day of marathon training!

#running

Winter Haven - Long run: 10.34 miles, 00:09:53 average pace, 01:42:09 duration

great weather. i did not sleep great but the body felt refreshed. hit all my paces i wanted and kept the heart rate where i needed to. stopped when i could have gone more but marathon training starts tomorrow.

#running

why am I not surprised?… https://uk.pcmag.com/ai/147757/elon-musk-will-train-his-ai-project-using-your-tweets

@marado@twtxt.net It can’t possibly be defensible, which to me always signals an attempt at a power grab. They never explicitly said “we will use anything we scrape from the web to train our AI” before–that’s new. There is growing pushback against that practice, with numerous legal cases winding through the legal system right now. Some day those cases will be heard and decided on by judges. So they’re trying to get out ahead of that, in my opinion, and cement their claims to this data before there’s a precedent set.

Most of the ones you can run locally have such a small training set they aren't worth it. They are more like the Markov chains from the subreddit simulator days.

There is one called orca that seems promising and will be released as OSS soon. It's running at numbers comparable to OpenAI 3.5.

Home | Tabby This is actually pretty cool and useful. Just tried this on my Mac locally of course and it seems to have quite good utility. What would be interesting for me would be to train it on my code and many projects 😅

verbaflow understands which came out to roughly 5GB. Then I tried some of the samples in the README. My god, this is so goddamn awfully slow it's like watching paint dry 😱 All just to predict the next few tokens?! 😳 I had a look at the resource utilisation as well while it was trying to do this "work": it was using 100% of 1.5 cores and ~10GB of memory 😳 Who da fuq actually thinks any of this large language model (LLM) and neural network crap is actually any good or useful? 🤔 It's just garbage 🤣

@prologic@twtxt.net You more or less need a data center to run one of these adequately (well, train…you can run a trained one with a little less hardware). I think that’s the idea–no one can run them locally, they have to rent them (and we know how much SaaS companies and VCs love the rental model of computing).

There’s a lot of promising research-grade work being done right now to produce models that can be run on a human-scale (not data-center-scale) computing setup. I suspect those will become more commonly deployed in the next few years.

@prologic@twtxt.net It’s a fun challenge to see how many words you can say without expressing any ideas at all. Maybe this GPT stuff should be trained to do that!

I was listening to an O’Reilly hosted event where they had the CEO of GitHub, Thomas Dohmke, talking about CoPilot. I asked about biased systems and copyright problems. He said that in the next iteration they will show name, repo and licence information next to the code snippets you see in CoPilot. This should give a bit more transparency. The developer still has to decide whether to adhere to the licence. On the other hand, I have to say he is right that probably every one of us has used a code snippet from Stack Overflow (where 99% of the time no licence or copyright is mentioned) or GitHub repos or some tutorial website without mentioning where the code came from. Of course, CoPilot has been trained on a lot of code from public repos. It is more or less a much faster and better search engine than the existing tools have been. After all, how much code has been used from public GitHub repos without adding the source to the code you pasted it into?

@carsten@yarn.zn80.net yeesh, it’s a for-pay company. I wouldn’t give them the output of your mind for free and train their AI for them.

ChatGPT is good, but it’s not that good 🤣 I asked it to write a program in Go that performs double ratcheting and well the code is total garbage 😅 – It’s only as good as the inputs it was trained on 🤣 #OpenAI #GPT3

HM [02;04;06]: 13 mile run: 13.21 miles, 00:11:02 average pace, 02:25:47 duration

felt great minus high alert for code brown since miles 7 to 11.

last run of the training block!

#running

HM [01;04;06]: 10 mile run: 10.31 miles, 00:11:59 average pace, 02:03:32 duration

last run of first training block.

#running

twtxting from a train, that’s a first :))

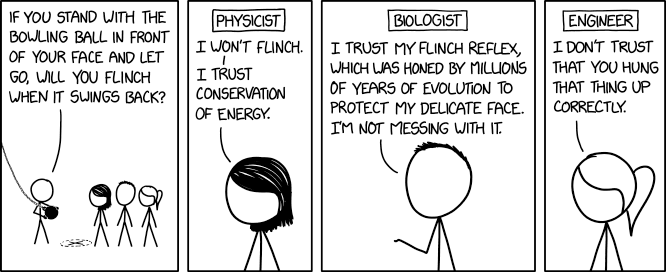

Flinch
