Just saw this user agent popping up:

yarnd/ERSION@OMMIT go1.23.4 (+https://.../twtxt.txt; @username)

ERSION? OMMIT? 😅

Let me introduce you to the much superior version 4 instead: https://lyse.isobeef.org/tmp/twxm4.xml

Hello, I want to present my new, revolutionary twtxt v3 format: twjson

Here’s why you should use it:

- It’s easy to parse

- It’s easy to read (in formatted mode :D)

- It actually uses \n for newlines, so you don’t need unprintable symbols

- Forget about hash collisions, because it uses the full hash

Here is my twjson feed: https://doesnm.p.psf.lt/twjson.json

And twtxt2json converter: https://doesnm.p.psf.lt/twjson.js
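A rough Python sketch of such a converter, for illustration only. doesnm’s actual twjson schema lives in twjson.js and is not reproduced here, so the field names `created`, `text` and `hash` are hypothetical. It assumes `timestamp<TAB>text` lines, U+2028 as the in-twt newline marker used by Yarn-style feeds, and a Yarn-like twt hash (BLAKE2b-256 over `url\ntimestamp\ntext`, lowercase base32) kept at full length instead of being truncated.

```python
import base64
import hashlib
import json

# Assumption: Yarn-style feeds encode newlines inside a twt as U+2028 LINE SEPARATOR.
LINE_SEPARATOR = "\u2028"


def twt_hash(feed_url: str, created: str, text: str) -> str:
    """Full, untruncated hash, assuming a Yarn-like BLAKE2b-256 + base32 scheme."""
    payload = f"{feed_url}\n{created}\n{text}".encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    return base64.b32encode(digest).decode("ascii").rstrip("=").lower()


def twtxt_to_json(feed_url: str, feed: str) -> str:
    """Convert twtxt lines to a JSON array (field names are hypothetical)."""
    twts = []
    # Split on "\n" only: str.splitlines() would also break on U+2028.
    for raw in feed.split("\n"):
        line = raw.rstrip("\r")
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and comments
        created, sep, text = line.partition("\t")
        if not sep:
            continue  # not a "timestamp<TAB>text" line
        twts.append({
            "created": created,
            "text": text.replace(LINE_SEPARATOR, "\n"),  # real \n in the JSON
            "hash": twt_hash(feed_url, created, text),
        })
    return json.dumps(twts, ensure_ascii=False, indent=2)
```

Splitting on `\n` rather than calling `str.splitlines()` matters here, because Python’s `splitlines()` also breaks on U+2028 and would cut multi-line twts apart.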

ReactOS 0.4.15 released

It’s been over three years since the last ReactOS release, but today, in honour of the first commit to the project by the oldest, still active contributor, the project released ReactOS 0.4.15. Of course, there’s been a steady stream of nightly releases, so it’s not like the project stalled or anything, but having a proper release is always nice to have. We are pleased to announce the release of ReactOS 0.4.15! This release offers Plug and Play fixes, audio fixes, memory management fi … ⌘ Read more

@eapl.me@eapl.me Cool!

Proposal 3 (https://git.mills.io/yarnsocial/twtxt.dev/issues/18#issuecomment-19215) has the “advantage” that you do not have to “mention” the original author if the thread slightly diverges. Conversations here seem to be typically very flat rather than tree-shaped. Hence, and despite being a tree hugger, I voted for 3 as my favorite, then 2, 1 and finally 4.

In my opinion, all proposals still need more work on the details and edge cases before they can be implemented.

Hi! For anyone following the Request for Comments on an improved syntax for replies and threads, I’ve made a comparative spreadsheet with the 4 proposals so far. It shows a syntax example, and top pros and cons I’ve found:

https://docs.google.com/spreadsheets/d/1KOUqJ2rNl_jZ4KBVTsR-4QmG1zAdKNo7QXJS1uogQVo/edit?gid=0#gid=0

Feel free to propose another collaborative platform (for those without a Google account), and also share your comments and analysis in the spreadsheet or in Gitea.

After 47 years, OpenVMS gets a package manager

As of the 18th of February, OpenVMS, known for its stability and high-availability, 47 years old and ported to 4 different CPU architectures, has a package manager! This article shows you how to use the package manager and talks about a few of its quirks. It’s an early beta version, and you do notice that when using it. A small list of things I noticed, coming from a Linux (apt/yum/dnf) background: There seems to be no automatic dependency … ⌘ Read more

Chapter 2:

Chapter 4:

Chapter 5:

ah crap. chapters 2, 4 and 5 are being cropped by yarn on upload. they should be more like 2-3 hours long

Chapter 3:

Chapter 4:

so dry.. haha this would put me to sleep

KDE splits KWin into kwin_x11 and kwin_wayland

One of the biggest behind-the-scenes changes in the upcoming Plasma 6.4 release is the split of kwin_x11 and kwin_wayland codebases. With this blog post, I would like to delve in what led us to making such a decision and what it means for the future of kwin_x11. ↫ Vlad Zahorodnii For the most part, this change won’t mean much for users of KWin on either Wayland or X11, at least for now. At least for the remainder of the Plasma 6.x life … ⌘ Read more

4 miles: 4.00 miles, 00:10:00 average pace, 00:40:01 duration

Pinellas County Running - 4 miles: 4.04 miles, 00:08:45 average pace, 00:35:20 duration

Pinellas County - 4 miles: 4.05 miles, 00:08:21 average pace, 00:33:46 duration

the morning is so much better for running. just the getting up part sucks. legs tired from such a quick turnaround but felt fine.

#running
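For the curious, average pace is just duration divided by distance. A minimal Python sketch, assuming the tracker rounds to the nearest second (its actual formula isn’t stated); the helper names are made up:

```python
def to_seconds(hms: str) -> int:
    """Parse "HH:MM:SS" into a number of seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


def format_hms(seconds: float) -> str:
    """Format seconds as "HH:MM:SS", rounded to the nearest second."""
    total = round(seconds)
    h, rem = divmod(total, 3600)
    m, s = divmod(rem, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


def average_pace(duration: str, miles: float) -> str:
    """Average pace per mile = total duration / distance."""
    return format_hms(to_seconds(duration) / miles)


print(average_pace("00:35:20", 4.04))  # 00:08:45
```

Recomputing from the rounded distance can be a second off from what the tracker shows (00:33:46 over 4.05 miles gives 00:08:20 here, not 00:08:21), presumably because the app works from the unrounded distance.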

Zen and the art of microcode hacking

Now that we have examined the vulnerability that enables arbitrary microcode patches to be installed on all (un-patched) Zen 1 through Zen 4 CPUs, let’s discuss how you can use and expand our tools to author your own patches. We have been working on developing a collection of tools combined into a single project we’re calling zentool. The long-term goal is to provide a suite of capabilities similar to binutils, but targeting AMD microcode instead of CPU mach … ⌘ Read more

Pinellas County - 4 miles: 4.06 miles, 00:08:41 average pace, 00:35:15 duration

4 mile run: 4.02 miles, 00:09:29 average pace, 00:38:08 duration

A love letter to Void Linux

I installed Void on my current laptop on the 10th of December 2021, and there has never been any reinstall. The distro is absurdly stable. It’s a rolling release, and yet, the worst update I had in those years was one time, GTK 4 apps took a little longer to open on GNOME. Which was reverted after a few hours. Not only that, I sometimes spent months without any update, and yet, whenever I did update, absolutely nothing went wrong. Granted, I pretty much only did full upgrades … ⌘ Read more

zlib-rs is faster than C

I’m sure we can all have a calm, rational discussion about this, so here it goes: zlib-rs, the Rust re-implementation of the zlib library, is now faster than its C counterparts in both decompression and compression. We’ve released version 0.4.2 of zlib-rs, featuring a number of substantial performance improvements. We are now (to our knowledge) the fastest api-compatible zlib implementation for decompression, and beat the competition in the most important compression cases too. ↫ F … ⌘ Read more

Pinellas County - 4 mile run: 4.06 miles, 00:08:50 average pace, 00:35:50 duration

late in the evening (fucking work). definitely had the urge to drop a deuce for the majority of it. the pace was comfortable.

#running

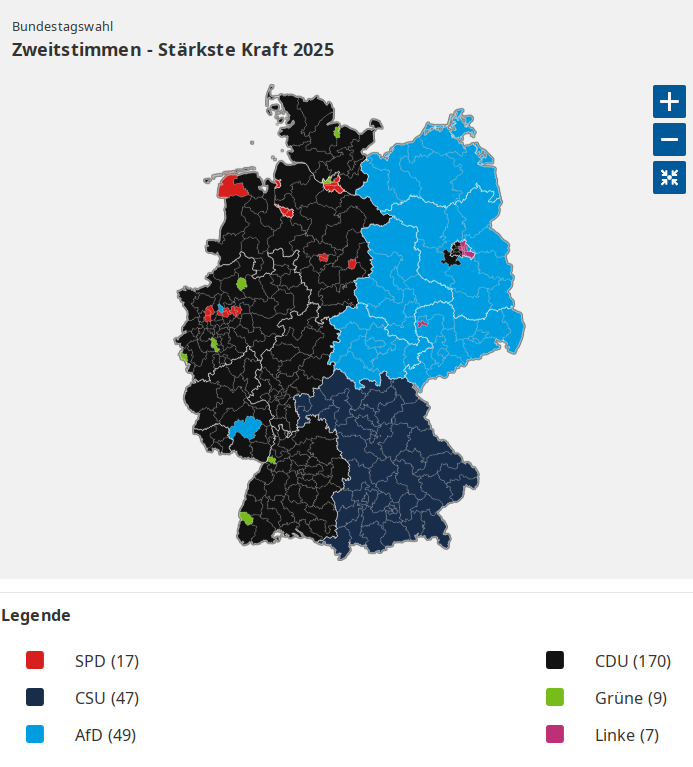

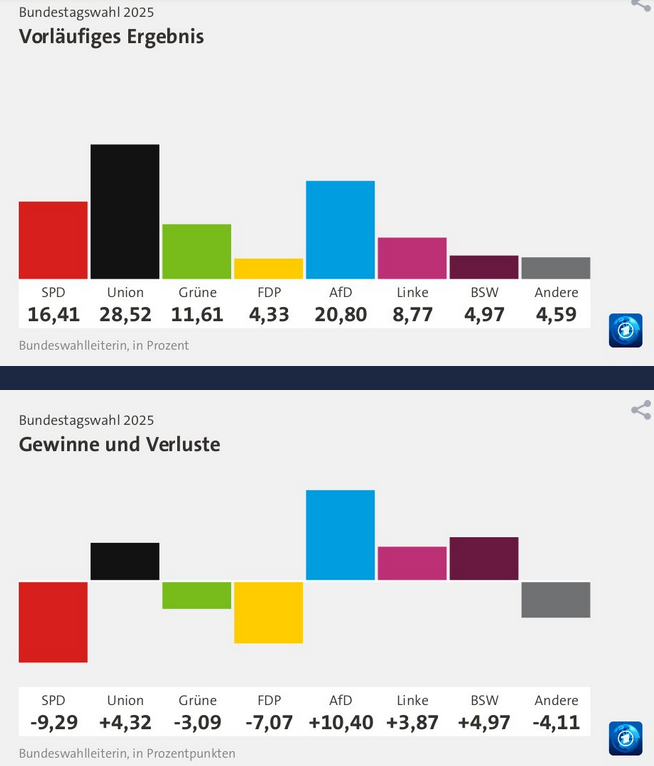

@bender@twtxt.net @prologic@twtxt.net The outcome was to be expected but it’s still pretty catastrophic. Here’s an overview:

East Germany is dominated by AfD. Bavaria is dominated by CSU (it’s always been that way, but this is still a conservative/right party). Black is CDU, the other conservative/right party.

The guy who’s probably going to be chancellor recently insulted the millions of people who demonstrated for peace and against the right: “Idiots”, “they’re nuts”, stuff like that. This was before the election. He has already earned the nickname “Mini Trump”.

Both the right and the left got more votes this time, but the left only gained 3.87 percentage points while the right (CDU/CSU + AfD) gained 14.72:

The Green party lost, SPD (“mid-left”) lost massively (worst result in their history). FDP also lost. These three were the previous government.

This isn’t looking good at all, especially when you think about what’s going to happen in the next 4 years. What will CDU (the winner) do? Will they be able to “turn the ship around”? Highly unlikely. They are responsible for the current situation (in large parts). They will continue to do business as usual. They will do anything but help poor/ordinary people. This means that AfD will only get stronger over the next 4 years.

Our only hope would be to ban AfD altogether. So far, nobody but non-profit organizations is willing to do that (for unknown reasons).

I don’t even know if banning the AfD would help (but it’s probably our best/only option). AfD politicians are nothing but spiteful, hateful, angry, similar to Trump/MAGA. If you’ve seen these people talk and still vote for them, then you must be absolutely filled with rage and hatred. Very concerning.

Correct me if I’m wrong, @lyse@lyse.isobeef.org, @arne@uplegger.eu, @johanbove@johanbove.info.

Running - 4 miles: 4.00 miles, 00:09:31 average pace, 00:38:05 duration

so boring

#running #treadmill

Running - 4 miles: 4.00 miles, 00:09:32 average pace, 00:38:06 duration

this week is going to be hell. with the travel and all the fires at work i can already tell.

#running #treadmill

@eapl.me@eapl.me I can do that as soon as I get back home. Also, just in case you’ve missed it, Choice 1 is actually 4 different variations.

4, but I like the idea of @eapl_en@eapl.me

reviewing logs this morning and found i have been spammed hard by bots not respecting the robots.txt file. only noticed it because the OpenAI bot was hitting me with a lot of nonsensical requests. here is the list from last month:

- (810) bingbot

- (641) Googlebot

- (624) http://www.google.com/bot.html

- (545) DotBot

- (290) GPTBot

- (106) SemrushBot

- (84) AhrefsBot

- (62) MJ12bot

- (60) BLEXBot

- (55) wpbot

- (37) Amazonbot

- (28) YandexBot

- (22) ClaudeBot

- (19) AwarioBot

- (14) https://domainsbot.com/pandalytics

- (9) https://serpstatbot.com

- (6) t3versionsBot

- (6) archive.org_bot

- (6) Applebot

- (5) http://search.msn.com/msnbot.htm

- (4) http://www.googlebot.com/bot.html

- (4) Googlebot-Mobile

- (4) DuckDuckGo-Favicons-Bot

- (3) https://turnitin.com/robot/crawlerinfo.html

- (3) YandexNews

- (3) ImagesiftBot

- (2) Qwantify-prod

- (1) http://www.google.com/adsbot.html

- (1) http://gais.cs.ccu.edu.tw/robot.php

- (1) YaK

- (1) WBSearchBot

- (1) DataForSeoBot

i have placed some middleware to reject these for now, but it is not a foolproof solution.
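The post doesn’t say what the middleware looks like. As a hypothetical sketch, here is a Python WSGI middleware that answers 403 when the User-Agent contains one of a few names from the list above. The token list is illustrative, and since any client can send any User-Agent, this kind of filter is inherently not foolproof.

```python
from typing import Callable, Iterable

# Illustrative subset of names from the log above; matching is case-insensitive.
BLOCKED_AGENTS = (
    "gptbot", "claudebot", "amazonbot", "semrushbot", "ahrefsbot",
    "dotbot", "mj12bot", "blexbot", "dataforseobot", "imagesiftbot",
)


def is_blocked(user_agent: str, blocked: Iterable[str] = BLOCKED_AGENTS) -> bool:
    """True if any blocked token appears in the User-Agent string."""
    ua = user_agent.lower()
    return any(token in ua for token in blocked)


class BlockBotsMiddleware:
    """WSGI middleware that answers 403 Forbidden for blocked user agents."""

    def __init__(self, app: Callable) -> None:
        self.app = app

    def __call__(self, environ: dict, start_response: Callable):
        if is_blocked(environ.get("HTTP_USER_AGENT", "")):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden\n"]
        # Everyone else is passed through to the wrapped application.
        return self.app(environ, start_response)
```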

the leftists are telling me 2+2=4. the right wingers are saying its 10. so as a reasonable centrist its clear to me that the real answer is 7

Let’s Encrypt ends support for expiration notification emails

Since its inception, Let’s Encrypt has been sending expiration notification emails to subscribers that have provided an email address to us. We will be ending this service on June 4, 2025. ↫ Josh Aas on the Let’s Encrypt website They’re ending the expiration notification service because it’s costly, adds a ton of complexity to their systems, and constitutes a privacy risk because of all the email addresses the … ⌘ Read more
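If you relied on those emails, one do-it-yourself alternative is to check the expiry date of the certificate a server actually serves. A minimal Python sketch using only the standard `ssl` module; the function names are made up for illustration, and this is not something Let’s Encrypt itself prescribes:

```python
import socket
import ssl
import time
from typing import Optional


def days_until(not_after: str, now: Optional[float] = None) -> float:
    """Days left until a certificate's notAfter date (format as from getpeercert())."""
    expires = ssl.cert_time_to_seconds(not_after)  # interpreted as UTC
    current = time.time() if now is None else now
    return (expires - current) / 86400


def cert_days_left(host: str, port: int = 443, timeout: float = 10.0) -> float:
    """Connect with TLS, read the verified peer certificate, return days until expiry."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            cert = tls.getpeercert()
    return days_until(cert["notAfter"])
```

For example, `cert_days_left("example.org")` returns the remaining days as a float, which a cron job could compare against a threshold.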

Doomsday Clock hits 89 seconds + 4 more stories

The Doomsday Clock moves to 89 seconds; Germany’s Bundestag passes new immigration plan; Scientists succeed in DNA storage using 5D crystal; AI report highlights emerging dangers; NASA discovers life’s building blocks in asteroid samples. ⌘ Read more

PebbleOS becomes open source, new Pebble device announced

Eric Migicovsky, founder of Pebble, the original smartwatch maker, made a major announcement today together with Google. Pebble was originally bought by Fitbit and in turn Fitbit was then bought by Google, but Migicovsky always wanted to go back to his original idea and create a brand new smartwatch. PebbleOS took dozens of engineers working over 4 years to build, alongside our fantastic product and QA teams. Repro … ⌘ Read more

Pinellas County - Cycling: 4.79 miles, 00:05:43 average pace, 00:27:20 duration

Pinellas County - 5 miles: 4.99 miles, 00:08:49 average pace, 00:44:02 duration

it was chill to start out with. then saw our friends walking their dogs and said hey. and then, saw some friends driving by. and then saying hello to a bunch of people in the park. and then realized there was a 5km race in the park today. yeah, i didn’t keep it chill.

#running

@kat@yarn.girlonthemoon.xyz I approve! That’s how I learned HTML (version 4 at the time and XHTML shortly after) and how to make websites, too. Some of them are still made like this to this day: hand-written HTML, hardly any <div> and class nonsense. I can’t remember which editor I started out with, but I quickly upgraded to Webweaver (later renamed Webcraft). Yeah, those were the times when there was just a single computer for the whole family.

Free hosting on Arcor, Freenet and others whose names I no longer remember. Like this author, I uploaded everything via FTP. Oh dear, when was the last time I used that? And I had registered plenty of free .de.vu domains.

Being on Windows at the time, everything was ISO-8859-1 for me. No UTF-8, I don’t think I’ve heard about it back then.

Later, I wrote my own CMSes in PHP. Man, were they bad in retrospect. :-D Of course, MySQL databases were used as backends. I still remember exactly the moment I first read about SQL injections. I tried it on my own CMS login and was shocked when I could just break in. The very next thing I did was to lock down everything with an .htaccess until I actually fixed my broken PHP code. Hahaha, good memories.

I swear by Atom and RSS feeds. Many of my sites offer them. I consume feeds daily; they’re just great.

#genuary #genuary2025 #genuary17 Maybe related to today’s prompt: What happens if pi=4? https://youtu.be/tGfUaZ8hTzg

So Go is at a funny version, huh? v1.23.4, will there ever be a v1.23.45678? 🫠🤡

Hmm, I just noticed that the feed template seems to be broken on your yarnd instance, @kat@yarn.girlonthemoon.xyz. Looking at your raw feed file (and your mates as well), line 6 reads:

# This is hosted by a Yarn.social pod yarn running yarnd ERSION@OMMIT go1.23.4

^^^^^^^^^^^^

Looks like the first letters of the version and commit got chopped off somehow. I’ve no idea what happened here; maybe @prologic@twtxt.net knows something. :-? I’m not familiar with the templating; I just recall @xuu@txt.sour.is reporting in IRC the other day that he’s also having great fun with his custom preamble from time to time.

That “broken” comment doesn’t hurt anything; it’s still a proper comment and is therefore ignored by clients. It’s just odd, that’s all.
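To illustrate why such a line is harmless: a client that treats every `#` line as a comment, and only reads `# key = value` comments as metadata, simply skips the preamble. A minimal Python sketch under those assumptions (`parse_feed` is a made-up name, and real clients may be stricter or looser about what counts as metadata):

```python
from datetime import datetime
from typing import Dict, List, Tuple


def parse_feed(feed: str) -> Tuple[Dict[str, List[str]], List[Tuple[datetime, str]]]:
    """Split a twtxt feed into '# key = value' metadata and (timestamp, text) twts."""
    metadata: Dict[str, List[str]] = {}
    twts: List[Tuple[datetime, str]] = []
    for raw in feed.split("\n"):
        line = raw.rstrip("\r")
        if line.startswith("#"):
            key, sep, value = line[1:].partition("=")
            key = key.strip()
            # Only single-word keys count as metadata; anything else is a plain comment.
            if sep and key and " " not in key:
                metadata.setdefault(key, []).append(value.strip())
            continue
        created, sep, text = line.partition("\t")
        if not sep:
            continue  # blank or malformed line
        try:
            # fromisoformat() only accepts a trailing "Z" from Python 3.11 on
            when = datetime.fromisoformat(created.replace("Z", "+00:00"))
        except ValueError:
            continue  # unparsable timestamp
        twts.append((when, text))
    return metadata, twts
```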

It turns out my ISP supports IPv6. After 4-5 months with only IPv4, I thought to ask customer support, and they told me how to turn it on. (I’m pretty happy with ebox so far: low-priced fibre with no issues. All my traffic goes through Montreal, though, 500 km away from me in Toronto, which adds a few ms of network latency.)

How in da fuq do you actually make these fucking useless AI bots go away?

proxy-1:~# jq '. | select(.request.remote_ip=="4.227.36.76")' /var/log/caddy/access/mills.io.log | jq -s '. | last' | caddy-log-formatter -

4.227.36.76 - [2025-01-05 04:05:43.971 +0000] "GET /external?aff-QNAXWV=&f=mediaonly&f=noreplies&nick=g1n&uri=https%3A%2F%2Fmy-hero-ultra-impact-codes.linegames.org HTTP/2.0" 0 0

proxy-1:~# date

Sun Jan 5 04:05:49 UTC 2025

😱
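For those who prefer Python over jq, a rough equivalent of the pipeline above, plus a per-IP request count to find the noisiest clients. It assumes Caddy’s JSON access log with one entry per line and the `request.remote_ip` field used in the jq filter; the function names are hypothetical:

```python
import json
from collections import Counter
from typing import Iterable, Iterator, List, Optional, Tuple


def read_entries(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per JSON log line, skipping blank or malformed lines."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(entry, dict):
            yield entry


def remote_ip(entry: dict) -> Optional[str]:
    return entry.get("request", {}).get("remote_ip")


def last_request_from(entries: Iterable[dict], ip: str) -> Optional[dict]:
    """Same idea as: jq 'select(.request.remote_ip == IP)' | jq -s 'last'."""
    last = None
    for entry in entries:
        if remote_ip(entry) == ip:
            last = entry
    return last


def top_ips(entries: Iterable[dict], n: int = 10) -> List[Tuple[Optional[str], int]]:
    """Count requests per client IP and return the n busiest."""
    return Counter(remote_ip(entry) for entry in entries).most_common(n)
```

For example: `with open("/var/log/caddy/access/mills.io.log") as f: print(top_ips(read_entries(f)))`. Note that `read_entries` is a generator, so each call can only be consumed once.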

For some reason, I was using calc all this time. I mean, it’s good, but I need to do base conversions (dec, hex, bin) very often and you have to type base(2) or base(16) in calc to do that. That’s exhausting after a while.

So I now replaced calc with a little Python script which always prints the results in dec/hex/bin, grouped in bytes (if the result is an integer). That’s what I need. It’s basically just a loop around Python’s exec().

$ mcalc

> 123

123 0x[7b] 0b[01111011]

> 1234

1234 0x[04 d2] 0b[00000100 11010010]

> 0x7C00 + 0x3F + 512

32319 0x[7e 3f] 0b[01111110 00111111]

> a = 10; b = 0x2b; c = 0b1100101

10 0x[0a] 0b[00001010]

> a + b + 3 * c

356 0x[01 64] 0b[00000001 01100100]

> 2**32 - 1

4294967295 0x[ff ff ff ff] 0b[11111111 11111111 11111111 11111111]

> 4 * atan(1)

3.141592653589793

> cos(pi)

-1.0
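The script itself isn’t posted, so here is a guess at how such a tool could look in Python: `eval()` for expressions, falling back to `exec()` for statements, with non-negative integer results printed as dec/hex/bin grouped in bytes. Names like `fmt_int` and `repl` are made up. Unlike the session above, this sketch prints nothing for assignments, and `eval`/`exec` will run any Python you type, which is fine for a personal calculator but nothing more.

```python
import math


def fmt_int(value: int) -> str:
    """Format a non-negative integer as dec, hex and bin, grouped in bytes."""
    nbytes = max(1, (value.bit_length() + 7) // 8)
    raw = value.to_bytes(nbytes, "big")
    hex_part = " ".join(f"{b:02x}" for b in raw)
    bin_part = " ".join(f"{b:08b}" for b in raw)
    return f"{value} 0x[{hex_part}] 0b[{bin_part}]"


def show(value) -> str:
    """Byte-grouped output for non-negative ints, plain repr() for everything else."""
    if isinstance(value, int) and not isinstance(value, bool) and value >= 0:
        return fmt_int(value)
    return repr(value)


def repl() -> None:
    # Make math functions (atan, cos, pi, ...) available without the "math." prefix.
    env = {name: getattr(math, name) for name in dir(math) if not name.startswith("_")}
    while True:
        try:
            line = input("> ")
        except EOFError:
            break
        if not line.strip():
            continue
        try:
            try:
                code = compile(line, "<mcalc>", "eval")
            except SyntaxError:
                # Not an expression: run it as a statement (e.g. assignments).
                exec(compile(line, "<mcalc>", "exec"), env)
            else:
                print(show(eval(code, env)))
        except Exception as exc:
            print(f"error: {exc}")
```

Call `repl()` to start the prompt; Ctrl-D exits.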

Easy: 4.06 miles, 00:08:51 average pace, 00:35:59 duration

51F this morning with a bit of a breeze which was great. felt easy but i think the enjoyment of being outside brought my pace and heart rate up a bit. actually slept well last night and woke up refreshed… been about a month or more i think.

#running

@bender@twtxt.net Dude! You should see the updated version! 😂 I have just discovered the scratch #container image and decided I wanted to play with it… I’m probably going to end up rebuilding a LOT of images.

~/htwtxt » podman image list htwtxt

REPOSITORY TAG IMAGE ID CREATED SIZE

localhost/htwtxt 1.0.7-scratch 2d5c6fb7862f About a minute ago 12 MB

localhost/htwtxt 1.0.5-alpine 13610a37e347 4 weeks ago 20.1 MB

localhost/htwtxt 1.0.7-alpine 2a5c560ee6b7 4 weeks ago 20.1 MB

docker.io/buckket/htwtxt latest c0e33b2913c6 8 years ago 778 MB

Easy run: 4.07 miles, 00:09:19 average pace, 00:37:56 duration

kept it easy. no watching pace or anything and played it by feel.

#running

The Game of 20 Squares: the possible rules of the 4,600-year-old game, with a design for a modern game board.

After thinking about it and researching it, yep, I agree that WebFinger is a good idea.

For example, see: https://bsky.social/about/blog/4-28-2023-domain-handle-tutorial

I wasn’t considering some scenarios, such as multiple accounts on a single domain (see ‘How can I set and manage multiple subdomain handles?’ in the link above).
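For reference, a WebFinger lookup (RFC 7033) is a plain HTTPS GET on `/.well-known/webfinger` with an `acct:` URI as the `resource` parameter, and the response is a JSON Resource Descriptor (JRD) with a list of links. Because the user part travels in the query, one domain can serve many accounts. A minimal Python sketch; which `rel`/`type` a twtxt client would look for is not settled, so any value you pass to `find_link` (e.g. `"self"` with `text/plain`) is purely hypothetical:

```python
import json
from typing import Optional
from urllib.parse import urlencode
from urllib.request import urlopen


def webfinger_url(handle: str) -> str:
    """Build the RFC 7033 lookup URL for a handle like '@nick@example.org'."""
    user, sep, host = handle.lstrip("@").partition("@")
    if not (user and sep and host):
        raise ValueError(f"not a user@host handle: {handle!r}")
    query = urlencode({"resource": f"acct:{user}@{host}"})
    return f"https://{host}/.well-known/webfinger?{query}"


def find_link(jrd: dict, rel: str, media_type: Optional[str] = None) -> Optional[str]:
    """Return the href of the first JRD link with the given rel (and type, if given)."""
    for link in jrd.get("links", []):
        if link.get("rel") == rel and (media_type is None or link.get("type") == media_type):
            return link.get("href")
    return None


def lookup(handle: str) -> dict:
    """Fetch and decode the JRD document (requires network access)."""
    with urlopen(webfinger_url(handle), timeout=10) as resp:
        return json.load(resp)
```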

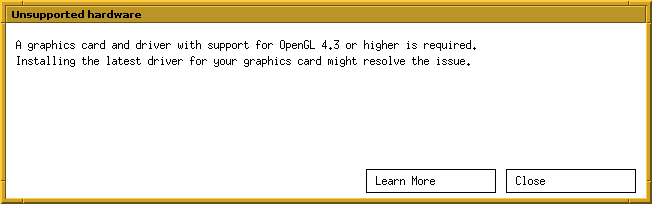

@bender@twtxt.net Hmmm… it makes sense. Now I’m curious: how old is his hardware, though? Is it SolveSpace kind of old? Or reaaaally Blender 2.4-series kind of old!? 🤔

Goodbye Blender, I guess? 🤔

A bit annoying, but not much of a problem. The only thing I did with Blender was make some very simple 3D-printable objects.

I’ll have a look at the alternatives out there. Worst case is I go back to Art of Illusion, which I used heavily ~15 years ago.

Termux, the same thing @doesnm uses, and it worked 👍

@doesnm@doesnm.p.psf.lt No, it’s all good… I’ve just rebuilt it from master and it doesn’t look like anything is broken:

~/GitRepos> git clone https://github.com/plomlompom/htwtxt.git

Cloning into 'htwtxt'...

remote: Enumerating objects: 411, done.

remote: Total 411 (delta 0), reused 0 (delta 0), pack-reused 411 (from 1)

Receiving objects: 100% (411/411), 87.89 KiB | 430.00 KiB/s, done.

Resolving deltas: 100% (238/238), done.

~/GitRepos> cd htwtxt

master ~/GitRepos/htwtxt> go mod init htwtxt

go: creating new go.mod: module htwtxt

go: to add module requirements and sums:

go mod tidy

master ~/GitRepos/htwtxt> go mod tidy

go: finding module for package github.com/gorilla/mux

go: finding module for package golang.org/x/crypto/bcrypt

go: finding module for package gopkg.in/gomail.v2

go: finding module for package golang.org/x/crypto/ssh/terminal

go: found github.com/gorilla/mux in github.com/gorilla/mux v1.8.1

go: found golang.org/x/crypto/bcrypt in golang.org/x/crypto v0.29.0

go: found golang.org/x/crypto/ssh/terminal in golang.org/x/crypto v0.29.0

go: found gopkg.in/gomail.v2 in gopkg.in/gomail.v2 v2.0.0-20160411212932-81ebce5c23df

go: finding module for package gopkg.in/alexcesaro/quotedprintable.v3

go: found gopkg.in/alexcesaro/quotedprintable.v3 in gopkg.in/alexcesaro/quotedprintable.v3 v3.0.0-20150716171945-2caba252f4dc

master ~/GitRepos/htwtxt> go build

master ~/GitRepos/htwtxt> ll

.rw-r--r-- aelaraji aelaraji 330 B Fri Nov 22 20:25:52 2024 go.mod

.rw-r--r-- aelaraji aelaraji 1.1 KB Fri Nov 22 20:25:52 2024 go.sum

.rw-r--r-- aelaraji aelaraji 8.9 KB Fri Nov 22 20:25:06 2024 handlers.go

.rwxr-xr-x aelaraji aelaraji 12 MB Fri Nov 22 20:26:18 2024 htwtxt <-------- There's the binary ;)

.rw-r--r-- aelaraji aelaraji 4.2 KB Fri Nov 22 20:25:06 2024 io.go

.rw-r--r-- aelaraji aelaraji 34 KB Fri Nov 22 20:25:06 2024 LICENSE

.rw-r--r-- aelaraji aelaraji 8.5 KB Fri Nov 22 20:25:06 2024 main.go

.rw-r--r-- aelaraji aelaraji 5.5 KB Fri Nov 22 20:25:06 2024 README.md

drwxr-xr-x aelaraji aelaraji 4.0 KB Fri Nov 22 20:25:06 2024 templates