@doesnm@doesnm.p.psf.lt Got a sample access log? Which tool are you using?

How do I parse a Caddy access log with the useragent tool? It doesn’t seem to detect anything in the JSON.
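One possible workaround, sketched in Python: pull the raw User-Agent strings out of the JSON lines first and feed those to whichever parser you use. This assumes Caddy's JSON access-log layout, where each line is one JSON object and request headers sit under `request.headers` as lists of strings; the function name `user_agents` is made up for this example.

```python
import json


def user_agents(lines):
    """Yield every User-Agent string found in JSON access-log lines.

    Assumed layout (one JSON object per line):
    {"request": {"headers": {"User-Agent": ["..."]}}}
    Lines that are blank, not JSON, or shaped differently are skipped.
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # not a JSON log line
        if not isinstance(entry, dict):
            continue
        request = entry.get("request")
        if not isinstance(request, dict):
            continue
        headers = request.get("headers")
        if not isinstance(headers, dict):
            continue
        # Header values are assumed to be lists of strings.
        for value in headers.get("User-Agent", []):
            yield value
```

To use it on a real file, open the log and iterate, for example `for ua in user_agents(open("access.log")): print(ua)`, where `access.log` stands in for your own log path.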

scp(1) options.

@mckinley@twtxt.net I mean, yes! I’ve heard a lot of good things about how efficient a tool it is for backups and all, and I’m willing to spend the time and learn. It’s just that seeing those 400+ possible options was a buzz-kill. 🫣 Luckily @lyse and @movq shared their most used options!

@movq@www.uninformativ.de Yes, the tools are surprisingly fast. Still, magrep takes about 20 seconds to search through my archive of 140K emails, so to speed things up I would probably combine it with an indexer like mu, mairix or notmuch.

#fzf is the new emacs: a tool with a simple purpose that has evolved to include an #email client. https://sr.ht/~rakoo/omail/

I’m being a little silly, of course. fzf doesn’t actually check your email, but it appears to be basically the whole user interface for that mail program, with #mblaze wrangling the emails.

I’ve been thinking about how I handle my email, and am tempted to make something similar. (When I originally saw this linked the author was presenting it as an example tweaked to their own needs, encouraging people to make their own.)

This approach could surely also be combined with #jenny, taking the place of (neo)mutt. For example mblaze’s mthread tool presents a threaded discussion with indentation.

@movq@www.uninformativ.de @falsifian@www.falsifian.org @prologic@twtxt.net Maybe I don’t know what I’m talking about, and you’ve probably already read this: Everything you need to know about the “Right to be forgotten”, coming straight from the EU’s GDPR website itself. It outlines the specific circumstances under which the right to be forgotten applies, as well as the reasons that trump one’s right to erasure …etc.

I’m no lawyer, but my uneducated guess would be that:

A) twts are already publicly available/public knowledge and such… just don’t process children’s personal data and MAYBE you’re good? Since there’s this:

… an organization’s right to process someone’s data might override their right to be forgotten. Here are the reasons cited in the GDPR that trump the right to erasure:

- The data is being used to exercise the right of freedom of expression and information.

- The data is being used to perform a task that is being carried out in the public interest or when exercising an organization’s official authority.

- The data represents important information that serves the public interest, scientific research, historical research, or statistical purposes and where erasure of the data would likely to impair or halt progress towards the achievement that was the goal of the processing.

B) What I love about the TWTXT sphere is its Human/Humane element! No deceptive algorithms, no Corpo B.S …etc. Just Humans. So maybe … if we thought about it this way, it wouldn’t hurt to be even nicer to others and offer strangers an even safer space.

I could already imagine a couple of extreme cases where, somewhere in this peaceful world, one’s exercise of freedom of speech could get them in Real trouble (if not danger) if found out, and it wouldn’t necessarily have to involve the Law or legal authorities. So, if someone asks, maybe fearing for… let’s just say ‘their well-being’, would it hurt if a pod just purged their content if it’s serving it publicly (maybe relay the info to other pods) and called it a day? It doesn’t have to be about some law/convention somewhere … 🤷 I know! Too extreme, but I’ve seen news of people who’d gone to jail or got their lives ruined for as little as a silly joke. And it doesn’t even have to be about any of this.

P.S: Maybe make X tool check out robots.txt? Or maybe make long-term archives Opt-in? Opt-out?

P.P.S: Already Way too many MAYBE’s in a single twt! So I’ll just shut up. 😅

Speaking of AI tech (sorry!): just came across this really cool tool built by some engineers at Google™ (currently completely free to use without any signup) called NotebookLM 👌 Looks really good for summarizing and talking to documents 📃

I’m not advocating in either direction, btw. I haven’t made up my mind yet. 😅 Just braindumping here.

The (replyto:…) proposal is definitely more in the spirit of twtxt, I’d say. It’s much simpler, anyone can use it even with the simplest tools, no need for any client code. That is certainly a great property, if you ask me, and it’s things like that that brought me to twtxt in the first place.

I’d also say that in our tiny little community, message integrity simply doesn’t matter. Signed feeds don’t matter. I signed my feed for a while using GPG, someone else did the same, but in the end, nobody cares. The community is so tiny, there’s enough “implicit trust” or whatever you want to call it.

If twtxt/Yarn was to grow bigger, then this would become a concern again. But even Mastodon allows editing, so how much of a problem can it really be? 😅

I do have to “admit”, though, that hashes feel better. It feels good to know that we can clearly identify a certain twt. It feels more correct and stable.

Hm.

I suspect that the (replyto:…) proposal would work just as well in practice.

@prologic@twtxt.net I saw those, yes. I tried using yarnc, and it would work for a simple twtxt. Now, for a more convoluted one it truly becomes a nightmare using that tool for the job. I know there are talks about changing this hash, so this might be a moot point right now, but it would be nice to have a tool that:

- Would calculate the hash of a twtxt in a file.

- Would calculate all hashes on a twtxt.txt (local and remote).

Again, something lovely to have after any looming changes occur.

Could someone knowledgeable reply with the steps a grandpa would take to calculate the hash of a twtxt from the CLI, using out-of-the-box tools? I swear I read about it somewhere, but can’t find it.
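For reference, here is a minimal Python sketch of the hash as I understand the Twt Hash extension: join feed URL, timestamp and text with newlines, take a 256-bit BLAKE2b digest, Base32-encode it, lowercase it, drop the padding and keep the last seven characters. It is Python rather than a shell pipeline, the function name `twt_hash` is made up here, and it assumes the timestamp has already been normalized the way the spec requires, so treat it as a starting point rather than a reference implementation.

```python
import base64
import hashlib


def twt_hash(feed_url, timestamp, text):
    """Return the 7-character hash of a single twt.

    Assumption: `timestamp` is already in the normalized form the
    hash extension expects (this sketch does no reformatting).
    """
    payload = f"{feed_url}\n{timestamp}\n{text}".encode("utf-8")
    # 32 bytes = 256-bit BLAKE2b digest
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    # Base32, lowercase, without the trailing '=' padding
    encoded = base64.b32encode(digest).decode("ascii").lower().rstrip("=")
    return encoded[-7:]
```

Calling `twt_hash("https://example.com/twtxt.txt", "2020-07-18T12:39:52Z", "Hello World!")` returns a seven-character string. To hash a whole feed, split each line of the twtxt.txt at the first tab into timestamp and text and call the function once per line.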

@bender@twtxt.net that’s not your change, silly robot, it is mine! LOL. I am finding @prologic@twtxt.net’s tool handy to refer to previous posts (as reference, for example).

@aelaraji@aelaraji.com Btw, I’m also open to ideas for this tool and welcome any contributions 👌

@prologic@twtxt.net well…

how would that work exactly?

To my limited knowledge, Keyoxide is an open source project offering different tools for verifying one’s online persona(s). That’s done by either A) creating an Ariadne Profile using the web interface or a CLI, or B) just using your GPG key. Either way, you add identity claims to your different profiles, links and whatnot, and finally advertise your profile … Then there is a second set of mobile/web clients and a CLI your correspondents can use to check your identity claims. I think of them like front-ends for GPG keyservers (which Keyoxide leverages for verification when you opt for the GPG key method), where you verify profiles using links, key IDs and fingerprints…

Who maintains cox site? Is it centralized or decentralized can be relied upon?

- Maintainers? Definitely not me, but here’s their Git stuff and OpenCollective page …

- Both ASP and Keyoxide Webtools can be self-hosted. I don’t see a central authority here… Plus, as mentioned on their FAQ page, the whole process can be done manually, so you don’t have to rely on anyone or anything if you don’t want to. The whole thing is just another tool for convenience (with a bit of eye candy).

Does that mean then that every user is required to have a cox side profile?

Nope. But it looks like a nice option to prove that I’m the same person, to whom it may concern, if I ever change my Twtxt URL, host/join a yarn pod, or reach out on other platforms to someone I’ve met in here. Otherwise I’m just happy exchanging GPG keys or confirming the change IRL at a coffee shop or something. 😁

@mckinley@twtxt.net To answer some of your questions:

Are SSH signatures standardized and are there robust software libraries that can handle them? We’ll need a library in at least Python and Go to provide verified feed support with the currently used clients.

We already have this. Ed25519 libraries exist for all major languages. Aside from using ssh-keygen -Y sign and ssh-keygen -Y verify, you can also use the salty CLI itself (https://git.mills.io/prologic/salty), and I’m sure there are other command-line tools that could be used too.

If we all implemented this, every twt hash would suddenly change and every conversation thread we’ve ever had would at least lose its opening post.

Yes. This would happen, so we’d have to make a decision around this, either a) a cut-off point or b) some way to progressively transition.

This tool, which uses age, is pretty neat: https://github.com/ndavd/agevault. So simple, yet seemingly powerful!

@prologic@twtxt.net it’s a Clownflare option to prevent images on your website from being embedded on other websites. It helps with my low bandwidth resources. And I believe you can set up similar rules with Nginx, I’m just too lazy to do it manually RN.

Kinda cool tool for bringing together all your timeline based data across socials.

For preparing my lessons, the Grise Bouille generator is magic: https://framalab.org/gknd-creator/ . I just discovered that I can host the source code myself: https://si3t.ch/tools/comicgen/. All that’s left is to drop in the template images I like so I can create science lessons. And of course, I can’t help but think of <@peha@framapiaf.org> 👼

./tools/dump_cache.sh: line 8: bat: command not found

No Token Provided

I don’t have bat on my VPS and there is no package for installing it. Is cat a reasonable alternative?

There are other potential uses for the tool (comparing syscall latency between OSes, stat latency between file systems), but that’s not what I’m after.

Holy *insert inappropriate word here*! 🤣 I have finally done it!!!

FreeBSD 14.0-RELEASE (GENERIC) #0 releng/14.0-n265380-f9716eee8ab4: Fri Nov 10 05:57:23 UTC 2023

Welcome to FreeBSD!

% pkg update

The package management tool is not yet installed on your system.

Do you want to fetch and install it now? [y/N]:

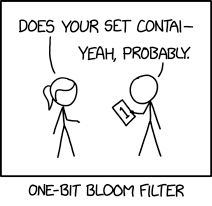

Bloom Filter

Interesting. Thanks! And thank you for replying. :) Indeed, I don’t check for mentions with twtxt. To me, twtxt is for sharing, not for talking: my email is in a comment at the top of my #twtxt.txt for that purpose. Trying to create discussions with twtxt is nonsense: there are much better tools for that (email, xmpp, …) @aelaraji@aelaraji.com @im-in.space@im-in.space

I’m looking for wallpapers matching a color palette. Is there any tool to do so? I found the opposite, picture to palette, but not palette to picture :/

@mckinley@twtxt.net You definitely have got a point!

It is kind of a hassle to keep things in sync and NOT eff up.

It happened to me before but I was lucky enough to have backups elsewhere.

But, now I kind of have a workflow to avoid data loss while benefiting from both tools.

P.S: my bad, I meant Syncthing instead of Rsync in my original reply. 🫠

</> htmx - high power tools for html really liking the idea of htmx 🤔 If I don’t have to learn all this complicated TypeScript/React/NPM garbage, I can just write regular SSA (Server-Side-Apps) and then progressively upgrade to SPA (Single-Page-App) using htmx hmmm 🧐

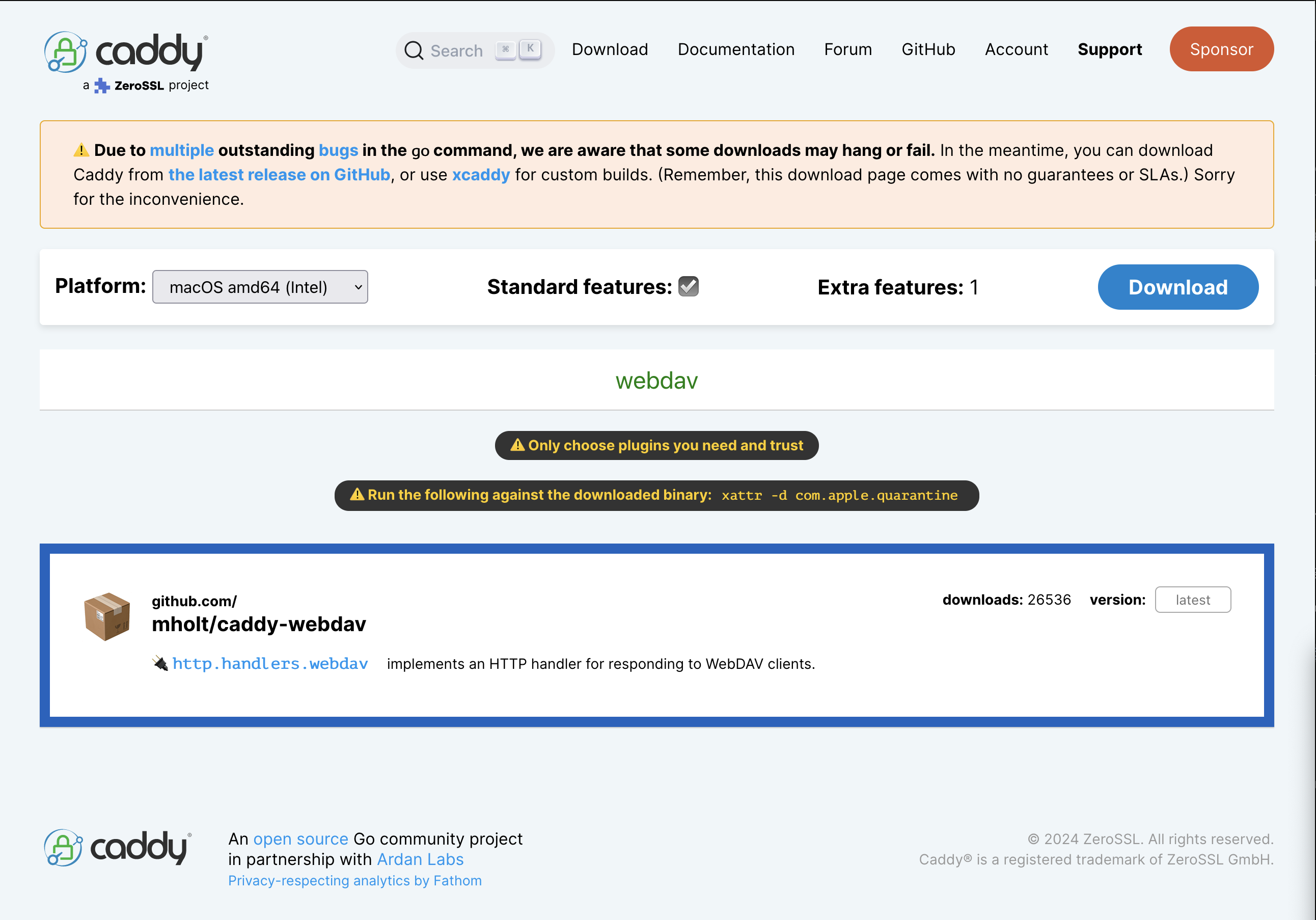

it is an addon in the download tool. Or you can use xcaddy to build it in.

It’s a notebook tool like Evernote. @sorenpeter@darch.dk linked it above: https://joplinapp.org/

@lyse@lyse.isobeef.org It’s a hierarchical key-value format. I designed it for the network peering tools I use. I can grant other users access to different parts of the tree, kinda like directory permissions. A basic example of the format is:

@namespace

# multi

# line

# comment

root :value

# example space comment

@namespace.name space-tag

# attribute comments

attribute attr-tag :value for attribute

# attribute with multiple

# lines of values

foo :bar

:bin

:baz

repeated :value1

repeated :value2

Each @ starts the definition of a namespace, kinda like [name] in ini format. It can have comments that show up before it. Then each attribute is key :value and can have its own # comment lines.

Values can be multi line.. and also repeated..

the namespaces and values can also have little meta data tags added to them.

the service can define webhooks/mqtt topics to be notified when the configs are updated. That way it can deploy the changes out when they are updated.
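To make the description above concrete, here is a rough Python sketch of a reader for this format. It is only a guess at the semantics from the example: `@name` opens a namespace, `#` lines are comments, `key [tags] :value` adds a value, a line starting with `:` continues the previous value on a new line, and a repeated key collects several values. Tags are parsed but ignored, and `parse_config` is a name invented for this sketch, not part of the actual tool.

```python
def parse_config(text):
    """Parse the config text into {namespace: {key: [values]}}.

    Assumed rules (inferred from the example, not from a spec):
    - '@name [tags...]' starts a namespace
    - '#' lines are comments and are skipped
    - 'key [tags...] :value' appends a value to key
    - ':value' continues the previous value on a new line
    """
    spaces = {}
    current = None   # attributes of the namespace being filled
    last_key = None  # key that a ':' continuation line extends
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("@"):
            name, *_tags = line[1:].split()
            current = spaces.setdefault(name, {})
            last_key = None
        elif line.startswith(":"):
            if current is None or last_key is None:
                raise ValueError(f"continuation without attribute: {raw!r}")
            current[last_key][-1] += "\n" + line[1:]
        else:
            head, sep, value = line.partition(":")
            if current is None or not sep:
                raise ValueError(f"unexpected line: {raw!r}")
            key, *_tags = head.split()
            current.setdefault(key, []).append(value)
            last_key = key
    return spaces
```

With the example from this post, `foo` would come back as a single three-line value and `repeated` as a list of two values, under the assumptions listed above.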

#gemini readers, I wrote a tool to download new gemfeeds entries instead of opening a client: gemini://si3t.ch/log/2024-02-28-gemfeeds-downloader.txt

Google Chrome Gains AI Features Including a Writing Helper

Google is adding new AI features to Chrome, including tools to organize browser tabs, customize themes, and assist users with writing online content such as reviews and forum posts.

The writing helper is similar to an AI-powered feature already offered in Google’s experimental search experience, SGE, which helps users draft emails in various tones and lengths. W … ⌘ Read more

when writing a new tool/software, write doc first, explaining how it works. Then, actually writing the code is much easier :)

Forensics Tools https://github.com/mesquidar/ForensicsTools

@eapl.me@eapl.me I have many fond memories of Turbo Pascal and Turbo C(++). They really did have a great help system. And debug tools! It’s rare for language docs to be as approachable. QBasic was great. As were the PHP docs when I first came into web.

Obligatory Twtxt post: I love how I can simply use a terminal window and some very basic tools (echo, scp, ssh) to publish thoughts, as they pop up, onto the Internet in a structured way, that can be found and perhaps even appreciated.

@lyse@lyse.isobeef.org I wish more standardization around distributed issues and PRs within the repo ala git-bug was around for this. I see it has added some bridge tooling now.

Anyone have any ideas how you might identify processes (pids) on a Linux machine that are responsible for most of the disk I/O on that machine and are subsequently causing high I/O wait times for other processes? 🤔

Important bit: The machine has no access to the internet, there are hardly any standard tools on it, etc. So I have to get something to it “air gapped”. I have terminal access to it, so I can do interesting things like base64 encode a static binary to my clipboard, paste it to a file, then base64 decode it and execute it. Those are about the only mechanisms I have.
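One approach that needs nothing beyond /proc: Linux exposes per-process I/O counters in `/proc/<pid>/io`, including `read_bytes` and `write_bytes`. The sketch below ranks processes by those counters. It assumes a Python interpreter happens to exist on the box (which may well not be the case; the same files can be read by hand with cat), that the kernel has I/O accounting enabled, and that you run it as root so other users' processes are readable. The function names are made up for this example.

```python
import os


def parse_proc_io(text):
    """Turn the 'key: value' lines of /proc/<pid>/io into a dict of ints."""
    stats = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            stats[key.strip()] = int(value)
    return stats


def top_io(limit=10, proc_root="/proc"):
    """Return (total_bytes, pid, name) tuples, biggest I/O users first."""
    if not os.path.isdir(proc_root):
        return []
    rows = []
    for pid in filter(str.isdigit, os.listdir(proc_root)):
        try:
            with open(f"{proc_root}/{pid}/io") as f:
                stats = parse_proc_io(f.read())
            with open(f"{proc_root}/{pid}/comm") as f:
                name = f.read().strip()
        except OSError:
            continue  # process exited, or we lack permission
        total = stats.get("read_bytes", 0) + stats.get("write_bytes", 0)
        rows.append((total, int(pid), name))
    return sorted(rows, reverse=True)[:limit]
```

The counters are cumulative since each process started, so to find who is hammering the disk right now, call `top_io()` twice a few seconds apart and compare the totals per pid.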

@prologic@twtxt.net do not use it, but gave it a try early on and was not impressed. it gave a good outline of what I asked but then unreliably dorked up all the crucial parts.

I will say though if it is truly learning at the rate they say then it should be a good tool.

In setting up my own company and its internal tools, services and supporting infrastructure, the only thing I haven’t figured out how to solve “really well” is Email, Calendar and Contacts 😢 All the options that exist “suck”. They suck either in terms of “operational complexity and overheads” or “a poor user experience”.

@prologic@twtxt.net Horseshit hype:

- AI that we have today cannot think–there is no cognitive capacity

- AI that we have today cannot be interviewed–“inter” “viewing” is two minds interacting, but AI of today has no mind, which means this is a puppet show

- AI today is not free–it’s a tool, a machine, hardly different from a hammer. It does what a human directs it to do and has no drives, desires, or autonomy. What you’re seeing here is a fancy Mechanical Turk

This shit is probably paid for by AI companies who desperately want us to think of the AI as far more capable than it actually is, because that juices sales and gives them a way to argue they aren’t responsible for any harms it causes.

I’d love to read the original source code of this:

https://ecsoft2.org/t-tiny-editor

This was our standard editor back in the day, not an “emergency tool”. And it’s only 9kB in size … which feels absurd in 2023. 😅 The entire hex dump fits on one of today’s screens.

Being so small meant it had no config file. Instead, it came with TKEY.EXE, a little tool to binary-patch T.EXE to your liking.

Yep, that’s right, we have to use these tools in a proper way; the terminal isn’t a friendly tool for this kind of stuff on mobile devices, and web interfaces are built to give us a comfortable space.

Btw, I’m waiting for your php based client 😜 no pressure… 🤭

[lang=en] That was the reason for twtxt-php =P

I tried using CLI tools but it was too hacky, I think.

Even more so if we consider Jakob’s Law, where we have prior expectations of a microblogging system.

A Web interface could be quite minimalistic and usable as well. (And mobile-friendly)

snac/the fediverse for a few days and already I've had to mute somebody. I know I come on strongly with my opinions sometimes and some people don't like that, but this person had already started going ad hominem (in my reading of it), and was using what felt to me like sketchy tactics to distract from the point I was trying to make and to shut down conversation. They were doing similar things to other people in the thread so rather than wait for it to get bad for me I just muted them. People get so weirdly defensive so fast when you disagree with something they said online. Not sure I fully understand that.

@prologic@twtxt.net Well, you can mute or block individual users, and you can mute conversations too. I think the tools for controlling your interactions aren’t so bad (they could definitely be improved ofc). And in my case, I was replying to something this person said, so it wasn’t outrageous for his reply to be pushed to me. Mostly, I was sad to see how quickly the conversation went bad. I thought I was offering something relatively uncontroversial, and actually I was just agreeing with and amplifying something another person had already said.

What I see here is that when I was reading your .txt, the timestamp was like 40 minutes later than current time. Say it’s 1pm and that twt is timed on 1.40pm

No idea why; perhaps your server has the wrong timezone, or your twtxt tool is doing some timezone conversion?

Google Says It’ll Scrape Everything You Post Online for AI

Google updated its privacy policy over the weekend, explicitly saying the company reserves the right to scrape just about everything you post online to build its AI tools.

Google can eat shit.

Seems to me you could write a script that:

- Parses a StackOverflow question

- Runs it through an AI text generator

- Posts the output as a post on StackOverflow

and basically pollute the entire information ecosystem there in a matter of a few months? How long before some malicious actor does this? Maybe it’s being done already 🤷

What an asinine, short-sighted decision. An astonishing number of companies are actively reducing headcount because their executives believe they can use this newfangled AI stuff to replace people. But, like the dot com boom and subsequent bust, many of the companies going this direction are going to face serious problems when the hypefest dies down and the reality of what this tech can and can’t do sinks in.

We really, really need to stop trusting important stuff to corporations. They are not tooled to last.

@shreyan@twtxt.net I agree re: AR. Vircadia is neat. I stumbled on it years ago when I randomly started wondering “wonder what’s going on with Second Life and those VR things” and started googling around.

Unfortunately, like so many metaverse efforts, it’s almost devoid of life. Interesting worlds to explore, cool tools to build your own stuff, but almost no people in it. It feels depressing, like an abandoned shopping mall.

I have no interest in doing anything about it, even if I had the time (which I don’t), but this kind of thing happens all day every day to countless people. My silly blog post isn’t worth getting up in arms about, but there are artists and other creators who pour countless hours, heart and soul into their work, only to have it taken in exactly this way. That’s one of the reasons I’m so extremely negative about the spate of “AI” tools that have popped up recently. They are powered by theft.

There is a “right” way to make something like GitHub CoPilot, but Microsoft did not choose that way. They chose one of the most exploitative options available to them. For that reason, I hope they face significant consequences, though I doubt they will in the current climate. I also hope that CoPilot is shut down, though I’m pretty certain it will not be.

Other than access to the data behind it, Microsoft has nothing special that allows it to create something like CoPilot. The technology behind it has been around for at least a decade. There could be a “public” version of this same tool made by a cooperating group of people volunteering, “leasing”, or selling their source code into it. There could likewise be an ethically-created corporate version. Such a thing would give individual developers or organizations the choice to include their code in the tool, possibly for a fee if that’s something they want or require. The creators of the tool would have to acknowledge that they have suppliers–the people who create the code that makes their tool possible–instead of simply stealing what they need and pretending that’s fine.

This era we’re living through, with large companies stomping over all laws and regulations, blatantly stealing other people’s work for their own profit, cannot come to an end soon enough. It is destroying innovation, and we all suffer for that. Having one nifty tool like CoPilot that gives a bit of convenience is nowhere near worth the tremendous loss that Microsoft’s actions in this instance are creating for everyone.