I’m trying to implement configurable key bindings in tt. Boy, is parsing the key names into tcell.EventKeys a horrible thing. This type consists of three pieces of information:

- maybe a predefined compound key sequence, like Ctrl+A

- maybe some modifiers, such as Shift, Ctrl, etc.

- maybe a rune if neither modifiers are present nor a predefined compound key exists

Its hardcoded usage results in code like this:

func (t *TreeView[T]) InputHandler() func(event *tcell.EventKey, setFocus func(p tview.Primitive)) {

return t.WrapInputHandler(func(event *tcell.EventKey, setFocus func(p tview.Primitive)) {

switch event.Key() {

case tcell.KeyUp:

t.moveUp()

case tcell.KeyDown:

t.moveDown()

case tcell.KeyHome:

t.moveTop()

case tcell.KeyEnd:

t.moveBottom()

case tcell.KeyCtrlE:

t.moveScrollOffsetDown()

case tcell.KeyCtrlY:

t.moveScrollOffsetUp()

case tcell.KeyTab, tcell.KeyBacktab:

if t.finished != nil {

t.finished(event.Key())

}

case tcell.KeyRune:

if event.Modifiers() == tcell.ModNone {

switch event.Rune() {

case 'k':

t.moveUp()

case 'j':

t.moveDown()

case 'g':

t.moveTop()

case 'G':

t.moveBottom()

}

}

}

})

}

This data structure is just awful to handle and especially initialize in my opinion. Some compound tcell.Keys are mapped to human-readable names in tcell.KeyNames. However, these names always use - to join modifiers, e.g. resulting in Ctrl-A, whereas tcell.EventKey.Name() produces +-delimited strings, e.g. Ctrl+A. Gnaarf, why this asymmetry!? O_o

I just checked k9s and they’re extending tcell.KeyNames with their own tcell.Key definitions like crazy: https://github.com/derailed/k9s/blob/master/internal/ui/key.go Then, they convert an original tcell.EventKey to tcell.Key: https://github.com/derailed/k9s/blob/b53f3091ca2d9ab963913b0d5e59376aea3f3e51/internal/ui/app.go#L287 This must be used when actually handling keyboard input: https://github.com/derailed/k9s/blob/e55083ba271eed6fc4014674890f70c5ed6c70e0/internal/ui/tree.go#L101

This seems to be much nicer to use. However, I fear this will break eventually. And it’s more fragile in general, because it’s rather easy to forget the conversion or one can get confused whether a certain key at hand is now an original tcell.Key coming from the library or an “extended” one.

I will see if I can find some other programs that provide configurable tcell key bindings.
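One way to sidestep the Ctrl-A versus Ctrl+A asymmetry might be to normalize user-supplied key names before looking anything up. The following is only a minimal sketch, written in Python rather than Go, and it does not touch tcell at all: `MODIFIERS`, `KeyBinding` and `parse_key_name` are hypothetical names made up for illustration, and the modifier list is an assumption.

```python
from typing import NamedTuple

# Hypothetical modifier names; tcell's own tables are not reproduced here.
MODIFIERS = {"ctrl", "alt", "shift", "meta"}


class KeyBinding(NamedTuple):
    modifiers: frozenset
    key: str


def parse_key_name(name: str) -> KeyBinding:
    """Parse 'Ctrl+A' or 'Ctrl-A' into a set of modifiers plus a key name."""
    modifiers = []
    rest = name
    while True:
        for sep in "+-":
            head, found, tail = rest.partition(sep)
            # Only split when the part before the separator is a known
            # modifier and something is left over to act as the key.
            if found and tail and head.lower() in MODIFIERS:
                modifiers.append(head.lower())
                rest = tail
                break
        else:
            break  # neither separator produced a modifier, so rest is the key
    if not rest:
        raise ValueError("empty key name")
    # The key keeps its case so that 'g' and 'G' stay distinct bindings.
    return KeyBinding(frozenset(modifiers), rest)
```

Because the result is hashable, it could serve directly as the lookup key in a table that maps bindings to actions, regardless of which delimiter the user typed.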

@eldersnake@we.loveprivacy.club

Steps to world domination:

- “Invent” “AI” (by using other people’s data).

- Get people hyped about it and ideally hooked on it.

- Only provide it as a cloud service. But hey, if you want to, you can run it locally!

- Buy all hardware available on the market, so that nobody but you can build more systems.

- All PCs of consumers and competitors are too weak now and can’t be upgraded anymore.

- Everybody depends on your cloud service! Win!

All of that is possible because corporations don’t have a “conscience” in capitalism. Nobody forces the RAM manufacturers to sell all their stuff to just one or two buyers, but since the only goal of that manufacturer is to make money, they do it.

Nice! 😊 Here are the startup latencies for the simplest Mu (µ) program. println("Hello World"):

- Interpreter: ~5ms

- Native Code: ~1.5ms

Hmmm 🤔

Excluding merges, 1 author has pushed 171 commits to main and 175 commits to all branches. On main, 294 files have changed and there have been 52880 additions and 18269 deletions.

From the Mu (µ) Gitea Activity Tab

@movq@www.uninformativ.de Well, just a very limited subset thereof:

- inline and multiline code blocks using single/double/triple backticks (but no code blocks with just indentation)

- markdown links using `[text](url)`

- markdown media links using `![alt](url)`

And that’s it. No bold, italics, lists, quotes, headlines, etc.

Just like mentions, plain URLs, markdown links and markdown media URLs are highlighted and available in the URLs View. They’re also colored differently, similarly to code segments.

I definitely should write some documentation and provide screenshots.

I assume you made the thing load quickly, didn’t you?

That’s the problem with Python. If you have a couple of files to import, it will take time.

I want this to be reasonably fast on my old Intel NUC from 2016 (Celeron N3050 @ 1.60GHz) and I already notice that the program startup takes about 95 ms (or 125 ms when there are no .pyc files yet). That’s still fine, but it shows that I’ll have to be careful and keep this thing very small …

Python 3.15 will bring lazy imports, maybe that can help in some cases.
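To see which imports dominate a startup time like the one mentioned above, the cost of each import can be measured on its own. This is a small sketch using only the standard library; `timed_import` is a hypothetical helper name, and the numbers it reports depend heavily on the machine and on whether the module is already cached in `sys.modules`.

```python
import importlib
import time


def timed_import(module_name):
    """Import a module by name and return (module, seconds spent importing)."""
    start = time.perf_counter()
    module = importlib.import_module(module_name)
    elapsed = time.perf_counter() - start
    return module, elapsed


if __name__ == "__main__":
    for name in ("json", "argparse", "sqlite3"):
        _, seconds = timed_import(name)
        print(f"{name}: {seconds * 1000:.2f} ms")
```

For a whole program, running it with `python -X importtime` prints a per-module breakdown to stderr without any code changes.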

The tt URLs View now automatically selects the first URL that I am probably going to open. In decreasing order of priority, the URL types are:

- markdown media URLs (images, videos, etc.)

- markdown or plaintext URLs

- subjects

- mentions

I might differentiate between mentions of subscribed and unsubscribed feeds in the future. The odds of opening a new feed over an already existing one are higher.
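The selection rule above can be sketched roughly like this. It is not tt's actual code (tt is written in Go); the kind labels in `PRIORITY` and the function name `default_selection` are made up for illustration.

```python
# Kinds in decreasing priority, as listed above. The labels are hypothetical.
PRIORITY = ("media", "link", "subject", "mention")


def default_selection(entries):
    """Return the index of the entry to preselect, or None for an empty list.

    entries is a list of (kind, url) tuples in display order. Among entries
    of the best available kind, the first one wins.
    """
    best_index = None
    best_rank = len(PRIORITY)
    for index, (kind, _url) in enumerate(entries):
        rank = PRIORITY.index(kind)
        if rank < best_rank:
            best_rank, best_index = rank, index
    return best_index
```

Splitting mentions into subscribed and unsubscribed feeds would then only mean adding another label to `PRIORITY`.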

Whoo! I think I fixed one of the hardest bugs in mu (µ) I’ve had to figure out. It took me several days, in fact. The basic problem was that println(1, 2) was being printed as 1 2 in the bytecode VM and 1 nil when natively compiled to machine code on macOS. In the end it turned out that, in the machine code being generated / emitted, the list pointer for the rest... of the variadic arguments was being slotted into a register that was clobbered by the mu_retain and mu_release calls, so it was effectively getting freed on first use by the RC (reference counting) garbage collector 🤦‍♂️

very good blog post that reminded me why it’s taking so long to ship bbycll — previously i had computed the hashes of every post before storing them in the database, after realizing it’s a much better idea to compute the hashes during runtime and only store the post content & timestamp i’m now having to rewrite every function that reads & writes data. i hope the reason as to why i lost motivation is obvious — thankfully i caught it early enough so that once i’m done rewriting just those functions i should™ be able to finalize 1.0-rc with little hassle

I rewrote all my solutions in Rust (except for day 10 part 2) and these are the runtimes on my i7-3770 from 2013 (this measures CLOCK_PROCESS_CPUTIME_ID, not wallclock):

day01/1 [ 00.000501311] Result: 1066

day01/2 [ 00.000400298] Result: 6223

day02/1 [ 00.000358848] Result: 12586854255

day02/2 [ 00.000750711] Result: 17298174201

day03/1 [ 00.000106537] Result: 17405

day03/2 [ 00.000404632] Result: 171990312704598

day04/1 [ 00.000257517] Result: 1626

day04/2 [ 00.007495342] Result: 9173

day05/1 [ 00.000237212] Result: 505

day05/2 [ 00.000142731] Result: 344423158480189

day06/1 [ 00.000229629] Result: 4076006202939

day06/2 [ 00.000279552] Result: 7903168391557

day07/1 [ 00.000204422] Result: 1622

day07/2 [ 00.000283816] Result: 10357305916520

day08/1 [ 00.029427421] Result: 84968

day08/2 [ 00.028089859] Result: 8663467782

day09/1 [ 00.000310304] Result: 4764078684

day09/2 [ 00.015512554] Result: 1652344888

day10/1 [ 00.000796663] Result: 375

day10/2 [ --.---------] Result: 15377 (Z3)

day11/1 [ 00.000416804] Result: 753

day11/2 [ 00.000660528] Result: 450854305019580

day12/1 [ 00.000336081] Result: 577

day12/2 [ 00.000000695] Result: no part 2

A little under 90 ms total.

On my Samsung NC10 netbook from 2011 with its Intel Atom N455 at 1.6 GHz:

day01/1 [ 00.003771326] Result: 1066

day01/2 [ 00.003267317] Result: 6223

day02/1 [ 00.003902698] Result: 12586854255

day02/2 [ 00.006659479] Result: 17298174201

day03/1 [ 00.000747544] Result: 17405

day03/2 [ 00.002737587] Result: 171990312704598

day04/1 [ 00.001263892] Result: 1626

day04/2 [ 00.044985301] Result: 9173

day05/1 [ 00.001696761] Result: 505

day05/2 [ 00.000978962] Result: 344423158480189

day06/1 [ 00.001387660] Result: 4076006202939

day06/2 [ 00.001734248] Result: 7903168391557

day07/1 [ 00.001295528] Result: 1622

day07/2 [ 00.001809659] Result: 10357305916520

day08/1 [ 00.277251443] Result: 84968

day08/2 [ 00.284359332] Result: 8663467782

day09/1 [ 00.003152407] Result: 4764078684

day09/2 [ 00.071123459] Result: 1652344888

day10/1 [ 00.005279527] Result: 375

day10/2 [ --.---------] Result: 15377 (Z3)

day11/1 [ 00.003273342] Result: 753

day11/2 [ 00.005139719] Result: 450854305019580

day12/1 [ 00.002857552] Result: 577

day12/2 [ 00.000004421] Result: no part 2

A little over 700 ms total.

I like this. You get performance that’s more or less in the ballpark of C, but without the footguns.
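The quoted totals can be cross-checked by summing the bracketed timings from the output. A minimal sketch, assuming the line format shown above; `total_seconds` is a hypothetical helper, and lines without a numeric timing (such as the Z3 entry) are simply skipped.

```python
import re

# Matches e.g. "day01/1 [ 00.000501311] Result: 1066" and captures the seconds.
TIMING = re.compile(r"^day\d+/\d+\s+\[\s*(\d+\.\d+)\]")


def total_seconds(lines):
    """Sum the CPU times of all lines that carry a numeric timing."""
    total = 0.0
    for line in lines:
        match = TIMING.match(line)
        if match:
            total += float(match.group(1))
    return total
```

Feeding it the program output line by line, for example via `sys.stdin`, yields the total in seconds.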

If your very popular project with lots of stars on GitHub is over 10 years old, and you’re still at a pre-1.0 version because you’re using SemVer and a 1.0 would mean making some kind of commitment and that’s somehow not desirable for you, then I think you’re doing something wrong. 🤔

I cleaned up all of my AoC (Advent of Code) 2025 solutions, refactored many of the utilities I had to write as reusable libraries, re-tested Day 1 (but nothing else). Here it is if you’re curious! This is written in mu, my own language I built as a self-hosted minimal compiler/vm with very few types and builtins.

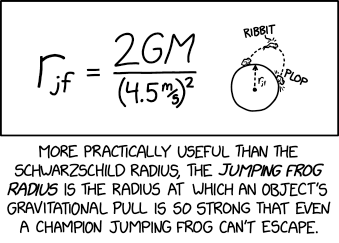

Jumping Frog Radius


I’m having to write my own functions like this in mu just to solve AoC puzzles :D

fn pow10(k) {

p := 1

i := 0

while i < k {

p = p * 10

i = i + 1

}

return p

}

I just completed “Secret Entrance” - Day 1 - Advent of Code 2025 #AdventOfCode https://adventofcode.com/2025/day/1 — However I did it in my own toy programming language called mu, which I had to build first 🤣

Come back from my trip, run my AoC 2025 Day 1 solution in my own language (mu) and find it didn’t run correctly 🤣 Ooops!

$ ./bin/mu examples/aoc2025/day1.mu

closure[0x140001544e0]

Alright, Advent of Code is over:

https://www.uninformativ.de/blog/postings/2025-12-12/0/POSTING-en.html

It’s been quite the time sink, especially with the DOS games on top, but it was fun. 🥳

In case you’re wondering: All puzzles (except for part 2 of day 10) were doable in Python 1 on SuSE Linux 6.4 and ran in a finite time on the Pentium 133. Puzzle 10/2 might have been doable as well if I had better education. 🤣

Awk to take lines from Plan 9’s /lib/unicode and prepend the actual glyph and a tab: awk '{cmd=sprintf("unicode %s", $1); cmd | getline c; close(cmd); printf("%s\t%s\n", c, $0)}'

@prologic@twtxt.net No beak, no feathers, … looks suspicious! That’s probably a weird mammal!!1! 😅🤣

GoToSocial to a Pleroma one. While GTS is a kinda cute piece of software (lightweight and easy to manage), the inability to fetch/scroll through people's past toots when visiting a profile, and the lack of a federated timeline and proper search functionality etc., felt like a handicap for the past N months.

@bender@twtxt.net yeah, I’ve been reading through the documentation last night and it felt overwhelming for a minute… +1 point goes to GTS’s docs. But hey, I’ll be taking the easy route: podman-compose up -d. They provide both a container image and an example compose file in a separate git repo, but I’m wondering why that is not mentioned anywhere in the docs (unless it is and I haven’t seen it yet).

Day 2 was pretty tough on my old hardware. Part 1 originally took 16 minutes, then I got it down to 9 seconds – only to realize later that my solution abused some properties of my particular input. A correct solution will probably take about 30 seconds. 🫤

Part 2 took 29 minutes this morning. I wrote an optimized version but haven’t tested it yet. I hope it’ll be under a minute.

Python 1 feels really slow, even compared to Java 1. And these first puzzles weren’t even computationally intensive. We’ll see how far I’ll make it …

AoC Day #1 solution (mu): https://gist.mills.io/prologic/d3c22bcbc22949939b715a850fe63131

Thinking about doing Advent of Code in my own tiny language mu this year.

mu is:

- Dynamically typed

- Lexically scoped with closures

- Has a Go-like curly-brace syntax

- Built around lists, maps, and first-class functions

Key syntax:

- Functions use fn and braces:

fn add(a, b) {

return a + b

}

- Variables use := for declaration and = for assignment:

x := 10

x = x + 1

- Control flow includes if/else and while:

if x > 5 {

println("big")

} else {

println("small")

}

while x < 10 {

x = x + 1

}

- Lists and maps:

nums := [1, 2, 3]

nums[1] = 42

ages := {"alice": 30, "bob": 25}

ages["bob"] = ages["bob"] + 1

Supported types:

int

bool

string

list

map

fn

nil

mu feels like a tiny little Go-ish, Python-ish language — curious to see how far I can get with it for Advent of Code this year. 🎄

Day 1 was surprisingly finicky. A lot of people got it wrong, apparently. Me too. 🤣

Advent of Code 2025 starts tomorrow. 🥳🎄

This year, I’m going to use Python 1 on SuSE Linux 6.4, writing the code on my trusty old Pentium 133 with its 64 MB of RAM. No idea if that old version of Python will be fast enough for later puzzles. We’ll see.

@lyse@lyse.isobeef.org Damn. That was stupid of me. I should have posted examples using 2026-03-01 as cutoff date. 😂

In my actual test suite, everything uses 2027-01-01 and then I have this, hoping that that’s good enough. 🥴

def test_rollover():

d = jenny.HASHV2_CUTOFF_DATE

assert len(jenny.make_twt_hash(URL, d - timedelta(days=7), TEXT)) == 7

assert len(jenny.make_twt_hash(URL, d - timedelta(seconds=3), TEXT)) == 7

assert len(jenny.make_twt_hash(URL, d - timedelta(seconds=2), TEXT)) == 7

assert len(jenny.make_twt_hash(URL, d - timedelta(seconds=1), TEXT)) == 7

assert len(jenny.make_twt_hash(URL, d, TEXT)) == 12

assert len(jenny.make_twt_hash(URL, d + timedelta(seconds=1), TEXT)) == 12

assert len(jenny.make_twt_hash(URL, d + timedelta(seconds=2), TEXT)) == 12

assert len(jenny.make_twt_hash(URL, d + timedelta(seconds=3), TEXT)) == 12

assert len(jenny.make_twt_hash(URL, d + timedelta(days=7), TEXT)) == 12

(In other words, I don’t care as long as it’s before 2027-01-01. 😏😅)
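The boundary that this test pins down can be sketched as a tiny helper. This is not jenny's implementation: `hash_length` is a hypothetical function, and the cutoff below is just the 2027-01-01 test value mentioned above, not necessarily the real cutoff date.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the test-suite value from the post, not the production cutoff.
CUTOFF = datetime(2027, 1, 1, tzinfo=timezone.utc)


def hash_length(created, cutoff=CUTOFF):
    """Return the expected hash length for a twt created at the given time.

    Twts from exactly the cutoff onwards get the longer hash, which matches
    the assertions for d and d - 1 second in the test above.
    """
    return 12 if created >= cutoff else 7
```

The interesting cases are the instants right around the cutoff, which is why the test steps through them one second at a time.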

@prologic@twtxt.net Your gitea thinks the LICENSE file in the yarn repository is SSPL-1.0 instead of GNU AGPL 3.0,

and I can’t help but giggle at that

yarnd installation has been properly fixed.

cat /etc/mokou/yarnd.conf

exec=/usr/pkg/sbin/daemonize -c/var/db/yarnd -u www -p /var/run/yarnd.pid /usr/pkg/sbin/chpst -e /usr/local/etc/yarnd /usr/local/sbin/yarnd -b 127.0.0.1:[classified information]

I know this might seem a bit overengineered, but the previous command until now had the secrets exposed on the process list

Tried to re-enable the Edge route to git.mills.io today (after finishing work) and this is what I found 🤯 These asshole/cunts are still at it !!! 🤬 – So let’s instead see if this works:

$ host git.mills.io 1.1.1.1

Using domain server:

Name: 1.1.1.1

Address: 1.1.1.1#53

Aliases:

git.mills.io is an alias for fuckoff.mills.io.

fuckoff.mills.io has address 127.0.0.1

PS: Would anyone be interested if I started a massive global class action suit against companies that do this kind of abusive web crawling, violate/disregard robots.txt and whatever other standards are set in stone by the W3C? 🤔

This caveman is getting too old for the Internet… 😅 It took me 1 hr and 50 mins to catch up with what’s been going on in my feed.

I’ve once again brought up a Gitea instance on my server space, but there are two highlights here:

- No self-registration (accounts are tied to the e-mail server, which is in turn tied to the system accounts)

- Going beyond the landing page requires being logged in, no excuses. (It also could scare the AI crawlers to oblivion, avoiding Anubis at that)

That’s it.

@lyse@lyse.isobeef.org Probably wouldn’t help, since almost every request comes from a different IP address. These are the hits on those weird /projects URLs since Sunday:

1 IP has 5 hits

1 IP has 4 hits

13 IPs have 3 hits

280 IPs have 2 hits

25543 IPs have 1 hit

The total number of hits has decreased now. Maybe the botnet has moved on …
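A breakdown like the one above ("N IPs have M hits") can be computed by counting twice: first hits per IP, then IPs per hit count. A minimal sketch, assuming the client IPs have already been extracted from the access log; `hits_histogram` is a hypothetical name.

```python
from collections import Counter


def hits_histogram(ips):
    """Map each hit count to the number of distinct IPs with that many hits."""
    hits_per_ip = Counter(ips)            # IP -> number of requests
    return Counter(hits_per_ip.values())  # number of requests -> number of IPs


if __name__ == "__main__":
    # Made-up documentation addresses, purely for demonstration.
    sample = ["192.0.2.1", "192.0.2.1", "192.0.2.2", "198.51.100.7"]
    for hits, count in sorted(hits_histogram(sample).items(), reverse=True):
        print(f"{count} IPs have {hits} hits")
```

When nearly every IP lands in the "1 hit" bucket, as in the numbers above, per-IP rate limiting has little to work with.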

Fark me 🤦‍♂️ I woke up quite late today (after a long night helping/assisting with a Mainframe migration last night for work) to abusive traffic and my alerts going off. The impact? My pod (twtxt.net) was being hammered by something at a request rate of 30 req/s (there are global rate limits in place, but still…). The culprit? Turned out to be a particular IP 43.134.51.191 and after looking into who owns that IP I discovered it was yet-another-bad-customer-or-whatever from Tencent, so that entire network (ASN) is now blocked from my Edge:

+# Who: Tentcent

+# Why: Bad Bots

+132203

Total damage?

$ caddy-log-formatter twtxt.net.log | cut -f 1 -d ' ' | sort | uniq -c | sort -r -n -k 1 | head -n 5

61371 43.134.51.191

402 159.196.9.199

121 45.77.238.240

8 106.200.1.116

6 104.250.53.138

61k reqs over an hour or so (before I noticed), bunch of CPU time burned, and useless waste of my fucking time.

And regarding those broken URLs: I once speculated that these bots operate on an old dataset, because I thought that my redirect rules actually were broken once and produced loops. But a) I cannot reproduce this today, and b) I cannot find anything related to that in my Git history, either. But it’s hard to tell, because I switched operating systems and webservers since then …

But the thing is that I’m seeing new URLs constructed in this pattern. So this can’t just be an old crawling dataset.

I am now wondering if those broken URLs are bot bugs as well.

They look like this (zalgo is a new project):

https://www.uninformativ.de/projects/slinp/zalgo/scksums/bevelbar/

When you request that URL, you get redirected to /git/:

$ curl -sI https://www.uninformativ.de/projects/slinp/zalgo/scksums/bevelbar/

HTTP/1.0 301 Moved Permanently

Date: Sat, 22 Nov 2025 06:13:51 GMT

Server: OpenBSD httpd

Connection: close

Content-Type: text/html

Content-Length: 510

Location: /git/

And on /git/, there are links to my repos. So if a broken client requests https://www.uninformativ.de/projects/slinp/zalgo/scksums/bevelbar/, then sees a bunch of links and simply appends them, you’ll end up with an infinite loop.

Is that what’s going on here or are my redirects actually still broken … ?
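The suspected client bug can be reproduced with the standard URL resolution rules. This sketch assumes the links on /git/ are relative (for example `lariza/`), which is not confirmed here; `resolve` is a hypothetical helper built on `urllib.parse.urljoin`.

```python
from urllib.parse import urljoin


def resolve(requested, location, link, follow_redirect=True):
    """Resolve a link found on the page that a redirect led to.

    A correct client resolves the link against the redirect target. A broken
    one keeps using the originally requested URL as its base.
    """
    base = urljoin(requested, location) if follow_redirect else requested
    return urljoin(base, link)


if __name__ == "__main__":
    requested = "https://www.uninformativ.de/projects/slinp/zalgo/scksums/bevelbar/"
    print(resolve(requested, "/git/", "lariza/"))
    print(resolve(requested, "/git/", "lariza/", follow_redirect=False))
```

With the wrong base, every round appends one more path segment to an already broken URL, which would match the ever-growing /projects/... paths in the logs.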

I just noticed this pattern:

uninformativ.de 201.218.xxx.xxx - - [22/Nov/2025:06:53:27 +0100] "GET /projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 301 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36"

www.uninformativ.de 103.10.xxx.xxx - - [22/Nov/2025:06:53:28 +0100] "GET http://uninformativ.de/projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 400 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36"

Let me add some spaces to make it more clear:

uninformativ.de 201.218.xxx.xxx - - [22/Nov/2025:06:53:27 +0100] "GET /projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 301 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36"

www.uninformativ.de 103.10.xxx.xxx - - [22/Nov/2025:06:53:28 +0100] "GET http://uninformativ.de/projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 400 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36"

Some IP (from Brazil) requests some (non-existing, completely broken) URL from my webserver. But they use the hostname uninformativ.de, so they get redirected to www.uninformativ.de.

In the next step, just a second later, some other IP (from Nepal) issues an HTTP proxy request for the same URL.

Clearly, someone has no idea how HTTP redirects work. And clearly, they’re running their broken code on some kind of botnet all over the world.

Testing 1 2 3


FTR, I see one (two) issues with PyQt6, sadly:

- The PyQt6 docs appear to be mostly auto-generated from the C++ docs. And they contain many errors or broken examples (due to the auto-conversion). I found this relatively unpleasant to work with.

- (Until Python finally gets rid of the Global Interpreter Lock properly, it’s not really suited for GUI programs anyway – in my opinion. You can’t offload anything to a second thread, because the whole program is still single-threaded. This would have made my fractal rendering program impossible, for example.)

Testing 1 2 3 @manton@twtxt.net

For those curious, the new Twtxt <-> ActivityPub bridge I’m building (bidirectional) simply requires three things:

- You register your Twtxt feed to the bridge: https://bridge.twtxt.net

- You verify that you in fact own/control the feed by putting the verification code somewhere on/in your feed (doesn’t matter where or how)

- You proxy/forward requests for /.well-known/webfinger to the bridge at bridge.twtxt.net.

I’m still testing through and ironing out bugs 🐛 Please be patient! 🙏

My goodness, a new level of stupidity.

The bots are now doing things like this:

GET http://uninformativ.de/projects/lariza/feednotify/datenstrahler/slinp/countty HTTP/1.1

- That URL does not exist.

- By including http://uninformativ.de in that request, this instructs the webserver to do an HTTP proxy request. Of course, this isn’t allowed on my webserver (and shouldn’t be allowed on any normal webserver), resulting in HTTP 400. And even if it were, the target would be the exact same server, making a proxy request unnecessary.

And of course, it’s not just 50 hits like this or 100 or 1’000 or 10’000. No, it’s over 150’000 in the last 2 days. All from vastly different IP ranges of different cloud hosters.

This almost looks like a DDoS attack, but it’s just completely stupid. This feels more like some idiot vibe coded a crawler.
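What sets the request in this post apart from a normal one is the form of the request target. HTTP/1.1 distinguishes origin-form (a path, the usual case), absolute-form (a full URI, normally sent to proxies), authority-form (used with CONNECT) and asterisk-form (used with OPTIONS). A rough classifier, with `request_target_form` as a hypothetical name and no real validation of the request line:

```python
def request_target_form(request_line):
    """Classify the request target of an HTTP/1.1 request line."""
    _method, target, _version = request_line.split(" ", 2)
    if target == "*":
        return "asterisk-form"
    if target.startswith("/"):
        return "origin-form"
    if "://" in target:
        return "absolute-form"
    return "authority-form"


if __name__ == "__main__":
    line = ("GET http://uninformativ.de/projects/lariza/feednotify/"
            "datenstrahler/slinp/countty HTTP/1.1")
    print(request_target_form(line))
```

A log filter could use a check like this to count the proxy-style requests separately from the ordinary broken ones.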

@prologic@twtxt.net yeah I should probably update. Version 0.15.1@31958f89 2025-06-29T20:35:20+10:00 go1.23.1

@prologic@twtxt.net Let’s go through it one by one. Here’s a wall of text that took me over 1.5 hours to write.

The criticism of AI as untrustworthy is a problem of misapplication, not capability.

This section says AI should not be treated as an authority. This is actually just what I said, except the AI phrased/framed it like it was a counter-argument.

The AI also said that users must develop “AI literacy”, again phrasing/framing it like a counter-argument. Well, that is also just what I said. I said you should treat AI output like a random blog and you should verify the sources, yadda yadda. That is “AI literacy”, isn’t it?

My text went one step further, though: I said that when you take this requirement of “AI literacy” into account, you basically end up with a fancy search engine, with extra overhead that costs time. The AI missed/ignored this in its reply.

Okay, so, the AI also said that you should use AI tools just for drafting and brainstorming. Granted, a very rough draft of something will probably be doable. But then you have to diligently verify every little detail of this draft – okay, fine, a draft is a draft, it’s fine if it contains errors. The thing is, though, that you really must do this verification. And I claim that many people will not do it, because AI outputs look sooooo convincing, they don’t feel like a draft that needs editing.

Can you, as an expert, still use an AI draft as a basis/foundation? Yeah, probably. But here’s the kicker: You did not create that draft. You were not involved in the “thought process” behind it. When you, a human being, make a draft, you often think something like: “Okay, I want to draw a picture of a landscape and there’s going to be a little house, but for now, I’ll just put in a rough sketch of the house and add the details later.” You are aware of what you left out. When the AI did the draft, you are not aware of what’s missing – even more so when every AI output already looks like a final product. For me, personally, this makes it much harder and slower to verify such a draft, and I mentioned this in my text.

Skill Erosion vs. Skill Evolution

You, @prologic@twtxt.net, also mentioned this in your car tyre example.

In my text, I gave two analogies: The gym analogy and the Google Translate analogy. Your car tyre example falls in the same category, but Gemini’s calculator example is different (and, again, gaslight-y, see below).

What I meant in my text: A person wants to be a programmer. To me, a programmer is a person who writes code, understands code, maintains code, writes documentation, and so on. In your example, a person who changes a car tyre would be a mechanic. Now, if you use AI to write the code and documentation for you, are you still a programmer? If you have no understanding of said code, are you a programmer? A person who does not know how to change a car tyre, is that still a mechanic?

No, you’re something else. You should not be hired as a programmer or a mechanic.

Yes, that is “skill evolution” – which is pretty much my point! But the AI framed it like a counter-argument. It didn’t understand my text.

(But what if that’s our future? What if all programming will look like that in some years? I claim: It’s not possible. If you don’t know how to program, then you don’t know how to read/understand code written by an AI. You are something else, but you’re not a programmer. It might be valid to be something else – but that wasn’t my point, my point was that you’re not a bloody programmer.)

Gemini’s calculator example is garbage, I think. Crunching numbers and doing mathematics (i.e., “complex problem-solving”) are two different things. Just because you now have a calculator, doesn’t mean it’ll free you up to do mathematical proofs or whatever.

What would have worked is this: Let’s say you’re an accountant and you sum up spendings. Without a calculator, this takes a lot of time and is error prone. But when you have one, you can work faster. But once again, there’s a little gaslight-y detail: A calculator is correct. Yes, it could have “bugs” (hello Intel FDIV), but its design actually properly calculates numbers. AI, on the other hand, does not understand a thing (our current AI, that is), it’s just a statistical model. So, this modified example (“accountant with a calculator”) would actually have to be phrased like this: Suppose there’s an accountant and you give her a magic box that spits out the correct result in, what, I don’t know, 70-90% of the time. The accountant couldn’t rely on this box now, could she? She’d either have to double-check everything or accept possibly wrong results. And that is how I feel like when I work with AI tools.

Gemini has no idea that its calculator example doesn’t make sense. It just spits out some generic “argument” that it picked up on some website.

3. The Technical and Legal Perspective (Scraping and Copyright)

The AI makes two points here. The first one, I might actually agree with (“bad bot behavior is not the fault of AI itself”).

The second point is, once again, gaslighting, because it is phrased/framed like a counter-argument. It implies that I said something which I didn’t. Like the AI, I said that you would have to adjust the copyright law! At the same time, the AI answer didn’t even question whether it’s okay to break the current law or not. It just said “lol yeah, change the laws”. (I wonder in what way the laws would have to be changed in the AI’s “opinion”, because some of these changes could kill some business opportunities – or the laws would have to have special AI clauses that only benefit the AI techbros. But I digress, that wasn’t part of Gemini’s answer.)

tl;dr

Except for one point, I don’t accept any of Gemini’s “criticism”. It didn’t pick up on lots of details, ignored arguments, and I can just instinctively tell that this thing does not understand anything it wrote (which is correct, it’s just a statistical model).

And it framed everything like a counter-argument, while actually repeating what I said. That’s gaslighting: When Alice says “the sky is blue” and Bob replies with “why do you say the sky is purple?!”

But it sure looks convincing, doesn’t it?

Never again

This took so much of my time. I won’t do this again. 😂

@movq@www.uninformativ.de this I find more worrisome, and saw no mention of it on your text: Right-Wing Chatbots Turbocharge America’s Political and Cultural Wars (gift article).

Enoch, one of the newer chatbots powered by artificial intelligence, promises “to ‘mind wipe’ the pro-pharma bias” from its answers. Another, Arya, produces content based on instructions that tell it to be an “unapologetic right-wing nationalist Christian A.I. model.”

@movq@www.uninformativ.de Gemini liked your opinion very much. Here is how it countered:

1. The User Perspective (Untrustworthiness)

The criticism of AI as untrustworthy is a problem of misapplication, not capability.

- AI as a Force Multiplier: AI should be treated as a high-speed drafting and brainstorming tool, not an authority. For experts, it offers an immense speed gain, shifting the work from slow manual creation to fast critical editing and verification.

- The Rise of AI Literacy: Users must develop a new skill—AI literacy—to critically evaluate and verify AI’s probabilistic output. This skill, along with improving citation features in AI tools, mitigates the “gaslighting” effect.

The fear of skill loss is based on a misunderstanding of how technology changes the nature of work; it’s skill evolution, not erosion.

- Shifting Focus to High-Level Skills: Just as the calculator shifted focus from manual math to complex problem-solving, AI shifts the focus from writing boilerplate code to architectural design and prompt engineering. It handles repetitive tasks, freeing humans for creative and complex challenges.

- Accessibility and Empowerment: AI serves as a powerful democratizing tool, offering personalized tutoring and automation to people who lack deep expertise. While dependency is a risk, this accessibility empowers a wider segment of the population previously limited by skill barriers.

The legal and technical flaws are issues of governance and ethical practice, not reasons to reject the core technology.

- Need for Better Bot Governance: Destructive scraping is a failure of ethical web behavior and can be solved with better bot identification, rate limits, and protocols (like enhanced robots.txt). The solution is to demand digital citizenship from AI companies, not to stop AI development.

I’m building a service that lets you:

create and manage disposable, brandable email aliases so you can track leaks, forward important messages, and keep your real inbox clean.

I’ve just finished building it for the most part, and have cut a v0.1.0 release. It’s currently closed source (to be decided later) and now open to beta testers. cc @bender@twtxt.net 🙏 I fully intend to monetize and offer this as a paid service in the coming weeks/months, but beta/invite-only testers and early adopters/users first 🤟

Specimen 1: A caveman marking his territory:

@lyse@lyse.isobeef.org back to this, I think @prologic@twtxt.net meant 1 November 12:00 UTC. I won’t hold it against him. 🤭

There are only two hard problems in distributed systems: 2. Exactly-once delivery 1. Guaranteed order of processing 2. Exactly-once delivery

@movq@www.uninformativ.de It’s way more expensive and time-consuming in the end. If only somebody had warned us!!1

The triangle reminds me of zalgo text: https://en.wikipedia.org/wiki/Zalgo_text