Throw your “Get rich quick” plans at me, I wanna be able to afford suing somebody/something about this. 😂 It’s not the first time that this happened and I’m sure it’s not gonna be the last.


@mckinley@twtxt.net He’s signed up three times now even though I keep deleting the account, which is enough for me to permaban this person. I don’t technically want open registrations on my pod but up till now I’ve been too lazy to figure out how to turn them off and actually do that, and there hasn’t been a pressing need. I may have to now.

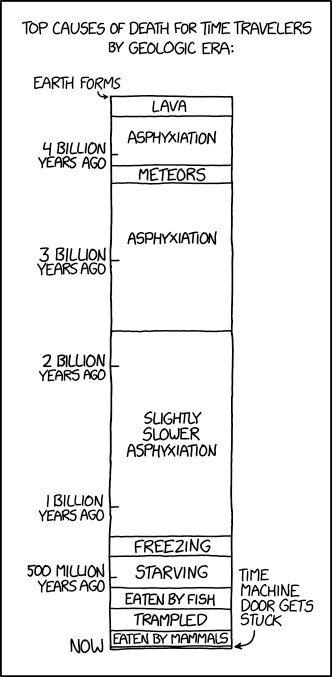

Time Traveler Causes of Death


Correct, @bender@twtxt.net. Since the very beginning, my twtxt workflow has been very flawed. But it turns out to be an advantage for this sort of problem. :-) I still use the official (but patched) twtxt client by buckket to actually fetch and fill the cache. I think one of the patches played around with the error reporting. This way, any problems with fetching or parsing feeds show up immediately. Once I think I’ve seen enough errors, I unsubscribe.

tt is just a viewer into the cache. The read statuses are stored in a separate database file.

It has also happened a few times that I thought some feed was permanently dead and removed it from my list. But then others mentioned it, so I resubscribed.

(@anth@a.9srv.net’s feed almost never works, but I keep it because they told me they want to fix their server some time.)

@prologic@twtxt.net Yeah, I’ve noticed that as well when I hacked around. That’s a very good addition, ta! :-)

Getting to this view felt surprisingly difficult, though. I always expected the feeds I follow to be in the “Feeds” tab. You won’t believe how many times I clicked on “Feeds” yesterday evening. :-D Adding at least a link to my following list on the “Feeds” page would help my learning resistance. But that’s something different.

Also, turns out that “My Feeds” is the list of feeds that I author myself, not the ones I have subscribed to. The naming is alright, I can see that it makes sense. It just was an initial surprise that came up.

i have finished porting my laptop config to nixos in record time. i did the entire rebuild in one day, only a handful of hours total. love it.

If some of you budding fathers want to know how I raised a computer nerd who would one day work for Facebook in the big USA: you purchase a $1000 Xmas present, an enormous thick book on C++ programming, and say, “You can play as many games as you like, kids, but James has to create them using computer software.”

So James once created a 3D chess program with sound. It took 6 months or so and was really hard to beat: rather than evaluating moves point by point like other chess programs, it was based on searching deep for patterns. Set it to 5 moves ahead and you were toast every time. Nice program too, sadly gone over the years; computers suffer from bit rot. We used to try to mark rotten areas of hard drive disks as bad sectors. Not sure how Ubuntu does this these days; I see a dozen errors on the screen every time I load.

Today I would buy my kids AI CAD simulation software and a metal 3D printer, and get them to build fancy 3D models and engines from scratch. This will make them experts in the CAD AI industry by the time they are 14 years old. Sadly, AI is here to stay and will spoil the Internet.

@prologic@twtxt.net Thanks for the invitation. What time of day?

Hmmm I’m a little concerned, as I’m seeing quite a few feeds I follow in an error state:

I’m not so concerned with the 15x context deadline exceeded but more concerned with:

aelaraji@aelaraji.com (6 twts, Last fetched 5m ago with error: dead feed: 403 Forbidden x4 times.)

And:

anth@a.9srv.net (1 twts, Last fetched 5m ago with error: Get "http://a.9srv.net/tw.txt": dial tcp 144.202.19.161:80: connect: connection refused x3733 times.)

Hmmm, maybe the stats are a bit off? 🤔

I setup and switched to Headscale last night. It was relatively simple, I spent more time installing a web GUI to manage it to be honest, the actual server is simple enough. The native Tailscale Android app even works with it thankfully.

receieveFile())? 🤔

@stigatle@yarn.stigatle.no @xuu@txt.sour.is @lyse@lyse.isobeef.org “Not cool”? I was receiving many broken (HTTP 400 error) requests per second from an IP address I didn’t recognize, right after having my VPS crash because the hard drive filled up with bogus data. None of this had happened on this VPS before, so it was a new problem that I didn’t understand, and I took immediate action to get it under control. Of course I reported the IP address to its abuse email. That’s a 100% normal, natural, and “cool” thing to do in such a situation. At the time I had no idea it was @xuu@txt.sour.is.

The moment I realized it was @xuu@txt.sour.is and definitely a false alarm, I emailed the ISP and told them this was a false positive and to not ban or block the IP in question because it was not abusive traffic. They haven’t yet responded but I do hope they’ve stopped taking action, and if there’s anything else I can do to certify to them that this is not abuse then I will do that.

I run numerous services on that VPS that I rely on, and I spent most of my day today cleaning up the mess all this has caused. I get that this caused @xuu@txt.sour.is a lot of stress and I’m sincerely sorry about that and am doing what I can to rectify the situation. But calling me “not cool” isn’t necessary. This was an unfortunate situation that we’re trying to make right and there’s no need for criticizing anyone.

twts are taking a very long time to post from yarn after the latest upgrade. Like a good 60 seconds.


@prologic@twtxt.net I don’t know if this is new, but I’m seeing:

Jul 25 16:01:17 buc yarnd[1921547]: time="2024-07-25T16:01:17Z" level=error msg="https://yarn.stigatle.no/user/stigatle/twtxt.txt: client.Do fail: Get \"https://yarn.stigatle.no/user/stigatle/twtxt.txt\": dial tcp 185.97.32.18:443: i/o timeout (Client.Timeout exceeded while awaiting headers)" error="Get \"https://yarn.stigatle.no/user/stigatle/twtxt.txt\": dial tcp 185.97.32.18:443: i/o timeout (Client.Timeout exceeded while awaiting headers)"

I no longer see twts from @stigatle@yarn.stigatle.no at all.

Threshold: 8.00 miles, 00:09:15 average pace, 01:14:00 duration

9:50 for warm-up and cool-down then 8:00 on and 11:30 off three times.

#running #treadmill

There are also a bunch of log messages scrolling by. I’ve never seen this much activity in the log:

Jul 25 01:37:39 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:39 (149.71.56.69) "GET /external?nick=lovetocode999&uri=https://pagez.co.uk/services/your-own-100-fully-owned-online-vi>

Jul 25 01:37:39 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:39 (162.211.155.2) "GET /twt/112135496802692324 HTTP/1.1" 400 12 826.65µs

Jul 25 01:37:40 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:40 (51.222.253.14) "GET /conv/muttriq HTTP/1.1" 200 36881 20.448309ms

Jul 25 01:37:40 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:40 (162.211.155.2) "GET /twt/112730114943543514 HTTP/1.1" 400 12 663.493µs

Jul 25 01:37:40 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:40 (27.75.213.253) "GET /external?nick=lovetocode999&uri=http%3A%2F%2Falfarah.jo%2FHome%2FChangeCulture%3FlangCode%3Den>

Jul 25 01:37:40 buc.ci yarnd[829]: time="2024-07-25T01:37:40Z" level=error msg="http://bynet.com.br/log_envio.asp?cod=335&email=%21%2AEMAIL%2A%21&url=https%3A%2F%2Fwww.almanacar.c>

Jul 25 01:37:40 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:40 (162.211.155.2) "GET /twt/111674756400660911 HTTP/1.1" 400 12 545.106µs

Jul 25 01:37:40 buc.ci yarnd[829]: time="2024-07-25T01:37:40Z" level=warning msg="feed FetchFeedRequest: @<lovetocode999 http://alfarah.jo/Home/ChangeCulture?langCode=en&returnUrl>

Jul 25 01:37:41 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:41 (162.211.155.2) "GET /twt/112507964696096567 HTTP/1.1" 400 12 838.946µs

Something really weird is going on?
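One way to get a handle on a burst like this is to tally the access lines. A rough sketch, assuming the line layout seen in the excerpt above, `(client IP) "METHOD path PROTO" status bytes duration`; that layout is inferred from these lines only, not from yarnd's documentation. Lines the terminal cut off at `>` have no status code, so they are only picked up by the separate `/external?nick=` counter:

```go
package main

import (
	"bufio"
	"fmt"
	"regexp"
	"sort"
	"strings"
)

// accessRe matches the request part of an access line as it appears in the
// excerpt above: (client IP) "METHOD path PROTO" status bytes duration.
var accessRe = regexp.MustCompile(`\(([0-9.]+)\) "(\S+) (\S+) [^"]*" (\d{3}) `)

// externalRe pulls the nick out of /external lookups; it also matches lines
// that were cut off before the status code.
var externalRe = regexp.MustCompile(`\(([0-9.]+)\) "GET /external\?nick=([^&\s"]+)`)

// Summary holds request counts extracted from a log excerpt.
type Summary struct {
	ByIPStatus map[string]int // "IP status" -> number of requests
	External   map[string]int // nick -> number of /external lookups
}

func summarize(log string) Summary {
	s := Summary{ByIPStatus: map[string]int{}, External: map[string]int{}}
	sc := bufio.NewScanner(strings.NewReader(log))
	for sc.Scan() {
		line := sc.Text()
		if m := accessRe.FindStringSubmatch(line); m != nil {
			s.ByIPStatus[m[1]+" "+m[4]]++
		}
		if m := externalRe.FindStringSubmatch(line); m != nil {
			s.External[m[2]]++
		}
	}
	return s
}

// sampleLog is copied from the excerpt above (truncated lines kept as-is).
var sampleLog = `Jul 25 01:37:39 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:39 (149.71.56.69) "GET /external?nick=lovetocode999&uri=https://pagez.co.uk/services/your-own-100-fully-owned-online-vi>
Jul 25 01:37:39 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:39 (162.211.155.2) "GET /twt/112135496802692324 HTTP/1.1" 400 12 826.65µs
Jul 25 01:37:40 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:40 (51.222.253.14) "GET /conv/muttriq HTTP/1.1" 200 36881 20.448309ms
Jul 25 01:37:40 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:40 (162.211.155.2) "GET /twt/112730114943543514 HTTP/1.1" 400 12 663.493µs
Jul 25 01:37:40 buc.ci yarnd[829]: [yarnd] 2024/07/25 01:37:40 (27.75.213.253) "GET /external?nick=lovetocode999&uri=http%3A%2F%2Falfarah.jo%2FHome%2FChangeCulture%3FlangCode%3Den>`

func main() {
	s := summarize(sampleLog)
	keys := make([]string, 0, len(s.ByIPStatus))
	for k := range s.ByIPStatus {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	for _, k := range keys {
		fmt.Printf("%-22s %d\n", k, s.ByIPStatus[k])
	}
	fmt.Println("external lookups by nick:", s.External)
}
```

On this small sample it already shows the pattern: one client repeatedly getting 400s on /twt/ URLs, and different IPs driving /external lookups for the same nick with spammy uri parameters.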

Thank “Human Goodness” for the Gutenberg Project and all the books I’ll get to immerse myself into, especially in such hard times 🙏

I admit I’ve always compromised on this way too much myself, to this day keeping Facebook Messenger just to communicate in my family’s group chats. Sure, I run it in a Work profile on my GrapheneOS phone that I can switch off at any time, I can completely cut it off from network access whenever I want, I have a lot of rudimentary control over it, and I use it as sparingly as possible. But that doesn’t change the fact that every time I use it, we’re funneling private convos through bloody Meta’s servers and trackers etc.

@johanbove@johanbove.info Did they produce a new season, or are you just catching up with the old ones? It has been ages since I last watched any of it.

@prologic@twtxt.net Hmm, yeah, hmm, I’m not sure. 😅 It all appears very subjective to me. Is 2k lines of code a lot or not?

I mean, I’m all for reducing complexity. 😅 I just have a hard time defining it and arguing about it. What I call “too complex”, others might think of as “just fine”. 🤔

Pinellas County Running: 6.01 miles, 00:10:20 average pace, 01:02:05 duration

was feeling in the flow for the first 2.5 miles and then some lady stopped me in her car to help her get a turtle to the pond. could not get it back afterwards but it was still a fun time out albeit exhausting.

#running

It’s a very dangerous time. The coalition for reason is extremely weak. That’s why I really appreciate C.H. Danhauser’s entertaining and informative Logical Thinking series. (https://www.youtube.com/watch?v=BUqMNVnELzE&list=PLMpofmkxKHBJfta_JzekLbWGHUSLUJoLt)

Pinellas County - Mile time trial: 1.03 miles, 00:06:40 average pace, 00:06:51 duration

after the warm-up the humidity hit me and i realized i was drenched and i could not stop sweating. it was going to be rough, and it was. kept a pretty steady pace which was great… and around 0.70 miles i upchucked in my mouth a bit, which was oh so great, so i eased off the gas towards the end. overall very happy with the effort since normally i do this in the cooler and drier conditions. in addition i have not been doing much speed work so this is great.

76.2F feels like 84.6F with 93% RH and 73.7F dew point

#running

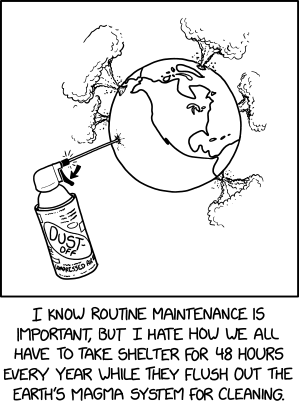

Routine Maintenance


I lapsed with the coffee drinking. One cup a day. Making tea takes way more time than making a quick coffee. Although I still should take the time.

Time flows backwards for some successful people

42km for 42 years: 26.26 miles, 00:11:50 average pace, 05:10:44 duration

crazy run… don’t have a lot of time so i’ll have to remember to complete the thread that i captured in my journal.

#running

@prologic@twtxt.net Yes very very strange! I truly don’t know where to start on that one 🤣 Must be one of those really weird edge cases. Thanks for your help on this, I can at least post normally now.👌

I’ll check logging in etc tomorrow, time for bed lol 😴

@prologic@twtxt.net I can’t yet narrow down when the login issue happens, as sometimes I log in fine. But when it does, it gives a 401 Unauthorized error. Anyway, I’ve been focusing on the posting error as I figured it must be related. Registering and logging in as a new user works every time, which is weird.

Hoping the kitty I’m watching will start snoring again, my recorder is ready this time.

I just typed something that took me a while to enter, hit post, and lost everything because I was logged out. Can that be disabled? Let me be logged in for as long as I want (or for a very long time), unless I hit logout, or account for the previously entered text, and present it (or run the post action), after having to re-login?

Speaking of “AI” … I guess I gotta find out soon how to disable/sabotage Microsoft’s “Recall”, before this garbage takes over the family computers. 😩

(There’s no way the people in question will switch operating systems. I’ve tried, countless times.)

There are apparently dedicated “fireproof” external hard drives available that do this, and this coincidentally-timed piece suggests I might be able to get closer to what I was thinking in the not-too-distant future: https://www.pcgamer.com/hardware/ssds/researchers-have-developed-a-type-of-flash-memory-storage-that-can-withstand-temperatures-higher-than-the-surface-of-venus/

I run Plan 9 on my server and my main home workstation (a raspberry pi). My “daily driver” time is basically split between that and a Mac (excluding time on my phone, i suppose). I think it looks elegant, too. :-)

@prologic@twtxt.net @lyse@lyse.isobeef.org about time i got my act together

Base: 7.01 miles, 00:09:43 average pace, 01:08:08 duration

just logging the miles and time. last day of kids’ school so a lot of chaos has settled only to be replaced i am sure!

#running #treadmill

@movq@www.uninformativ.de It looks like this one actually reads the robots.txt … it did a couple of times over the past few weeks.

“GET /robots.txt HTTP/1.1” 304 0 “-” “Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)”

VO2 Max: 5.00 miles, 00:08:56 average pace, 00:44:40 duration

was supposed to be a VO2Max workout… 9:50 warm-up and cool-down for 10 minutes apiece, then 5 times :20 on and 11:30 off. actually didn’t feel too rough, but that may have been a different story if i was not on the treadmill.

#running #treadmill

This was interesting: I didn’t expect so much variation in reported times. If you happen to have #plan9 running on some other VPS, I’d love to hear your results. https://pdx.social/@a/112481970480703254

@movq@www.uninformativ.de I have this one as per some article I read some time ago… But just like robots.txt, I don’t think you have any guarantee that it would be honored; you might even have a better chance hunting for and blocking user-agents.

Planning a file backup from an old machine that’s been sitting in the corner gathering dust… Because I know! I’m about to eff it up, BIIIIG time! 😂

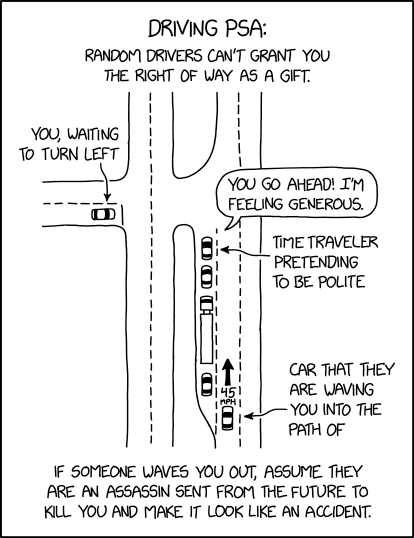

Driving PSA


@Rob@jsreed5.org Having come from HTTP and discovered both at the same time, I might be biased towards Gemini because of the content syntax, but I love both equally.

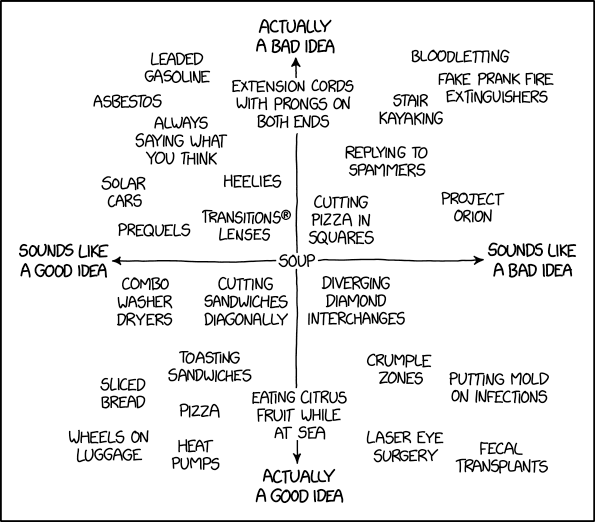

Good and Bad Ideas


The last time I did so, I ended up injuring my neck reading an old stash of books we’d had lying around at home. It took months to heal. xD

@bender@twtxt.net LOL! Been there, done that! I can go on for weeks without any of it. Not even a phone, I don’t have that many responsibilities to need one on me all the time. Life is much simpler like that.

Well! My 24 hrs without a GUI web browser was quite a nice experience.

As a matter of fact, as long as I’m not doing any 3D work, I kind of don’t need GUI applications as much as it feels like I do.

Sure, a couple of websites told me to eff off because they need JavaScript to work. Some others handed me a cold “426 Upgrade Required” client error response… (LOL, let’s not even talk about how GitHub repos looked and felt.) I managed to fix a couple of things I’d been meaning to fix for quite some time but never got around to, mainly because of my browsing habits. I tend to open a lot of tabs, read some, get distracted, then open some more, and down the rabbit hole (or shall I say tabs) I go.

All in all, it was quite a nice experience.

How nice? It was an “I’m dropping into a full TTY experience for another 24 hrs” kind of nice!

I already miss using a mouse, but hey, I would never have heard about gpm(8) otherwise.

@movq@www.uninformativ.de Oh! Thank you for the link! I’m checking it right away!

I hope I don’t get slapped with a “HTTP/1.1 426 Upgrade Required” there as well.

As for Netflix and Co. I can do without for the time being. I guess I have binge watched enough content I feel like I miss missing it. 😂

I think @abucci@anthony.buc.ci and @stigatle@yarn.stigatle.no are running snac? I didn’t have a closer look at snac (no intention of running it), but if that is a relatively small daemon (maybe comparable to Yarn?) that gives you access to the whole world of ActivityPub, then, well, yeah … That’s tough to beat.

Yes, I am running snac on the same VPS where I run my yarn pod. I heard of it from @stigatle@yarn.stigatle.no, so blame him 😏 snac is written in C and is one simple executable, uses very little resources on the server, and stores everything in JSON files (no databases or other integrations; easy to save and migrate your data). It’s definitely like yarn in that respect.

I haven’t been around yarn much lately. Part of that is that I’ve been very busy at work and home and only have a limited time to spend goofing off on a social network. Part of it is that I’m finding snac very useful: I’ve connected with friends I’d previously lost touch with, I’ve found useful work-related information, I’ve found colleagues to follow, and even found interesting conferences to attend. There’s a lot more going on over there.

I guess if I had to put it simply, I’d say I have limited time to play and there are more kids in the ActivityPub sandbox than this one. That’s not a ding on yarn–I like yarn and twtxt–I’m just time constrained.

It will be minimal for the time being.

The bare minimum, just … Not blank.