@prologic@twtxt.net @bmallred@staystrong.run Ah, I just found this, didn’t see it before:

https://restic.net/#compatibility

So, yeah, they do use semver and, yes, they’re not at 1.0.0 yet, so things might break on the next restic update … but they “promise” to not break things too lightheartedly. Hm, well. 😅 Probably doesn’t make a big difference (they don’t say “don’t use this software until we reach 1.0.0”).

LXQt 2.2.0 released

LXQt, the Qt-based alternative to KDE as Xfce is the GTK-based alternative to GNOME, has released version 2.2.0. LXQt is in the middle of its transition to Wayland, and as such, this release brings a number of fixes and improvements for Wayland, like improved multi-display support and updated compatibility with Wayland compositors. Beyond all the Wayland work, LXQt Power Management now supports power profiles, text rendering in QTerminal and QTermWidget has been improved, the file manager PC … ⌘ Read more

@movq@www.uninformativ.de That is a good question, I’ve been on v0.17.3 for some time. In the past there has been one scheme update that I remember, and then there was no issue. Maybe this next week I will try out v0.18 and post back.

I really don’t mess with it since it runs from cron, so I tend to forget about it until I need it :-)

Even though I really do like the shell, I always use Dolphin to mount my digicam SD card and copy the photos onto my computer. I finally added a context menu item in Dolphin to create a forest stroll directory with the current date in order to save some typing:

The following goes in ~/.local/share/kservices5/ServiceMenus/galmkdir.desktop:

    [Desktop Entry]
    Type=Service
    X-KDE-ServiceTypes=KonqPopupMenu/Plugin,inode/directory
    Actions=Waldspaziergang;

    [Desktop Action Waldspaziergang]
    Name=Heutigen Waldspaziergang anlegen…
    Icon=folder-green
    Exec=~/src/gelbariab/galmkdir "%f"

In order to update the KDE desktop cache and make this action menu item available in Dolphin, I ran:

    kbuildsycoca5

The referenced galmkdir script looks like this:

    #!/bin/sh
    set -e

    current_dir="$1"
    if [ -z "$current_dir" ]; then
        echo "Usage: $0 DIRECTORY" >&2
        exit 1
    fi

    dir="$(kdialog \
        --geometry 350x50 \
        --title "Heutigen Waldspaziergang anlegen" \
        --inputbox "Neues Verzeichnis in „$current_dir“ anlegen:" \
        "waldspaziergang-$(date +%Y-%m-%d)")"

    mkdir "$current_dir/$dir"
    dolphin "$current_dir/$dir"

This solution is far from perfect, though. Ideally, I’d love to have it in the “Create New” menu instead of the “Actions” menu. But that doesn’t really work. I cannot define a default directory name, not to mention even a dynamic one with the current date. (I would have to update the .desktop file every day or so.) I also failed to create an empty directory. I somehow managed to create a directory with some other templates in it for some reason I do not really understand.

Let’s see how that works out in the next days. If I like it, I might define a few more default directory names.

New version release of twtxt-el!

- Fixed many bugs.

- New back buttons.

- Updated documentation.

I am currently fixing an important bug that breaks the timeline in some cases, and I am working on direct messages.

@lyse@lyse.isobeef.org Just needed to update the version of the tool I packaged as an OCI image 🤣

Amiga OS 3.2 Update 3 released

I’ve long lost the ability to keep track of whatever’s happening in the Amiga community, and personally I tend to just focus on tracking MorphOS and AROS as best I can. The remnants of the real AmigaOS, and especially who owns, maintains, and develops which version, are mired in legal battles and ownership limbo, and since I can think of about a trillion things I’d rather do than keep track of the interpersonal drama by reading various Amiga forums, I honestly didn’t ev … ⌘ Read more

I updated wordwrap.[ch] to more closely match the interface for string(2); it’s now just that plus a margin. I also updated litclock and marquee to match. http://a.9srv.net/src/index.html

Not updated in 7 years, IIS is still a default part of Windows, apparently

This month’s security updates for Windows 11 create a new empty folder on drive C. It is called “inetpub,” and it does not contain any extra folders or files. Its properties window shows 0 bytes in size and that it was created by the system itself. Neowin checked a bunch of Windows 11 PCs with the April 2025 security updates installed, and all of them had inetpub on drive C. ↫ Taras Bu … ⌘ Read more

FreeDOS 1.4 released

With FreeDOS being, well, DOS, you’d think there wasn’t much point in putting out major releases and making big changes, and you’d mostly be right. However, being a DOS clone doesn’t mean there isn’t room for improvement within the confines of the various parts and tools that make up DOS, and that’s exactly where FreeDOS focuses its attention. FreeDOS 1.4 comes about three years after 1.3. This version includes an updated FreeCOM, Install program, and HTML Help system. This also includes i … ⌘ Read more

What’s up with Linux support for Qualcomm X Elite chips?

Remember when Qualcomm promised Linux would be a first-tier platform alongside Windows for its Snapdragon X Elite, almost a year ago now? Well, the Snapdragon X laptops have been out in the market for a while running Windows, but Linux support is still a complete crapshoot, despite the lofty promises by Qualcomm. Tuxedo, a European Linux OEM who promised to ship a Snapdragon X laptop running Linux, has posted an update on … ⌘ Read more

@lyse@lyse.isobeef.org I do agree that “the rules of the web” are far too loose, at least the syntax ones. I do think backwards compatibility is necessary.

As for my website, it might look very similar to how it has looked since its creation many years ago, but it is frequently improved: features that originally used JavaScript changed to HTML and CSS components, code simplified and optimised to withstand browser updates and new screen resolutions, … Even a good chunk of the errors on your list were already addressed, and I plan to address the rest soon.

I just find it a bit depressing that my attempt to bring back some of the old Internet spirit, by making a hidden easter egg page for this year’s April 1st, was met with people complaining about April Fools’ Day jokes and you insinuating my website sucks.

The 32bit RISC OS needs to be ported to 64bit to survive, seeks help

RISC OS, the operating system from the United Kingdom originally designed to run on Acorn Computer’s Archimedes computers – the first ARM computers – is still actively developed today. Especially since the introduction of the Raspberry Pi, new life was breathed into this ageing operating system, and it has gained quite a bit of steady momentum ever since, with tons of small updates, applications, … ⌘ Read more

Project update + 2 significant news stories

Unmanned rocket explodes 40 seconds after launch in Norway during private space mission; Hamas agrees to Gaza ceasefire; Israel counters with own terms. ⌘ Read more

oh my god I am never using a css grid again ;;;; converted the sdv shrine to a flex layout, works much better on mobile now! now to just push that update!! :D

Hmm I think I can come up with some kind of heuristic.. Maybe if the feed is requested and hasn’t updated in the last few mins it adds to the queue. So the next time it will be fresh.

@eapl.me@eapl.me According to an update of the article, others have suggested the same.

Your explanation seems fitting. I just don’t get why people don’t use feed readers anymore. Anyway.

if it hasn’t updated in a while and i put the request rate to once a week, it will take some time before i see an update if one happens today.

I need to figure out a way to back off requests to feeds that don’t update often.
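One possible way to back off, as a minimal sketch: double the polling interval every time a fetch brings nothing new, and drop back to the shortest interval as soon as the feed changes. The function name `next_interval` and the bounds (5 minutes and one week) are assumptions for illustration, not taken from any existing client.

```shell
#!/bin/sh
# Hypothetical bounds in seconds: never poll more often than every
# 5 minutes, never less often than once a week.
MIN_INTERVAL=300
MAX_INTERVAL=604800

# next_interval CURRENT CHANGED
#   CURRENT: interval used before the last fetch, in seconds
#   CHANGED: 1 if the last fetch returned new content, 0 otherwise
# Prints the interval to wait before the next fetch.
next_interval() {
    current="$1"
    changed="$2"
    if [ "$changed" -eq 1 ]; then
        # The feed is active again: return to the shortest interval.
        next="$MIN_INTERVAL"
    else
        # Nothing new: wait twice as long next time.
        next=$((current * 2))
    fi
    # Keep the result inside the configured bounds.
    if [ "$next" -lt "$MIN_INTERVAL" ]; then
        next="$MIN_INTERVAL"
    fi
    if [ "$next" -gt "$MAX_INTERVAL" ]; then
        next="$MAX_INTERVAL"
    fi
    echo "$next"
}

next_interval 300 0      # prints 600
next_interval 86400 1    # prints 300
```

The cap means a quiet feed is still checked at least weekly. It does not remove the delay for a feed that wakes up after a long pause; refreshing a stale feed whenever someone requests it could shorten that.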

@kat@yarn.girlonthemoon.xyz UPDATE I DID IT!!!!!!! you will now see a cute anime girl that is behind the scenes testing if you are a bot or not in a matter of seconds before being redirected to the site :) https://superlove.sayitditto.net/

I have applied your comments, and I tried to add you as an editor but couldn’t find your email address. Please request editing access if you wish.

Also, could you elaborate on how you envision migrating with a script? Do you mean that the client of the file owner could update the URLs in old twts in bulk?

Enlightenment 0.27.1 released

A few months after 0.27.0 was released, we’ve got a small update for Enlightenment today, version 0.27.1. It’s a short list of bugfixes, and one tiny new feature: you can now use the scroll wheel to change the volume when your cursor is hovering over the mixer controls. That’s it. That’s the release. ⌘ Read more

Microsoft accidentally cares about its users, releases update that unintentionally deletes Copilot from Windows

It’s rare in this day and age that proprietary operating system vendors like Microsoft and Apple release updates you’re more than happy to install, but considering even a broken clock is right twice a day, we’ve got one for you today. Microsoft released KB5053598 (OS Build 26100.3476) which “addresses security i … ⌘ Read more

I have released new updates to the twtxt.el client.

- New feature: Notifications.

- Updated: Improved user interface for new posts.

- Updated: Documentation.

- Updated: Some UI elements and included information about shortcuts in each buffer.

- Minor fixes.

Source code: https://codeberg.org/deadblackclover/twtxt-el

In the next version: You will be able to send direct messages.

Enjoy!

#emacs #twtxt #twtxtel

Bit of an update, there is now a general licence for all my stuff:

“Unless projects are accompanied by a different license, Creative Commons apply (“BY-NC-ND” for all art featuring the Canine mascot and “BY-NC” for everything else).”

It’s even included on my website, where most of the demand for a clear licence originated from:

In practice this changes nothing, as I was never enforcing anything more than this anyway and have given permission for other uses too. Now it’s just official that this is the baseline of what can be done without having to ask for permission first.

well (insert stubborn emoji here) 😛, the word blog comes from weblog, and microblogging could derive from ‘smaller weblog’. https://www.wikiwand.com/en/articles/Microblogging

I’d differentiate it from sharing status updates as it was done with ‘finger’ or even a BBS. For example, being able to reply, create new threads and share them via a URL is something we could expect from ‘Twitter’, the most popular microblogging model (citation needed)

I like to discuss it, since conversations usually improve if we sync on what we understand by the same words.

Brother denies using firmware updates to brick printers with third-party ink

Brother laser printers are popular recommendations for people seeking a printer with none of the nonsense. By nonsense, we mean printers suddenly bricking features, like scanning or printing, if users install third-party cartridges. Some printer firms outright block third-party toner and ink, despite customer blowback and lawsuits. Brother’s laser printers have historically worke … ⌘ Read more

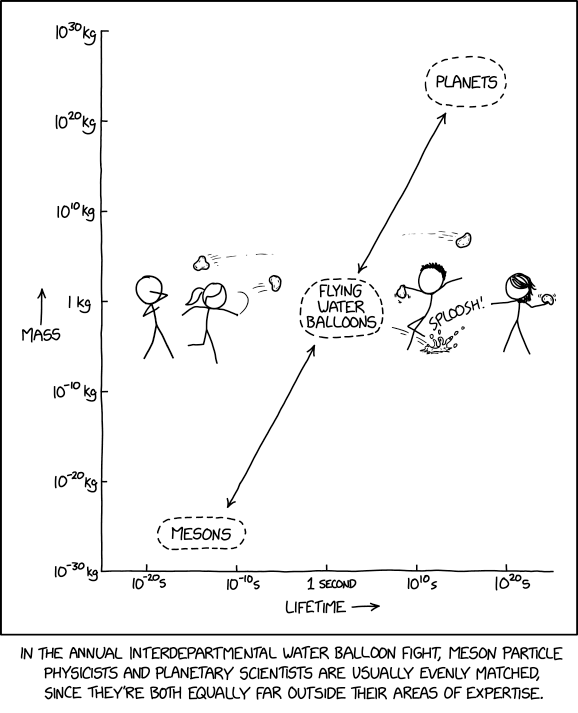

Water Balloons

⌘ Read more


I have released new updates to the twtxt.el client.

- New feature: View and interact with threads.

- Optimisation of ordering for long feeds.

- Minor fixes.

In the next version you will be able to see all your mentions.

Enjoy!

@lyse@lyse.isobeef.org i appreciate you updating this with that info. been in the weeds at work so haven’t been tracking the conversation here much. let me sit on this for a bit because oftentimes the edits are within seconds of first post so maybe i just allow them within a certain time frame or do away with them altogether. i really only do it because it bugs me once i notice the typo :)

@bmallred@staystrong.run I forgot one more effect of edits. If clients remember the read status of messages by hash, an edit will mark the updated message as unread again. To some degree that is even the right behavior, because the message was updated, so the user might want to have a look at the updated version. On the other hand, if it’s just a small typo fix, it’s maybe not worth telling the user about. But the client doesn’t know, at least not without additional logic.

Having said that, it appears that this only affects me personally, no one else. I don’t know of any other client that saves read statuses. But don’t worry about me, all good. Just keep doing what you’ve done so far. I wanted to mention that only for the sake of completeness. :-)
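To illustrate the effect, here is a small sketch of read tracking keyed by a content hash. It uses POSIX `cksum` as a stand-in hash and made-up helper names (`message_id`, `is_read`), and the feed URL is a placeholder. This is not the actual twt hash algorithm, only the same idea: the ID is derived from the message text, so an edit produces a new ID that the read list does not know.

```shell
#!/bin/sh
# message_id FEED_URL TIMESTAMP TEXT
# Stand-in content hash (assumption): CRC from POSIX cksum over the
# three fields. A real client would use its own hash scheme.
message_id() {
    printf '%s\n%s\n%s' "$1" "$2" "$3" | cksum | cut -d ' ' -f 1
}

# is_read READ_FILE ID
# Succeeds if ID appears as a whole line in READ_FILE.
is_read() {
    grep -q -x -F -e "$2" "$1"
}

read_file="$(mktemp)"
url="https://example.com/twtxt.txt"   # placeholder feed URL
ts="2025-04-01T12:00:00Z"

# The user reads the original message, typo included.
message_id "$url" "$ts" "Hello wrold" >> "$read_file"

# Same text: the ID matches, so the message counts as read.
is_read "$read_file" "$(message_id "$url" "$ts" "Hello wrold")" \
    && echo "original: read"

# Edited text: the ID changes, so the message shows up as unread.
is_read "$read_file" "$(message_id "$url" "$ts" "Hello world")" \
    || echo "edited: unread again"

rm -f "$read_file"
```

Telling a typo fix apart from a real change would need the extra logic mentioned above, for example remembering the previous text per feed and timestamp.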

@andros@twtxt.andros.dev Oh, this system has an edit button so I can just update the twt as needed. It’s a custom implementation so I just kind of threw it in when I was building it out.

A love letter to Void Linux

I installed Void on my current laptop on the 10th of December 2021, and there has never been any reinstall. The distro is absurdly stable. It’s a rolling release, and yet, the worst update I had in those years was one time, GTK 4 apps took a little longer to open on GNOME. Which was reverted after a few hours. Not only that, I sometimes spent months without any update, and yet, whenever I did update, absolutely nothing went wrong. Granted, I pretty much only did full upgrades … ⌘ Read more

Mozilla is going to collect a lot more data from Firefox users

I guess my praise for Mozilla’s and Firefox’ continued support for Manifest v2 had to be balanced out by Mozilla doing something stupid. Mozilla just published Terms of Use for Firefox for the first time, as well as an updated Privacy Notice, that come into effect immediately and include some questionable terms. The Terms of Use state: When you upload or input information through Firefox, you hereby grant u … ⌘ Read more

I have released new updates to the twtxt.el client.

- Markdown to Org mode (you need to install Pandoc).

- Centred column.

- Added new logo.

- Added text helper.

In the next version I will try to finish the visual thread. You can’t see the thread yet.

#emacs #twtxt #twtxtel

Qualcomm gives OEMs the option of 8 years of Android updates

Starting with Android smartphones running on the Snapdragon 8 Elite Mobile Platform, Qualcomm Technologies now offers device manufacturers the ability to provide support for up to eight consecutive years of Android software and security updates. Smartphones launching on new Snapdragon 8 and 7-series mobile platforms will also be eligible to receive this extended support. ↫ Mike Genewich I mean, good news of cou … ⌘ Read more

I suspect the problem is that the content is updated. It looks like a design problem.

@aelaraji@aelaraji.com You can update the package 😀

@eapl.me@eapl.me Yeah, you need some kind of storage for that. But chances are that there’s already a cache in place. Ideally, the client remembers etags or last modified timestamps in order to reduce unnecessary network traffic when fetching feeds over HTTP(S).
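As a rough sketch of that idea, a client could keep the `ETag` and `Last-Modified` values from the previous response and send them back as `If-None-Match` and `If-Modified-Since`. A server that supports conditional requests can then answer 304 Not Modified without a body. The helper names below are hypothetical and the cached values are made up.

```shell
#!/bin/sh
# conditional_headers ETAG LAST_MODIFIED
# Prints one request header per line for each validator that is cached.
# Pass an empty string for a validator the client does not have.
conditional_headers() {
    etag="$1"
    last_modified="$2"
    if [ -n "$etag" ]; then
        printf 'If-None-Match: %s\n' "$etag"
    fi
    if [ -n "$last_modified" ]; then
        printf 'If-Modified-Since: %s\n' "$last_modified"
    fi
}

# needs_processing STATUS
# Succeeds when the response carries a body to parse; fails on
# 304 Not Modified, where the cached copy is still current.
needs_processing() {
    [ "$1" != "304" ]
}

# Example with made-up cached values; prints both headers.
conditional_headers '"abc123"' 'Tue, 01 Apr 2025 12:00:00 GMT'

if needs_processing 304; then
    echo "parse new feed content"
else
    echo "feed unchanged, keep cache"   # this branch runs
fi
```

The printed headers could be handed to an HTTP client, for example through curl's `-H` option, with the status code read back via `-w '%{http_code}'`.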

A newsreader without read flags would be totally useless to me. But I also do not subscribe to fire hose feeds, so maybe that’s a different story with these. I don’t know.

To me, filtering read messages out and only showing new messages is the obvious solution. No need for notifications in my opinion.

There are different approaches with read flags. Personally, I like to explicitly mark messages read or unread. This way, I can think about something and easily come back later to reply. Of course, marking messages read could also happen automatically. All decent mail clients I’ve used in my life offered even more advanced features, like delayed automatic marking.

All I can say is that I’ve been super happy with that for years. It works absolutely great for me. The only downside is that I see heaps of years-old messages flagged as new when a bug causes a feed to be incorrectly updated (https://twtxt.net/twt/tnsuifa). ;-)

Redox’ relibc becomes a stable ABI

The Redox project has posted its usual monthly update, and this time, we’ve got a major milestone creeping within reach. Thanks to Anhad Singh for his amazing work on Dynamic Linking! In this southern-hemisphere-Redox-Summer-of-Code project, Anhad has implemented dynamic linking as the default build method for many recipes, and all new porting can use dynamic linking with relatively little effort. This is a huge step forward for Redox, because relibc can now beco … ⌘ Read more

@andros@twtxt.andros.dev Awesome! I’ve seen the demo earlier on mastodon, things are getting better and better with each update 👌 Good luck!

GTK announces X11 deprecation, new Android backend, and much more

Since a number of GTK developers came together at FOSDEM, the project figured now was as good a time as any to give an update on what’s coming in GTK. First, GTK is implementing some hard cut-offs for old platforms – Windows 10 and macOS 10.15 are now the oldest supported versions, which will make development quite a bit easier and will simplify several parts of the codebase. Windows 10 was released in 2 … ⌘ Read more

Today at work we’re rolling out a big update for the CMS of the central websites. Hopefully it all goes well. 😱

Ahh yes, what I like to call “wild wild west” upgrading.😂

Felt like that when I upgraded/updated an Arch Linux machine that had been sitting for a couple years unused.

New human-like species discovered in China + 3 more stories

Scientists propose a new human-like species based on ancient fossils; oceans warm four times faster than in the 1980s; researchers recreate endosymbiosis significantly in the lab; CIA updates its Covid-19 origins assessment, hinting at a lab leak. ⌘ Read more

Here’s a twt from @andros@twtxt.andros.dev ’s new version of Twtxt-el 🥳 It feels WAaaaaY better! although it freezes on me as soon as I navigate to the next page complaining about some bad url, but the chronological sorting of the feed as well as the navigation buttons (links?) are a great addition. Looking forward to the next update already! 😁 🥳🥳🥳