wahhh i wanna work towards my dream of offering pay as you can web hosting (static & dynamic) but i don’t know how!!!!! i keep drifting towards hosting panels but i don’t exactly have fresh linux servers for those nor do i like the level of access they require. so i’m like ok i can do the static site part with SFTP chroot jails and a front-end like filebrowser or something…. but then what about the dynamic sites!!!!!!! UGH

granted i doubt i’d get much interest in dynamic sites but i’d like to do this old school where i can offer people isolated mySQL databases or something for some project (i’m thinking PHP based fanlistings), which means i could do it the old school way of… people ask me to run it and i do it for them. but i kind of want to let people have access to be able to do it themselves just short of giving them SSH access which isn’t happening

I made an Emacs theme with contrast suited to colour-blind or visually impaired people.

https://github.com/tanrax/thankful-eyes-theme.el

Enjoy!

#emacs #accessibility

Short summary of Project2025 and Trump’s plans for the US:

Abolish the Federal Reserve

Why? To end what is seen as an unelected, centralized body that exerts too much influence over the economy and monetary policy, replacing it with a more transparent, market-driven approach.

Implement a national consumption tax

Why? To replace the current federal income tax system, simplify taxation, and increase government revenue through a broader base that includes all consumers.

Lower corporate tax rates

Why? To promote business growth, increase investment, and stimulate job creation by reducing the financial burden on companies.

Deregulate environmental policies

Why? To reduce government intervention in the economy, particularly in energy and natural resources sectors, and to foster a more business-friendly environment.

Restrict abortion access

Why? To align with conservative pro-life values and overturn or limit abortion rights, seeking to restrict the practice at a federal level.

Dismantle LGBTQ+ protections

Why? To roll back protections viewed as promoting LGBTQ+ rights in areas like employment and education, in line with traditional family values.

Eliminate diversity, equity, and inclusion (DEI) programs

Why? To end policies that are seen as divisive and to promote a merit-based system that prioritizes individual achievements over group identity.

Enforce stricter immigration policies, including mass deportations and detentions

Why? To prioritize border security, reduce illegal immigration, and enforce existing laws more aggressively, as part of a broader strategy to safeguard U.S. sovereignty.

Eliminate the Department of Education

Why? To reduce federal control over education and shift responsibilities back to local governments and private sectors, arguing that education decisions should be made closer to the community level.

Restructure the Department of Justice

Why? To ensure the department aligns more closely with the administration’s priorities, potentially reducing its scope or focus on areas like civil rights in favor of law-and-order policies.

Appoint political loyalists to key federal positions

Why? To ensure that government agencies are headed by individuals who are committed to advancing the administration’s policies, and to reduce the influence of career bureaucrats.

Develop training programs for appointees to execute reforms effectively

Why? To ensure that political appointees are equipped with the knowledge and skills necessary to implement the proposed changes quickly and effectively.

Provide a 180-day transition plan with immediate executive orders

Why? To ensure that the incoming administration can swiftly implement its agenda and make major changes early in its term without delay.

Do y’all agree with any/all/some of these policies? Hmmm 🤔

@prologic@twtxt.net I’m speculating, but if I had to guess I’d say it’s probably asking for your user password in order to access some user keyring (or whatever your OS uses to manage user secret credentials) used to safely store your passkeys related data in order to do its passkeys /ME doing air quotes Magic™ … you could try with a different password manager to avoid said scenario.

Also, passkeys UX sucks.

ArcaOS 5.1.1 released

It’s been two years since the release of ArcaOS 5.1, which was a hugely important release because it brought UEFI support to this continuation of IBM’s OS/2, ensuring longevity for the project for years to come. Since I don’t think much is known about what, exactly, Arca Noae, and eComStation before it, has access to within the licensing agreement with IBM, it’s difficult to ascertain just how much room they actually have to make changes to the code at the core of the old OS/2. Regardles … ⌘ Read more

Yesterday I was doing a lot of research on how #hyperdrive and the #holepunch project work. Would it be possible to use it to make #twtxt an easier gateway for new users? Could we stop using web servers?

My conclusion: We would end up being a #nostr. On the one hand it would become more complex to use, it would force the user to have software installed, and on the other hand the community would need a central proxy to make the routes accessible via HTTP. In other words, it’s not a good idea.

However, it’s an AMAZING technology. I want to start playing with it.

I’m continuing my tt rewrite in Go and quickly implemented a stack widget for tview. The builtin Pages is similar but way too complicated for my use case. I would have to specify a mandatory name and some additional options for each page. Also, it allows me to randomly jump around between pages using names, but only gives me direct access to the first page, not the last one. Weird. I don’t wanna remember names. All I really need is a classic stack. You open a new fullscreen dialog and maybe another one on top of that. Closing the uppermost one brings you back to the previous one and so on.
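The classic stack described in this post can be sketched in a few lines. This is a language-agnostic illustration written in Python, not the tview widget from the rewrite; `DialogStack`, `push`, `pop`, and `top` are hypothetical names, and the dialogs are stand-in strings.

```python
class DialogStack:
    """A minimal stack of fullscreen dialogs; only the topmost one is visible."""

    def __init__(self):
        self._dialogs = []

    def push(self, dialog):
        """Open a new dialog on top of the current one."""
        self._dialogs.append(dialog)

    def pop(self):
        """Close the topmost dialog and return the one that becomes visible (or None)."""
        if self._dialogs:
            self._dialogs.pop()
        return self.top()

    def top(self):
        """Return the currently visible dialog, or None if the stack is empty."""
        return self._dialogs[-1] if self._dialogs else None


stack = DialogStack()
stack.push("timeline")
stack.push("raw message text")
stack.push("URL view")
visible_after_close = stack.pop()  # closing "URL view" reveals "raw message text"
```

No names are needed to navigate: the only operations are opening a dialog on top and closing the uppermost one.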

The very first dialog I added is viewing the raw message text. Unlike in @arne@uplegger.eu’s TwtxtReader, I’m not able to include the original timestamp, though. I don’t have it in its original form in the database. :-/

Next up is a URL view.

It’s really cool how my local public library’s membership includes digital access to thousands of magazines and newspapers.

@sorenpeter@darch.dk It depends on your requirements. If you just want to put your code somewhere for yourself, simply push it over SSH on a server and call it good. That’s what I do with lots of repos. If you want an additional web UI for read access for the public, cgit comes to mind (a mate uses that). Prologic runs Gitea, which offers heaps more functionality like merge requests.

@lyse@lyse.isobeef.org The one in question is more like the javascript version for unwrapping errors when accessing methods.

const value = some?.deeply?.nested?.object?.value

but for handling errors returned by methods. So if you wanted to chain a bunch of function calls together and return immediately if any of them errors, it would be something like this:

b:= SomeAPIWithErrorsInAllCalls()

b.DoThing1() ?

b.DoThing2() ?

// Though it’s not in the threads, I assume one could chain like this.

b.Chain1()?.Chain2()?.End()?

I am however in favor of having a sort of ternary ? in Go.

PS. @prologic@twtxt.net for some reason this is eating my response without throwing an error :( I assume it has something to do with the CSRF. Can i not have multiple tabs open with yarn?

OpenAI Says It Has Evidence DeepSeek Used Its Model To Train Competitor

OpenAI says it has evidence suggesting Chinese AI startup DeepSeek used its proprietary models to train a competing open-source system through “distillation,” a technique where smaller models learn from larger ones’ outputs.

The San Francisco-based company, along with partner Microsoft, blocked suspected DeepSeek accounts from accessing … ⌘ Read more

SDL 3.2.0 released

SDL, the Simple DirectMedia Layer, has released version 3.2.0 of its development library. In case you don’t know what SDL is: Simple DirectMedia Layer is a cross-platform development library designed to provide low level access to audio, keyboard, mouse, joystick, and graphics hardware via OpenGL and Direct3D. It is used by video playback software, emulators, and popular games including Valve’s award winning catalog and many Humble Bundle games. ↫ SDL website This new release has a lot of impr … ⌘ Read more

@movq, @prologic@twtxt.net when navigating to a Yarn, if the head twt is missing then the whole thread is not accessible. It only returns an error, so i have no way to view any of the replies within the thread other than the end twt.

Right to root access

I believe consumers, as a right, should be able to install software of their choosing to any computing device that is owned outright. This should apply regardless of the computer’s form factor. In addition to traditional computing devices like PCs and laptops, this right should apply to devices like mobile phones, “smart home” appliances, and even industrial equipment like tractors. In 2025, we’re ultra-connected via a network of devices we do not have full control over. Much of this has t … ⌘ Read more

@kat@yarn.girlonthemoon.xyz Only scp/rsync for me. :-) But I remember there is one server that only provides SFTP access. :-/

I mean the bug where jenny doesn’t know about these IDs and tries to request them from twtxt.net (prologic sent access logs)

How in da fuq do you actually make these fucking useless AI bots go away?

proxy-1:~# jq '. | select(.request.remote_ip=="4.227.36.76")' /var/log/caddy/access/mills.io.log | jq -s '. | last' | caddy-log-formatter -

4.227.36.76 - [2025-01-05 04:05:43.971 +0000] "GET /external?aff-QNAXWV=&f=mediaonly&f=noreplies&nick=g1n&uri=https%3A%2F%2Fmy-hero-ultra-impact-codes.linegames.org HTTP/2.0" 0 0

proxy-1:~# date

Sun Jan 5 04:05:49 UTC 2025

😱

I just banned 41 bad user agents from accessing any of my services. 😱

@kat@yarn.girlonthemoon.xyz i also like the separation inherent with using dedicated devices. like i have a DAP, a fiio X1 ii from 2019, and it’s still going strong. it’s perfect for on the go music listening and i never have to worry about like going somewhere with no reception and the music drops out. it’s all local AND the battery lasts longer because i’m not using wi-fi or bluetooth or data. also i can directly access the file system and just add files anytime. this goes for my point & shoot and other devices too. i love this shit i’m such a nerd

Once again I glimpsed at my twtxt feed access log. Now I’m wondering: is there a twtxt client named xt out there? Does anyone know? I did not find anything for “xt/0.0.1”.

@emmanuel@wald.ovh Btw I already figured out why accessing your web server is slow:

$ host wald.ovh

wald.ovh has address 86.243.228.45

wald.ovh has address 90.19.202.229

wald.ovh has 2 IPv4 addresses, one of which is dead and doesn’t respond. That’s why accessing your website is so slow. Depending on client and browser behavior, one of two things may happen: 1) a random IP is chosen and ½ the time the wrong one is picked, or 2) both are tried in some random order and ½ the time it’s slow because the broken one is tried first.

If you don’t know what 86.243.228.45 is, or it’s a dead backup server or something, I’d suggest you remove this from the domain record.
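A check like this can be scripted. The following is a minimal sketch, assuming the site is expected to answer on TCP port 443; the function names and the 3-second timeout are illustrative choices, not something taken from the posts above.

```python
import socket


def resolve_ipv4(hostname, port=443):
    """Return every distinct IPv4 address the resolver reports for hostname."""
    infos = socket.getaddrinfo(hostname, port, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def tcp_connects(address, port=443, timeout=3.0):
    """Return True if a TCP connection to address:port succeeds within timeout."""
    try:
        with socket.create_connection((address, port), timeout=timeout):
            return True
    except OSError:
        return False


def find_dead_addresses(addresses, port=443, probe=tcp_connects):
    """Return the addresses for which the probe fails."""
    return [address for address in addresses if not probe(address, port)]
```

Calling `find_dead_addresses(resolve_ipv4("wald.ovh"))` would list the addresses that do not accept a connection. The `probe` parameter exists only so the logic can be exercised without touching the network.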

@emmanuel@wald.ovh It is working! I’ve just noticed your feed link in my access.log and came by to say Hello! 👋 just give it a minute and others will notice your feed as well.

@sorenpeter@darch.dk @bender@twtxt.net @prologic@twtxt.net Right. Also, generally speaking, if you come across a new feed URL, it’s probably either via some mention in another feed or the User-Agent in your access log. Both cases typically also advertise a display name. So, you just reuse whatever you’ve seen there.

China launches first batch of internet satellites + 2 more stories

China launches first 10 Guowang satellites for internet access; NASA’s Webb telescope challenges planet formation theories; NATO takes over coordination of Ukraine military aid. ⌘ Read more

Getting my knowledge refreshed on web accessibility through a course on deque university.

Thank you, @movq@www.uninformativ.de! Luckily, I can disable it. I also tried it, no luck, though. But the problem is, I don’t really know how much snakeoil actually runs on my machine. There is definitely a ClownStrike infestation, I stopped the falcon sensor. But there might be even more, I’ve no idea. From the vague answers I got last time, it feels like even the UHD/IT guys don’t know what is in use. O_o

Yeah, it is definitely something on my laptop that rejects connections to IPv4 ports 80 and 443. All other devices here can access the stuff without issue, only this work machine is unable to. The “Connection refused” happens within a few milliseconds.

Unfortunately, I do not have the slightest idea how it works. But maybe I can look into that tomorrow. Kernel modules are a very good hint, thank you! <3

You’re right, it might be some sort of fail-safe mechanism. But then, why just block IPv4 and not also IPv6? But maybe because the VPN and company servers require IPv4, there is zero IPv6 support. (Yeah, don’t ask, I don’t understand it either.)

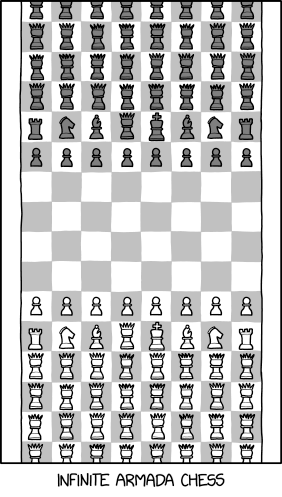

Infinite Armada Chess

⌘ Read more

# nick = skinshafi so... should I scream buuug ? 🤔

@prologic@twtxt.net Twtxt wise, it was kind of disparate at first xD with no access to logs as you may have read on the alt-feed itself. But then, @sorenpeter@darch.dk’s script came to the rescue … like, just in time 😁 Otherwise, everything else is fun as publicised, exploring and learning along the way.

Yes, it works: 2024-12-01T19:38:35Z twtxt/1.2.3 (+https://eapl.mx/twtxt.txt; @eapl) :D

The .log is just a simple append of each request. The idea with the .csv is to have it tally up how many requests there have been from each client as a way to avoid having the log file grow too big. And you can open the .csv as a spreadsheet and have an easy overview and filtering options.

Access to those files is closed to the public.
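The tally idea can be sketched as follows. This is an illustration in Python, not the script being discussed; it assumes each log line has the form `<timestamp> <user agent>` as in the example line above, and the CSV column names are made up.

```python
import csv
import io
from collections import Counter


def tally_clients(log_lines):
    """Count requests per client; each line is assumed to be '<timestamp> <user agent>'."""
    counts = Counter()
    for line in log_lines:
        line = line.strip()
        if not line:
            continue
        _timestamp, _, user_agent = line.partition(" ")
        counts[user_agent] += 1
    return counts


def write_tally_csv(counts, out):
    """Write one row per client, most frequent first."""
    writer = csv.writer(out)
    writer.writerow(["client", "requests"])
    for client, request_count in counts.most_common():
        writer.writerow([client, request_count])


# Illustrative sample data; only the first line appears in the thread.
sample_log = [
    "2024-12-01T19:38:35Z twtxt/1.2.3 (+https://eapl.mx/twtxt.txt; @eapl)",
    "2024-12-01T19:43:35Z twtxt/1.2.3 (+https://eapl.mx/twtxt.txt; @eapl)",
    "2024-12-01T19:40:00Z example-client/0.1",  # hypothetical second client
]
counts = tally_clients(sample_log)
buffer = io.StringIO()
write_tally_csv(counts, buffer)
```

Because the CSV holds one row per client rather than one per request, it stays small however long the raw log gets.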

@lyse@lyse.isobeef.org One person had come across it before, but no one tried it

I’ve just unlocked access to my /timeline if anyone has been trying to access it earlier.

for example, ejabberd, redka, and litefs. all using sqlite+litefs for their database needs allows agents to communicate over xmpp, matrix, mqtt, and sip. other applications can use sqlite for storage or speak the redis protocol to redka. ejabberd can also handle file uploads, static file publishing, identity, and various other web application services. when scaling, litefs integrates with consul to manage replication which grants the network access to service disco, encrypted mesh networking, and various other features that can be used to build secure service grids. ejabberd and redka can be scaled to multiple nodes that coordinate over the litefs replication protocol without any changes to the db storage config. other components can be configured to plug into this framework fairly easily as well. we keep the network config fairly simple by linking nodes together with yggdrasil to flatten the address space and then linking app nodes together using consul to provide secure routing for the local grid service. yggdrasil also offers utility for building federated networks in a similarly flat address space, for more secure communications i2p is also available in yggdrasil mode. minibase is wonderful, and we have not even started to talk about secure IoT.

Righto, @eapl.me@eapl.me, ta for the writeup. Here we go. :-)

Metadata on individual twts are too much for me. I do like the simplicity of the current spec. But I understand where you’re coming from.

Numbering twts in a feed is basically the attempt of generating message IDs. It’s an interesting idea, but I reckon it is not even needed. I’d simply use location based addressing (feed URL + ‘#’ + timestamp) instead of content addressing. If one really wanted to, one could hash the feed URL and timestamp, but the raw form would actually improve discoverability and would not even require a richer client. But the majority of twtxt users in the last poll wanted to stick with content addressing.
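A minimal sketch of the location-based addressing suggested here. The feed URL is a placeholder, and the hashed variant (SHA-256 truncated to 12 hex characters) is purely an assumption for illustration; no hash function or length is specified in the post.

```python
import hashlib


def location_id(feed_url, timestamp):
    """Location-based address of a twt: feed URL + '#' + timestamp."""
    return f"{feed_url}#{timestamp}"


def hashed_location_id(feed_url, timestamp, length=12):
    """Optional short form: truncated SHA-256 hex digest of the location-based address.

    The hash function and the length are assumptions, not taken from any spec.
    """
    digest = hashlib.sha256(location_id(feed_url, timestamp).encode("utf-8")).hexdigest()
    return digest[:length]


raw = location_id("https://example.com/twtxt.txt", "2024-12-01T19:38:35Z")
short = hashed_location_id("https://example.com/twtxt.txt", "2024-12-01T19:38:35Z")
```

The raw form shows where to fetch the referenced twt, which is the discoverability argument made above; the hashed form hides that information.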

yarnd actually sends If-Modified-Since request headers. Not only can I observe heaps of 304 responses for yarnds in my access log, but in Cache.FetchFeeds(…) we can actually see If-Modified-Since being deployed when the feed has been retrieved with a Last-Modified response header before: https://git.mills.io/yarnsocial/yarn/src/commit/98eee5124ae425deb825fb5f8788a0773ec5bdd0/internal/cache.go#L1278

Turns out etags with If-None-Match are only supported when yarnd serves avatars (https://git.mills.io/yarnsocial/yarn/src/commit/98eee5124ae425deb825fb5f8788a0773ec5bdd0/internal/handlers.go#L158) and media uploads (https://git.mills.io/yarnsocial/yarn/src/commit/98eee5124ae425deb825fb5f8788a0773ec5bdd0/internal/media_handlers.go#L71). However, it ignores possible etags when fetching feeds.
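For readers unfamiliar with the two mechanisms, here is a sketch of how a client could build conditional request headers from a cached response. This is not yarnd's code; the `cached` dictionary and both function names are hypothetical.

```python
def conditional_headers(cached):
    """Build request headers for revalidating a cached feed.

    `cached` is a dict that may hold the 'Last-Modified' and 'ETag' values
    taken from the previous response for this feed.
    """
    headers = {}
    if cached.get("Last-Modified"):
        headers["If-Modified-Since"] = cached["Last-Modified"]
    if cached.get("ETag"):
        headers["If-None-Match"] = cached["ETag"]
    return headers


def feed_changed(status_code):
    """A 304 Not Modified response means the cached copy is still current."""
    return status_code != 304
```

A client that sends both headers lets the server answer with 304 whichever validator it supports.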

I don’t understand how the discovery URLs should work to replace the User-Agent header in HTTP(S) requests. Would you mind elaborating?

Different protocols are basically just a client thing.

I reckon it’s best to just avoid mixing several languages in one feed in the first place. Personally, I find it okay to occasionally write messages in other languages, but if that happens on a more regular basis, I’d definitely create a different feed for other languages.

Isn’t the emoji thing “just” a client feature? So, feeds do not even have to state any emojis. As a user I’d configure my client to use a certain symbol for feed ABC. Currently, I can do a similar thing in tt where I assign colors to feeds. On the other hand, what if a user wants to control what symbol should be displayed, similar to the feed’s nick? Hmm. But still, my terminal font doesn’t even render most emojis. So, Unicode boxes everywhere. This makes me think it should actually be a client-only feature.

Been curious to see if I can filter my access.log file and output a list of my twtxt followers just in case I’ve missed someone … I came up with this awk -F '\"' '/twtxt/ {print $(NF-1)}' /var/log/user.log | grep -v 'twtxt\.net' | sort -u | awk '{print $(NF-1) $NF}' | awk '/^\(/' spaghetti monster of a command and I’m wondering if there’s a more elegant way for achieving the same thing.
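One possible alternative to the pipeline is a single regular expression over the log lines. The sketch below is in Python rather than awk and assumes followers announce themselves with a User-Agent of the form `name/version (+<feed URL>; @<nick>)`, as seen elsewhere in this timeline; the function name and the sample log lines are invented for illustration.

```python
import re

# Matches the "(+<feed URL>; @<nick>)" part of a twtxt User-Agent such as
# "twtxt/1.2.3 (+https://eapl.mx/twtxt.txt; @eapl)".
FOLLOWER_RE = re.compile(r"\(\+(?P<url>\S+);\s*@(?P<nick>[^)\s]+)\)")


def followers_from_log(lines, exclude="twtxt.net"):
    """Return sorted unique (nick, url) pairs found in access log lines.

    Lines containing `exclude` are skipped, mirroring the grep -v step.
    """
    found = set()
    for line in lines:
        if exclude and exclude in line:
            continue
        for match in FOLLOWER_RE.finditer(line):
            found.add((match.group("nick"), match.group("url")))
    return sorted(found)


# Hypothetical log lines in combined log format.
sample = [
    '203.0.113.7 - - [01/Dec/2024:19:38:35 +0000] "GET /twtxt.txt HTTP/1.1" 200 1234 "-" "twtxt/1.2.3 (+https://eapl.mx/twtxt.txt; @eapl)"',
    '203.0.113.7 - - [01/Dec/2024:19:43:35 +0000] "GET /twtxt.txt HTTP/1.1" 304 0 "-" "twtxt/1.2.3 (+https://eapl.mx/twtxt.txt; @eapl)"',
    '203.0.113.9 - - [01/Dec/2024:19:50:00 +0000] "GET /twtxt.txt HTTP/1.1" 200 1234 "-" "example-client/1.0 (+https://twtxt.net/user/someone/twtxt.txt; @someone)"',
]
followers = followers_from_log(sample)
```

Reading `/var/log/user.log` would then be a matter of passing the open file object as `lines`.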

@sorenpeter@darch.dk I’ve been using weechat for a while then when I started learning my way around Emacs I switched to Circe … a couple months later I setup ZNC, rolled with it for some time but wasn’t sure if I wanted to stick with it. Now I’m mainly using TheLounge and do find it convenient accessing it from anywhere. but quite honestly, I don’t have a preference.

I mean sure if i want to run it over on my tooth brush why not use something that is accessible everywhere like md5? crc32? It was chosen a long while back and the only benefit in changing now is “i cant find an implementation for x” when the down side is it breaks all existing threads. so…

Did Apple Just Kill Social Apps?

Apple’s iOS 18 update has introduced changes to contact sharing that could significantly impact social app developers. The new feature allows users to selectively share contacts with apps, rather than granting access to their entire address book. While Apple touts this as a privacy enhancement, developers warn it may hinder the growth of new social platforms. Nikita Bier, a start-up founder, called it “the en … ⌘ Read more

Been curious about how people on Pubnix instances do manage their feed, if they have access to log? Sent in a req to join one still no res.

@doesnm@doesnm.p.psf.lt Got a sample access log? Which tool are you using?

how to parse caddy access log with useragent tool? seems it doesn’t detect anything in json
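A tool that expects plain-text logs will not find the User-Agent inside JSON lines, so one approach is to extract it first. This sketch assumes one JSON object per line with the header stored as a list under `request.headers["User-Agent"]`; that layout is an assumption to verify against the actual log, and the sample entry is invented.

```python
import json


def user_agents_from_caddy_log(lines):
    """Yield the User-Agent of each entry in a JSON access log.

    Assumes one JSON object per line, with the header stored as a list
    under request.headers["User-Agent"].
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not valid JSON
        if not isinstance(entry, dict):
            continue
        headers = entry.get("request", {}).get("headers", {})
        for value in headers.get("User-Agent", []):
            yield value


# Hypothetical log entry followed by a non-JSON line.
sample = [
    '{"request": {"remote_ip": "203.0.113.7", "headers": {"User-Agent": ["twtxt/1.2.3 (+https://eapl.mx/twtxt.txt; @eapl)"]}}}',
    "not json",
]
agents = list(user_agents_from_caddy_log(sample))
```

The extracted strings can then be written one per line and fed to whatever user-agent tool is in use.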

@xuu@txt.sour.is I think it is more tricky than that.

“A company or entity …”

Also, as I understand it, “personal or household activity” (as you called it) is rather strict: An example could be you uploading photos to a webspace behind HTTP basic auth and sending that link to a friend. So, yes, a webserver is involved and you process your friend’s data (e.g., when did he access your files), but it’s just between you and him. But if you were to publish these photos publicly on a webserver that anyone can access, then it’s a different story – even though you could say that “this is just my personal hobby, not related to any job or money”.

If you operate a public Yarn pod and if you accept registrations from other users, then I’m pretty sure the GDPR applies. 🤔 You process personal data and you don’t really know these people. It’s not a personal/private thing anymore.

HTTPS is supposed to do [verification] anyway.

TLS provides verification that nobody is tampering with or snooping on your connection to a server. It doesn’t, for example, verify that a file downloaded from server A is from the same entity as the one from server B.

I was confused by this response for a while, but now I think I understand what you’re getting at. You are pointing out that with signed feeds, I can verify the authenticity of a feed without accessing the original server, whereas with HTTPS I can’t verify a feed unless I download it myself from the origin server. Is that right?

I.e. if the HTTPS origin server is online and I don’t mind taking the time and bandwidth to contact it, then perhaps signed feeds offer no advantage, but if the origin server might not be online, or I want to download a big archive of lots of feeds at once without contacting each server individually, then I need signed feeds.

feed locations [being] URLs gives some flexibility

It does give flexibility, but perhaps we should have made them URIs instead for even more flexibility. Then, you could use a tag URI, urn:uuid:*, or a regular old URL if you wanted to. The spec seems to indicate that the url tag should be a working URL that clients can use to find a copy of the feed, optionally at multiple locations.

I’m not very familiar with IP{F,N}S but if it ensures you own an identifier forever and that identifier points to a current copy of your feed, it could be a great way to fix it on an individual basis without breaking any specs :)

I’m also not very familiar with IPFS or IPNS.

I haven’t been following the other twts about signatures carefully. I just hope whatever you smart people come up with will be backwards-compatible so it still works if I’m too lazy to change how I publish my feed :-)

@sorenpeter@darch.dk !! I freaking love your Timeline … I kind of have a justified PHP phobia 😅 but, I’m definitely thinking about giving it a try!

/ME wondering if it’s possible to use it locally just to read and manage my feed at first and then maybe make it publicly accessible later.

@bender@twtxt.net and I saw some conspiracy theory that he knew he was going to be arrested. He was working with French intelligence on a plea deal to defect. And now Russia is freaking out that Ukraine allies can have war comms access.

Yikes! If only they had salty.im!

New in beta on https://3r1c.net: a “smartphone” TXT version of each article, accessible via the [M] link.

@prologic@twtxt.net Remember when we used to lose access to e-mail, IM and forum accounts after 30 days of inactivity? 😂 … Then storage became cheaper and companies figured out that any tiny bit of someone’s data is worth something to someone(thing) else. 🥲

@prologic@twtxt.net @lyse@lyse.isobeef.org I checked my logs and all I see are 304 responses and a couple of delayed requests here and there due to rate limiting, but not that many. I’ll disable it (the rate limiting) for a couple of days, let me know if you still get the ‘forbidden access’ thing 🫣 I may have effed up my configuration trying to deal with some weird stuff.

@prologic@twtxt.net Yes I suppose that is true. There is an article on Tailscale’s site that explains it all quite a bit: https://tailscale.com/blog/how-nat-traversal-works

To me, with CGNAT, it’s a small miracle that a direct connection can be made between peers (as opposed to going through a relay constantly) but it does indeed work. I guess to host it at home you would need to have it WAN accessible, and if you’ve already gone to the trouble of port forwarding etc… well 😅

Not that I could personally do that, but for those with static IPs etc.

I admit I’ve always compromised on this way too much myself, always to this day having Facebook Messenger just to communicate in my family’s group chats. Sure I run it in a Work profile on my GrapheneOS phone that I can switch off at any time, I can completely cut it off from network access any time as well, I can have a lot of rudimentary control over it, I use it as sparingly as possible, but it doesn’t change the fact every time I use it we’re funneling private convos through bloody Meta’s servers and trackers etc.

Microsoft Outage Hits Users Worldwide, Leading To Canceled Flights

Microsoft grappled with a major service outage, leaving users across the world unable to access its cloud computing platforms and causing airlines to cancel flights. From a report: Thousands of users across the world reported problems with Microsoft 365 apps and services to Downdetector.com, a website that tracks service disruptions. “We’re inve … ⌘ Read more