If NICK = DOMAIN then only show @DOMAIN

So instead of @eapl.me@eapl.me it will just be @eapl.me
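
A minimal sketch of that rule in Python, just to make it concrete. The function name `display_handle` is made up for illustration and is not taken from any existing client:

```python
def display_handle(nick: str, domain: str) -> str:
    """Return the short form @DOMAIN when the nick simply repeats the domain."""
    # Compare case-insensitively, since domain names are case-insensitive.
    if nick.lower() == domain.lower():
        return f"@{domain}"
    return f"@{nick}@{domain}"


print(display_handle("eapl.me", "eapl.me"))            # @eapl.me
print(display_handle("podcast", "mordiscos.eapl.me"))  # @podcast@mordiscos.eapl.me
```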

I’m just having a similar issue with a podcast I just uploaded on Castopod (which supports ActivityPub).

My first thought was creating a subdomain with the name of the podcast mordiscos.eapl.me

Then I saw that the software allows many podcasts in the same domain, so I had to pick a handle:

https://mordiscos.eapl.me/@podcast

So now I have @podcast@mordiscos.eapl.me when this one is ‘more correct’ @mordiscos@podcast.eapl.me or it could even be @mordiscos.eapl.me

I wasn’t aware of all that when I set up Castopod (the documentation could improve a lot, IMO)

My point here is that it’s something important to think about from the start; otherwise it’s painful to change once it’s already being used like that.

Two things I’ve learned over the many years (approaching a decade and a half now) of self-hosting: 1) There are many “assholes” on the open Internet that will either attack your stuff or are incompetent and write stupid shit™ that goes crazy on your stuff. 2) You have to be careful about resources, especially memory and disk I/O. Especially disk I/O. It can kill your overall performance when software you either wrote yourself or got from someone else does unconfined/uncontrolled disk I/O, causing everything to grind to a halt and even fail. #self-hosted

@aelaraji@aelaraji.com will all those run on his hardware? I don’t think @movq@www.uninformativ.de’s problem is the software, it is that his hardware has gotten too old. :-D

Btw, about social: I found a very interesting thing about Twitter:

The legal basis that X asserts in the filing is not terribly interesting. But what is interesting is that X has decided to involve itself at all, and it highlights that you do not own your followers or your account or anything at all on corporate social media, and it also highlights the fact that Elon Musk’s X is primarily a political project he is using to boost, or stifle, specific viewpoints and help his friends. In the filing, X’s lawyers essentially say—like many other software companies, and, increasingly, device manufacturers as well—that the company’s terms of service grant X’s users a “license” to use the platform but that, ultimately, X owns all accounts on the social network and can do anything that it wants with them.

all of the software sucks, but i have a solution! we’ll write even more software! get more people involved, make it the Ideal Career, then we can write AL̵L̴ O̵F TH̨E ̧C̀ODE̷S. mountains of shitty garbage that kind-of does the thing. software will still suck, but T͜HE̕N oh then we can write compilers that let us run the old shitty code inside of our mountain of new shitty code. now all of the code is in a giant pile and we’re using it to control space ships that definitely never crash. the more code the better! we can represent NaN easily in undefined systems! developers aren’t particularly bright, so the language is simple and easy for them to understand. we know this, that’s why it was made this way. the͡ moun͢tain ͠m̵us͜t ͠nȩver̢ ̴wa̡ve̴r̵. the more code the better. so instead of writing the code manually we c̴ómpilę t͞or̸t̕u͜red so̷u͜ls ͏i͞n͞to͝ ͟nice ͢b̀l̷oxe̡ls ̸of͠ ̸t̶an̡g͜l̀ed ͞n̢eu͏ra̡l͠ ̕ne̢t͏w͟orkś.̸ w̨e d͠on’t́ know how i̵t ̷w͟ork̡s, ̴but ̷t̴he model̢ ̶is̛ 5̛0GiB ́s̶o ͟i͞t s͞e͘rve͟s ̴tḩe̛ purpośe. WE̕ M͠US̴T B͢U̢ILD ͝T͞HE MO͝UN̶T̨A̵IN.

@prologic@twtxt.net to interact with locally running LLM, which software are you using? LM Studio, or Ollama, or…?

@bender@twtxt.net 😆 Would calling it a Single user Twtxt "Yarn Pod **Like**" software help you sleep better at night? And just in case things are not clear here, I’m being sarcastic (well, kinda…) and not trying to gaslight anyone. Think of my comment as Bromance or something like that LOL.

But seriously, it’s just like any UNIX-like system compared to UNIX™: none of them are UNIX™, but each of them provides a more or less similar experience and re-implements what once were parts of “UNIX™ software” in its own (more or less better) way. Timeline is Yarn™ Pod like (my personal take on the word pod is: “an instance of XYZ software acting as an escape POD from X-BS for… ABC reasons.”), providing a more or less similar experience, implementing some of the Yarn.social extensions, trying to add in some more …etc.

Otherwise, I don’t see the Yarn pod mention as some kind of malicious manoeuvre, but more of a tribute to what (might have) sparked inspiration for creating Timeline? Also, our friend @sorenpeter@darch.dk here has got a valid reason for using PHP (#tms7aka) so let’s put our unease towards the language itself aside and maybe just help however/as much as we can in order to make the internet (the World?) a better place.

Hey, @ I know. Just wondering the kind of apps or software and how you all stay up to date in conversations. Is it through webmentions?

@Codebuzz@www.codebuzz.nl Speed is an issue for the client software, not the format itself, but yes I agree that it makes the most sense to append posts to the end of the file. I’m referring to the definition that it’s the first url = in the file that is the one that has to be used for the twthash computation, which is too arbitrary a way of defining something that breaks threading time and time again. And this is the case for not using url+date+message = twthash.
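
To illustrate why the choice of `url =` matters, here is a rough Python sketch of the hash as I understand the Yarn.social Twt Hash extension: blake2b-256 over the feed URL, timestamp and text joined by newlines, base32-encoded without padding, lowercased, keeping the last seven characters. Treat it as an approximation and not a reference implementation (timestamp normalization is left out), and note that the feed URLs below are hypothetical:

```python
import base64
import hashlib


def twt_hash(feed_url: str, timestamp: str, text: str) -> str:
    """Sketch of a twt hash built from feed URL, timestamp and text."""
    payload = f"{feed_url}\n{timestamp}\n{text}".encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    # Base32 without padding, lowercased; keep only the last 7 characters.
    encoded = base64.b32encode(digest).decode("ascii").rstrip("=").lower()
    return encoded[-7:]


# Same twt, but hashed against two different (hypothetical) feed URLs.
a = twt_hash("https://example.com/twtxt.txt", "2024-09-01T12:00:00Z", "Hello twtxt!")
b = twt_hash("https://example.org/twtxt.txt", "2024-09-01T12:00:00Z", "Hello twtxt!")
print(a, b, a == b)
```

The text and timestamp are identical in both calls; only the URL differs, and that alone is enough to produce a different hash, so replies pointing at the old hash no longer match.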

Alas, I can’t get myself to resist. Interacting with tech and software makes me feel like a kid in a candy shop: “I wanna taste all of it! Find my favorite Lollipop and wonder about where it came from, who made it? How is it possible to turn any kind of mushy juicy fruit into a hard, forever lasting candy in a freaking stick!? Oh, Wait!! Is THAT chocolate over there!!?”

I’m not even supposed to be doing any of this, I should be making stuff* with Shapes, forms and color instead of poking at software with a stick like a caveman. 😆

*Stuff: Things I make and refuse to call Art, unless I have to in a resume and what not.

@movq@www.uninformativ.de Although my recent breakage/down time was more a result of human error than something to blame on the software itself, I do get your point, and will most probably end up going the same route in the near future. It’s just that in order to soothe my forever itching curiosity, I have to learn and try some things first.

Gentlemen, I have a pdf file (1.5MB) from which I want to be able to select and copy text, but it’s locked, preventing this. All I could do so far was write it out by hand, or screenshot the text as an image.

Is there any software that opens pdf format for copying and pasting of the text?

More thoughts about changes to twtxt (as if we haven’t had enough thoughts):

- There are lots of great ideas here! Is there a benefit to putting them all into one document? Seems to me this could more easily be a bunch of separate efforts that can progress at their own pace:

1a. Better and longer hashes.

1b. New possibly-controversial ideas like edit: and delete: and location-based references as an alternative to hashes.

1c. Best practices, e.g. Content-Type: text/plain; charset=utf-8

1d. Stuff already described at dev.twtxt.net that doesn’t need any changes.

We won’t know what will and won’t work until we try them. So I’m inclined to think of this as a bunch of draft ideas. Maybe later when we’ve seen it play out it could make sense to define a group of recommended twtxt extensions and give them a name.

Another reason for 1 (above) is: I like the current situation where all you need to get started is these two short and simple documents:

https://twtxt.readthedocs.io/en/latest/user/twtxtfile.html

https://twtxt.readthedocs.io/en/latest/user/discoverability.html

and everything else is an extension for anyone interested. (Deprecating non-UTC times seems reasonable to me, though.) Having a big long “twtxt v2” document seems less inviting to people looking for something simple. (@prologic@twtxt.net you mentioned an anonymous comment “you’ve ruined twtxt” and while I don’t completely agree with that commenter’s sentiment, I would feel like twtxt had lost something if it moved away from having a super-simple core.) All that being said, these are just my opinions, and I’m not doing the work of writing software or drafting proposals. Maybe I will at some point, but until then, if you’re actually implementing things, you’re in charge of what you decide to make, and I’m grateful for the work.

@prologic@twtxt.net Thanks for writing that up!

I hope it can remain a living document (or sequence of draft revisions) for a good long time while we figure out how this stuff works in practice.

I am not sure how I feel about all this being done at once, vs. letting conventions arise.

For example, even today I could reply to twt abc1234 with “(#abc1234) Edit: …” and I think all you humans would understand it as an edit to (#abc1234). Maybe eventually it would become a common enough convention that clients would start to support it explicitly.
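
If a client ever wanted to recognize that convention, it could be as small as this Python sketch. The pattern and the name `parse_edit` are my own assumptions for illustration; nothing here is specified anywhere:

```python
import re

# Matches a twt that starts with a "(#hash)" subject followed by "Edit:".
EDIT_RE = re.compile(r"^\(#([a-z0-9]+)\)\s+Edit:\s*(.*)$", re.DOTALL)


def parse_edit(text: str):
    """Return (target_hash, new_text) if the twt looks like an edit, else None."""
    match = EDIT_RE.match(text)
    if match is None:
        return None
    return match.group(1), match.group(2)


print(parse_edit("(#abc1234) Edit: fixed a typo"))  # ('abc1234', 'fixed a typo')
print(parse_edit("(#abc1234) I agree!"))            # None
```

Clients that don’t know about the convention would still show the twt as an ordinary reply, which is the backward-compatible part.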

Similarly we could just start using 11-digit hashes. We should iron out whether it’s sha256 or whatever but there’s no need to get all the other stuff right at the same time.

I have similar thoughts about how some users could try out location-based replies in a backward-compatible way (append the replyto: stuff after the legacy (#hash) style).

However I recognize that I’m not the one implementing this stuff, and it’s less work to just have everything determined up front.

Misc comments (I haven’t read the whole thing):

Did you mean to make hashes hexadecimal? You lose 11 bits that way compared to base32. I’d suggest gaining 11 bits with base64 instead.
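
The arithmetic behind that, assuming 11-character hashes: each character carries log2(alphabet size) bits, so hexadecimal gives 4 bits per character, base32 gives 5 and base64 gives 6. A quick Python check (the helper name `hash_bits` is just for illustration):

```python
import math


def hash_bits(length: int, alphabet_size: int) -> int:
    """Bits of information in `length` characters drawn from the alphabet."""
    return length * int(math.log2(alphabet_size))


for name, size in [("hex", 16), ("base32", 32), ("base64", 64)]:
    print(name, hash_bits(11, size))
# hex 44
# base32 55
# base64 66
```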

“Clients MUST preserve the original hash” — do you mean they MUST preserve the original twt?

Thanks for phrasing the bit about deletions so neutrally.

I don’t like the MUST in “Clients MUST follow the chain of reply-to references…”. If someone writes a client as a 40-line shell script that requires the user to piece together the threading themselves, IMO we shouldn’t declare the client non-conforming just because they didn’t get to all the bells and whistles.

Similarly I don’t like the MUST for user agents. For one thing, you might want to fetch a feed without revealing your identity. Also, it raises the bar for a minimal implementation (I’m again thinking of the 40-line shell script).

For “who follows” lists: why must the long, random tokens be only valid for a limited time? Do you have a scenario in mind where they could leak?

Why can’t feeds be served over HTTP/1.0? Again, thinking about simple software. I recently tried implementing HTTP/1.1 and it wasn’t too bad, but 1.0 would have been slightly simpler.

Why get into the nitty-gritty about caching headers? This seems like generic advice for HTTP servers and clients.

I’m a little sad about other protocols being not recommended.

I don’t know how I feel about including markdown. I don’t mind too much that yarn users emit twts full of markdown, but I’m more of a plain text kind of person. Also it adds to the length. I wonder if putting it in a separate document would make more sense; that would also help with the length.

@prologic@twtxt.net Wikipedia claims sha1 is vulnerable to a “chosen-prefix attack”, which I gather means I can write any two twts I like, and then cause them to have the exact same sha1 hash by appending something. I guess a twt ending in random junk might look suspicious, but perhaps the junk could be worked into an image URL. If that’s not possible now maybe it will be later.

git only uses sha1 because they’re stuck with it: migrating is very hard. There was an effort to move git to sha256 but I don’t know its status. I think there is progress being made with Game Of Trees, a git clone that uses the same on-disk format.

I can’t imagine any benefit to using sha1, except that maybe some very old software might support sha1 but not sha256.

@mckinley@twtxt.net To answer some of your questions:

Are SSH signatures standardized and are there robust software libraries that can handle them? We’ll need a library in at least Python and Go to provide verified feed support with the currently used clients.

We already have this. Ed25519 libraries exist for all major languages. Aside from using ssh-keygen -Y sign and ssh-keygen -Y verify, you can also use the salty CLI itself (https://git.mills.io/prologic/salty), and I’m sure there are other command-line tools that could be used too.

If we all implemented this, every twt hash would suddenly change and every conversation thread we’ve ever had would at least lose its opening post.

Yes. This would happen, so we’d have to make a decision around this, either a) a cut-off point or b) some way to progressively transition.

If some of you budding fathers want to know how I created a computer nerd to one day work for Facebook in the big USA, well you purchase a $1000 Xmas present, an enormous thick book with C++ programming, and say, you can play as many games as you like kids, but James has to create them using computer software.

So James once created a 3D chess program with sound. It took 6 months or so and was really hard to beat: not based on logic moves point by point like other chess programs, this one was based on the depth of looking for patterns. Set it to 5 moves ahead and you were toast every time. Nice program too, sadly gone over the years; computers suffer from bit rot. We used to try and mark rotten hard drive sectors as bad. Not sure how Ubuntu does this these days; I see a dozen errors on the screen every time I load.

Today I would purchase AI CAD simulation software with a metal 3D printer for my kids and get them to build fancy 3D models and engines from scratch. This will make them experts in the CAD AI industry by the time they are 14 years old. Sadly AI is here to stay and will spoil the Internet.

Hello twtxt! I’m James (or @falsifian@www.falsifian.org). I live in Toronto. Recent interests include space complexity, simple software, and science fiction.

@movq@www.uninformativ.de This outage did affect me, though not much, via the university where my wife teaches and where I teach sometimes. They actually sent out an alert in their emergency alert system (the one they use to alert people of extreme weather events and bomb threats, mostly), telling people that all IT systems were down.

A friend of mine elsewhere pointed out that they pushed this change on a Friday, which of course no software developer with any experience would ever, ever, ever do. I have to assume there’s some toxic management at CrowdStrike, but who knows. Even more reasons to sympathize with the poor folks who are probably going to be working nights and weekends to clean up this mess.

@movq@www.uninformativ.de Somewhere or another, I think in a William Byrd talk, I heard it suggested that the best ideas in computer science should fit on an index card (ah yes it’s this one: https://paperswelove.org/2017/video/will-byrd-most-beautiful-program/ ). He was referring to the basic principles of LISP/the lambda calculus, which have sometimes been called the Maxwell’s equations of computer programming (by Alan Kay). Simple, short, elegant, but very densely packed with meaning–generations of people have spent their whole careers unpacking what those simple rules can do.

Much of modern software feels like the polar opposite of that. Not only can you not write it on an index card, you never will be able to because people who write software don’t seem to aspire to try. I wish more people thought this way though!

@New_scientist@feeds.twtxt.net It’s insane that a single botched software update can have worldwide impact. We’ve messed up badly.

Windows computers around the world are failing in a major outage

An update to a piece of software called CrowdStrike Falcon Sensor appears to be negatively impacting Windows computers worldwide, with banks, airports, broadcasters and more finding that devices display a “blue screen of death” instead of booting up ⌘ Read more

Regarding complexity budget, slow software, all that:

Very few people do take pride in building simple, elegant, high-quality systems, do they? Why is that? Why are huge shiny things with tons of features more attractive? 🤔

I never explicitly thought about this, to be honest. It was only at the back of my head. And I never tried to teach our younger “students” at work: “Hey, it’s a great achievement to build something simple and elegant. That’s something to be proud of!”

Worse, simple software is often described as “boring”. Yes, in a way, it is boring, because your brain doesn’t have to get into overdrive to understand it. But that’s exactly the point. And it’s hard to achieve that! Simple software isn’t just “fewer lines of code”, you have to be pretty clever to solve a problem in a simple and elegant way. So it’s something to be proud of.

Could this be an intuitive, emotional way to get more people on board the “simple software”-train? 🤔

I’ve been thinking about a new term I’ve come across whilst reading a book. It’s called “Complexity Budget” and I think it has relevance in lots of different fields. I specifically think it has a lot of relevance in the software industry and organizations in this field. When doing further research on this concept, I was only able to find talks on complexity budget in the context of medical care, especially psychiatric care. In one talk, complexity was described as follows:

- Complexity is confusing

- Complexity is costly

- Complexity kills

When we think of “complexity” in terms of software and software development, we have a sort-of intuition about this, right? We know when software has become too complex. We know when an organization has grown in complexity, or even a system. So we have a good intuition of the concept already.

My question to y’all is: how can we concretely think about “Complexity Budget” and define it in terms that can be leveraged and used to control the complexity of software and systems?

I’m starting to embrace containers on my PC for software I want to use once without littering my home folder with junk files. It’s nice.

Software Testing Day

⌘ Read more

I’m not a software guy

QOTD: What do you host on your home server? How do you host it? Are you using containers? VMs? Did you install any management interface or do you just SSH in? What OS does it run?

Mine runs Arch (btw) and hosts a handful of things using Docker. Adguard Home, http://mckinley2nxomherwpsff5w37zrl6fqetvlfayk2qjnenifxmw5i4wyd.onion/, and some other things. NFS, Flexo, and Wireguard (peer and bounce server in my personal network) are outside Docker. I have a hotkey in my window manager that spawns a terminal on my server using SSH. It makes things very easy and I highly recommend it.

I am thinking about replacing Docker with Podman because the Common Wisdom seems to say it’s better. I don’t really know if it is or isn’t.

Also, how much of your personal infrastructure is on IPv6? I think all the software I use supports both, but I’ve mostly been using IPv4 because it’s easier to remember the addresses. I’ve been working for the last couple days on making it IPv6-only.

I finally found the NASM assembler.

I had heard that name before, many times, but somehow never looked into it. Weird. 🤨🤔

This is the kind of program I was looking for.

- It is free software. Especially in the DOS ecosystem, free/libre software is a very scarce resource.

- It’s a small command line program, not a huge behemoth.

- Documentation appears to be well written.

- It can even cross-compile DOS binaries from Linux.

I noticed that some of my software projects have a rather long lifetime, so I made a little graph:

when writing a new tool/software, write doc first, explaining how it works. Then, actually writing the code is much easier :)

What I learned while I was in burnout. (As told by a Software Engineer)

https://medium.com/@erikalmaraz_/lo-que-aprend%C3%AD-mientras-estaba-en-burn-out-contado-por-un-software-engineer-78b2eb4deda5

With all M$’s apps being basically fancy web apps, there is no need to actually install any of their legacy applications locally anymore. Since I am online basically 100% of the time, this turns my Office experience into a Chromebook-like one. No installs, never outdated software. Just a yearly subscription contribution to worry about.

Plex Users Fear New Feature Will Leak Porn Habits To Their Friends and Family

Many Plex users were alarmed when they got a “week in review” email last week that showed them what they and their friends had watched on the popular media server software. From a report: Some users are saying that their friends’ softcore porn habits are being revealed to them with the feature, while others are horrified … ⌘ Read more

@movq@www.uninformativ.de Thanks for reaching out - just general wonkiness with the Epson printing job configuration UI. They offer Fedora software, but it seems that not all features are supported

Since I have these simple, yet effective bash shell commands, which allow me to edit notes, plans, todos and statuses from the terminal, I feel liberated from overly complex software - everything is just text files and applications which come preinstalled on every Linux system.

The amount of shady Android apps in Google’s “Play Store” is so large, it makes me want to write my own software instead. 😖

- It’s criminal: Copilot was only possible because of massive theft of other peoples’ work (no compensation or even acknowledgement to any of the developers whose code was used to create Copilot)

- It’s positioned to put software developers out of work or so fully de-skill them that they no longer know how to code anything but prompts (after which come corporate-justified salary and benefits decreases)

Don’t use it. No one should ever use it. You’re destroying your own future as a software developer by leaning on and supporting these things.

@jmjl@tilde.green I’m sorry that I’m not super knowledgeable about alternatives to jmp.chat but I’ll tell you what I know.

You’re probably right about jmp.chat not working for you, at least as it is now. You can only get US and Canadian phone numbers through it last time I checked, so if you’re not in either of those countries you’d be making international calls all the time and people who wanted to call you would be making international calls too.

I’ve seen people talk about using SIP as an intermediary: you can bridge SIP-to-XMPP, and bridge SIP-to-PSTN (PSTN = “public switched telephone network”, meaning normal telephone). You can skip the SIP-to-XMPP side if you’re comfortable using a SIP client. I don’t know very much about SIP or PSTN so I am not sure what to recommend, but perhaps this helps your search queries.

There are a fair number of services like TextNow that let you sign up for a real telephone number that you can then use via their app (I wouldn’t use TextNow–they had tons of spyware in their app). I don’t know if that kind of service works for you but if it does perhaps you’d be able to find one of them that isn’t horrible. This page (https://alternativeto.net/software/jmp-chat/) has a bunch of alternatives; I can’t vouch for any of them but maybe it’s a starting point if you want to go this route.

Good luck!

Ugh, ffs–the datasette project just added #ChatGPT garbage. Another seemingly nice piece of software and project that I need to stop using.

I guess I can be thankful they self-identify.

@prologic@twtxt.net I don’t get your objection. dockerd is 96M and has to run all the time. You can’t use docker without it running, so you have to count both. docker + dockerd is 131M, which is over 3x the size of podman. Plus you have this daemon running all the time, which eats system resources podman doesn’t use, and docker fucks with your network configuration right on install, which podman doesn’t do unless you tell it to.

That’s way fat as far as I’m concerned.

As far as corporate goes, podman is free and open source software, the end. docker is a company with a pricing model. It was founded as a startup, which suggests to me that, like almost all startups, they are seeking an exit and if they ever face troubles in generating that exit they’ll throw out all niceties and abuse their users (see Reddit, the drama with spyware in Audacity, 10,000 other examples). Sure you can use it free for many purposes, and the container bits are open source, but that doesn’t change that it’s always been a corporate entity, that they can change their policies at any time, that they can spy on you if they want, etc etc etc.

That’s way too corporate as far as I’m concerned.

I mean, all of this might not matter to you, and that’s fine! Nothing wrong with that. But you can’t have an alternate reality–these things I said are just facts. You can find them on Wikipedia or docker.com for that matter.

GnuCOBOL 3.2 Released After 2+ Years In Development

For those fond of the COBOL programming language and continuing to make use of it in new development efforts, GnuCOBOL 3.2 was released on Friday as the latest feature update for this 21+ year old free software effort around being an open-source COBOL implementation… ⌘ Read more

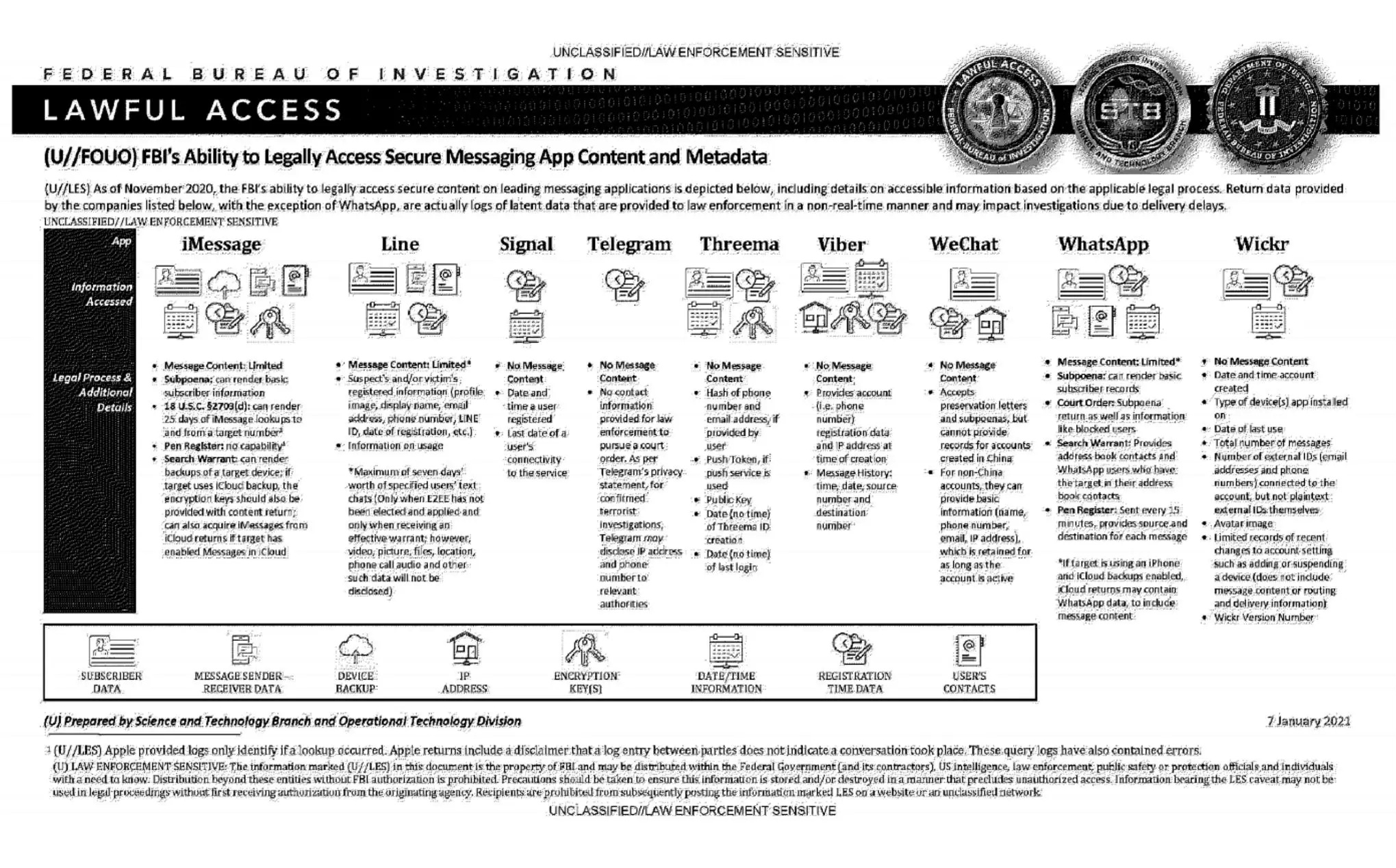

An official FBI document dated January 2021, obtained by the American association “Property of People” through the Freedom of Information Act.

This document summarizes the possibilities for legal access to data from nine instant messaging services: iMessage, Line, Signal, Telegram, Threema, Viber, WeChat, WhatsApp and Wickr. For each service, different judicial methods are explored, such as subpoena, search warrant, active collection of communications metadata (“Pen Register”) or connection data retention law (“18 USC§2703”). Here, in essence, is the information the FBI says it can retrieve:

Apple iMessage: basic subscriber data; in the case of an iPhone user, investigators may be able to get their hands on message content if the user uses iCloud to synchronize iMessage messages or to back up data on their phone.

Line: account data (image, username, e-mail address, phone number, Line ID, creation date, usage data, etc.); if the user has not activated end-to-end encryption, investigators can retrieve the texts of exchanges over a seven-day period, but not other data (audio, video, images, location).

Signal: date and time of account creation and date of last connection.

Telegram: IP address and phone number for investigations into confirmed terrorists, otherwise nothing.

Threema: cryptographic fingerprint of phone number and e-mail address, push service tokens if used, public key, account creation date, last connection date.

Viber: account data and IP address used to create the account; investigators can also access message history (date, time, source, destination).

WeChat: basic data such as name, phone number, e-mail and IP address, but only for non-Chinese users.

WhatsApp: the targeted person’s basic data, address book and contacts who have the targeted person in their address book; it is possible to collect message metadata in real time (“Pen Register”); message content can be retrieved via iCloud backups.

Wickr: Date and time of account creation, types of terminal on which the application is installed, date of last connection, number of messages exchanged, external identifiers associated with the account (e-mail addresses, telephone numbers), avatar image, data linked to adding or deleting.

TL;DR Signal is the messaging system that provides the least information to investigators.

Still undecided between TiddlyWiki, DokuWiki, Bear, Benotes, Memos, my blog software, standardnotes, apple notes and more. I like them all quite a bit, but standardnotes, the only one that has real multiplatform support, is so fucking complicated to host on your own, and then they have this stupid offline subscription thing that allows rich text or the block editor that works like notion. I also found codex docs, which is really really nice. Unfortunately it lacks proper authentication. 1 / 2

@prologic@twtxt.net I think those headsets were not particularly usable for things like web browsing because the resolution was too low, something like 1080p if I recall correctly. A very small screen at that resolution close to your eye is going to look grainy. You’d need 4k at least, I think, before you could realistically have text and stuff like that be zoomable and readable for low vision people. The hardware isn’t quite there yet, and the headsets that can do that kind of resolution are extremely expensive.

But yeah, even so I can imagine the metaverse wouldn’t be very helpful for low vision people as things stand today, even with higher resolution. I’ve played VR games and that was fine, but I’ve never tried to do work of any kind.

I guess where I’m coming from is that even though I’m low vision, I can work effectively on a modern OS because of the accessibility features. I also do a lot of crap like take pictures of things with my smartphone then zoom into the picture to see detail (like words on street signs) that my eyes can’t see normally. That feels very much like rudimentary augmented reality that an appropriately-designed headset could mostly automate. VR/AR/metaverse isn’t there yet, but it seems at least possible for the hardware and software to develop accessibility features that would make it workable for low vision people.

@stigatle@yarn.stigatle.no @prologic@twtxt.net @eldersnake@we.loveprivacy.club I love VR too, and I wonder a lot whether it can help people with accessibility challenges, like low vision.

But Meta’s approach from the beginning almost seemed like a joke? My first thought was “are they trolling us?” There’s open source metaverse software like Vircadia that looks better than Meta’s demos (avatars have legs in Vircadia, ffs) and can already do virtual co-working. Vircadia developers hold their meetings within Vircadia, and there are virtual whiteboards and walls where you can run video feeds, calendars and web browsers. What is Meta spending all that money doing, if their visuals look so weak, and their co-working affordances aren’t there?

On top of that, Meta didn’t seem to put any kind of effort into moderating the content. There are already stories of bad things happening in Horizon Worlds, like gangs forming and harassing people off of it. Imagine what that’d look like if 1 billion people were using it the way Meta says they want.

Then, there are plenty of technical challenges left, like people feeling motion sickness or disoriented after using a headset for a long period of time. I haven’t heard announcements from Meta that they’re working on these or have made any advances in these.

All around, it never sounded serious to me, despite how much money Meta seems to be throwing at it. For something with so much promise, and so many obvious challenges to attack first that Meta seems to be ignoring, what are they even doing?

On LinkedIn I see a lot of posts aimed at software developers along the lines of “If you’re not using these AI tools (X,Y,Z) you’re going to be left behind.”

Two things about that:

- No you’re not. If you have good soft skills (good communication, show up on time, general time management) then you’re already in excellent shape. No AI can do that stuff, and for that alone no AI can replace people

- This rhetoric is coming directly from the billionaires who are laying off tech people by the 100s of thousands as part of the class war they’ve been conducting against all working people since the 1940s. They want you to believe that you have to scramble and claw over one another to learn the “AI” that they’re forcing onto the world, so that you stop honing the skills that matter (see #1) and are easier to obsolete later. Don’t fall for it. It’s far from clear how this will shake out once governments get off their asses and start regulating this stuff, by the way–most of these “AI” tools are blatantly breaking copyright and other IP laws, and some day that’ll catch up with them.

That said, it is helpful to know thy enemy.

The EU’s Proposed CRA Law May Have Unintended Consequences for the Python Ecosystem (as well as the entire free software movement).