@lyse@lyse.isobeef.org thanks for checking! it is a work in progress. i journal as well so may just be a manual process to get some of the data back in, but not going to rush it unless it is dorking someone’s feed.

i thought about making a chill little vlog putting together my new pi4 for KVM purposes but unless i make it go fast somehow i’d probably quickly exceed the 30 mins on the last mini DVD i have for recording lol

@kat@yarn.girlonthemoon.xyz ok yeah idk what’s going on whatever i’ll mess with config later

Pinellas County - Long Run: 11.00 miles, 00:09:38 average pace, 01:46:02 duration

just one of those ones you never want to stop. it was dark, quiet, and lonely which just let me zone out with the cool weather and maintain what felt easy. didn’t look at the watch until the end when it notified me it was going to die. adjusted the mileage and time to reflect.

#running

How in da fuq do you actually make these fucking useless AI bots go away?

proxy-1:~# jq '. | select(.request.remote_ip=="4.227.36.76")' /var/log/caddy/access/mills.io.log | jq -s '. | last' | caddy-log-formatter -

4.227.36.76 - [2025-01-05 04:05:43.971 +0000] "GET /external?aff-QNAXWV=&f=mediaonly&f=noreplies&nick=g1n&uri=https%3A%2F%2Fmy-hero-ultra-impact-codes.linegames.org HTTP/2.0" 0 0

proxy-1:~# date

Sun Jan 5 04:05:49 UTC 2025

😱

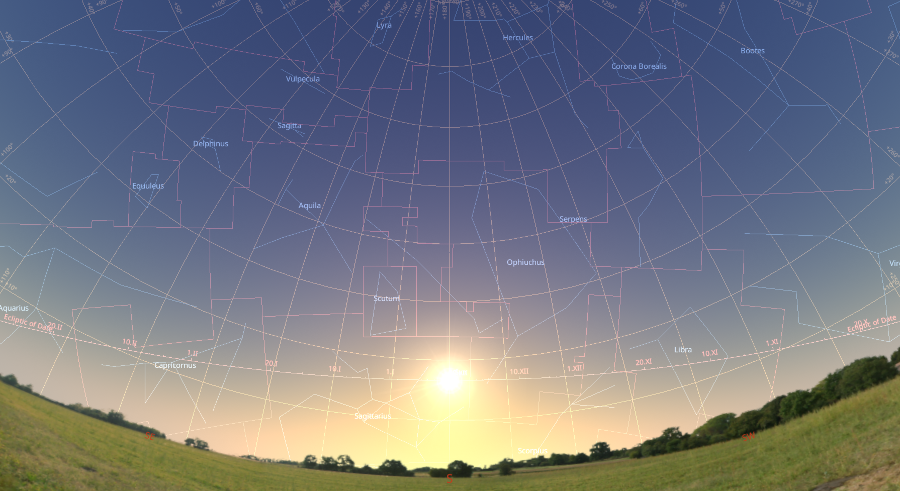

Noon in summer:

And noon in winter:

The difference never fails to make me go “whoa”. 😅

Having a lot of fun with Coraza today. A Web Application Firewall library written in Go that also happens to have a Caddy module.

@bender@twtxt.net aw thank you so much!!! rambling is the best… just gotta keep on going about what we love and somehow people like it lol!

Rode my bicycle into town. What the hell is wrong with some of these motorists!? Here in right lane traffic land, a car reversed out of the driveway on the left into the road and nearly hit me. And this happened twice! If you don’t fucking see, how about you go slowly and not just hope that nobody is coming!? The first one even decided to honk at me. SUV drivers confirming prejudice…

Well, at least I could help a lady with transferring her child in a pram.

my friend is getting into snh48 and its sister groups and it makes me miss being a fan but my oshi (shen mengyao) graduated so i don’t have any reason to follow them. but the thing about snh48 is that it’s a group full of messy toxic lesbians with mental problems so there is always some wild ass drama going on. you can never leave snh48 standom

is sooo good my friend just shared it and i’m obsessed already

my camcorder videos are gonna go so hard yall like i can post them exclusively to my own youtube site and just do whatever tf i want with it. i should make more vlogs

@kat@yarn.girlonthemoon.xyz i also like the separation inherent with using dedicated devices. like i have a DAP, a fiio X1 ii from 2019, and it’s still going strong. it’s perfect for on the go music listening and i never have to worry about like going somewhere with no reception and the music drops out. it’s all local AND the battery lasts longer because i’m not using wi-fi or bluetooth or data. also i can directly access the file system and just add files anytime. this goes for my point & shoot and other devices too. i love this shit i’m such a nerd

really wanna make an ssh zine app inspired by a telnet zine cms i found on github. i’m gonna probably go ahead with the telnet zine idea i have if i can get people for it but if i could build my own ssh mirror for it with golang and the charmbracelet wish library that’d be epic

@movq@www.uninformativ.de It’s not any better on the “ground” with trees and buildings around. They don’t dampen at all; in fact the houses just cause reverb and amplify the bangs. Rest assured, I did not hear any people laughing or anything of that nature. Just grenades going off. Talking to my mates, it appears that I live in an especially bad shithole, they reported a noticeable reduction of explosions around 00:20. Over here, there was constant fire till around 02:00.

Yep, that’s exactly how I imagine a war zone, too.

3°C today, it was quite nice in the sun. A lot of hunting and tree felling going on in the forest. And we met the heron again, that was very cool: https://lyse.isobeef.org/waldspaziergang-2024-12-28/

And now some stupid fuckwits are burning firecrackers again. Very annoying. Can we please ban this shit once and forever!?

Easy: 5.06 miles, 00:09:53 average pace, 00:50:02 duration

nice easy run. definitely feeling the extra weight from all the holidays. probably could go hibernate safely if i wanted to.

#running

@bender@twtxt.net Bahahaha in hindsight I got rid of that 🤣 Just silly nonsense, just one of those things when you create an account on yet-another silly centralized platform(s) and go “fuck” someone’s already taken the username I want 😅

and going back to a handle you could input in your client to look for the user/file, like @nick@domain.tld I think Webfinger is the way to go. It has enough information to know where to find that nick’s URL.
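To make the handle idea concrete, here is a minimal Go sketch that turns a handle into the WebFinger query URL a client would fetch. The `/.well-known/webfinger` path and the `resource=acct:...` parameter come from RFC 7033; the function name `webfingerURL` and the example handle are hypothetical, and how a twtxt client would use the response is left open.

```go
package main

import (
	"errors"
	"fmt"
	"net/url"
	"strings"
)

// webfingerURL builds the WebFinger lookup URL for a handle of the form
// "@nick@domain" (the leading "@" is optional).
func webfingerURL(handle string) (string, error) {
	h := strings.TrimPrefix(handle, "@")
	nick, domain, ok := strings.Cut(h, "@")
	if !ok || nick == "" || domain == "" {
		return "", errors.New("handle must look like @nick@domain")
	}
	u := url.URL{
		Scheme: "https",
		Host:   domain,
		Path:   "/.well-known/webfinger",
		// Encode percent-escapes ":" and "@" in the resource value.
		RawQuery: url.Values{"resource": {"acct:" + nick + "@" + domain}}.Encode(),
	}
	return u.String(), nil
}

func main() {
	u, err := webfingerURL("@nick@example.org")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(u)
}
```

The client would then GET that URL and look through the returned JSON for a link pointing at the feed.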

@prologic@twtxt.net does that webfinger fork made by darch work OK with yarn as it is now? (I’ve never used it, so I’m researching about it)

https://darch.dk/.well-known/webfinger/

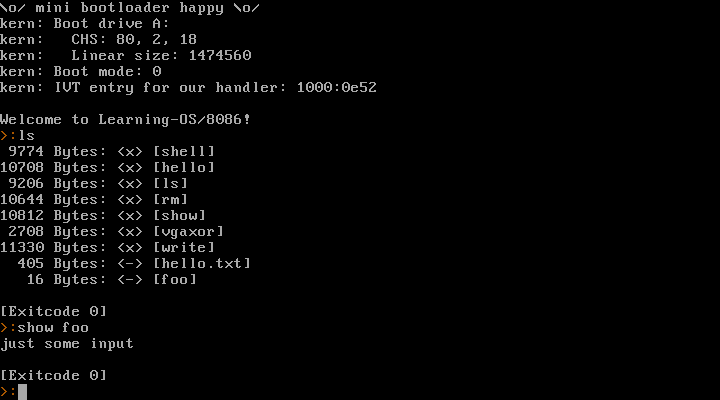

I’ve been making a little toy operating system for the 8086 in the last few days. Now that was a lot of fun!

I don’t plan on making that code public. This is purely a learning project for myself. I think going for real-mode 8086 + BIOS is a good idea as a first step. I am well aware that this isn’t going anywhere – but now I’ve gained some experience and learned a ton of stuff, so maybe 32 bit or even 64 bit mode might be doable in the future? We’ll see.

It provides a syscall interface, can launch processes, read/write files (in a very simple filesystem).

Here’s a video where I run it natively on my old Dell Inspiron 6400 laptop (and Warp 3 later in the video, because why not):

https://movq.de/v/893daaa548/los86-p133-warp3.mp4

(Sorry for the skewed video. It’s a glossy display and super hard to film this.)

It starts with the laptop’s boot menu and then boots into the kernel and launches a shell as PID 1. From there, I can launch other processes (anything I enter is a new process, except for the exit at the end) and they return to the shell afterwards.

And a screenshot running in QEMU:

@bender@twtxt.net Dude! you should see the updated version! 😂 I have just discovered the scratch #container image and decided I wanted to play with it… I’m probably going to end up rebuilding a LOT of images.

~/htwtxt » podman image list htwtxt

REPOSITORY                TAG            IMAGE ID      CREATED             SIZE
localhost/htwtxt          1.0.7-scratch  2d5c6fb7862f  About a minute ago  12 MB
localhost/htwtxt          1.0.5-alpine   13610a37e347  4 weeks ago         20.1 MB
localhost/htwtxt          1.0.7-alpine   2a5c560ee6b7  4 weeks ago         20.1 MB
docker.io/buckket/htwtxt  latest         c0e33b2913c6  8 years ago         778 MB

Way to go F*** Book! With another $263M going down the drains … And people’s lives/data with it.

@prologic@twtxt.net Well I just mirrored yarnd’s JSON in my webfinger endpoint and lookup, so not much else to do for standardization.

And for people who don’t like PHP you can always just go with “Added WebFinger support to my email address using one rewrite rule and one static file”, or simply put a static JSON in place for .well-known/webfinger

since twtxt is based on text files, I think you can consider @domain.tld as an alias of http://domain.com/twtxt.txt (or https://domain.com/tw.txt, among other combinations in the wild).

Or perhaps you can use DNS TXT records?

Although I think that’s a bit more complicated for some environments and users, I’d go with looking for a default /tw*.txt
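A tiny Go sketch of the “look for a default file” idea: given only a domain, list the feed URLs a client might try in order. The two file names come from the examples above; the HTTPS-first ordering and the function name `candidateFeedURLs` are assumptions, and a real client would need to fetch each URL and check that it is actually a twtxt feed.

```go
package main

import "fmt"

// candidateFeedURLs lists feed locations to try for a bare domain,
// preferring HTTPS and the longer file name first (an arbitrary choice).
func candidateFeedURLs(domain string) []string {
	schemes := []string{"https", "http"}
	paths := []string{"/twtxt.txt", "/tw.txt"}
	urls := make([]string, 0, len(schemes)*len(paths))
	for _, s := range schemes {
		for _, p := range paths {
			urls = append(urls, s+"://"+domain+p)
		}
	}
	return urls
}

func main() {
	for _, u := range candidateFeedURLs("example.org") {
		fmt.Println(u)
	}
}
```

The obvious downside is that it only covers feeds living at the domain root, which is where something like WebFinger or a DNS TXT record would have to take over.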

One benefit with bluesky is your username is also a website. And not a clunky URL with slashes and such. I wish twtxt adopted that. I have advocated for webfinger for twtxt to let us do something like it with usernames. Nostr has something like it

By default the bsky.social urls all redirect to their feeds like: hmpxvt.bsky.social

Many custom urls will redirect to some kind of linktree or just their feed cwebonline.com or la.bonne.petite.sour.is or if you are a major outlet just to your web presence like https://theonion.com or https://netflix.com

It’s just good SEO practice

Do all nostr addresses take you to the person if typed into a browser? That is the secret sauce.

No having to go to some random page first. no accounts. no apps to install. just direct to the person.

Tab and expected it to auto-complete. 🤦

@movq@www.uninformativ.de HAHA! speaking of reflexes, Ctrl+SHIFT+v to paste and Ctrl+a to get to the start of the line, get me all the time when I’m using a browser … Ctrl+w (delete back a word) is the worst! tabs go Pouf! 🥲

haha, that’s gold xD.

#randomMemory I remember when I was starting to code, like 30 years ago, not understanding why my Basic file didn’t run when I renamed it to .exe

And nowadays, I’ve seen a few Go apps in a single executable, so twtxt.exe could be a thing, he!

I really, really have to go get some sleep. If you see me online before at least 6 hours, punch me in the face!

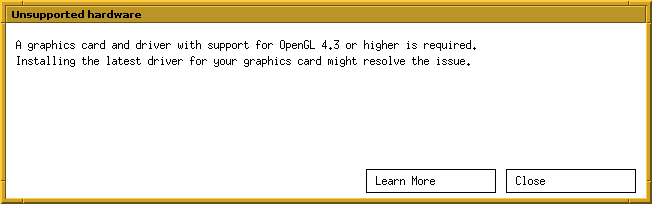

Goodbye Blender, I guess? 🤔

A bit annoying, but not much of a problem. The only thing I did with Blender was make some very simple 3D-printable objects.

I’ll have a look at the alternatives out there. Worst case is I go back to Art of Illusion, which I used heavily ~15 years ago.

Easy: 7.08 miles, 00:10:03 average pace, 01:11:04 duration

nice cool run. well rested, and kept it mainly in zone 2 as intended. was not sure how my back was going to be after i tweaked it moving weights around yesterday, but it was fine. cushy mach 6s on and some ibuprofen just in case.

#running

Thank you @bender@twtxt.net and @movq@www.uninformativ.de!

I partially fixed the code block rendering. With some terrible hacks, though. :-( I see that empty lines in code block still need some more work. There are also some other cases around line continuation where the result looks ugly. I have to refactor some parts to make this go more smoothly and do this properly. No way around that.

Turns out, my current message text parser does not even parse plain links. That’s next on the agenda.

Oh, I also noticed that this thing crashes when there is not enough space to actually draw stuff. No shortage of work. Anyway, time is up, good night. :-)

Finally, the message rendering in my tt Go rewrite produces some colors. There is definitely a lot more tweaking necessary. But this is a first step in the right direction.

My neighbour is always either coming in or going out

@bender@twtxt.net I wonder where that dude who was hosting his twtxt feed in a google drive went? 😆 that was hilarious!!

although the only #Go things I’m running in there are a WriteFreely blog and the Saltyd #SaltyIM broker … each running in separate #FreeBSD #jail, those are still running the 14.1-Release (at the moment) anyways.

Upgrading my FreeBSD box to 14.2-RELEASE … I may have read something about some-Go-thing breaking but 🤞

Fuck me dead, what a giant piece of shit. On my Linux work laptop I have the problem that some unknown snakeoil “security” junk is dropping any IPv4 connections to ports 80 and 443. All other ports and IPv6 seem unaffected. I get an immediate “connection refused” when trying to establish a connection.

I had this problem four weeks ago on Friday morning the very first time at home. On Thursday evening, everything was perfectly fine. Eventually, I plugged in the LAN cable in the office and everything got automatically fixed. Nobody can explain what’s happening.

Then, last week Friday morning out of the blue, the same issue was back. So, I went to the office yesterday and it got fixed again by plugging in the network cable. This evening, I have exactly the same bloody problem again.

What the hell is going on? Does anyone have any ideas? I’m certainly not an expert, but I don’t see anything suspicious in iptables or nft rules. I also do not see anything showing up in /var/log/kern.log. Even tried to stop firewalld, flush the iptables and nft rules, but that didn’t result in any changes.

# nick = skinshafi so... should I scream buuug ? 🤔

@prologic@twtxt.net I’ve seen plenty but I doubt you could use any (other than PHP) to actually serve your pages, anything you want to serve, you put in a ~/public_xyz folder (your php files go there too for Apache to serve), no config files/server setting.

But don’t take my word for it, I just got there and still yet to meet people and learn a bit of IT wizardry from them ;)

@movq@www.uninformativ.de if it’s just notifications that are bothering you could just go to your /settings/preferences/notifications and uncheck as many boxes as you need … unless you’ve already done that, then… sorry, not sorry we love your posts my friend!! xD And just so you know, you put a smile on my face whenever I stumble upon any of your retro-computing posts! 😁

Pinellas County Running: 3.14 miles, 00:08:34 average pace, 00:26:55 duration

needed to get out. was going a bit crazy.

#running

Trying one last thing before going Berserk on that MF …

I had to go to the office today and both train rides worked out just fine. Surprising!

What’s made you unlock twitch.tv?

A couple of events where my only choices for watching them are: Twitch, Youtube or Fartbook.

What are you doing differently?

TL;DR: I stopped going there unless I have to for the reason above.

I used to spend Waaaaay too much time on the platform. I had a whole setup using Streamlink, MPV and Chatterino where sometimes, I’d have up to 10 concurrent open streams all day long on a secondary monitor (thanks to tiling window managers’ magic), some I was interested in watching, some I moderated for a couple of friends and some I’ve had open just for support (helping new streamers in the community with their numbers till they take off and such). Theeen something happened to one of my loved ones, so I had to stop all the nonsense and spend that time and attention with the person who deserves it the most. I blocked the platform at first since I had a habit to type twit... as soon as I opened a browser 😅 (addiction is real) and now I don’t. (That reflex got replaced with typing twtxt... instead 😂)

go build is working but not go build main.go

@prologic@twtxt.net I’ve just seen that one as well as MicroBin on selfh.st , it looks prettier on your instance than it did on their live demo 😆. But I’ve already started playing around with microBin and will see how things go from there.

Termux same thing @doesnm uses and it worked 👍 Media

@doesnm@doesnm.p.psf.lt No it’s all good… I’ve just rebuilt it from master and it doesn’t look like anything is broken:

~/GitRepos> git clone https://github.com/plomlompom/htwtxt.git

Cloning into 'htwtxt'...

remote: Enumerating objects: 411, done.

remote: Total 411 (delta 0), reused 0 (delta 0), pack-reused 411 (from 1)

Receiving objects: 100% (411/411), 87.89 KiB | 430.00 KiB/s, done.

Resolving deltas: 100% (238/238), done.

~/GitRepos> cd htwtxt

master ~/GitRepos/htwtxt> go mod init htwtxt

go: creating new go.mod: module htwtxt

go: to add module requirements and sums:

go mod tidy

master ~/GitRepos/htwtxt> go mod tidy

go: finding module for package github.com/gorilla/mux

go: finding module for package golang.org/x/crypto/bcrypt

go: finding module for package gopkg.in/gomail.v2

go: finding module for package golang.org/x/crypto/ssh/terminal

go: found github.com/gorilla/mux in github.com/gorilla/mux v1.8.1

go: found golang.org/x/crypto/bcrypt in golang.org/x/crypto v0.29.0

go: found golang.org/x/crypto/ssh/terminal in golang.org/x/crypto v0.29.0

go: found gopkg.in/gomail.v2 in gopkg.in/gomail.v2 v2.0.0-20160411212932-81ebce5c23df

go: finding module for package gopkg.in/alexcesaro/quotedprintable.v3

go: found gopkg.in/alexcesaro/quotedprintable.v3 in gopkg.in/alexcesaro/quotedprintable.v3 v3.0.0-20150716171945-2caba252f4dc

master ~/GitRepos/htwtxt> go build

master ~/GitRepos/htwtxt> ll

.rw-r--r-- aelaraji aelaraji 330 B Fri Nov 22 20:25:52 2024 go.mod

.rw-r--r-- aelaraji aelaraji 1.1 KB Fri Nov 22 20:25:52 2024 go.sum

.rw-r--r-- aelaraji aelaraji 8.9 KB Fri Nov 22 20:25:06 2024 handlers.go

.rwxr-xr-x aelaraji aelaraji 12 MB Fri Nov 22 20:26:18 2024 htwtxt <-------- There's the binary ;)

.rw-r--r-- aelaraji aelaraji 4.2 KB Fri Nov 22 20:25:06 2024 io.go

.rw-r--r-- aelaraji aelaraji 34 KB Fri Nov 22 20:25:06 2024 LICENSE

.rw-r--r-- aelaraji aelaraji 8.5 KB Fri Nov 22 20:25:06 2024 main.go

.rw-r--r-- aelaraji aelaraji 5.5 KB Fri Nov 22 20:25:06 2024 README.md

drwxr-xr-x aelaraji aelaraji 4.0 KB Fri Nov 22 20:25:06 2024 templates

I cloned the repo and ran go mod init / go mod tidy / go build. Is only master broken?

@bender@twtxt.net Glad you could find it useful … as for like I’m glad they’re not a thing here xD otherwise we wouldn’t be having as many conversations going on in here. but I get it, and do appreciate it. 🙏

@bender@twtxt.net here:

FROM golang:alpine as builder

ARG version

ENV HTWTXT_VERSION=$version

WORKDIR $GOPATH/pkg/

RUN wget -O htwtxt.tar.gz https://github.com/plomlompom/htwtxt/archive/refs/tags/${HTWTXT_VERSION}.tar.gz

RUN tar xf htwtxt.tar.gz && cd htwtxt-${HTWTXT_VERSION} && go mod init htwtxt && go mod tidy && go install htwtxt

FROM alpine

ARG version

ENV HTWTXT_VERSION=$version

RUN mkdir -p /srv/htwtxt

COPY --from=builder /go/bin/htwtxt /usr/bin/

COPY --from=builder /go/pkg/htwtxt-${HTWTXT_VERSION}/templates/* /srv/htwtxt/templates/

WORKDIR /srv/htwtxt

VOLUME /srv/htwtxt

EXPOSE 8000

ENTRYPOINT ["htwtxt", "-dir", "/srv/htwtxt", "-templates", "/srv/htwtxt/templates"]

Don’t forget the --build-arg version="1.0.7" for example when building this one, although there isn’t much difference between the last couple of versions.

P.S: I may have effed up changing htwtxt’s files directory to /srv/htwtxt when the command itself defaults to /root/htwtxt so you’ll have to throw in a -dir whenever you issue an htwtxt command (i.e: htwtxt -adduser somename:somepwd -dir /srv/htwtxt … etc)

@doesnm@doesnm.p.psf.lt I tried to go install github.com/plomlompom/htwtxt@1.0.7 as well as

# this is a snippet from what I used for the Dockerfile but I guess it should work just fine.

cd ~/go/pkg && wget -O htwtxt.tar.gz https://github.com/plomlompom/htwtxt/archive/refs/tags/1.0.7.tar.gz

tar xf htwtxt.tar.gz && cd htwtxt-1.0.7 && go mod init htwtxt && go mod tidy && go install htwtxt

both worked just fine…