Recovery run: 3.11 miles, 00:11:42 average pace, 00:36:22 duration

Pinellas County - 3 mile run: 3.16 miles, 00:09:07 average pace, 00:28:50 duration

Recovery run: 3.11 miles, 00:10:53 average pace, 00:33:48 duration

New human-like species discovered in China + 3 more stories

Scientists propose a new human-like species based on ancient fossils; oceans warm four times faster than in the 1980s; researchers recreate endosymbiosis significantly in the lab; CIA updates its Covid-19 origins assessment, hinting at a lab leak. ⌘ Read more

Pinellas County - 3 mile run: 3.17 miles, 00:09:08 average pace, 00:28:57 duration

kept it what felt easy. my right chest is still hurting a bit when i breathe. really hard to get out of bed these last two days.

#running

i feel sooo bad for disappearing here but i have been doing so much IRL and it has been exhausting

Android 16 Beta 1 has started rolling out for Pixel devices

Basically, this seems to mean applications will no longer be allowed to limit themselves to phone size when running on devices with larger screens, like tablets. Other tidbits in this first beta include predictive back support for 3-button navigation, support for the Advanced Professional Video codec from Samsung, among other things. It’s still quite early in the release process, so more is sure to come, and some … ⌘ Read more

Recovery run: 3.11 miles, 00:10:52 average pace, 00:33:47 duration

Fusion reactor breaks 1,000 seconds record + 3 more stories

Chinese scientists break nuclear fusion record with 1,066 seconds at 100 million Celsius; US launches The Stargate Project, a $500 billion AI infrastructure initiative; AI-designed drugs from Isomorphic Labs set for clinical trials by 2026; New AI method shows 90-100% accuracy in early breast cancer detection. ⌘ Read more

Pinellas County - 3 mile run: 3.15 miles, 00:08:59 average pace, 00:28:16 duration

another cold one. slept hard ran light.

#running

SDL 3.2.0 released

SDL, the Simple DirectMedia Layer, has released version 3.2.0 of its development library. In case you don’t know what SDL is: Simple DirectMedia Layer is a cross-platform development library designed to provide low level access to audio, keyboard, mouse, joystick, and graphics hardware via OpenGL and Direct3D. It is used by video playback software, emulators, and popular games including Valve‘s award winning catalog and many Humble Bundle games. ↫ SDL website This new release has a lot of impr … ⌘ Read more

@lyse@lyse.isobeef.org Most manufacturers of internet radios (Sony, Denon, Marantz, …) hard-wire(!) an external service provider (vTuner) into their devices so that users can listen their way through a huge list of internet radio stations from around the world.

Now, since about 2020, vTuner has changed its business model. You now have to pay for each device (MAC address). The cost is also going up from $3 to $7 per year. The manufacturers simply shrug their shoulders. In the worst case, vTuner just shuts down the domain and then you're stuck, as happened to me: http://sagem.vtuner.com

The XML parser of the old Sagem clunker requires line-by-line entries without indentation. Maybe you and your parser were the inspiration for it!? 😉

Recovery run: 3.11 miles, 00:10:52 average pace, 00:33:47 duration

Pinellas County - 3 mile run: 3.15 miles, 00:09:39 average pace, 00:30:26 duration

legs feel really beat up but i have no idea why.

#running

MorphOS 3.19 released

It’s been about 18 months, but we’ve got a new release for MorphOS, the Amiga-like operating system for PowerPC Macs and some other PowerPC-based machines. Going through the list of changes, it seems MorphOS 3.19 focuses heavily on fixing bugs and addressing issues, rather than major new features or earth-shattering changes. Of note are several small but important updates, like updated versions of OpenSSL and OpenSSH, as well as a ton of new filetype definitions – and so much more. Havin … ⌘ Read more

@prologic@twtxt.net yellowjackets is about a girls soccer team that gets into a plane crash in the wilderness and start hunting and killing and eating each other. also there’s lesbians. it rules. season 3 comes out valentines day

@kat@yarn.girlonthemoon.xyz AKB48 and other spinoffs sound so great. I’m listening and whistling to them for hours now. I have no clue what the lyrics are about, but it’s just fantastic music. Thanks for introducing me to them. <3

Israel and Hamas agree to ceasefire + 3 more stories

Scientists showcase new antimony atom method in quantum computing; UK leader signs treaty with Ukraine enhancing security; Israel and Hamas agree on ceasefire and hostages; SpaceX launches Falcon 9 with lunar landers for commercial missions. ⌘ Read more

Recovery run: 3.11 miles, 00:10:37 average pace, 00:32:58 duration

easy miles

#running #treadmill

@lyse@lyse.isobeef.org thank youuuu the songs are all instrumental versions of idol songs! here’s a list:

right now - newjeans

onna no ko otoko no ko - ogura yuko

nattou angel - nattou angels (akb48 sub unit)

tsumiki no jikan - ske48

supernatural - newjeans

cookie (FRNK remix) - newjeans

hype boy (250 remix) - newjeans

hurt (250 remix) - newjeans

new moon ni koishite - momoiro clover z

tenshi wa doko ni iru - fairy wink

@suitechic@yarn.girlonthemoon.xyz thank you nikki <3

Pinellas County - 3 mile run: 3.12 miles, 00:09:24 average pace, 00:29:20 duration

good pace finally. honestly it was mainly because my body is exhausted today and could not imagine pushing it any more.

#running

I’m refactoring (mangling four lines of code with assignments into one function call) and man, do I love vim macros! Such a bloody amazing invention. Saves me heaps of manual labor.

@kingdomcome@yarn.girlonthemoon.xyz all me hahah! thank you <3

Recovery: 3.11 miles, 00:10:02 average pace, 00:31:11 duration

getting some extra miles in. been a little anxious and cannot seem to focus as of late.

#running #treadmill

Pinellas County - 3 mile run: 3.13 miles, 00:09:09 average pace, 00:28:38 duration

really nice out. still went out faster than i wanted and i really need to get better at keeping it on track.

#running

new icon from a very dark comic mini series that just came out (doll parts by luana vecchio)

curl: (3) URL rejected: Malformed input to a URL function. Writing sender in bash was a BAD idea

@<url> form of mentions. Strictly require that all mentions include a nickname/name; i.e: @<name url>.

@prologic@twtxt.net I say we should find a way to support mentions with only url, no nick, as per the original spec.

- For @<nick url> we already got support

- For @<nick> the posting client should expand it to @<nick url>, if not then the reading client should just render it as @nick with no link.

- For @<url> the sending client should try to expand it to @<nick url>, if not then the reading client should try to find or construct a nick based on:

  - Look in twtxt.txt for a nick =

  - Use (sub)domain from URL

  - Use folder or file name from URL
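A rough Python sketch of how a reading client might construct a nick for a bare @<url> mention, following the three fallbacks above in the order listed. Everything here is an assumption for illustration: the function name `derive_nick` is hypothetical, the `# nick = ...` comment form is assumed for the feed metadata, and skipping the generic file name `twtxt` in the last step is my own choice, not something the proposal spells out.

```python
import re
from urllib.parse import urlparse


def derive_nick(url, feed_text=None):
    """Guess a nick for a feed URL (hypothetical helper, not from any client)."""
    # 1. Look in the feed for a "nick =" metadata line
    #    (assumed to be a comment of the form "# nick = name").
    if feed_text:
        for line in feed_text.splitlines():
            match = re.match(r"#\s*nick\s*=\s*(\S+)", line)
            if match:
                return match.group(1)

    parsed = urlparse(url)

    # 2. Use the (sub)domain from the URL.
    if parsed.hostname:
        return parsed.hostname

    # 3. Use a folder or file name from the URL, walking from the end
    #    and skipping the generic "twtxt" file name (my assumption).
    segments = [s for s in parsed.path.split("/") if s]
    for segment in reversed(segments):
        stem = segment.rsplit(".", 1)[0]
        if stem and stem.lower() != "twtxt":
            return stem

    # Nothing usable: the client would have to show the raw URL instead.
    return None
```

With this ordering, step 3 only kicks in for URLs without a host part; a client could just as well prefer the path over the domain.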

@suitechic@yarn.girlonthemoon.xyz shes the best <3

Scientists extract 1.2 million-year-old ice core from Antarctica + 3 more stories

James Webb Telescope finds 44 stars in the Dragon Arc galaxy; Greenland’s importance escalates due to climate change; scientists drill 1.2 million-year-old ice core; Trump considers national economic emergency for tariffs. ⌘ Read more

Pinellas County Running: 3.14 miles, 00:09:12 average pace, 00:28:55 duration

third day of training with just a base run. definitely feeling the squats and lunges i did two days ago!

#running

Pinellas County Running: 3.12 miles, 00:08:53 average pace, 00:27:44 duration

first day of marathon training. i do not have a race in mind but wanted to start training again. was going too fast but 48F and just feeling overall good.

#running

upgraded kavita because i was behind a couple versions, new UI looks snazzy, i kinda miss the old one because well i’ve seen it for 3 years now but things change

ok yay we’re back <3

yesterday i was gonna buy new batgirl & birds of prey issues but the comic store didn’t have either

For some reason, I was using calc all this time. I mean, it’s good, but I need to do base conversions (dec, hex, bin) very often and you have to type base(2) or base(16) in calc to do that. That’s exhausting after a while.

So I now replaced calc with a little Python script which always prints the results in dec/hex/bin, grouped in bytes (if the result is an integer). That’s what I need. It’s basically just a loop around Python’s exec().

$ mcalc

> 123

123 0x[7b] 0b[01111011]

> 1234

1234 0x[04 d2] 0b[00000100 11010010]

> 0x7C00 + 0x3F + 512

32319 0x[7e 3f] 0b[01111110 00111111]

> a = 10; b = 0x2b; c = 0b1100101

10 0x[0a] 0b[00001010]

> a + b + 3 * c

356 0x[01 64] 0b[00000001 01100100]

> 2**32 - 1

4294967295 0x[ff ff ff ff] 0b[11111111 11111111 11111111 11111111]

> 4 * atan(1)

3.141592653589793

> cos(pi)

-1.0
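For anyone curious, here is a minimal sketch of how output like the session above could be produced. This is not the actual mcalc script: `format_result`, `make_namespace` and `evaluate` are made-up names, negative integers are simply printed in decimal, and unlike the session above this version prints nothing after a pure assignment.

```python
import math


def format_result(value):
    """Format ints as dec/hex/bin grouped in bytes; anything else via str()."""
    if isinstance(value, int) and not isinstance(value, bool) and value >= 0:
        # Number of bytes needed, at least one so that 0 still prints a byte.
        nbytes = max(1, (value.bit_length() + 7) // 8)
        raw = value.to_bytes(nbytes, "big")
        hex_part = " ".join(f"{b:02x}" for b in raw)
        bin_part = " ".join(f"{b:08b}" for b in raw)
        return f"{value} 0x[{hex_part}] 0b[{bin_part}]"
    return str(value)


def make_namespace():
    """Expose the public names of the math module (atan, cos, pi, ...)."""
    return {n: getattr(math, n) for n in dir(math) if not n.startswith("_")}


def evaluate(line, namespace):
    """Evaluate one input line; statements go through exec() and print nothing."""
    try:
        result = eval(line, namespace)
    except SyntaxError:
        # Not an expression (e.g. "a = 10; b = 0x2b"), so run it as statements.
        exec(line, namespace)
        return ""
    return format_result(result)
```

Wrapping `evaluate` in a `while True:` loop around `input("> ")` with one shared namespace would give an interactive prompt. Since `eval()`/`exec()` run arbitrary code, this is only reasonable for a personal tool.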

@movq@www.uninformativ.de That’s neat, good old $\sum_{i=1}^{9} i^3$ (let’s see if yarnd’s markdown parser has LaTeX support or not ;-)).

@kat@yarn.girlonthemoon.xyz anyway when i get the memory stick i will record something silly and exclusive for yarn friends i can’t wait <3

happy new year to anyone who sees this <3

cli test 3

3°C today, it was quite nice in the sun. A lot of hunting and tree felling going on in the forest. And we met the heron again, that was very cool: https://lyse.isobeef.org/waldspaziergang-2024-12-28/

And now some stupid fuckwits are burning firecrackers again. Very annoying. Can we please ban this shit once and forever!?

Oh no!

Wife and I agreed to hibernate until January, just visiting relatives but avoiding any kind of shopping. I tried buying something like 2 or 3 days ago and it’s insane :o

Good luck! :)

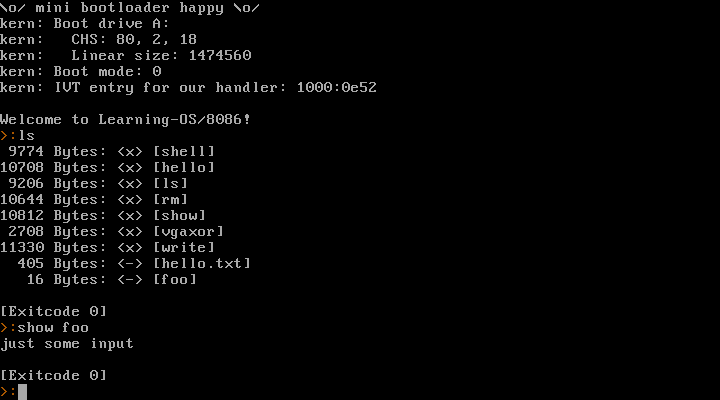

I’ve been making a little toy operating system for the 8086 in the last few days. Now that was a lot of fun!

I don’t plan on making that code public. This is purely a learning project for myself. I think going for real-mode 8086 + BIOS is a good idea as a first step. I am well aware that this isn’t going anywhere – but now I’ve gained some experience and learned a ton of stuff, so maybe 32 bit or even 64 bit mode might be doable in the future? We’ll see.

It provides a syscall interface, can launch processes, read/write files (in a very simple filesystem).

Here’s a video where I run it natively on my old Dell Inspiron 6400 laptop (and Warp 3 later in the video, because why not):

https://movq.de/v/893daaa548/los86-p133-warp3.mp4

(Sorry for the skewed video. It’s a glossy display and super hard to film this.)

It starts with the laptop’s boot menu and then boots into the kernel and launches a shell as PID 1. From there, I can launch other processes (anything I enter is a new process, except for the exit at the end) and they return to the shell afterwards.

And a screenshot running in QEMU:

Glad you like them, @aelaraji@aelaraji.com. Anytime!

Recovery run: 3.11 miles, 00:11:27 average pace, 00:35:33 duration

@movq@www.uninformativ.de I know, nobody asked 🤡 but, here are a couple of suggestions:

- If you’re willing to pay for a licence I’d highly recommend plasticity, it’s under GNU LESSER GENERAL PUBLIC LICENSE, Version 3.

- Otherwise if you already have experience with CAD/Parametric modeling you could give freeCAD a spin, it’s under GNU Library General Public License, version 2.0, it took them years but have just recently shipped their v1.0 👍

- or just roll with Autodesk’s Fusion for personal use, if you don’t mind their “Oh! You need to be online to use it” thing.

(Let’s face it, Blender is hard to use.)

I bet you’re talking about blender 2.79 and older! 😂 you are, right? JK

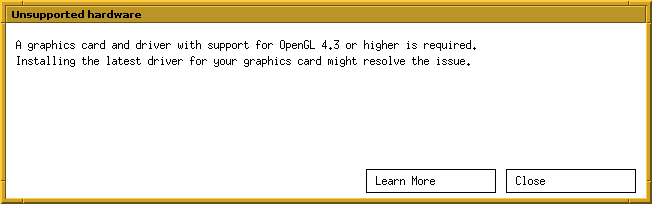

Goodbye Blender, I guess? 🤔

A bit annoying, but not much of a problem. The only thing I did with Blender was make some very simple 3D-printable objects.

I’ll have a look at the alternatives out there. Worst case is I go back to Art of Illusion, which I used heavily ~15 years ago.