I finally watched “C++17: I See a Monad in Your Future” and it was rather nice (at least in 1.8 times speed): https://www.youtube.com/watch?v=BFnhhPehpKw

I finally also learned why the trailing return type syntax (auto f(x) -> T) exists: it allows specifying a return type that depends on the arguments.

@bender@twtxt.net @prologic@twtxt.net Reminds me of: https://img.ifunny.co/images/d07b9a2014e3b3901abe5f4ab22cc2b89a0308de8a21d868d2022dac7bb0280d_1.jpg :-D

I saw a paraglider after sunset. Must have been super cold up there in the sky, we just had 1-2°C on the ground. And I passed a heron at just 5-6 meters distance. I think that’s a new record low. The sunset itself wasn’t all that shabby either. Hence, a very good stroll.

Over my many years of self-hosting (approaching a decade and a half now), I’ve learned two things: 1) There are many “assholes” on the open Internet that will either attack your stuff or are incompetent and write stupid shit™ that goes crazy on your stuff. 2) You have to be careful about resources, especially memory and disk I/O. Especially disk I/O. This can kill your overall performance when software you have either written yourself or got from someone else does unconfined/uncontrolled disk I/O, causing everything to grind to a halt and even fail. #self-hosted

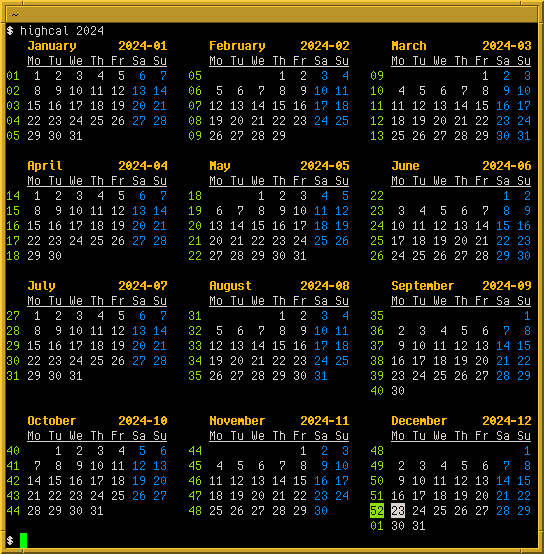

2024 was a funny year: The year begins and ends with calendar week 1:

The one in January being 2024-W01 and the one in December 2025-W01.

🤓

(Hmmm, my printed LaTeX calendar using tikz-kalender gets it wrong or uses different week definitions. It shows next week as 53. 🤔)
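The week labels above follow ISO 8601, where week 1 is the week that contains the year’s first Thursday. A quick way to check them, sketched with Python’s standard library (the helper name `iso_week_label` is made up for this illustration):

```python
from datetime import date


def iso_week_label(d: date) -> str:
    """Format a date as its ISO 8601 week label, e.g. '2024-W01'."""
    iso_year, iso_week, _weekday = d.isocalendar()
    return f"{iso_year}-W{iso_week:02d}"


# The first and the last Monday that fall inside calendar year 2024.
print(iso_week_label(date(2024, 1, 1)))    # 2024-W01
print(iso_week_label(date(2024, 12, 30)))  # 2025-W01
```

A calendar that prints 53 for that last week is presumably numbering weeks by some other rule than ISO 8601.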

You really cannot beat UNIX, no really. Everything else ever invented sucks in comparison 🤣

$ diff -Ndru <(restic snapshots | grep minio | awk '{ print $1 }' | sort -u) <(restic snapshots | grep minio | awk '{ print $1 }' | xargs -I{} restic forget -n {} | grep -E '\{.*\}' | sed -e 's/{//g;s/}//g' | sort -u) | tee | wc -l; echo $?

0

0

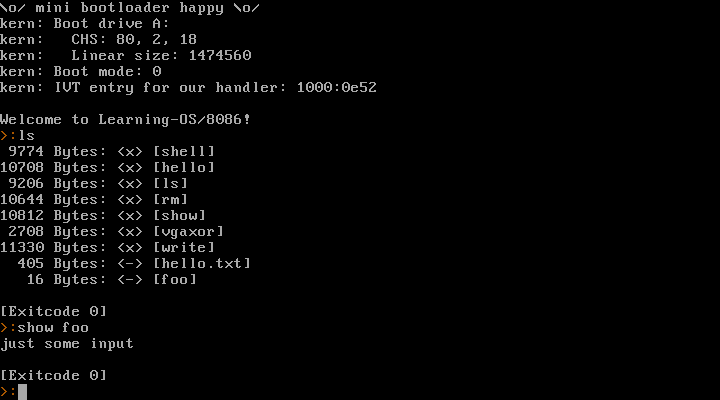

I’ve been making a little toy operating system for the 8086 in the last few days. Now that was a lot of fun!

I don’t plan on making that code public. This is purely a learning project for myself. I think going for real-mode 8086 + BIOS is a good idea as a first step. I am well aware that this isn’t going anywhere – but now I’ve gained some experience and learned a ton of stuff, so maybe 32 bit or even 64 bit mode might be doable in the future? We’ll see.

It provides a syscall interface, can launch processes, read/write files (in a very simple filesystem).

Here’s a video where I run it natively on my old Dell Inspiron 6400 laptop (and Warp 3 later in the video, because why not):

https://movq.de/v/893daaa548/los86-p133-warp3.mp4

(Sorry for the skewed video. It’s a glossy display and super hard to film this.)

It starts with the laptop’s boot menu and then boots into the kernel and launches a shell as PID 1. From there, I can launch other processes (anything I enter is a new process, except for the exit at the end) and they return to the shell afterwards.

And a screenshot running in QEMU:

@bender@twtxt.net Dude! You should see the updated version! 😂 I have just discovered the scratch #container image and decided I wanted to play with it… I’m probably going to end up rebuilding a LOT of images.

~/htwtxt » podman image list htwtxt

REPOSITORY                TAG            IMAGE ID      CREATED             SIZE
localhost/htwtxt          1.0.7-scratch  2d5c6fb7862f  About a minute ago  12 MB
localhost/htwtxt          1.0.5-alpine   13610a37e347  4 weeks ago         20.1 MB
localhost/htwtxt          1.0.7-alpine   2a5c560ee6b7  4 weeks ago         20.1 MB
docker.io/buckket/htwtxt  latest         c0e33b2913c6  8 years ago         778 MB

China deploys historic naval fleet near Taiwan + 2 more stories

Global advertising revenue is projected to exceed $1 trillion; garment workers face increased heat risks; Taiwan raises alert over China’s largest naval fleet deployment. ⌘ Read more

POSSE-ing these articles from my nekoweb in case bluesky and/or fedi explode 1/2

Change

GNU Shepherd 1.0 Service Manager Released As “Solid Tool” Alternative To systemd

GNU Shepherd, a service manager for both system and user services that is used by Guix and relies on Guile Scheme, has finally reached version 1.0. For those not pleased with systemd, GNU Shepherd can be used as an init system and has now crossed the 1.0 milestone after 21 years of development… ⌘ Read more

Cleaned up my npm package for twthash; made it CommonJS compatible, added more documentation and even a test. Current version is 1.2.2

although the only #Go things I’m running in there are a WriteFreely blog and the Saltyd #SaltyIM broker … each running in separate #FreeBSD #jail, those are still running the 14.1-Release (at the moment) anyways.

World Bank launches record $100 billion aid + 1 more story

World Bank announces $100 billion support for struggling nations as Amnesty reports possible genocide in Gaza ⌘ Read more

My 7-year-old invented the word guakilijion, which is a 1 with a bazillion zeroes after it. He wants to be a word inventor.

Infinite Armada Chess

Bluesky Passes Threads for Active Website Users, But Confronts ‘Scammers and Impersonators’

Bluesky now has more active website users than Threads in the U.S., according to a graph from the Financial Times. And though Threads still leads in app usage, “Prior to November 5 Threads had five times more daily active users in the U.S. than Bluesky… Now, Threads is only 1.5 times larger tha … ⌘ Read more

Yes, it works: 2024-12-01T19:38:35Z twtxt/1.2.3 (+https://eapl.mx/twtxt.txt; @eapl) :D

The .log is just a simple append of each request. The idea with the .csv is to have it tally up how many requests there have been from each client, as a way to avoid having the log file grow too big. And you can open the .csv as a spreadsheet and have an easy overview and filtering options.

Access to those files is closed to the public.
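The tallying idea can be sketched in a few lines. The wrapper script itself is PHP; this is only a Python illustration, assuming each log line looks like the one quoted above (a timestamp, a space, then the client’s User-Agent). The function names and the CSV column names are made up here:

```python
import csv
import io
from collections import Counter


def tally_clients(log_lines):
    """Count requests per client; each line is assumed to be '<timestamp> <user-agent>'."""
    counts = Counter()
    for line in log_lines:
        line = line.strip()
        if not line:
            continue
        _timestamp, _, client = line.partition(" ")
        counts[client] += 1
    return counts


def to_csv(counts) -> str:
    """Render the tally as CSV text with one row per client."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["client", "requests"])
    for client, n in sorted(counts.items()):
        writer.writerow([client, n])
    return out.getvalue()
```

The CSV stays at one row per client no matter how many requests come in, which is the point of keeping it next to the raw log.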

Landmark climate hearings set at UN court + 1 more story

More than 100 countries join ICJ for climate hearings; Germany plans a $25 billion hydrogen network; ⌘ Read more

Nations pledge $300 billion for climate aid + 1 more story

Taiwan’s president plans South Pacific tour for stronger ties; UN talks result in $300 billion climate funding for developing nations. ⌘ Read more

Termux same thing @doesnm uses and it worked 👍

@doesnm@doesnm.p.psf.lt No it’s all good… I’ve just rebuilt it from master and it doesn’t look like anything is broken:

~/GitRepos> git clone https://github.com/plomlompom/htwtxt.git

Cloning into 'htwtxt'...

remote: Enumerating objects: 411, done.

remote: Total 411 (delta 0), reused 0 (delta 0), pack-reused 411 (from 1)

Receiving objects: 100% (411/411), 87.89 KiB | 430.00 KiB/s, done.

Resolving deltas: 100% (238/238), done.

~/GitRepos> cd htwtxt

master ~/GitRepos/htwtxt> go mod init htwtxt

go: creating new go.mod: module htwtxt

go: to add module requirements and sums:

go mod tidy

master ~/GitRepos/htwtxt> go mod tidy

go: finding module for package github.com/gorilla/mux

go: finding module for package golang.org/x/crypto/bcrypt

go: finding module for package gopkg.in/gomail.v2

go: finding module for package golang.org/x/crypto/ssh/terminal

go: found github.com/gorilla/mux in github.com/gorilla/mux v1.8.1

go: found golang.org/x/crypto/bcrypt in golang.org/x/crypto v0.29.0

go: found golang.org/x/crypto/ssh/terminal in golang.org/x/crypto v0.29.0

go: found gopkg.in/gomail.v2 in gopkg.in/gomail.v2 v2.0.0-20160411212932-81ebce5c23df

go: finding module for package gopkg.in/alexcesaro/quotedprintable.v3

go: found gopkg.in/alexcesaro/quotedprintable.v3 in gopkg.in/alexcesaro/quotedprintable.v3 v3.0.0-20150716171945-2caba252f4dc

master ~/GitRepos/htwtxt> go build

master ~/GitRepos/htwtxt> ll

.rw-r--r-- aelaraji aelaraji 330 B Fri Nov 22 20:25:52 2024 go.mod

.rw-r--r-- aelaraji aelaraji 1.1 KB Fri Nov 22 20:25:52 2024 go.sum

.rw-r--r-- aelaraji aelaraji 8.9 KB Fri Nov 22 20:25:06 2024 handlers.go

.rwxr-xr-x aelaraji aelaraji 12 MB Fri Nov 22 20:26:18 2024 htwtxt <-------- There's the binary ;)

.rw-r--r-- aelaraji aelaraji 4.2 KB Fri Nov 22 20:25:06 2024 io.go

.rw-r--r-- aelaraji aelaraji 34 KB Fri Nov 22 20:25:06 2024 LICENSE

.rw-r--r-- aelaraji aelaraji 8.5 KB Fri Nov 22 20:25:06 2024 main.go

.rw-r--r-- aelaraji aelaraji 5.5 KB Fri Nov 22 20:25:06 2024 README.md

drwxr-xr-x aelaraji aelaraji 4.0 KB Fri Nov 22 20:25:06 2024 templates

@bender@twtxt.net here:

FROM golang:alpine AS builder

ARG version

ENV HTWTXT_VERSION=$version

WORKDIR $GOPATH/pkg/

RUN wget -O htwtxt.tar.gz https://github.com/plomlompom/htwtxt/archive/refs/tags/${HTWTXT_VERSION}.tar.gz

RUN tar xf htwtxt.tar.gz && cd htwtxt-${HTWTXT_VERSION} && go mod init htwtxt && go mod tidy && go install htwtxt

FROM alpine

ARG version

ENV HTWTXT_VERSION=$version

RUN mkdir -p /srv/htwtxt

COPY --from=builder /go/bin/htwtxt /usr/bin/

COPY --from=builder /go/pkg/htwtxt-${HTWTXT_VERSION}/templates/* /srv/htwtxt/templates/

WORKDIR /srv/htwtxt

VOLUME /srv/htwtxt

EXPOSE 8000

ENTRYPOINT ["htwtxt", "-dir", "/srv/htwtxt", "-templates", "/srv/htwtxt/templates"]

Don’t forget the --build-arg version="1.0.7" for example when building this one, although there isn’t much difference between the last couple of versions.

P.S: I may have effed up changing htwtxt’s files directory to /srv/htwtxt when the command itself defaults to /root/htwtxt so you’ll have to throw in a -dir whenever you issue an htwtxt command (i.e: htwtxt -adduser somename:somepwd -dir /srv/htwtxt … etc)

P.S:

~/remote/htwtxt » podman image list htwtxt the@wks

REPOSITORY                TAG           IMAGE ID      CREATED      SIZE
localhost/htwtxt          1.0.5-alpine  13610a37e347  3 hours ago  20.1 MB
localhost/htwtxt          1.0.7-alpine  2a5c560ee6b7  3 hours ago  20.1 MB
docker.io/buckket/htwtxt  latest        c0e33b2913c6  8 years ago  778 MB

@doesnm@doesnm.p.psf.lt I tried to go install github.com/plomlompom/htwtxt@1.0.7 as well as

# this is a snippet from what I used for the Dockerfile, but I guess it should work just fine.

cd ~/go/pkg && wget -O htwtxt.tar.gz https://github.com/plomlompom/htwtxt/archive/refs/tags/1.0.7.tar.gz

tar xf htwtxt.tar.gz && cd htwtxt-1.0.7 && go mod init htwtxt && go mod tidy && go install htwtxt

both worked just fine…

@doesnm@doesnm.p.psf.lt up to you. I have mine to rotate at 1,000 twtxts. I have vomited over 400, so far. I have some way to go till rotation. :-D

I think it’s centralized shit that lies about being decentralized. The whole network depends on two centralized things: plc.directory (DID storage?) and the network relay (bsky.network). You can host your own relay, but this requires TOO MANY resources (2TB storage and 32GB RAM, read more). Also, I tried running a PDS and: 1. I couldn’t register an account via the app, only via the CLI 2. It leaked memory on a 2GB virtual machine and then got killed by the OOM killer after I tried to register an account via the CLI

@bender@twtxt.net I now read the German Wikipedia article on fog. These are some really beautiful pictures:

- https://upload.wikimedia.org/wikipedia/commons/a/a9/Nebelbank_in_der_W%C3%BCste_Namib_bei_Aus_%282018%29.jpg

- https://upload.wikimedia.org/wikipedia/commons/1/17/Space_Shuttle_Challenger_moving_through_fog.jpg

- https://upload.wikimedia.org/wikipedia/commons/9/96/Fog_Bow_%2819440790708%29.jpg

- https://upload.wikimedia.org/wikipedia/commons/a/ac/360_degrees_fogbow.jpg

China unveils $1.4 trillion stimulus package + 1 more story

China launches a $1.4 trillion fiscal package to support its economy; Earth is set to experience its hottest year ever, exceeding 1.5 degrees Celsius. ⌘ Read more

@eapl.me@eapl.me here are my replies (somewhat similar to Lyse’s and James’)

Metadata in twts: Key=value is too complicated for non-hackers and hard to write by hand. So if there is a need, then we should just use #NSFW or the alt-text field in markdown image syntax if something is NSFW.

IDs besides datetime: When you edit a twt, you should preserve the datetime if location-based addressing is to have any advantage over content-based addressing. If you change the timestamp then it’s a new post. Just like any other blog CMS.

Caching: Yes, all good ideas, but that is more a task for the clients than for the serving of the twtxt.txt files.

Discovery: User-agent for discovery can become better. I’m working on a wrapper script in PHP, so you don’t need to go to Apache’s log files to see who fetches your feed. But for others, like Gemini and Gopher, you need to rely on something else. That could be using my webmentions for twtxt suggestion, or simply defining an email metadata field for letting a person know you follow their feed. Interesting read about why WebMentions might be a bad idea. Twtxt being much simpler than a full-featured IndieWeb site, a lot of the concerns do not apply here. But that’s the issue with any open inbox. This is hard to solve without some form of (centralized or community) spam moderation.

Support more protocols besides http/s: Yes, why not, if we can make clients that merge or differentiate between the same feed served by multiple URLs.

Languages: If the need is big then make a separate feed. I don’t mind seeing stuff in other languages as the volume is low. You’ve got translation tools if you need to know what’s going on. And again, when there is a need for easier switching between posting to several feeds, then it’s about building clients with a UI that makes it easy. Not something that should take up space in the format/protocol.

Emojis: I’m not sure what this is about. Do you want to use emojis as avatars in CLI clients, or is it just about rendering emojis?

Pinellas County - 4 x {1km [1’30”]} 4 x {400m [1’]}: 5.52 miles, 00:09:56 average pace, 00:54:52 duration

first four intervals were good. needed more time to rest i think between the 400m intervals because the humidity was tough again. stopped after the fourth because it was so bad. fema out in full force on the trail, too.

#running

Nearly 20,000 displaced in Paris for Olympics + 1 more story

Nearly 20,000 people displaced in Paris as Olympics approach, with eviction rates rising; US voters decide on abortion rights measures in nine states for the 2024 elections. ⌘ Read more

Been curious to see if I can filter my access.log file and output a list of my twtxt followers, just in case I’ve missed someone … I came up with this awk -F '\"' '/twtxt/ {print $(NF-1)}' /var/log/user.log | grep -v 'twtxt\.net' | sort -u | awk '{print $(NF-1) $NF}' | awk '/^\(/' spaghetti monster of a command and I’m wondering if there’s a more elegant way of achieving the same thing.
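One possible alternative to the pipeline, sketched in Python. It assumes the log is in the usual combined format (User-Agent as the last double-quoted field) and that followers identify themselves as `(+URL; @nick)`, like the twtxt/1.2.3 request quoted further up. The function name and regex are illustrative, and unlike the awk version it does not filter out twtxt.net:

```python
import re

# Matches the "(+URL; @nick)" part that single-user twtxt clients put in their User-Agent.
FOLLOWER_RE = re.compile(r"\(\+(?P<url>[^;\s]+);\s*@(?P<nick>[^)\s]+)\)")


def followers(log_lines):
    """Return a sorted list of unique (nick, url) pairs found in access-log lines."""
    found = set()
    for line in log_lines:
        # In the combined log format the User-Agent is the last double-quoted field.
        parts = line.rstrip("\n").split('"')
        if len(parts) < 3:
            continue
        user_agent = parts[-2]
        match = FOLLOWER_RE.search(user_agent)
        if match:
            found.add((match.group("nick"), match.group("url")))
    return sorted(found)
```

Feeding it `open("/var/log/user.log")` should give roughly the same list as the shell command; User-Agents in any other shape are simply ignored.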

@movq@www.uninformativ.de Some more options:

- Summer lightning.

- Obviously aliens!11!!!1

I once saw a light show in the woods originating most likely from a disco a few kilometers away. That was also pretty crazy. There was absolutely zero sound reaching the valley I was in.

I’m seeing strange lights in the sky. None of my cameras are sensitive enough to make a video.

It’s probably one of two things:

- A ship on the nearby river with a lightshow going. It’s rare but it happens.

- A steep hill nearby, cars driving “upwards”, and since super bright LED lights are normal nowadays, they reflect from the clouds.

Either way, looks fancy.

@doesnm@doesnm.p.psf.lt May I ask which hardware you have? SSD or HDD? How much RAM?

I might be spoiled and very privileged here. Even though my PC is almost 12 years old now, it does have an SSD and tons of RAM (i.e., lots of I/O cache), so starting mutt and opening the mailbox takes about 1-2 seconds here. I hardly even notice it. But I understand that not everybody has fast machines like that. 🫤

Pinellas County - 4 x 5’ (hard) [1’]: 5.00 miles, 00:09:42 average pace, 00:48:26 duration

nothing to note.

#running

@bender@twtxt.net I try to avoid editing. I guess I would write 5/4, 6/4, etc, and hopefully my audience would be sympathetic to my failing.

Anyway, I don’t think my eccentric decision to number my twts in the style of other social media platforms is the only context where someone might write 1/4 not meaning a quarter. E.g. January 4, to Americans.

I’m happy to keep overthinking this for as long as you are :-P

@bender@twtxt.net @prologic@twtxt.net I’m not exactly asking yarnd to change. If you are okay with the way it displayed my twts, then by all means, leave it as is. I hope you won’t mind if I continue to write things like 1/4 to mean “first out of four”.

What has text/markdown got to do with this? I don’t think Markdown says anything about replacing 1/4 with ¼, or other similar transformations. It’s not needed, because ¼ is already a unicode character that can simply be directly inserted into the text file.

What’s wrong with my original suggestion of doing the transformation before the text hits the twtxt.txt file? @prologic@twtxt.net, I think it would achieve what you are trying to achieve with this content-type thing: if someone writes 1/4 on a yarnd instance or any other client that wants to do this, it would get transformed, and other clients simply wouldn’t do the transformation. Every client that supports displaying unicode characters, including Jenny, would then display ¼ as ¼.

Alternatively, if you prefer yarnd to pretty-print all twts nicely, even ones from simpler clients, that’s fine too and you don’t need to change anything. My 1/4 -> ¼ thing is nothing more than a minor irritation which probably isn’t worth overthinking.
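The compose-time transformation suggested above could look roughly like this. A minimal sketch in Python, assuming only three fractions get rewritten; the function name `prettify_on_compose` and the mapping are made up for illustration and are not yarnd’s actual code:

```python
import re

# Hypothetical mapping; a real client might support more vulgar fractions.
FRACTIONS = {"1/4": "¼", "1/2": "½", "3/4": "¾"}

# Only match a fraction that is not glued to other digits or slashes,
# so "11/4", "1/42" and dates like "1/4/2024" are left alone.
FRACTION_RE = re.compile(r"(?<![\d/])(1/4|1/2|3/4)(?![\d/])")


def prettify_on_compose(text: str) -> str:
    """Replace ASCII fractions with Unicode ones before the twt is written to twtxt.txt."""
    return FRACTION_RE.sub(lambda m: FRACTIONS[m.group(1)], text)
```

Because this runs before publishing, the feed file already contains ¼ and every client shows the same thing, while a twt written as 1/4 by hand in another client stays 1/4.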

One day to go, or nearly, for those heading there as early as this afternoon! #utopiales2024

@prologic@twtxt.net I’m not a yarnd user, so it doesn’t matter a whole lot to me, but FWIW I’m not especially keen on changing how I format my twts to work around yarnd’s quirks.

I wonder if this kind of postprocessing would fit better between composing (via yarnd’s UI) and publishing. So, if a yarnd user types 1/4, it could get changed to ¼ in the twtxt.txt file for everyone to see, not just people reading through yarnd. But when I type 1/4, meaning first out of four, as a non-yarnd user, the meaning wouldn’t get corrupted. I can always type ¼ directly if that’s what I really intend.

(This twt might be easier to understand if you read it without any transformations :-P)

Anyway, again, I’m not a yarnd user, so do what you will, just know you might not be seeing exactly what I meant.

@prologic@twtxt.net I wrote 1/4 (one slash four) by which I meant “the first out of four”. twtxt.net is showing it as ¼, a single character that IMO doesn’t have that same meaning (it means 0.25). Similarly, 3/4 got replaced with ¾ in another twt. It’s not a big deal. It just looks a little wrong, especially beside the 2/4 and 4/4 in my other two twts.

Simplified twtxt - I want to suggest some dogmas or commandments for twtxt, from where we can work our way back to how to implement different features like replies/threads:

- It’s a text file, so you must be able to write it by hand (i.e. no app logic) and read it by eye.

- If you edit a post you change the content, not the timestamp. Otherwise it will be considered a new post.

- The order of lines in a twtxt.txt must not hold any significance. The file is a container and each line an atomic piece of information. You should be able to run sort on a twtxt.txt and it should still work.

- Transport protocol should not matter, as long as the file served is the same. HTTP and HTTPS are preferred, so it is suggested that feeds served via Gopher or Gemini also provide http(s).
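The “run sort on it” commandment can be checked mechanically. A small sketch in Python, assuming the plain twtxt line format (timestamp, tab, message) with `#` lines as comments; the function name `parse_feed` is made up here:

```python
from datetime import datetime


def parse_feed(text):
    """Parse twtxt lines ('<timestamp>\\t<message>') into a sorted list of (datetime, message)."""
    twts = []
    for line in text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # skip blank lines and comment/metadata lines
        stamp, sep, message = line.partition("\t")
        if not sep:
            continue  # no tab, so not a twt line
        # datetime.fromisoformat() only accepts a trailing 'Z' from Python 3.11 on,
        # so normalise it to an explicit UTC offset first.
        when = datetime.fromisoformat(stamp.replace("Z", "+00:00"))
        twts.append((when, message))
    return sorted(twts)
```

Since the reader orders twts by their own timestamps, shuffling or sorting the lines of the file gives the same timeline, which is exactly what the commandment asks for.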

Do we need more commandments?

There, workout done. I topped up on energy before going to donate some quality blood ^^ #EFS #dondusang. Level 7 of the #lafay method is too long, though; I don’t have enough time to do it properly. Too bad, in that case it’s back to no. 6, and I’ll add 1 randomly drawn exercise done to exhaustion. Or else I get the #TRX back out; I need to find somewhere to hang it. #sport #training

gg=G and to va", ci", di{... in vim the other day 😆 Life will never be the same, I can feel it. ref

@aelaraji@aelaraji.com one i use quite frequently is when i have a list of items (1 per line) and want them sorted but only keep those which are unique: ggV}:sort u

Installing Devuan 3.1 and Migrating to Ceres | https://starbreaker.org/blog/tech/installing-devuan-31-migrating-ceres/index.html

I swear I did write up an algorithm for it at some point; I think it is lost in a git comment someplace. I’ll put together some pseudo/Go code this week.

Super simple:

Making a reply:

- If yarn has one use that. (Maybe do collision check?)

- Make hash of twt raw no truncation.

- Check local cache for shortest without collision

  - in SQL: select len(subject) where head_full_hash like subject || '%'

Threading:

- Get full hash of head twt

- Search for twts

  - in SQL: head_full_hash like subject || '%' and created_on > head_timestamp

The assumption being replies will be for the most recent head. If replying to an older one it will use a longer hash.
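Until the pseudo/Go code shows up, here is a rough Python sketch of both steps on top of SQLite. The table layout, the function names and the minimum length of 7 are assumptions for this illustration, not yarnd’s schema:

```python
import sqlite3


def open_cache():
    """Create an in-memory cache with a hypothetical schema for this sketch."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE twts (full_hash TEXT, subject TEXT, created_on TEXT, text TEXT)"
    )
    return db


def shortest_free_prefix(db, full_hash, min_len=7):
    """Shortest prefix of full_hash that no *other* cached twt's hash starts with."""
    for length in range(min_len, len(full_hash) + 1):
        prefix = full_hash[:length]
        (collisions,) = db.execute(
            "SELECT COUNT(*) FROM twts WHERE full_hash LIKE ? || '%' AND full_hash != ?",
            (prefix, full_hash),
        ).fetchone()
        if collisions == 0:
            return prefix
    return full_hash


def thread_replies(db, head_full_hash, head_timestamp):
    """Replies whose (possibly shortened) subject is a prefix of the head's full hash."""
    return db.execute(
        "SELECT text FROM twts WHERE subject IS NOT NULL"
        " AND ? LIKE subject || '%' AND created_on > ? ORDER BY created_on",
        (head_full_hash, head_timestamp),
    ).fetchall()
```

The `created_on > ?` condition encodes the assumption above: a short subject is taken to point at the most recent head it could match, and a reply to an older head has to carry a longer prefix.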

If we stuck with Blake2b for Twt Hash(es); what do we think we need to reasonably go to in bit length/size?

=> https://gist.mills.io/prologic/194993e7db04498fa0e8d00a528f7be6

e.g: (turns out @xuu@txt.sour.is is right about Blake2b being easy/simple too!):

$ printf "%s\t%s\t%s" "https://example.com/twtxt.txt" "2024-09-29T13:30:00Z" "Hello World!" | b2sum -l 32 -t | awk '{ print $1 }'

7b8b79dd
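The same thing is easy outside the shell too. A sketch in Python that is meant to mirror the b2sum -l 32 pipeline above (tab-joined fields, no trailing newline, digest length given in bits); the function name `twt_hash` and the `bits` parameter are made up for illustration:

```python
import hashlib


def twt_hash(feed_url: str, timestamp: str, text: str, bits: int = 32) -> str:
    """Blake2b over 'url<TAB>timestamp<TAB>text', with the digest length given in bits."""
    payload = "\t".join((feed_url, timestamp, text)).encode("utf-8")
    # hashlib takes the digest size in bytes, b2sum's -l option takes bits.
    return hashlib.blake2b(payload, digest_size=bits // 8).hexdigest()


print(twt_hash("https://example.com/twtxt.txt", "2024-09-29T13:30:00Z", "Hello World!"))
```

Going to a longer hash is then just a matter of passing a bigger `bits` value, e.g. 64 for 16 hex characters.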

@prologic@twtxt.net Regarding the new way of generating twt-hashes, to me it makes more sense to use tabs as separator instead of spaces, since then you can just copy/paste a line directly from a twtxt file that already has a tab between timestamp and message. But tabs might be hard to “type” when you are in a terminal, since it will activate autocomplete…🤔

Another thing, it seems that you suggest we only use the domain in the hash-creation and not the full path to the twtxt.txt.

$ echo -e "https://example.com 2024-09-29T13:30:00Z Hello World!" | sha256sum - | awk '{ print $1 }' | base64 | head -c 12