@movq@www.uninformativ.de lovely, thanks for sharing! Now you know what I will be using today on a loop.

Use more WebP, I guess.

- Lossless PNG, 635 kB: https://movq.de/v/b239c54838/scrot.png

- Lossless WebP, 469 kB: https://movq.de/v/b239c54838/scrot.webp

- Lossy WebP, 110 kB: https://movq.de/v/b239c54838/scrot%2Dlossy.webp

- Lossy JPEG, 110 kB: https://movq.de/v/b239c54838/scrot%2Dlossy.jpg
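To put the listed sizes in proportion, here is a small sketch that expresses each file as a percentage of the lossless PNG. It only uses the kB figures from the list above; the names `SIZES_KB` and `relative_to` are made up for illustration.

```python
# File sizes in kB, as listed in the post.
SIZES_KB = {
    "Lossless PNG": 635,
    "Lossless WebP": 469,
    "Lossy WebP": 110,
    "Lossy JPEG": 110,
}


def relative_to(baseline: str, sizes: dict[str, int]) -> dict[str, float]:
    """Size of each file as a percentage of the baseline file, rounded to 0.1 %."""
    base = sizes[baseline]
    return {name: round(100 * size / base, 1) for name, size in sizes.items()}


for name, pct in relative_to("Lossless PNG", SIZES_KB).items():
    print(f"{name:14} {pct:5.1f} % of the PNG")
```

Lossless WebP comes out at roughly three quarters of the PNG; both lossy files are the same size, so between those two the comparison is about visual quality, not bytes.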

@prologic@twtxt.net Here you go:

(LTT = “Linus Tech Tips”, that’s the host.)

LTT: There was a recent thing from a major tech company, where developers were asked to say how many lines of code they wrote – and if it wasn’t enough, they were terminated. And there was someone here that was extremely upset about that approach to measuring productivity, because–

Torvalds: Oh yeah, no, you shouldn’t even be upset. At that point, that’s just incompetence. Anybody who thinks that’s a valid metric is too stupid to work at a tech company.

LTT: You do know who you just said that about, right?

Torvalds: No.

LTT: Oh. Uh, he was a prominent figure in the, uh, improved efficiency of the US government recently.

Torvalds: Oh. Apparently I was spot on.

But it is weird that none of the slot plates (that I can find) appear to have the correct pin order. 🤔

The two mainboards I have here use this order:

    2468x
    13579

But the slot plates use this:

    12345
    6789x

I tripped over this at first and wondered why it didn’t work.

Has this changed recently or what? 🥴
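Taking the two layouts literally (same orientation for both, with the first row read as "top"; that orientation is an assumption), a small sketch can list where each pin sits on the board versus where the plate expects it. `BOARD`, `PLATE`, `positions` and `rewiring` are illustrative names only.

```python
# Pin layouts exactly as written in the post: two rows of five, "x" = no pin.
BOARD = ["2468x", "13579"]  # mainboard header
PLATE = ["12345", "6789x"]  # slot plate connector


def positions(rows: list[str]) -> dict[str, tuple[int, int]]:
    """Map each pin label to its (row, column) position in the grid."""
    return {label: (r, c) for r, row in enumerate(rows) for c, label in enumerate(row)}


def rewiring(board: list[str], plate: list[str]) -> dict[str, tuple]:
    """For each real pin whose position differs: (position on board, position on plate)."""
    b, p = positions(board), positions(plate)
    return {label: (b[label], p[label]) for label in b if label != "x" and b[label] != p[label]}


for label, (on_board, on_plate) in sorted(rewiring(BOARD, PLATE).items()):
    print(f"pin {label}: board {on_board} -> plate {on_plate}")
```

Under that reading, all nine pins end up in a different position, which fits "wondered why it didn't work".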

@prologic@twtxt.net Ah, shit, you might be right. You can even buy these slot plates on Amazon. I didn’t even think to check Amazon, I went straight to eBay and tried to find it there, because I thought “it’s so old, nobody is going to use that anymore, I need to buy second-hand”. 🤦🤦🤦

It really shows that I built my last PC so long ago … I know next to nothing about current hardware. 😢

@prologic@twtxt.net Bwahahaha! I tried to establish some form of “convention” for commit messages at work (not exactly what you linked to, though), but it’s a lost cause. 😂 Nobody is following any of that. Nobody wants to invest time in good commit messages. People just want to get stuff done.

I’m just glad that 80% are at least somewhat useful – instead of “wip” or “shit i screwed up”.

@lyse@lyse.isobeef.org I couldn’t agree more! I think good commit messages are very useful. I’d much prefer the conventional mood style for commit messages, though, and I’d much rather they tell a story than use this weird syntax all over the shop!

@shinyoukai@neko.laidback.moe Are you using your Gitea username instead of git@? Are you forwarding auth?

@prologic@twtxt.net he uses subdomains. Which do you think the identity would be associated with? (hint, “it is not so hard!”).

@shinyoukai@neko.laidback.moe yeah, that’s the only reason why I use sub-domains when trying anything federated (I believe Matrix has the same problem): in case things don’t go as planned, I can just migrate and take it down.

@itsericwoodward@itsericwoodward.com Nice to see someone else also participating! 🥳

(Btw, they don’t want us to share our inputs: https://www.reddit.com/r/adventofcode/wiki/faqs/copyright/inputs/ Yeah, it’s a bit annoying. I also have to do quite a bit of filtering on my repo …)

FWIW, day 03 and day 04 were solved on SuSE Linux 6.4:

https://movq.de/v/faaa3c9567/day03.jpg

https://movq.de/v/faaa3c9567/day04%2Dv3.jpg

Performance really is an issue. Anything is fast on a modern machine with modern Python. But that old stuff, oof, it takes a while … 😅

Should have used C or Java. 🤪 Well, maybe I do have to fall back on that for later puzzles. We’ll see.

@bender@twtxt.net Nothing will make me use Discord, though. 😅 Not voluntarily.

@prologic@twtxt.net I couldn’t find the exact blog post from before, the one that used redirection directives in its nginx config, but I found [this one](https://melkat.blog/p/unsafe-pricing#:~:text=Something%20else%20I’ve%20been%20doing%20this%20year,%20fine.) mentioning a similar process, done differently.

@lyse@lyse.isobeef.org no wonder I picked that cake (albeit coincidentally): I adore almonds and hazelnuts! Your teammates are absolutely amazing, dude! A very nice project farewell! Speaking of leaving places, I have a small anecdote.

I know someone who left his job on 3 February 2004 to go elsewhere. His teammates threw him a party and gave him a very nice portable storage drive. Twenty days later he returned, and they jokingly asked him to give back the storage and the money spent on the farewell party. I heard, from a close source, that he gave them his middle finger, but don’t quote me on that. 😂😂😂

@prologic@twtxt.net right! I’ve been looking at used ones I might be able to use…

Before smartphones, people used Sony camcorders; they still exist today, but they’re uber expensive 😂

@prologic@twtxt.net Using your own language?! That’s really nice! I hope you get home soon so you can give the code a try. 😅

Thinking about doing Advent of Code in my own tiny language mu this year.

mu is:

- Dynamically typed

- Lexically scoped with closures

- Curly-brace based, with a Go-like syntax

- Built around lists, maps, and first-class functions

Key syntax:

- Functions use `fn` and braces:

      fn add(a, b) {
          return a + b
      }

- Variables use `:=` for declaration and `=` for assignment:

      x := 10
      x = x + 1

- Control flow includes `if`/`else` and `while`:

      if x > 5 {
          println("big")
      } else {
          println("small")
      }

      while x < 10 {
          x = x + 1
      }

- Lists and maps:

      nums := [1, 2, 3]
      nums[1] = 42
      ages := {"alice": 30, "bob": 25}
      ages["bob"] = ages["bob"] + 1

Supported types: `int`, `bool`, `string`, `list`, `map`, `fn`, `nil`.

mu feels like a tiny little Go-ish, Python-ish language — curious to see how far I can get with it for Advent of Code this year. 🎄
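The `:=` versus `=` split and "lexically scoped with closures" can be illustrated with a chain of environments, which is one common way tree-walking interpreters implement this. This is a hypothetical Python sketch, not mu's actual implementation; `Env` and `make_counter` are made up for illustration.

```python
class Env:
    """One lexical scope: its own bindings plus a link to the enclosing scope."""

    def __init__(self, parent=None):
        self.vars = {}
        self.parent = parent

    def declare(self, name, value):
        # `name := value` always binds in the current scope, shadowing outer ones.
        self.vars[name] = value

    def _find(self, name):
        # Walk outward until a scope that binds `name` is found.
        env = self
        while env is not None:
            if name in env.vars:
                return env
            env = env.parent
        raise NameError(f"undeclared variable {name!r}")

    def assign(self, name, value):
        # `name = value` updates the nearest existing binding; it never creates one.
        self._find(name).vars[name] = value

    def lookup(self, name):
        return self._find(name).vars[name]


def make_counter(defining_env):
    """A closure: the returned function keeps the scope it was created in alive."""
    env = Env(defining_env)
    env.declare("n", 0)

    def counter():
        call_env = Env(env)  # each call gets a fresh scope chained to the captured one
        call_env.assign("n", call_env.lookup("n") + 1)
        return call_env.lookup("n")

    return counter
```

Making `=` fail on undeclared names (instead of silently creating a global) is a design choice here; it catches typos in variable names early.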

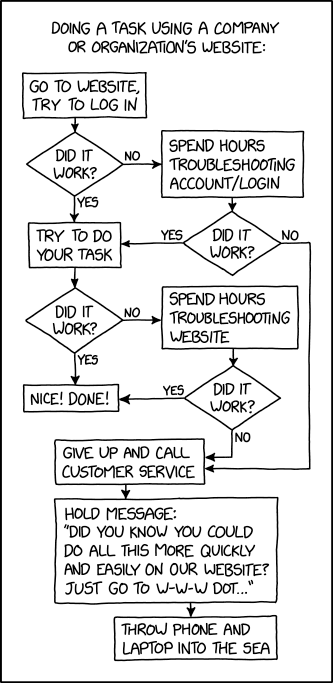

Website Task Flowchart


@movq@www.uninformativ.de yeah, you fetched it too quickly: it was edited seconds after I picked the wrong image. LOL. Which brings us back, in one huge circle, to twtxt edits and how to handle them. 😅

Advent of Code 2025 starts tomorrow. 🥳🎄

This year, I’m going to use Python 1 on SuSE Linux 6.4, writing the code on my trusty old Pentium 133 with its 64 MB of RAM. No idea if that old version of Python will be fast enough for later puzzles. We’ll see.

@lyse@lyse.isobeef.org Damn. That was stupid of me. I should have posted examples using 2026-03-01 as the cutoff date. 😂

In my actual test suite, everything uses 2027-01-01 and then I have this, hoping that that’s good enough. 🥴

    from datetime import timedelta

    # jenny, URL and TEXT come from the surrounding test module.
    def test_rollover():
        d = jenny.HASHV2_CUTOFF_DATE
        assert len(jenny.make_twt_hash(URL, d - timedelta(days=7), TEXT)) == 7
        assert len(jenny.make_twt_hash(URL, d - timedelta(seconds=3), TEXT)) == 7
        assert len(jenny.make_twt_hash(URL, d - timedelta(seconds=2), TEXT)) == 7
        assert len(jenny.make_twt_hash(URL, d - timedelta(seconds=1), TEXT)) == 7
        assert len(jenny.make_twt_hash(URL, d, TEXT)) == 12
        assert len(jenny.make_twt_hash(URL, d + timedelta(seconds=1), TEXT)) == 12
        assert len(jenny.make_twt_hash(URL, d + timedelta(seconds=2), TEXT)) == 12
        assert len(jenny.make_twt_hash(URL, d + timedelta(seconds=3), TEXT)) == 12
        assert len(jenny.make_twt_hash(URL, d + timedelta(days=7), TEXT)) == 12

(In other words, I don’t care as long as it’s before 2027-01-01. 😏😅)
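The boundary that `test_rollover` probes boils down to a single comparison against the cutoff. Below is a minimal sketch, assuming a Yarn-style hash (blake2b-256 over URL, timestamp and text, base32-encoded, lowercased, keeping the tail). The cutoff value, the `isoformat()` timestamp and the name `twt_hash` are placeholders, not jenny's actual implementation.

```python
import base64
import hashlib
from datetime import datetime, timedelta, timezone

# Placeholder cutoff; jenny's real HASHV2_CUTOFF_DATE may differ.
HASHV2_CUTOFF = datetime(2027, 1, 1, tzinfo=timezone.utc)


def twt_hash(url: str, created: datetime, text: str) -> str:
    """Hypothetical twt hash: 7 chars before the cutoff, 12 from the cutoff on.

    `created` must be timezone-aware, otherwise the comparison raises TypeError.
    """
    # Simplified payload; real clients normalise the timestamp format more carefully.
    payload = f"{url}\n{created.isoformat()}\n{text}".encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    encoded = base64.b32encode(digest).decode("ascii").rstrip("=").lower()
    length = 12 if created >= HASHV2_CUTOFF else 7  # the cutoff instant itself is already v2
    return encoded[-length:]


# Probe both sides of the boundary, like test_rollover does.
for delta in (timedelta(seconds=-1), timedelta(0), timedelta(seconds=1)):
    print(delta, len(twt_hash("https://example.com/twtxt.txt", HASHV2_CUTOFF + delta, "hi")))
```

Using `>=` rather than `>` is what makes the `d` case in the test expect 12 characters.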

I have to say: a well-designed Hypermedia-Driven Web Application such as yarnd, using HTMX, is just as good as, if not better than, one written in React.

As someone that almost exclusively uses “Discover”, that is. If you use it like I do, you know what I mean.

easily the only one not using Mastodon either, lol

then again I don’t use Mastodon…

Pleroma may have worked, perhaps

I think I may have fixed threading too, but I can’t easily test it now, as I’ve left for my holiday and don’t really use Mastodon 😂

I’m kind of tired lately of telling support folks, for example, my registrar, how to do their fucking goddamn jobs 🤦‍♂️

Hi James,

Thank you for your patience.

There are several reasons why a .au domain registration might fail or be cancelled, including inaccurate registrant information, ineligibility for a .au domain licence, or issues related to Australian law.

For a full list of possible reasons, please see this article: https://support.onlydomains.com/hc/en-gb/articles/6415278890141-Why-has-my-au-domain-registration-been-cancelled

If you believe none of these reasons apply to your case, please let us know so we can investigate further.

Best regards,

Yes, so tell me support person, why the fuck did it fail?! 🤬

I have a question! I’m looking for a small personal camera (specifically good for videos, because that’s what I’ll use it for) that’s cheap enough for a teen to afford but also actually good. Do any of you tech people have any good recs?

@aelaraji@aelaraji.com I think I’ll just end up using the Official CrowdSec Go library 🤔

PSA: Just in case you start getting 5xxs from my end, I’m not dead 😂 (well, unless I am). We’ll be changing ISPs and hopefully get the new line up and running before the old provider cuts us off.

@aelaraji@aelaraji.com Yeah, and I think I can basically pull the CrowdSec rules at some interval N, right, and use them to make blocking decisions? – I’ve actually considered this as part of a completely new WAF design that I just haven’t built yet (just designing it).
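The "pull the rules every N and make blocking decisions" idea can be sketched as a blocklist that refreshes itself lazily. This is a hypothetical sketch: `fetch` is a placeholder for whatever actually retrieves the current decisions (it does not call any real CrowdSec API), and `Blocklist` is an illustrative name.

```python
import ipaddress
import time
from typing import Callable, Iterable


class Blocklist:
    """Hypothetical WAF helper: an IP/CIDR blocklist refreshed at a fixed interval."""

    def __init__(self, fetch: Callable[[], Iterable[str]], interval_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch            # placeholder for "pull the current rules/decisions"
        self._interval = interval_s    # the "every N" from the post, in seconds
        self._clock = clock            # injectable so the refresh logic is testable
        self._nets = []
        self._last_refresh = None

    def _refresh_if_due(self) -> None:
        now = self._clock()
        if self._last_refresh is None or now - self._last_refresh >= self._interval:
            # strict=False accepts both bare IPs ("198.51.100.7") and CIDRs ("203.0.113.0/24").
            self._nets = [ipaddress.ip_network(s, strict=False) for s in self._fetch()]
            self._last_refresh = now

    def is_blocked(self, ip: str) -> bool:
        self._refresh_if_due()
        addr = ipaddress.ip_address(ip)
        # Membership is simply False when IPv4 and IPv6 are mixed.
        return any(addr in net for net in self._nets)


bl = Blocklist(lambda: ["203.0.113.0/24"], interval_s=60)
print(bl.is_blocked("203.0.113.9"), bl.is_blocked("192.0.2.1"))  # True False
```

A real version would probably keep the previous list when a fetch fails, and use a prefix tree instead of a linear scan once the list gets large.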

@prologic@twtxt.net I remember reading a blog post where someone threw redirects to some 100+ GB files (usually used for speed-testing purposes) at a swarm of bots that had been abusing his server, in order to cripple them, but I can’t find it anymore. I’m pretty sure I’ve had it bookmarked somewhere.

Anyone on my pod (twtxt.net) finding the new Filter(s) useful at all? 🤔

Tried to re-enable the edge route to git.mills.io today (after finishing work) and this is what I found 🤯 These asshole/cunts are still at it!!! 🤬 – So let’s instead see if this works:

    $ host git.mills.io 1.1.1.1
    Using domain server:
    Name: 1.1.1.1
    Address: 1.1.1.1#53
    Aliases:

    git.mills.io is an alias for fuckoff.mills.io.
    fuckoff.mills.io has address 127.0.0.1

PS: Would anyone be interested if I started a massive global class action suit against companies that engage in this kind of abusive web crawling, violating or disregarding robots.txt and whatever other standards are set in stone by the W3C and the IETF? 🤔
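For contrast, honouring robots.txt (standardised as RFC 9309) takes only a few lines with Python's standard `urllib.robotparser`. A minimal sketch; the robots.txt content, the `ExampleBot` user agent and the example.com URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check a URL against robots.txt rules before fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/private/page"))  # False
print(allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/blog/"))         # True
```

A real crawler would point the parser at the live file with `set_url()` and `read()` and cache the result per host instead of parsing a string.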

@zvava@twtxt.net I am waiting for that v1, so that I can start using it. 🙏🏻

@prologic@twtxt.net as per #kzirx3a, I managed to avoid having to use it (there’s also a thing or two wrong with its creator, though that’s more on a personal level than a technical one)

When I try to login to PayPal I now see:

Please enable JS and disable any ad blocker

Here’s the thing. PayPal takes fees from transactions and payments received and sent.

I have every right not to have ads shoved in my face for something that isn’t actually free in the first place and costs money to use. If PayPal would like to continue to piss off folks like me, then I’ll happily close my PayPal account and go somewhere else that doesn’t shove ads in my face and consume 30-40% of my Internet bandwidth on useless garbage/crap.

I just noticed this pattern:

    uninformativ.de 201.218.xxx.xxx - - [22/Nov/2025:06:53:27 +0100] "GET /projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 301 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36"
    www.uninformativ.de 103.10.xxx.xxx - - [22/Nov/2025:06:53:28 +0100] "GET http://uninformativ.de/projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 400 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36"

Let me add some spaces to make it more clear:

    uninformativ.de     201.218.xxx.xxx - - [22/Nov/2025:06:53:27 +0100] "GET                       /projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 301 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36"
    www.uninformativ.de 103.10.xxx.xxx  - - [22/Nov/2025:06:53:28 +0100] "GET http://uninformativ.de/projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 400 0 "" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36"

Some IP (from Brazil) requests some (non-existent, completely broken) URL from my webserver. But they use the hostname uninformativ.de, so they get redirected to www.uninformativ.de.

In the next step, just a second later, some other IP (from Nepal) issues an HTTP proxy request for the same URL.

Clearly, someone has no idea how HTTP redirects work. And clearly, they’re running their broken code on some kind of botnet all over the world.
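Spotting this pattern in a log can be automated: parse each line, then look for a 301 that is followed by an absolute-form request (a full URL as the request target, the shape normally sent to HTTP proxies per RFC 9112) for the same path from a different IP. A minimal sketch; the regex is inferred from the two sample lines above and assumes exactly that vhost-prefixed format, and all function names are made up for illustration.

```python
import re

# Assumed format, inferred from the sample lines: vhost, client IP, ident, user,
# [time], "request line", status, size, "referer", "user agent".
LOG_RE = re.compile(
    r'^(?P<vhost>\S+) (?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<target>\S+) (?P<proto>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def parse(line):
    """Return the log fields as a dict, or None if the line doesn't match."""
    m = LOG_RE.match(line)
    return m.groupdict() if m else None


def is_absolute_form(entry):
    """True if the request target is a full URL rather than just a path."""
    return entry["target"].startswith(("http://", "https://"))


def redirect_then_proxy(lines):
    """Find a 301 followed by an absolute-form request for the same path from another IP."""
    entries = [e for e in map(parse, lines) if e is not None]
    hits = []
    for first, second in zip(entries, entries[1:]):
        if (first["status"] == "301"
                and is_absolute_form(second)
                and second["target"].endswith(first["target"])
                and first["ip"] != second["ip"]):
            hits.append((first["ip"], second["ip"], first["target"]))
    return hits


UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
      "(KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36")
SAMPLE = [
    'uninformativ.de 201.218.xxx.xxx - - [22/Nov/2025:06:53:27 +0100] '
    f'"GET /projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 301 0 "" "{UA}"',
    'www.uninformativ.de 103.10.xxx.xxx - - [22/Nov/2025:06:53:28 +0100] '
    f'"GET http://uninformativ.de/projects/lariza/multipass/xiate/padme/gophcatch HTTP/1.1" 400 0 "" "{UA}"',
]

print(redirect_then_proxy(SAMPLE))
```

Only adjacent lines are compared here; on a busy server you would key pending 301s by path and match them within a short time window instead.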

17, 21, and 22 are my favourites. Thank you for sharing! On 17, the pulley might be dangerously hanging, but if you manage to make it work, you will have a couple of nails to use! :-D

I was looking at some ancient code and then thought: Hmm, maybe it would be a good idea to show more details in this error message, i.e. which of the values don’t line up. On the other hand, that feature probably isn’t used anyway, because it’s a bit ugly to use (historically evolved). And on top of that, most teams need something slightly different, if they deal with that sort of thing.

I still told my workmates about it, so they could also have a look at it and we could decide tomorrow what to do about it. Speak of the devil: no kidding, not even half an hour later, a puzzled tester contacted me. She had received exactly that rather useless error message. Looks like I had an afflatus. ;-)

It’s interesting, though, that in all those years, nobody stumbled across this before. At least we now know for sure that this is not dead code. :-)

@arne@uplegger.eu @lukas@lukasthiel.de In fact, Yarn.social’s yarnd client implementation actually uses (or did, still kinda does today) PicoCSS 🤟 It was/is a good CSS library! 👍

what i imagine is a pipeline like 2682 -> readable -> scheme-target + support libs. the first two have flip-flopped a bit, i WOULD like to do everything i want syntax-wise using only reader macros.

@prologic@twtxt.net oh dear god. Keep us posted! 😅

No, I was using an empty hash URL when the feed didn’t specify a url metadata. Now I’m correctly falling back to the feed URL.
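A minimal sketch of that fallback, assuming twtxt metadata lines of the form `# key = value` and that the first non-empty `url` entry wins; `feed_hash_url` is a hypothetical name, not code from any particular client.

```python
def feed_hash_url(feed_text: str, fetched_from: str) -> str:
    """Return the URL used for twt hashing: the first `# url = ...` value, else the feed URL."""
    for line in feed_text.splitlines():
        stripped = line.strip()
        if not stripped.startswith("#"):
            continue  # not a comment, so not metadata (it's a twt)
        key, sep, value = stripped[1:].partition("=")
        if sep and key.strip().lower() == "url" and value.strip():
            return value.strip()
    # No usable `url` metadata: fall back to where the feed was fetched from,
    # instead of hashing with an empty URL.
    return fetched_from


feed = "# nick = alice\n# url = https://a.example/twtxt.txt\n2025-11-22T10:00:00Z\thello\n"
print(feed_hash_url(feed, "https://fetched.example/twtxt.txt"))
```

`partition("=")` splits only at the first `=`, so URLs containing query strings survive intact.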

tilde.club feeds have no `# nick` metadata, and that is messing with yarnd's behavior 😅

@prologic@twtxt.net And none of them use Yarn-style threading. I don’t think they’re aware of us, they’re probably using plain twtxt. Other than one hit by @threatcat@tilde.club a few days ago, I’ve seen no traffic from them. 🤔

@lyse@lyse.isobeef.org nginx allows logging per user, using variables defined in the configuration. Not sure, though, if a Tilde would be willing to go to those “extremes”.

I used Gemini (the Google AI) twice at work today, asking about Google Workspace configuration and Google Cloud CLI usage (because we use those a lot). You’d think that it’d be well-suited for those topics. It answered very confidently, yet completely wrong. Just wrong. Made-up CLI arguments, whatever. It took me a while to notice, though, because it’s so convincing and, well, you implicitly and subconsciously trust the results of the Google AI when asking about Google topics, don’t you?

Will it get better over time? Maybe. But what I really want is this:

- Good, well-structured, easy-to-read, proper documentation. Google isn’t doing too bad in this regard, actually, it’s just that they have so much stuff that it’s hard to find what you’re looking for. Hence …

- … I want a good search function. Just give me a good fuzzy search for your docs. That’s it.

I just don’t have the time or energy to constantly second-guess this stuff. Give me something reliable. Something that is designed to do the right thing, not toy around with probabilities. “AI for everything” is just the wrong approach.
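The "just give me a good fuzzy search for your docs" wish can be sketched with Python's standard `difflib`. The titles below are made-up stand-ins, and difflib's ratio-based matching is only one simple choice of fuzzy matcher; a real docs search would index page contents, not just titles.

```python
import difflib


def fuzzy_search(query: str, titles: list[str], limit: int = 3, cutoff: float = 0.6) -> list[str]:
    """Return up to `limit` titles close to `query`, best match first."""
    by_lower = {t.lower(): t for t in titles}  # match case-insensitively, return original titles
    hits = difflib.get_close_matches(query.lower(), list(by_lower), n=limit, cutoff=cutoff)
    return [by_lower[h] for h in hits]


DOC_TITLES = [
    "Configure SSO for Google Workspace",
    "Manage users in Google Workspace",
    "gcloud compute ssh",
    "gcloud storage cp",
]

print(fuzzy_search("gcloud compute shh", DOC_TITLES))
```

The typo in the query still finds the right page, and a query with no reasonable match returns an empty list instead of a confident guess.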